1. 迭代优化

尝试 RANSAC PnP 加上迭代优化的方式估计相机位姿

看看是否对 视觉SLAM笔记(46) 基本的 VO 的效果有所改进

由于现在目标是估计位姿而非结构

以相机位姿

为优化变量,通过最小化重投影误差,来构建优化问题

2. 优化边

与之前一样,自定义一个 g2o 中的优化边

它只优化一个位姿,因此是一个一元边

相关头文件位于/include/myslam/g2o_types.h

#ifndef MYSLAM_G2O_TYPES_H

#define MYSLAM_G2O_TYPES_H

#include "myslam/common_include.h"

#include "camera.h"

#include <g2o/core/base_vertex.h>

#include <g2o/core/base_unary_edge.h>

#include <g2o/core/block_solver.h>

#include <g2o/core/optimization_algorithm_levenberg.h>

#include <g2o/types/sba/types_six_dof_expmap.h>

#include <g2o/solvers/dense/linear_solver_dense.h>

#include <g2o/core/robust_kernel.h>

#include <g2o/core/robust_kernel_impl.h>

namespace myslam

{

class EdgeProjectXYZRGBD : public g2o::BaseBinaryEdge<3, Eigen::Vector3d, g2o::VertexSBAPointXYZ, g2o::VertexSE3Expmap>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

virtual void computeError();

virtual void linearizeOplus();

virtual bool read(std::istream& in) {}

virtual bool write(std::ostream& out) const {}

};

class EdgeProjectXYZRGBDPoseOnly : public g2o::BaseUnaryEdge<3, Eigen::Vector3d, g2o::VertexSE3Expmap >

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

// Error: measure = R*point+t

virtual void computeError();

virtual void linearizeOplus();

virtual bool read(std::istream& in) {}

virtual bool write(std::ostream& out) const {}

Vector3d point_;

};

// 投影方程边

class EdgeProjectXYZ2UVPoseOnly : public g2o::BaseUnaryEdge<2, Eigen::Vector2d, g2o::VertexSE3Expmap >

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

virtual void computeError();

virtual void linearizeOplus();

virtual bool read(std::istream& in) {}

virtual bool write(std::ostream& os) const {};

Vector3d point_;

Camera* camera_;

};

}

#endif // MYSLAM_G2O_TYPES_H

把三维点和相机模型放入它的成员变量中,方便计算重投影误差和雅可比

在源文件中,给出 g2o 方法的实现 /src/g2o_types.cpp :

#include "myslam/g2o_types.h"

namespace myslam

{

void EdgeProjectXYZRGBD::computeError()

{

const g2o::VertexSBAPointXYZ* point = static_cast<const g2o::VertexSBAPointXYZ*> (_vertices[0]);

const g2o::VertexSE3Expmap* pose = static_cast<const g2o::VertexSE3Expmap*> (_vertices[1]);

_error = _measurement - pose->estimate().map(point->estimate());

}

void EdgeProjectXYZRGBD::linearizeOplus()

{

g2o::VertexSE3Expmap* pose = static_cast<g2o::VertexSE3Expmap *> (_vertices[1]);

g2o::SE3Quat T(pose->estimate());

g2o::VertexSBAPointXYZ* point = static_cast<g2o::VertexSBAPointXYZ*> (_vertices[0]);

Eigen::Vector3d xyz = point->estimate();

Eigen::Vector3d xyz_trans = T.map(xyz);

double x = xyz_trans[0];

double y = xyz_trans[1];

double z = xyz_trans[2];

_jacobianOplusXi = -T.rotation().toRotationMatrix();

_jacobianOplusXj(0, 0) = 0;

_jacobianOplusXj(0, 1) = -z;

_jacobianOplusXj(0, 2) = y;

_jacobianOplusXj(0, 3) = -1;

_jacobianOplusXj(0, 4) = 0;

_jacobianOplusXj(0, 5) = 0;

_jacobianOplusXj(1, 0) = z;

_jacobianOplusXj(1, 1) = 0;

_jacobianOplusXj(1, 2) = -x;

_jacobianOplusXj(1, 3) = 0;

_jacobianOplusXj(1, 4) = -1;

_jacobianOplusXj(1, 5) = 0;

_jacobianOplusXj(2, 0) = -y;

_jacobianOplusXj(2, 1) = x;

_jacobianOplusXj(2, 2) = 0;

_jacobianOplusXj(2, 3) = 0;

_jacobianOplusXj(2, 4) = 0;

_jacobianOplusXj(2, 5) = -1;

}

void EdgeProjectXYZRGBDPoseOnly::computeError()

{

const g2o::VertexSE3Expmap* pose = static_cast<const g2o::VertexSE3Expmap*> (_vertices[0]);

_error = _measurement - pose->estimate().map(point_);

}

void EdgeProjectXYZRGBDPoseOnly::linearizeOplus()

{

g2o::VertexSE3Expmap* pose = static_cast<g2o::VertexSE3Expmap*> (_vertices[0]);

g2o::SE3Quat T(pose->estimate());

Vector3d xyz_trans = T.map(point_);

double x = xyz_trans[0];

double y = xyz_trans[1];

double z = xyz_trans[2];

_jacobianOplusXi(0, 0) = 0;

_jacobianOplusXi(0, 1) = -z;

_jacobianOplusXi(0, 2) = y;

_jacobianOplusXi(0, 3) = -1;

_jacobianOplusXi(0, 4) = 0;

_jacobianOplusXi(0, 5) = 0;

_jacobianOplusXi(1, 0) = z;

_jacobianOplusXi(1, 1) = 0;

_jacobianOplusXi(1, 2) = -x;

_jacobianOplusXi(1, 3) = 0;

_jacobianOplusXi(1, 4) = -1;

_jacobianOplusXi(1, 5) = 0;

_jacobianOplusXi(2, 0) = -y;

_jacobianOplusXi(2, 1) = x;

_jacobianOplusXi(2, 2) = 0;

_jacobianOplusXi(2, 3) = 0;

_jacobianOplusXi(2, 4) = 0;

_jacobianOplusXi(2, 5) = -1;

}

void EdgeProjectXYZ2UVPoseOnly::computeError()

{

const g2o::VertexSE3Expmap* pose = static_cast<const g2o::VertexSE3Expmap*> (_vertices[0]);

_error = _measurement - camera_->camera2pixel(

pose->estimate().map(point_));

}

void EdgeProjectXYZ2UVPoseOnly::linearizeOplus()

{

g2o::VertexSE3Expmap* pose = static_cast<g2o::VertexSE3Expmap*> (_vertices[0]);

g2o::SE3Quat T(pose->estimate());

Vector3d xyz_trans = T.map(point_);

double x = xyz_trans[0];

double y = xyz_trans[1];

double z = xyz_trans[2];

double z_2 = z * z;

_jacobianOplusXi(0, 0) = x * y / z_2 * camera_->fx_;

_jacobianOplusXi(0, 1) = -(1 + (x*x / z_2)) *camera_->fx_;

_jacobianOplusXi(0, 2) = y / z * camera_->fx_;

_jacobianOplusXi(0, 3) = -1. / z * camera_->fx_;

_jacobianOplusXi(0, 4) = 0;

_jacobianOplusXi(0, 5) = x / z_2 * camera_->fx_;

_jacobianOplusXi(1, 0) = (1 + y * y / z_2) *camera_->fy_;

_jacobianOplusXi(1, 1) = -x * y / z_2 * camera_->fy_;

_jacobianOplusXi(1, 2) = -x / z * camera_->fy_;

_jacobianOplusXi(1, 3) = 0;

_jacobianOplusXi(1, 4) = -1. / z * camera_->fy_;

_jacobianOplusXi(1, 5) = y / z_2 * camera_->fy_;

}

}

3. 姿态估计

在视觉里程visual_odometry.cpp中的姿态估计有些改动:

void VisualOdometry::poseEstimationPnP()

{

// 构建3d、2d观测

vector<cv::Point3f> pts3d;

vector<cv::Point2f> pts2d;

for (cv::DMatch m : feature_matches_)

{

pts3d.push_back(pts_3d_ref_[m.queryIdx]);

pts2d.push_back(keypoints_curr_[m.trainIdx].pt);

}

Mat K = (cv::Mat_<double>(3, 3) <<

ref_->camera_->fx_, 0, ref_->camera_->cx_,

0, ref_->camera_->fy_, ref_->camera_->cy_,

0, 0, 1

);

Mat rvec, tvec, inliers;

cv::solvePnPRansac(pts3d, pts2d, K, Mat(), rvec, tvec, false, 100, 4.0, 0.99, inliers);

num_inliers_ = inliers.rows;

cout << "pnp inliers: " << num_inliers_ << endl;

T_c_r_estimated_ = SE3(

SO3(rvec.at<double>(0, 0), rvec.at<double>(1, 0), rvec.at<double>(2, 0)),

Vector3d(tvec.at<double>(0, 0), tvec.at<double>(1, 0), tvec.at<double>(2, 0))

);

// 优化姿态

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 2>> Block;

// 线性方程求解器

Block::LinearSolverType* linearSolver = new g2o::LinearSolverDense<Block::PoseMatrixType>();

// 矩阵块求解器

Block* solver_ptr = new Block(std::unique_ptr<Block::LinearSolverType>(linearSolver));

// 梯度下降方法

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(std::unique_ptr<Block>(solver_ptr));

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm(solver);

g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();

pose->setId(0);

pose->setEstimate(g2o::SE3Quat(

T_c_r_estimated_.rotation_matrix(),

T_c_r_estimated_.translation()

));

optimizer.addVertex(pose);

// edges

for (int i = 0; i < inliers.rows; i++)

{

int index = inliers.at<int>(i, 0);

// 三维->二维投影

EdgeProjectXYZ2UVPoseOnly* edge = new EdgeProjectXYZ2UVPoseOnly();

edge->setId(i);

edge->setVertex(0, pose);

edge->camera_ = curr_->camera_.get();

edge->point_ = Vector3d(pts3d[index].x, pts3d[index].y, pts3d[index].z);

edge->setMeasurement(Vector2d(pts2d[index].x, pts2d[index].y));

edge->setInformation(Eigen::Matrix2d::Identity());

optimizer.addEdge(edge);

}

optimizer.initializeOptimization();

optimizer.optimize(10);

T_c_r_estimated_ = SE3(

pose->estimate().rotation(),

pose->estimate().translation()

);

}

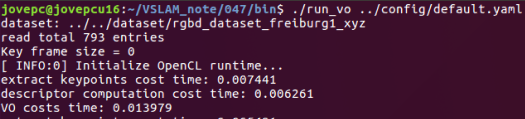

4. 结果对比

运行此程序,对比之前的结果

由于新增的优化仍是无结构的,规模很小,对计算时间的影响基本可以忽略不计

整体的视觉里程计计算时间仍在 14 毫秒左右

将发现估计的运动明显稳定了很多

参考:

相关推荐:

视觉SLAM笔记(46) 基本的 VO

视觉SLAM笔记(45) 搭建 VO 框架

视觉SLAM笔记(44) RGB-D 的直接法

视觉SLAM笔记(43) 直接法

视觉SLAM笔记(42) 光流法跟踪特征点