CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(vo1)

set(CMAKE_BUILD_TYPE "Release")

add_definitions("-DENABLE_SSE")

set(CMAKE_CXX_FLAGS "-std=c++11 -O2 ${SSE_FLAGS} -msse4")

list(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake)

find_package(OpenCV 3 REQUIRED)

find_package(G2O REQUIRED)

find_package(Sophus REQUIRED)

include_directories(

${OpenCV_INCLUDE_DIRS}

${G2O_INCLUDE_DIRS}

${Sophus_INCLUDE_DIRS}

"/usr/include/eigen3/"

)

add_executable(pose_estimation_3d2d pose_estimation_3d2d.cpp)

target_link_libraries(pose_estimation_3d2d

g2o_core g2o_stuff

${OpenCV_LIBS})

slambook2/ch7/pose_estimation_3d2d.cpp

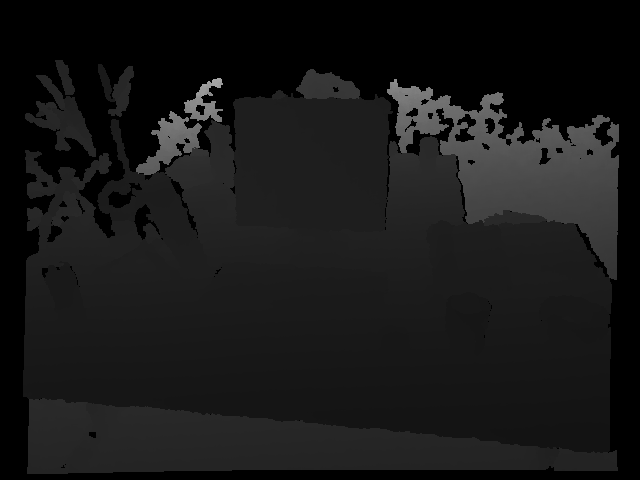

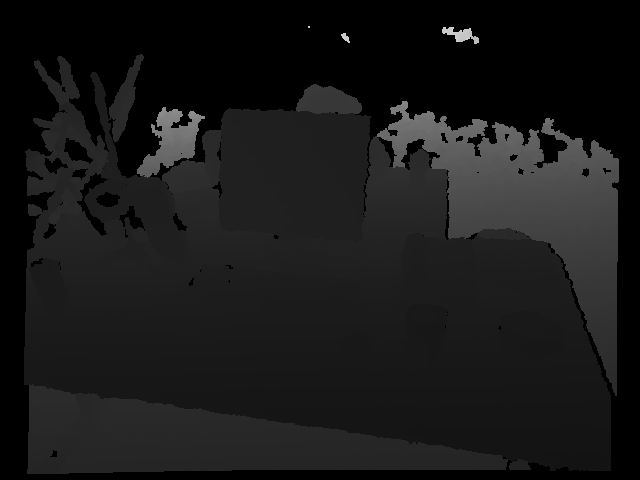

演示使用OpenCV的EPnP求解PnP问题,然后通过非线性化再次求解。由于PnP需要使用3D点,为了避免初始化带来的麻烦,我们使用了RGB-D相机中的深度图(1_depth.png) 作为特征点的3D位置。在例程中,得到配对特征点后,我们在第一个图的深度图中寻找它们的深度,并求出空间位置。以此空间位置为3D点,再以第二个图像的像素位置为2D点,调用EPnP求解PnP问题。

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include <Eigen/Core>

#include <sophus/se3.hpp>

#include <chrono>

#include <iomanip>

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches);

// 像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p, const Mat &K);

int main(int argc, char **argv) {

if (argc != 5) {

cout << "usage: pose_estimation_3d2d img1 img2 depth1 depth2" << endl;

return 1;

}

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

assert(img_1.data && img_2.data && "Can not load images!");

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "一共找到了" << matches.size() << "组匹配点" << endl;

// 建立3D点

Mat d1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED); // 深度图为16位无符号数,单通道图像

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point3f> pts_3d;

vector<Point2f> pts_2d;

for (DMatch m:matches) {

ushort d = d1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

if (d == 0) // bad depth

continue;

float dd = d / 5000.0;

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

pts_3d.push_back(Point3f(p1.x * dd, p1.y * dd, dd));

pts_2d.push_back(keypoints_2[m.trainIdx].pt);

}

cout << "3d-2d pairs: " << pts_3d.size() << endl;

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

Mat r, t;

solvePnP(pts_3d, pts_2d, K, Mat(), r, t, false); // 调用OpenCV 的 PnP 求解,可选择EPNP,DLS等方法

Mat R;

cv::Rodrigues(r, R); // r为旋转向量形式,用Rodrigues公式转换为矩阵

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in opencv cost time: " << time_used.count() << " seconds." << endl;

cout << "R=" << endl << R << endl;

cout << "t=" << endl << t << endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

//-- 初始化

Mat descriptors_1, descriptors_2;

// used in OpenCV3

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

// use this if you are in OpenCV2

// Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );

// Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

//-- 第一步:检测 Oriented FAST 角点位置

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector<DMatch> match;

// BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- 第四步:匹配点对筛选

double min_dist = 10000, max_dist = 0;

//找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

文件准备

1.png

2.png

1_depth.png

2_depth.png

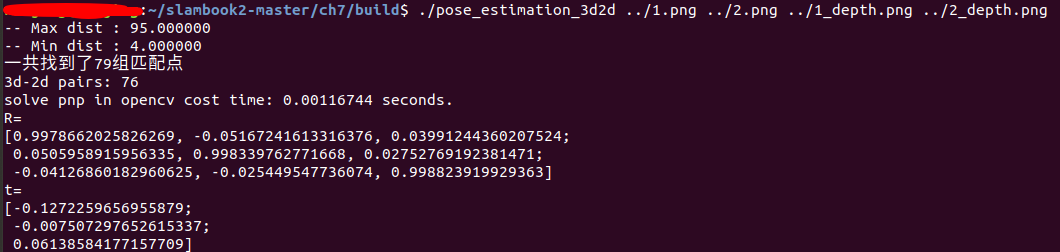

运行结果: