Introduction

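Yesterday's post looked at Kafka's message-handling mechanism at the micro level. But when you are facing a whole Kafka cluster, how do you keep it highly available at the macro level? Let's take a look.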


Viewing and Modifying Kafka Cluster Information in Real Time

The tool Kafka provides for viewing cluster information in real time is the topic tool, kafka-topics.sh.

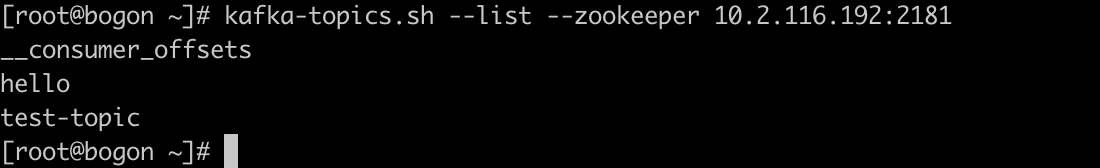

- List all topics currently available in the cluster

kafka-topics.sh --list --zookeeper 10.2.116.192:2181

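In the screenshot you can see two recognizable topics: one is the test topic created while setting up the cluster, and the other is the hello topic created by a Spring Boot application through the Kafka client.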

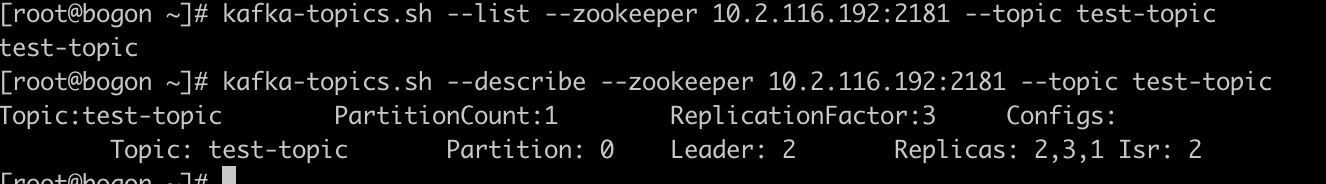

- Show information about a specific topic

kafka-topics.sh --list --zookeeper 10.2.116.192:2181 --topic test-topic

kafka-topics.sh --describe --zookeeper 10.2.116.192:2181 --topic test-topic

Modifying topics in real time with the topic tool

- Create a topic

[root@bogon ~]# kafka-topics.sh --create --zoookeeper 10.2.116.192:2181 --replication-factor 1 --partitions 1 --topic nihui

Exception in thread "main" joptsimple.UnrecognizedOptionException: zoookeeper is not a recognized option

at joptsimple.OptionException.unrecognizedOption(OptionException.java:108)

at joptsimple.OptionParser.handleLongOptionToken(OptionParser.java:510)

at joptsimple.OptionParserState$2.handleArgument(OptionParserState.java:56)

at joptsimple.OptionParser.parse(OptionParser.java:396)

at kafka.admin.TopicCommand$TopicCommandOptions.<init>(TopicCommand.scala:576)

at kafka.admin.TopicCommand$.main(TopicCommand.scala:49)

at kafka.admin.TopicCommand.main(TopicCommand.scala)

[root@bogon ~]#

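Why the error? When creating the topic I accidentally typed an extra "o" in the option name (--zoookeeper instead of --zookeeper). With the typo fixed, the topic is created successfully: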

[root@bogon ~]# kafka-topics.sh --create --zookeeper 10.2.116.192:2181 --replication-factor 1 --partitions 1 --topic nihui

Created topic nihui.

[root@bogon ~]#

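Listing the topics through a different node shows that, because the ZooKeeper ensemble replicates its data between leader and followers, a topic created through node A (10.2.116.192) is also visible when querying node B (10.2.116.190):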

[root@bogon ~]# kafka-topics.sh --list --zookeeper 10.2.116.190:2181

__consumer_offsets

hello

nihui

test-topic

[root@bogon ~]#

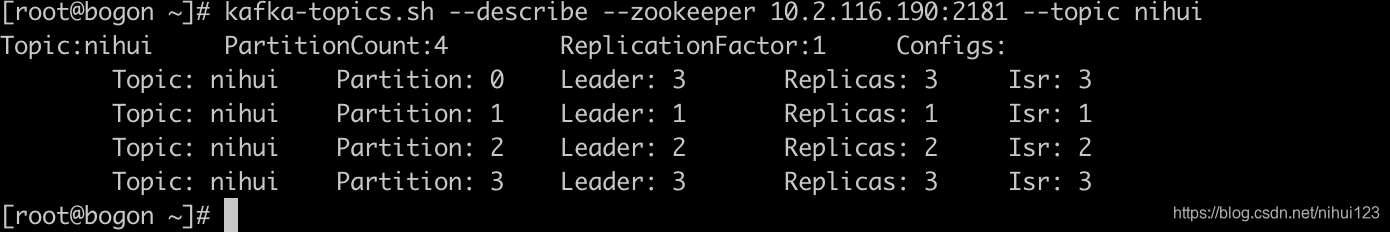

- Increase the number of partitions (it cannot be decreased)

Note that this prints a warning, but the operation itself succeeds:

[root@bogon ~]# kafka-topics.sh --zookeeper 10.2.116.120:2181 --alter --topic nihui --partitions 4

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!

[root@bogon ~]#
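The warning exists because, for records with a key, the producer's default partitioner picks the partition as hash(key) modulo the partition count, so changing the count changes where existing keys land and breaks per-key ordering across the change. The sketch below illustrates only that effect. It uses zlib.crc32 as a stand-in hash (the Java client actually uses murmur2), so the concrete partition numbers will not match a real producer; partition_for and moved_keys are hypothetical helper names.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash of the key modulo the partition count.

    zlib.crc32 is only a stand-in: Kafka's Java client hashes keys with murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def moved_keys(keys, old_count: int, new_count: int):
    """Keys that map to a different partition after the partition count changes."""
    return [k for k in keys if partition_for(k, old_count) != partition_for(k, new_count)]


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(10)]  # hypothetical record keys
    # nihui went from 1 to 4 partitions; with 1 partition every key was on partition 0.
    for k in keys:
        print(k, partition_for(k, 1), "->", partition_for(k, 4))
    print("keys that moved:", moved_keys(keys, 1, 4))
```

Any key printed as moved would now be written to a different partition than its older records, which is exactly what the warning is about.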

View the details:

[root@bogon ~]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic nihui

Topic:nihui PartitionCount:4 ReplicationFactor:1 Configs:

Topic: nihui Partition: 0 Leader: 3 Replicas: 3 Isr: 3

Topic: nihui Partition: 1 Leader: 1 Replicas: 1 Isr: 1

Topic: nihui Partition: 2 Leader: 2 Replicas: 2 Isr: 2

Topic: nihui Partition: 3 Leader: 3 Replicas: 3 Isr: 3

[root@bogon ~]#

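Topic-level configurations can be modified as well; the official Kafka documentation lists which settings can be changed. That is all for the basic operations here. For other commands, look up their usage when you need them: running a tool without arguments prints its available options.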

Kafka Cluster Leader Balancing

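Take the topic nihui above as an example. All the replicas of a partition are called its "assigned replicas", and the first replica in that list is called the "preferred replica". For a newly created topic, the preferred replica is usually the leader. As the describe output above shows, broker 3 is the preferred replica of partition 0, so by default it becomes that partition's leader.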
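To spot partitions whose leader has drifted away from the preferred replica, you can parse the per-partition lines of kafka-topics.sh --describe and compare Leader with the first entry of Replicas. A minimal sketch, assuming the line format shown above (newer versions separate fields with tabs, which the regex also accepts); the demo topic in SAMPLE is hypothetical, and parse_describe and not_led_by_preferred are illustrative names.

```python
import re
from typing import List, NamedTuple


class PartitionInfo(NamedTuple):
    topic: str
    partition: int
    leader: int
    replicas: List[int]
    isr: List[int]


# Matches lines like "Topic: nihui Partition: 0 Leader: 3 Replicas: 3 Isr: 3".
# The summary line ("Topic:nihui PartitionCount:4 ...") does not match.
LINE_RE = re.compile(
    r"Topic:\s*(\S+)\s+Partition:\s*(\d+)\s+Leader:\s*(-?\d+)\s+"
    r"Replicas:\s*([\d,]+)\s+Isr:\s*([\d,]*)"
)


def _ids(text: str) -> List[int]:
    """Turn "3,1,2" into [3, 1, 2]."""
    return [int(x) for x in text.split(",") if x]


def parse_describe(output: str) -> List[PartitionInfo]:
    """Parse the per-partition lines of kafka-topics.sh --describe output."""
    parts = []
    for line in output.splitlines():
        m = LINE_RE.search(line)
        if m:
            topic, part, leader, replicas, isr = m.groups()
            parts.append(PartitionInfo(topic, int(part), int(leader), _ids(replicas), _ids(isr)))
    return parts


def not_led_by_preferred(parts: List[PartitionInfo]) -> List[PartitionInfo]:
    """Partitions whose leader is not the preferred (first assigned) replica."""
    return [p for p in parts if p.replicas and p.leader != p.replicas[0]]


# Two lines from the nihui output above plus one hypothetical imbalanced partition.
SAMPLE = """Topic:nihui PartitionCount:4 ReplicationFactor:1 Configs:
Topic: nihui Partition: 0 Leader: 3 Replicas: 3 Isr: 3
Topic: nihui Partition: 1 Leader: 1 Replicas: 1 Isr: 1
Topic: demo Partition: 0 Leader: 2 Replicas: 1,2 Isr: 2,1
"""

if __name__ == "__main__":
    for p in not_led_by_preferred(parse_describe(SAMPLE)):
        print(f"{p.topic}-{p.partition}: leader {p.leader}, preferred {p.replicas[0]}")
```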

So how is the cluster kept balanced?

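Because brokers frequently go offline and come back, the cluster keeps re-electing leaders, and a partition's preferred replica may end up not being its leader. To hand leadership back to the preferred replicas, run a preferred replica election: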

[root@bogon ~]# kafka-preferred-replica-election.sh --zookeeper 10.2.116.190

Warning: --zookeeper is deprecated and will be removed in a future version of Kafka.

Use --bootstrap-server instead to specify a broker to connect to.

Created preferred replica election path with __consumer_offsets-22,__consumer_offsets-30,__consumer_offsets-8,__consumer_offsets-21,__consumer_offsets-4,__consumer_offsets-27,__consumer_offsets-7,__consumer_offsets-9,__consumer_offsets-46,nihui-0,__consumer_offsets-25,__consumer_offsets-35,__consumer_offsets-41,__consumer_offsets-33,__consumer_offsets-23,__consumer_offsets-49,__consumer_offsets-47,__consumer_offsets-16,__consumer_offsets-28,__consumer_offsets-31,__consumer_offsets-36,__consumer_offsets-42,__consumer_offsets-3,__consumer_offsets-18,__consumer_offsets-37,__consumer_offsets-15,__consumer_offsets-24,__consumer_offsets-38,__consumer_offsets-17,nihui-2,__consumer_offsets-48,hello-0,__consumer_offsets-19,__consumer_offsets-11,__consumer_offsets-13,__consumer_offsets-2,__consumer_offsets-43,__consumer_offsets-6,__consumer_offsets-14,nihui-3,test-topic-0,__consumer_offsets-20,nihui-1,__consumer_offsets-0,__consumer_offsets-44,__consumer_offsets-39,__consumer_offsets-12,__consumer_offsets-45,__consumer_offsets-1,__consumer_offsets-5,__consumer_offsets-26,__consumer_offsets-29,__consumer_offsets-34,__consumer_offsets-10,__consumer_offsets-32,__consumer_offsets-40

Successfully started preferred replica election for partitions Set(__consumer_offsets-22, __consumer_offsets-30, __consumer_offsets-8, __consumer_offsets-21, __consumer_offsets-4, __consumer_offsets-27, __consumer_offsets-7, __consumer_offsets-9, __consumer_offsets-46, nihui-0, __consumer_offsets-25, __consumer_offsets-35, __consumer_offsets-41, __consumer_offsets-33, __consumer_offsets-23, __consumer_offsets-49, __consumer_offsets-47, __consumer_offsets-16, __consumer_offsets-28, __consumer_offsets-31, __consumer_offsets-36, __consumer_offsets-42, __consumer_offsets-3, __consumer_offsets-18, __consumer_offsets-37, __consumer_offsets-15, __consumer_offsets-24, __consumer_offsets-38, __consumer_offsets-17, nihui-2, __consumer_offsets-48, hello-0, __consumer_offsets-19, __consumer_offsets-11, __consumer_offsets-13, __consumer_offsets-2, __consumer_offsets-43, __consumer_offsets-6, __consumer_offsets-14, nihui-3, test-topic-0, __consumer_offsets-20, nihui-1, __consumer_offsets-0, __consumer_offsets-44, __consumer_offsets-39, __consumer_offsets-12, __consumer_offsets-45, __consumer_offsets-1, __consumer_offsets-5, __consumer_offsets-26, __consumer_offsets-29, __consumer_offsets-34, __consumer_offsets-10, __consumer_offsets-32, __consumer_offsets-40)

[root@bogon ~]#
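As the output shows, running the tool with only --zookeeper starts an election for every partition in the cluster, including all the internal __consumer_offsets partitions. The tool also accepts --path-to-json-file with a list of partitions in the form {"partitions": [{"topic": ..., "partition": ...}]}, which limits the election to those partitions. A small sketch for building that file; election_json is an illustrative name, and selecting the nihui partitions is just an example.

```python
import json
from typing import Iterable, Tuple


def election_json(partitions: Iterable[Tuple[str, int]]) -> str:
    """Build the partition list for kafka-preferred-replica-election.sh --path-to-json-file.

    Format: {"partitions": [{"topic": "...", "partition": N}, ...]}
    """
    return json.dumps(
        {"partitions": [{"topic": t, "partition": p} for t, p in partitions]},
        indent=2,
    )


if __name__ == "__main__":
    # Example selection: only the four nihui partitions.
    print(election_json(("nihui", p) for p in range(4)))
```

Redirect the output into a file (election.json is a hypothetical name) and pass it as --path-to-json-file election.json alongside --zookeeper.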

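Leader balancing can also be configured directly by adding the following setting to the broker configuration file (server.properties). I could not demonstrate it on my self-built test cluster, but with this setting enabled, preferred replica balancing happens automatically (current Kafka versions already default it to true).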

auto.leader.rebalance.enable=true
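With this setting on, the controller periodically (every leader.imbalance.check.interval.seconds, 300 by default) computes for each broker the share of partitions whose preferred replica is that broker but whose leader is some other broker, and triggers a preferred replica election when that share exceeds leader.imbalance.per.broker.percentage (10 by default). The sketch below reproduces only that arithmetic as a simplified model, not the controller's code; the partition data and helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (current leader, assigned replicas); replicas[0] is the preferred replica.
Partition = Tuple[int, List[int]]


def imbalance_ratios(partitions: List[Partition]) -> Dict[int, float]:
    """Per preferred broker: fraction of its partitions currently led by another broker."""
    total = defaultdict(int)
    not_led = defaultdict(int)
    for leader, replicas in partitions:
        preferred = replicas[0]
        total[preferred] += 1
        if leader != preferred:
            not_led[preferred] += 1
    return {broker: not_led[broker] / total[broker] for broker in total}


def brokers_to_rebalance(partitions: List[Partition], percentage: int = 10) -> List[int]:
    """Brokers whose ratio exceeds leader.imbalance.per.broker.percentage."""
    return sorted(b for b, r in imbalance_ratios(partitions).items() if r > percentage / 100)


if __name__ == "__main__":
    # Hypothetical cluster state: broker 1 has lost leadership of one of its two partitions.
    state = [(1, [1, 2]), (2, [1, 2]), (2, [2, 1]), (3, [3, 1])]
    print(imbalance_ratios(state))
    print(brokers_to_rebalance(state))
```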

Migrating Partition Logs in the Cluster

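Suppose the cluster starts with only the current three machines, so all logs live on them. If more machines are added later as needs grow, the new machines hold no data at all, so existing data has to be moved onto them. That is what partition log migration is for.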

Partition log migration falls into two cases:

- Migrating all the data of a topic

- Migrating only one partition of a topic

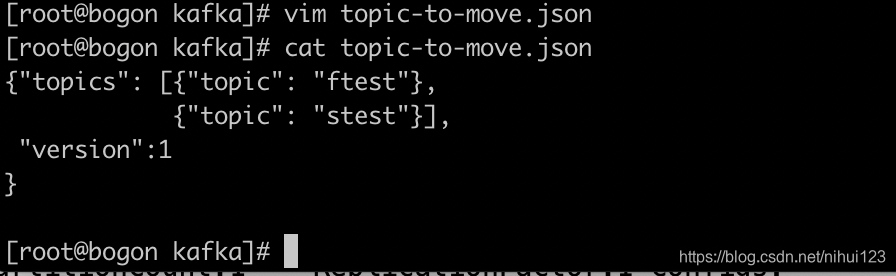

Migrating an entire topic to other brokers

Write a JSON file in the following format:

{"topics": [{"topic": "foo1"},
            {"topic": "foo2"}],
 "version": 1
}
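If you move topics often, the file can be generated rather than typed. A minimal sketch that builds the same structure for the two test topics created below; topics_to_move_json is an illustrative name.

```python
import json
from typing import Iterable


def topics_to_move_json(topics: Iterable[str]) -> str:
    """Build the file passed to kafka-reassign-partitions.sh --topics-to-move-json-file."""
    return json.dumps({"topics": [{"topic": t} for t in topics], "version": 1})


if __name__ == "__main__":
    # Redirect into topic-to-move.json, the file name used in the commands below.
    print(topics_to_move_json(["ftest", "stest"]))
```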

Create two test topics:

[root@bogon ~]# kafka-topics.sh --create --zookeeper 10.2.116.192:2181 --replication-factor 1 --partitions 1 --topic ftest

Created topic ftest.

[root@bogon ~]# kafka-topics.sh --create --zookeeper 10.2.116.192:2181 --replication-factor 1 --partitions 1 --topic stest

Created topic stest.

[root@bogon ~]#

[root@bogon ~]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic ftest

Topic:ftest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: ftest Partition: 0 Leader: 1 Replicas: 1 Isr: 1

[root@bogon ~]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic stest

Topic:stest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: stest Partition: 0 Leader: 2 Replicas: 2 Isr: 2

[root@bogon ~]#

Use --generate to produce a migration plan that moves one or more topics to the specified brokers.

The following command plans to move topics ftest and stest to brokers 5 and 6:

[root@bogon kafka]# kafka-reassign-partitions.sh --zookeeper 10.2.116.190:2181 --topics-to-move-json-file topic-to-move.json --broker-list "5,6" --generate

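Note that this step only produces a plan; it does not move any data. My test cluster only has three brokers (1, 2 and 3), so the runs that follow use broker 3 as the target instead.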
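To make clear what a "plan" is, here is a simplified generator that places each partition's replicas round-robin over the target brokers and emits the same JSON shape the tool prints (without the log_dirs field). This is an illustration only: Kafka's own --generate uses its own placement algorithm, so its proposals will generally differ. propose is a hypothetical helper name.

```python
from typing import Dict, List


def propose(partitions: Dict[str, int], brokers: List[int], replication_factor: int) -> dict:
    """Round-robin replica placement over the target brokers (simplified).

    partitions maps topic name -> partition count.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor exceeds the number of target brokers")
    plan, slot = [], 0
    for topic in sorted(partitions):
        for p in range(partitions[topic]):
            # consecutive brokers, starting one further along for each partition
            replicas = [brokers[(slot + i) % len(brokers)] for i in range(replication_factor)]
            plan.append({"topic": topic, "partition": p, "replicas": replicas})
            slot += 1
    return {"version": 1, "partitions": plan}


if __name__ == "__main__":
    print(propose({"ftest": 1, "stest": 1}, [5, 6], 1))
    print(propose({"ftest": 1, "stest": 1}, [3], 1))
```

With a single target broker the result matches the proposal shown below for broker 3 (apart from log_dirs), since there is only one possible placement.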

[root@bogon kafka]# kafka-reassign-partitions.sh --zookeeper 10.2.116.190:2181 --topic-to-move-json-file topic-to-move.json --broker-list "3" -generate

Exception in thread "main" joptsimple.UnrecognizedOptionException: topic-to-move-json-file is not a recognized option

at joptsimple.OptionException.unrecognizedOption(OptionException.java:108)

at joptsimple.OptionParser.handleLongOptionToken(OptionParser.java:510)

at joptsimple.OptionParserState$2.handleArgument(OptionParserState.java:56)

at joptsimple.OptionParser.parse(OptionParser.java:396)

at kafka.admin.ReassignPartitionsCommand$ReassignPartitionsCommandOptions.<init>(ReassignPartitionsCommand.scala:500)

at kafka.admin.ReassignPartitionsCommand$.validateAndParseArgs(ReassignPartitionsCommand.scala:416)

at kafka.admin.ReassignPartitionsCommand$.main(ReassignPartitionsCommand.scala:52)

at kafka.admin.ReassignPartitionsCommand.main(ReassignPartitionsCommand.scala)

[root@bogon kafka]#

The option name was mistyped (topic-to-move instead of topics-to-move). The correct command is:

[root@bogon kafka]# kafka-reassign-partitions.sh --zookeeper 10.2.116.190:2181 --topics-to-move-json-file topic-to-move.json --broker-list "3" -generate

Current partition replica assignment

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[2],"log_dirs":["any"]}]}

Proposed partition reassignment configuration

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[3],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[3],"log_dirs":["any"]}]}

[root@bogon kafka]#

Checking the topics shows that nothing has changed yet:

[root@bogon kafka]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic ftest

Topic:ftest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: ftest Partition: 0 Leader: 1 Replicas: 1 Isr: 1

[root@bogon kafka]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic stest

Topic:stest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: stest Partition: 0 Leader: 2 Replicas: 2 Isr: 2

[root@bogon kafka]#

Next, save the second JSON (the proposed reassignment) to a file:

[root@bogon kafka]# vim expand-cluster-reassignment.json

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[3],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[3],"log_dirs":["any"]}]}

Run the plan with --execute, which produces the following:

[root@bogon kafka]# kafka-reassign-partitions.sh --zookeeper 10.2.116.190 --reassignment-json-file expand-cluster-reassignment.json --execute

Current partition replica assignment

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[2],"log_dirs":["any"]}]}

Save this to use as the --reassignment-json-file option during rollback

Successfully started reassignment of partitions.

[root@bogon kafka]#

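Notice that after a successful run the tool prints the current assignment and tells you to save it for rollback.

It is best to save this current assignment (the first JSON in the output) before going further. If the migration does not succeed, you can roll back by running the tool again with that saved file as --reassignment-json-file.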
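Saving the rollback file can be scripted by pulling the JSON line that follows the "Current partition replica assignment" header out of the tool's output. A minimal sketch, assuming the output layout shown above; the same function works on --generate output with the header "Proposed partition reassignment configuration". extract_assignment and the rollback.json file name are hypothetical.

```python
import json

# The --execute output shown above.
EXECUTE_OUTPUT = """Current partition replica assignment

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[2],"log_dirs":["any"]}]}

Save this to use as the --reassignment-json-file option during rollback
Successfully started reassignment of partitions.
"""


def extract_assignment(output: str, header: str = "Current partition replica assignment") -> dict:
    """Return the first JSON object printed after `header` in kafka-reassign-partitions.sh output."""
    lines = output.splitlines()
    for i, line in enumerate(lines):
        if line.strip() == header:
            for candidate in lines[i + 1:]:
                if candidate.strip().startswith("{"):
                    return json.loads(candidate)
    raise ValueError(f"no JSON found after {header!r}")


if __name__ == "__main__":
    rollback = extract_assignment(EXECUTE_OUTPUT)
    # Save this as e.g. rollback.json and keep it until --verify reports success.
    print(json.dumps(rollback))
```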

Verify with the describe command used earlier:

[root@bogon kafka]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic ftest

Topic:ftest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: ftest Partition: 0 Leader: 3 Replicas: 3 Isr: 3

[root@bogon kafka]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic stest

Topic:stest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: stest Partition: 0 Leader: 3 Replicas: 3 Isr: 3

[root@bogon kafka]#

This completes the migration: the data of both entire topics has been moved.

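That showed how to migrate entire topics; now let's see how to migrate only part of them. We moved both ftest and stest to broker 3 above. What if we now want to move ftest back to broker 1? For that, edit the reassignment file we just executed so that ftest's replica is 1 while stest stays on 3.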
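The edit can also be done programmatically on the parsed plan instead of in vim. A minimal sketch, assuming the plan layout shown in this post; when a log_dirs list is present it is reset to "any" for each replica, since that list has to match the replicas list in length. with_replicas is a hypothetical helper name.

```python
import copy
import json

# The plan executed above (both topics on broker 3).
PLAN = '{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[3],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[3],"log_dirs":["any"]}]}'


def with_replicas(plan: dict, topic: str, partition: int, replicas: list) -> dict:
    """Return a copy of a reassignment plan with one partition's replicas replaced."""
    new_plan = copy.deepcopy(plan)
    for entry in new_plan["partitions"]:
        if entry["topic"] == topic and entry["partition"] == partition:
            entry["replicas"] = list(replicas)
            if "log_dirs" in entry:
                # one log_dirs entry per replica; "any" lets the broker choose
                entry["log_dirs"] = ["any"] * len(replicas)
            return new_plan
    raise KeyError(f"{topic}-{partition} is not in the plan")


if __name__ == "__main__":
    # Move ftest back to broker 1; stest stays on broker 3.
    print(json.dumps(with_replicas(json.loads(PLAN), "ftest", 0, [1])))
```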

[root@bogon kafka]# cat expand-cluster-reassignment.json

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[3],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[3],"log_dirs":["any"]}]}

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[3],"log_dirs":["any"]}]}

[root@bogon kafka]# vim expand-cluster-reassignment.json

[root@bogon kafka]# kafka-reassign-partitions.sh --zookeeper 10.2.116.190 --reassignment-json-file expand-cluster-reassignment.json --execute

Current partition replica assignment

{"version":1,"partitions":[{"topic":"ftest","partition":0,"replicas":[3],"log_dirs":["any"]},{"topic":"stest","partition":0,"replicas":[3],"log_dirs":["any"]}]}

Save this to use as the --reassignment-json-file option during rollback

Successfully started reassignment of partitions.

[root@bogon kafka]#

Check the result:

[root@bogon kafka]# kafka-topics.sh --describe --zookeeper 10.2.116.190:2181 --topic ftest

Topic:ftest PartitionCount:1 ReplicationFactor:1 Configs:

Topic: ftest Partition: 0 Leader: 1 Replicas: 1 Isr: 1

[root@bogon kafka]#

Use --verify to check whether the reassignment has finished:

[root@bogon kafka]# kafka-reassign-partitions.sh --zookeeper 10.2.116.190 --reassignment-json-file expand-cluster-reassignment.json --verify

Status of partition reassignment:

Reassignment of partition stest-0 completed successfully

Reassignment of partition ftest-0 completed successfully

[root@bogon kafka]#
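When a script needs to wait for a reassignment, it can poll --verify and check that every partition reports "completed successfully". A minimal sketch based on the output above; any other status text (for example an in-progress message, whose exact wording is an assumption here) is simply treated as not finished. reassignment_status and all_completed are hypothetical names.

```python
import re
from typing import Dict

# The --verify output shown above.
VERIFY_OUTPUT = """Status of partition reassignment:
Reassignment of partition stest-0 completed successfully
Reassignment of partition ftest-0 completed successfully
"""

STATUS_RE = re.compile(r"Reassignment of partition (\S+) (.+)$")


def reassignment_status(output: str) -> Dict[str, str]:
    """Map partition name (e.g. "ftest-0") to the status text printed by --verify."""
    status = {}
    for line in output.splitlines():
        m = STATUS_RE.match(line.strip())
        if m:
            status[m.group(1)] = m.group(2)
    return status


def all_completed(output: str) -> bool:
    """True only if at least one partition is listed and all of them completed."""
    status = reassignment_status(output)
    return bool(status) and all(s == "completed successfully" for s in status.values())


if __name__ == "__main__":
    print(reassignment_status(VERIFY_OUTPUT), all_completed(VERIFY_OUTPUT))
```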

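This ties back to the leader balancing mechanism described earlier. After many reassignments like these, the preferred replica of some partitions may no longer be their leader. Running the balancing mechanism spreads leadership evenly across the brokers again, and with it the produce and consume load. Note that it only moves leadership; it does not move any data.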

Notes

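The partition reassignment tool copies the log files on disk and deletes the original log files only after the copy has fully completed. Keep the following points in mind when reassigning partitions: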

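- 1. The tool works at the granularity of a broker, not of a directory on the broker. If a broker has several log directories, partitions are spread across them according to how many partitions each directory already holds, so if the data of different topics, or of different partitions of one topic, is uneven to begin with, disk usage ends up uneven too. (Kafka 1.1 and later add a log_dirs field to the reassignment JSON, visible as "log_dirs":["any"] in the output above, which can target a specific directory.)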

- 2. Reassigning partitions that hold a lot of data takes a long time, so run reassignments when the topic holds little data or the disks hold little live data.

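- 3. When reassigning a partition, it is best to keep at least one replica on its original broker at each step so that normal production and consumption are not affected. For example, to move partition 5 from brokers 1,5 to brokers 2,3, first move it to 2,1 and then to 2,3, rather than moving it in one step; otherwise normal production and consumption may be disrupted.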
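The staged approach in point 3 can be sketched as a small planner: each intermediate step swaps one outgoing broker for one incoming broker, so at least one original replica survives until the final step. This assumes the replication factor stays the same and that each step is written into its own reassignment JSON, executed, and confirmed with --verify before the next. staged_plan is a hypothetical helper name.

```python
from typing import List


def staged_plan(current: List[int], target: List[int]) -> List[List[int]]:
    """Split a replica move into steps that each keep at least one original replica."""
    if len(current) != len(target):
        raise ValueError("this sketch assumes the replication factor stays the same")
    to_add = [b for b in target if b not in current]
    replicas = list(current)
    steps = []
    # Swap in all but the last new broker one at a time; the final step applies the target.
    for added, broker in enumerate(to_add[:-1]):
        # drop the last replica that will not be part of the target
        for i in range(len(replicas) - 1, -1, -1):
            if replicas[i] not in target:
                del replicas[i]
                break
        replicas.insert(added, broker)
        steps.append(list(replicas))
    steps.append(list(target))
    return steps


if __name__ == "__main__":
    # The example from point 3: partition 5 on brokers 1,5 moving to 2,3.
    print(staged_plan([1, 5], [2, 3]))
```

For the example in point 3 this yields the two steps 2,1 and then 2,3.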

Summary

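As shown above, to practice these cluster operations you either need access to a test (or production) environment, or you can build your own Kafka cluster following the rules, as I did here. Hands-on practice like this deepens your understanding of Kafka clusters and gives both theoretical and practical support for sound development.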