1. Processing JSON strings with the json_tuple function

// Create an external table that stores each whole JSON string in a single column

use hive_01;

create external table weibo_json(json string) location '/usr/test/weibo_info';

// Load the data

load data local inpath '/usr/test/testdate/weibo' into table weibo_json;

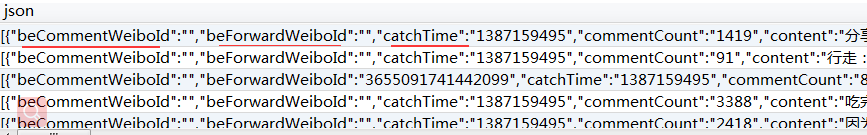

select * from weibo_json;

// Create a managed (internal) table for Weibo statistics

create table weibo_info(
  beCommentWeiboId string,
  beForwardWeiboId string,
  catchTime string,
  commentCount int,
  content string,
  createTime string,
  info1 string,
  info2 string,
  info3 string,
  mlevel string,
  musicurl string,
  pic_list string,
  praiseCount int,
  reportCount int,
  source string,
  userId string,
  videourl string,
  weiboId string,
  weiboUrl string)
row format delimited fields terminated by '\t';

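// json_tuple(json_string, 'key1', 'key2', ...): takes a JSON string followed by the name of each field to extract, and returns one string column per field

// Strip the first and last character of each line (such as brackets wrapping the JSON object), then load the extracted fields into weibo_info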

insert overwrite table weibo_info
select json_tuple(substring(a.json, 2, length(a.json) - 2),
  "beCommentWeiboId", "beForwardWeiboId", "catchTime", "commentCount", "content",
  "createTime", "info1", "info2", "info3", "mlevel", "musicurl", "pic_list",
  "praiseCount", "reportCount", "source", "userId", "videourl", "weiboId", "weiboUrl")
from weibo_json a;

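The insert above relies on two functions. As a rough Python model (an approximation, not Hive's implementation), `substring(s, 2, length(s) - 2)` uses 1-based positions and so drops the first and last characters, and `json_tuple` returns one string per requested key, with NULL (here `None`) for absent keys. The sample line and the helper names `hive_substring` and `json_tuple_model` are hypothetical.

```python
import json
from typing import Optional


def hive_substring(s: str, pos: int, length: int) -> str:
    """Model of Hive substring(s, pos, len) for pos >= 1 (positions are 1-based)."""
    start = pos - 1
    return s[start:start + length]


def json_tuple_model(json_str: str, *keys: str) -> list[Optional[str]]:
    """Approximate json_tuple: one string per key, None for a missing key."""
    try:
        obj = json.loads(json_str)
    except json.JSONDecodeError:
        # In this model, invalid JSON yields None for every requested key
        return [None] * len(keys)
    if not isinstance(obj, dict):
        return [None] * len(keys)
    out: list[Optional[str]] = []
    for key in keys:
        value = obj.get(key)
        if value is None:
            out.append(None)
        elif isinstance(value, str):
            out.append(value)
        else:
            # Numbers, booleans and nested objects come back as JSON text
            out.append(json.dumps(value))
    return out


# Hypothetical raw line: one JSON object wrapped in [ ]
line = '[{"weiboId":"w1","commentCount":3,"content":"hello"}]'
inner = hive_substring(line, 2, len(line) - 2)
print(inner)
print(json_tuple_model(inner, "weiboId", "commentCount", "content", "userId"))
```

Every value comes back as a string, which is why Hive has to convert fields such as commentCount when inserting into the int columns of weibo_info.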
2. Other operations

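// Change a partition's location (existing data is not moved, so the new location does not contain the partition's previous data)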

alter table test_partition partition(year=2016) set location '/user/hive/warehouse/new_part/hive_01.db/test_partition/year=2016';

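// Sync the metastore with HDFS: register partitions whose directories exist under the table location but are missing from the metadata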

msck repair table test_partition;

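// Convert a Unix timestamp in seconds (ts is a placeholder column) to a date string; the result is in the local time zone

FROM_UNIXTIME(ts, 'yyyy-MM');

FROM_UNIXTIME(ts, 'yyyy-MM-dd');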
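FROM_UNIXTIME formats seconds since the Unix epoch with a Java-style date pattern. The Python sketch below imitates this for the two patterns above only; the pattern-to-strftime mapping, the function name `from_unixtime_model` and the fixed UTC time zone are assumptions chosen so the output is predictable (Hive itself uses the local time zone).

```python
from datetime import datetime, timezone

# Assumed mapping from the two Java-style patterns above to strftime codes
PATTERNS = {
    "yyyy-MM": "%Y-%m",
    "yyyy-MM-dd": "%Y-%m-%d",
}


def from_unixtime_model(seconds: int, pattern: str, tz=timezone.utc) -> str:
    """Format Unix seconds like FROM_UNIXTIME (only the patterns in PATTERNS)."""
    return datetime.fromtimestamp(seconds, tz=tz).strftime(PATTERNS[pattern])


ts = 1_462_060_800  # hypothetical timestamp: 2016-05-01 00:00:00 UTC
print(from_unixtime_model(ts, "yyyy-MM"))
print(from_unixtime_model(ts, "yyyy-MM-dd"))
```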

// A table with complex data types

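create table test02(
  id int,
  name string,
  hobby array<string>,                -- array type
  decs struct<age:int,addr:string>,   -- struct: like an object with named fields
  others map<string,string>           -- map type: stores key-value pairs
)
row format delimited
fields terminated by ','              -- delimiter between columns
collection items terminated by ':'    -- delimiter between array elements, struct fields and map entries
map keys terminated by '-'            -- delimiter between a map key and its value
;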

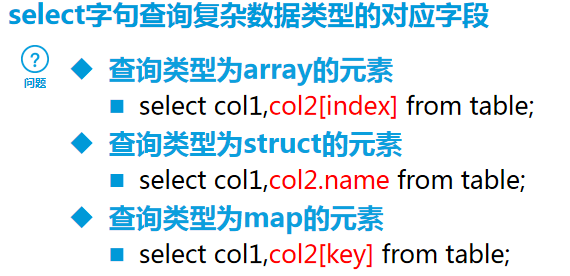

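// Querying complex-type columns: array elements by index (indexes start at 0, so hobby[1] is the second element), struct fields with a dot, map values by key ('key' is a placeholder for an actual map key)

select id, name, hobby[1], decs.age, others['key'] from test02;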
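To see how the three delimiters in the test02 definition split one line of text, here is a simplified Python model. The data line, the column values and the function name `parse_test02_line` are hypothetical, and unlike Hive (which yields NULL for malformed or missing values) this model raises an error on bad input.

```python
from typing import Any

FIELD_DELIM = ","       # fields terminated by ','
COLLECTION_DELIM = ":"  # collection items terminated by ':'
MAP_KEY_DELIM = "-"     # map keys terminated by '-'


def parse_test02_line(line: str) -> dict[str, Any]:
    """Split one text line the way the test02 delimiters describe (simplified)."""
    id_, name, hobby, decs, others = line.split(FIELD_DELIM)
    age, addr = decs.split(COLLECTION_DELIM)
    return {
        "id": int(id_),
        "name": name,
        "hobby": hobby.split(COLLECTION_DELIM),   # array<string>
        "decs": {"age": int(age), "addr": addr},  # struct<age:int,addr:string>
        "others": dict(                           # map<string,string>
            entry.split(MAP_KEY_DELIM, 1) for entry in others.split(COLLECTION_DELIM)
        ),
    }


# Hypothetical data line for test02
row = parse_test02_line("1,zhangsan,reading:music:swimming,20:beijing,k1-v1:k2-v2")
# Roughly: select id, name, hobby[1], decs.age, others['k1'] from test02;
print(row["id"], row["name"], row["hobby"][1], row["decs"]["age"], row["others"]["k1"])
```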

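Inner join: inner join ... on (returns only the rows that satisfy the join condition in both tables)

Left outer join: left outer join ... on (keeps every row of the left table; where the right table has no match, its columns are NULL)

Right outer join: right outer join ... on (keeps every row of the right table; where the left table has no match, its columns are NULL)

Full outer join: full outer join ... on (returns the rows of both tables, matched where possible and padded with NULL where not)

Left semi join: left semi join ... on (returns only left-table columns, each left row at most once; the right table may be referenced only in the ON clause; equivalent to an IN/EXISTS subquery)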

select count(u.uid) from user_login_info u left semi join weibo_info w on u.uid=w.userid;
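The Python sketch below models the five join types on two small in-memory lists so their results can be compared. The sample rows and function names are hypothetical, and simple nested loops stand in for Hive's distributed join execution.

```python
# Hypothetical rows: (uid, name) on the left, (userid, weiboId) on the right
users = [(1, "a"), (2, "b"), (3, "c")]
weibos = [(1, "w1"), (1, "w2"), (4, "w3")]


def inner_join(left, right):
    return [(l, r) for l in left for r in right if l[0] == r[0]]


def left_outer_join(left, right):
    out = []
    for l in left:
        matches = [r for r in right if r[0] == l[0]]
        if matches:
            out.extend((l, r) for r in matches)
        else:
            out.append((l, None))  # no match: the right side is NULL
    return out


def right_outer_join(left, right):
    # Mirror of the left outer join that keeps every right row
    return [(l, r) for r, l in left_outer_join(right, left)]


def full_outer_join(left, right):
    out = left_outer_join(left, right)
    left_keys = {l[0] for l in left}
    out.extend((None, r) for r in right if r[0] not in left_keys)
    return out


def left_semi_join(left, right):
    # Left columns only, each left row at most once, like WHERE uid IN (SELECT userid ...)
    right_keys = {r[0] for r in right}
    return [l for l in left if l[0] in right_keys]


print(len(inner_join(users, weibos)), len(left_semi_join(users, weibos)))
```

User 1 has two weibos, so the inner join returns it twice while the semi join returns it once; that difference is why a semi join gives a per-user count in a query like the count(u.uid) one above.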

3. Sorting

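SORT BY is a local sort: each reducer sorts only the rows it receives, and the reducers' outputs together form the result. To get a globally sorted result, one more merge of these sorted outputs is enough. Set the number of reducers to suit the actual data.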

// Set the number of reducers (the default, -1, lets Hive estimate it from the input size)

set mapreduce.job.reduces=5;
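A small Python model of SORT BY followed by the extra merge step. The round-robin assignment of rows to reducers is an assumption for illustration (Hive does not promise any particular assignment when only SORT BY is used), the sample values are hypothetical, and `heapq.merge` stands in for the final merge.

```python
import heapq

# Hypothetical column values
rows = [42, 7, 19, 3, 88, 25, 61, 10, 5, 33]
num_reducers = 3  # plays the role of mapreduce.job.reduces


def sort_by(values, n):
    """SORT BY model: each reducer sorts only the rows it received."""
    buckets = [[] for _ in range(n)]
    for i, v in enumerate(values):
        buckets[i % n].append(v)  # assumed round-robin distribution
    return [sorted(b) for b in buckets]


partial = sort_by(rows, num_reducers)
print(partial)  # each list is sorted, but their value ranges overlap

# One extra merge of the already-sorted outputs yields the global order
globally_sorted = list(heapq.merge(*partial))
print(globally_sorted)
```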

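Grouped sorting with DISTRIBUTE BY and SORT BY: the DISTRIBUTE BY columns act as the key, and rows are hash-partitioned on that key across reducers, so all rows with the same key reach the same reducer; SORT BY then sorts the rows within each reducer.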

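If SORT BY and DISTRIBUTE BY use the same columns, they can be abbreviated as CLUSTER BY, which names the columns for both at once. CLUSTER BY sorts in ascending order only; ASC/DESC cannot be specified.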
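The Python model below sketches DISTRIBUTE BY, DISTRIBUTE BY ... SORT BY, and CLUSTER BY on hypothetical (userId, weiboId) rows. Hive hashes the DISTRIBUTE BY columns to choose a reducer; the byte-sum `stable_hash` here is an assumed stand-in, and all function names are hypothetical.

```python
from collections import defaultdict
from operator import itemgetter

# Hypothetical (userId, weiboId) rows
rows = [("u2", "w5"), ("u1", "w3"), ("u3", "w1"), ("u1", "w1"), ("u2", "w2")]
num_reducers = 2


def stable_hash(key: str) -> int:
    # Assumed stand-in for Hive's partition hash; Python's hash() of str varies per process
    return sum(key.encode())


def distribute_by(rows, key, n):
    """DISTRIBUTE BY model: rows with the same key always land on the same reducer."""
    reducers = defaultdict(list)
    for row in rows:
        reducers[stable_hash(key(row)) % n].append(row)
    return [reducers[i] for i in range(n)]


def distribute_and_sort(rows, dist_key, sort_key, n, descending=False):
    """DISTRIBUTE BY dist_key SORT BY sort_key: sorting happens inside each reducer only."""
    return [sorted(part, key=sort_key, reverse=descending)
            for part in distribute_by(rows, dist_key, n)]


def cluster_by(rows, key, n):
    """CLUSTER BY key behaves like DISTRIBUTE BY key SORT BY key, ascending only."""
    return distribute_and_sort(rows, key, key, n)


print(cluster_by(rows, itemgetter(0), num_reducers))
print(distribute_and_sort(rows, itemgetter(0), itemgetter(1), num_reducers, descending=True))
```

Because cluster_by has no `descending` parameter, the model mirrors the rule that CLUSTER BY cannot take ASC/DESC; a descending order needs the explicit DISTRIBUTE BY ... SORT BY ... DESC form.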