I. Overview

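The previous post, "Website Log Traffic Analysis System: Data Cleaning (Offline Analysis)", already cleans the data. However, the partition value used there (the date, reportTime) is hard-coded, and the HQL statements still have to be run by hand. Neither is acceptable in real development, so the problem to solve now is having a program run those HQL statements automatically for the offline analysis.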

II. Automation script

(1) Write the logdemo.hql script

    use logdb;

    alter table logdemo add partition (reportTime='${tday}') location '/logdemo/reportTime=${tday}';

    insert into dataclear partition(reportTime='${tday}')
    select split(url,'-')[2], urlname, ref, uagent, stat_uv,
           split(stat_ss,'_')[0], split(stat_ss,'_')[1], split(stat_ss,'_')[2], cip
    from logdemo
    where reportTime = '${tday}';

    create table if not exists tongji1_temp(reportTime string, k string, v string);

    insert into tongji1_temp
    select '${tday}', 'pv', pv_tab.pv
    from (select count(*) as pv from dataclear where reportTime='${tday}') as pv_tab;

    insert into tongji1_temp
    select '${tday}', 'uv', uv_tab.uv
    from (select count(distinct uvid) as uv from dataclear where reportTime='${tday}') as uv_tab;

    insert into tongji1_temp
    select '${tday}', 'vv', vv_tab.vv
    from (select count(distinct ssid) as vv from dataclear where reportTime='${tday}') as vv_tab;

    insert into tongji1_temp
    select '${tday}', 'br', br_tab.br
    from (select round(br_left_tab.br_count / br_right_tab.ss_count, 4) as br
          from (select count(*) as br_count
                from (select ssid from dataclear where reportTime='${tday}' group by ssid having count(*) = 1) as br_intab) br_left_tab,
               (select count(distinct ssid) as ss_count from dataclear where reportTime='${tday}') as br_right_tab) as br_tab;

    insert into tongji1_temp
    select '${tday}', 'newip', newip_tab.newip
    from (select count(distinct out_dc.cip) as newip
          from dataclear as out_dc
          where out_dc.reportTime='${tday}'
            and out_dc.cip not in (select in_dc.cip from dataclear as in_dc where datediff('${tday}',in_dc.reportTime)>0)) as newip_tab;

    insert into tongji1_temp
    select '${tday}', 'newcust', newcust_tab.newcust
    from (select count(distinct out_dc.uvid) as newcust
          from dataclear as out_dc
          where out_dc.reportTime='${tday}'
            and out_dc.uvid not in (select in_dc.uvid from dataclear as in_dc where datediff('${tday}',in_dc.reportTime)>0)) as newcust_tab;

    insert into tongji1_temp
    select '${tday}', 'avgtime', avgtime_tab.avgtime
    from (select avg(at_tab.usetime) as avgtime
          from (select max(sstime) - min(sstime) as usetime from dataclear where reportTime='${tday}' group by ssid) as at_tab) as avgtime_tab;

    insert into tongji1_temp
    select '${tday}', 'avgdeep', avgdeep_tab.avgdeep
    from (select avg(ad_tab.deep) as avgdeep
          from (select count(distinct url) as deep from dataclear where reportTime='${tday}' group by ssid) as ad_tab) as avgdeep_tab;

    insert into tongji1
    select '${tday}', pv_tab.pv, uv_tab.uv, vv_tab.vv, newip_tab.newip, newcust_tab.newcust, avgtime_tab.avgtime, avgdeep_tab.avgdeep
    from (select v as pv from tongji1_temp where reportTime='${tday}' and k='pv') as pv_tab,
         (select v as uv from tongji1_temp where reportTime='${tday}' and k='uv') as uv_tab,
         (select v as vv from tongji1_temp where reportTime='${tday}' and k='vv') as vv_tab,
         (select v as newip from tongji1_temp where reportTime='${tday}' and k='newip') as newip_tab,
         (select v as newcust from tongji1_temp where reportTime='${tday}' and k='newcust') as newcust_tab,
         (select v as avgtime from tongji1_temp where reportTime='${tday}' and k='avgtime') as avgtime_tab,
         (select v as avgdeep from tongji1_temp where reportTime='${tday}' and k='avgdeep') as avgdeep_tab;

Note: do not put Chinese comments in the script.

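(2) Go to Hive's bin directory and run the script. The -d option defines a variable and -f names the script file. (You may notice that the date is still typed by hand here; that is addressed in the next step.)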

[root@hadoopalone bin]# ./hive -d tday=2019-09-07 -f /home/software/logdemo.hql

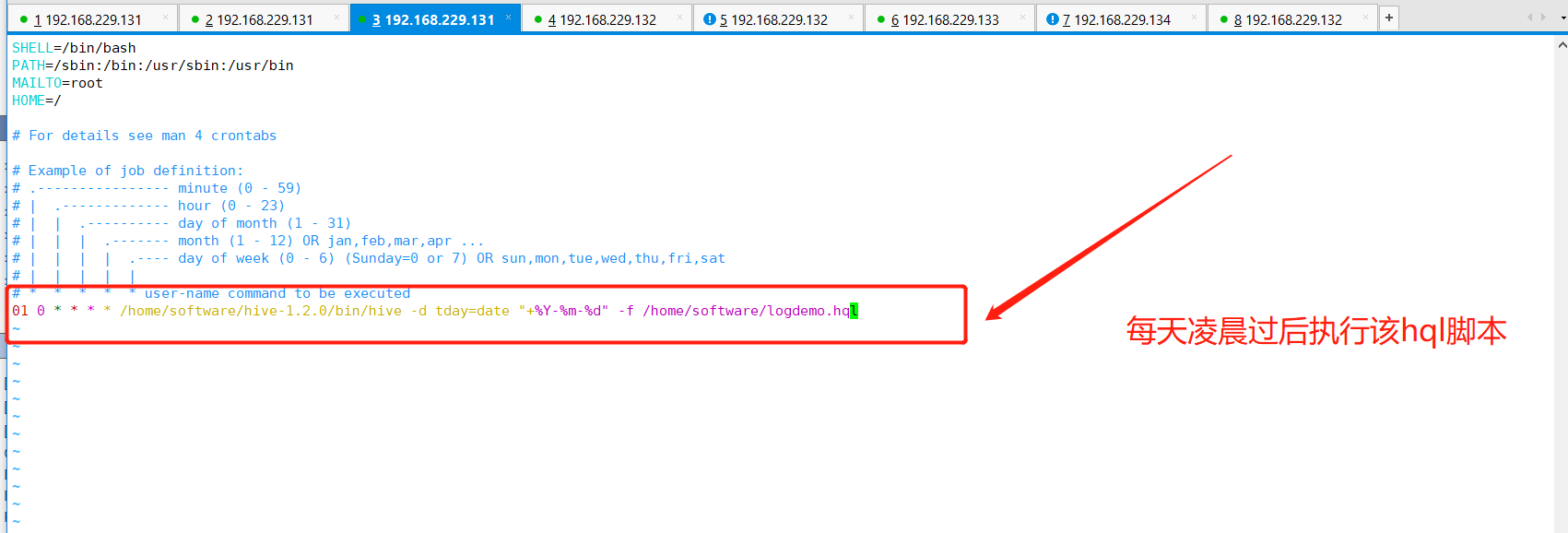
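To check what the statements look like once the variable has been filled in, the substitution can be approximated outside Hive. The sketch below is only an approximation using sed, not Hive's own substitution mechanism, and it handles just the single variable tday; the function name preview_hql is hypothetical.

```shell
#!/bin/sh
# preview_hql is a hypothetical helper, not part of Hive.
# It reads HQL text on standard input and replaces every literal ${tday}
# with the date passed as the first argument (YYYY-MM-DD).
preview_hql() {
  sed "s/\${tday}/$1/g"
}
```

For example, `preview_hql 2019-09-07 < /home/software/logdemo.hql` prints the script with the date filled in, without running anything against Hive.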

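(3) Set up a Linux scheduled task that runs the script every day shortly after midnight, with the date filled in by the date command (./hive -d tday=`date "+%Y-%m-%d"` -f /home/software/logdemo.hql)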
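One way to keep the date handling in one place is a small wrapper that fills in tday itself. The following is a minimal sketch for a POSIX shell; the function name run_logdemo and the HIVE_BIN placeholder path are assumptions, since the post only shows hive being started from inside its bin directory.

```shell
#!/bin/sh
# Hypothetical wrapper around the hive call shown above.
# HIVE_BIN is a placeholder; point it at the real hive executable.
HIVE_BIN=${HIVE_BIN:-/path/to/hive/bin/hive}
HQL_FILE=${HQL_FILE:-/home/software/logdemo.hql}

run_logdemo() {
  # Use the date given as the first argument, or default to today.
  tday=${1:-$(date "+%Y-%m-%d")}

  # Reject anything that is not shaped like YYYY-MM-DD before calling Hive.
  case $tday in
    [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
    *) echo "invalid date: $tday (expected YYYY-MM-DD)" >&2; return 1 ;;
  esac

  "$HIVE_BIN" -d "tday=$tday" -f "$HQL_FILE"
}
```

Calling run_logdemo with no argument uses the current date, while `run_logdemo 2019-09-07` reprocesses a specific day.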

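1. Scheduled tasks can be configured in the Linux configuration file /etc/crontab. Each job is one line made up of time fields, a user name, and the command to run.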
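For reference, the usual layout of a job line in /etc/crontab is sketched below. The sample job on the last line is only an illustration, using the /home/test.sh path from the examples in this post.

```text
# minute (0-59)
# |  hour (0-23)
# |  |  day of month (1-31)
# |  |  |  month (1-12)
# |  |  |  |  day of week (0-6, Sunday = 0)
# |  |  |  |  |  user  command
  0  0  *  *  *  root  /home/test.sh
```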

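The time fields accept the following special characters:

① Asterisk (*): means "every". For example, an asterisk in the month field runs the command every month.

② Comma (,): separates a list of values, for example "1,3,5,7,9".

③ Hyphen (-): denotes a range, for example "2-6" means "2,3,4,5,6".

④ Slash (/): specifies a step. For example, "0-23/2" in the hour field means every two hours. The slash can also be combined with the asterisk: */10 in the minute field means every ten minutes.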
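The four characters above can be illustrated by expanding a single field into the values it matches. This is a sketch for a POSIX shell, not how cron itself is implemented; expand_field is a hypothetical helper, it uses global variables for brevity, and it expects plain decimal values without leading zeros.

```shell
#!/bin/sh
# expand_field FIELD MIN MAX
# Prints the values matched by one crontab time field, separated by spaces.
expand_field() {
  field=$1 min=$2 max=$3 out=

  # Comma: split the field into alternatives. Globbing is switched off
  # while splitting so that a literal "*" is not expanded to file names.
  set -f; old_ifs=$IFS; IFS=,
  set -- $field
  IFS=$old_ifs; set +f

  for part in "$@"; do
    range=$part step=1
    case $part in
      */*) range=${part%%/*}; step=${part#*/} ;;   # slash: step interval
    esac
    [ "$step" -ge 1 ] || return 1                  # guard against a zero step
    case $range in
      '*') lo=$min hi=$max ;;                      # asterisk: every value
      *-*) lo=${range%-*} hi=${range#*-} ;;        # hyphen: inclusive range
      *)   lo=$range hi=$range ;;                  # single value
    esac
    v=$lo
    while [ "$v" -le "$hi" ]; do
      out="$out${out:+ }$v"
      v=$((v + step))
    done
  done
  echo "$out"
}
```

For instance, `expand_field '0-23/2' 0 23` lists the even hours from 0 to 22, and `expand_field '*/10' 0 59` lists 0, 10, 20, 30, 40, and 50.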

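Examples (written in the per-user form used by crontab -e; a line in /etc/crontab additionally needs a user name such as root before the command):

    # Every day at 03:30 and 12:30: run test.sh
    30 3,12 * * * /home/test.sh
    # Every day at minute 30 of every sixth hour (00:30, 06:30, 12:30, 18:30): run test.sh
    30 */6 * * * /home/test.sh
    # Every day at minute 30 of every second hour from 08:00 through 18:00 (08:30, 10:30, ..., 18:30): restart the network service
    30 8-18/2 * * * /etc/init.d/network restart
    # Every day at 21:30: restart the network service
    30 21 * * * /etc/init.d/network restart
    # On the 1st, 10th, and 22nd of every month at 04:45: restart the network service
    45 4 1,10,22 * * /etc/init.d/network restart
    # Every Saturday and Sunday in August at 01:10: restart the network service
    10 1 * 8 6,0 /etc/init.d/network restart
    # Every day, every hour on the hour: restart the network service
    00 */1 * * * /etc/init.d/network restart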

2. Commands for managing the crond service

    service crond start      # start the service
    service crond stop       # stop the service
    service crond restart    # restart the service
    service crond reload     # reload the configuration

3. Configuration for this project
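A minimal sketch of what such an entry could look like in /etc/crontab, assuming the job runs as root one minute after midnight. The time, the user, and the /path/to/hive/bin/hive placeholder are assumptions rather than values from the original setup. Percent signs are special in crontab lines, so each one in the date format is written as \%.

```text
# /etc/crontab (sketch): run logdemo.hql for the current date at 00:01 every day
1 0 * * * root /path/to/hive/bin/hive -d tday=`date "+\%Y-\%m-\%d"` -f /home/software/logdemo.hql
```

cron starts jobs with a minimal environment, so variables that Hive relies on (for example JAVA_HOME or HADOOP_HOME) may need to be set in the crontab file or in a wrapper script.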

4. Start the crond service so that the scheduled task takes effect: service crond start

III. Summary

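At this point the HQL script runs automatically. The next step is to visualize the results produced by the data cleaning; see "Website Log Traffic Analysis System: Data Visualization".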