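I. Concepts

The name Kubernetes comes from Greek and means "helmsman" or "pilot". K8s is an abbreviation in which the eight letters "ubernete" are replaced by "8".

Kubernetes is a container orchestration engine open-sourced by Google. It supports automated deployment, large-scale scaling, and management of containerized applications. When an application is deployed in production, several instances of it are usually run so that requests can be load-balanced across them.

In Kubernetes, we can create multiple containers, each running one instance of the application, and then rely on the built-in load-balancing strategy to manage, discover, and access this group of instances, without operators having to configure and handle these details by hand.

Features:

- Portable: supports public, private, hybrid, and multi-cloud environments

- Extensible: modular, pluggable, hookable, composable

- Automated: automatic deployment, automatic restart, automatic replication, automatic scaling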

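II. Building the K8s management cluster

1. Clean up the lab environment (same steps on server1, server2, and server3)

- Remove the services and stack left over from the previously deployed swarm cluster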

[root@server1 ~]# docker service ls

[root@server1 ~]# docker service rm web

[root@server1 ~]# docker stack rm portainer

- Clean up volumes

[root@server1 ~]# docker volume ls

[root@server1 ~]# docker volume prune

- Remove containers

[root@server1 ~]# docker rm -f `docker ps -aq`

- Remove networks

[root@server1 ~]# docker network ls

[root@server1 ~]# docker network rm we_net1

- Make the node leave the swarm cluster

[root@server1 ~]# docker swarm leave -f

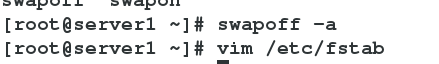

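- Disable the swap partition (turn it off now, then comment out the swap entry in /etc/fstab so it stays off after a reboot)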

[root@server1 ~]# swapoff -a

[root@server1 ~]# vim /etc/fstab

[root@server2 ~]# swapoff -a

[root@server2 ~]# vim /etc/fstab

[root@server3 ~]# swapoff -a

[root@server3 ~]# vim /etc/fstab
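`swapoff -a` only disables swap until the next reboot; the `/etc/fstab` edit is what makes the change permanent. A minimal sketch of doing that edit non-interactively, assuming the swap entry is an uncommented line whose third field is `swap`. The function name `comment_out_swap` and the sample file are illustrative and not part of the original steps; the demonstration runs on a temporary file rather than on the real `/etc/fstab`.

```shell
#!/bin/sh
# Comment out every active swap entry in an fstab-format file.
# Usage: comment_out_swap /path/to/fstab
comment_out_swap() {
    file="$1"
    tmp="$(mktemp)"
    # Prefix "#" to uncommented lines whose filesystem type (field 3) is swap.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demonstration on a sample file (hypothetical device names).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
/dev/mapper/rhel-root   /       xfs     defaults        0 0
/dev/mapper/rhel-swap   swap    swap    defaults        0 0
EOF
comment_out_swap "$sample"
cat "$sample"
```

On a real node the same function would be called as root with `/etc/fstab` as its argument, ideally after taking a backup copy of the file.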

2. Install the packages on server1, server2, and server3

[root@server1 ~]# ls

cri-tools-1.13.0-0.x86_64.rpm kubectl-1.15.0-0.x86_64.rpm kubernetes-cni-0.7.5-0.x86_64.rpm

kubeadm-1.15.0-0.x86_64.rpm kubelet-1.15.0-0.x86_64.rpm

[root@server1 ~]# yum install * -y

[root@server1 ~]# scp * server2:

[root@server1 ~]# scp * server3:

[root@server2 ~]# ls

cri-tools-1.13.0-0.x86_64.rpm kubectl-1.15.0-0.x86_64.rpm kubernetes-cni-0.7.5-0.x86_64.rpm

kubeadm-1.15.0-0.x86_64.rpm kubelet-1.15.0-0.x86_64.rpm

[root@server2 ~]# yum install * -y

[root@server3 ~]# ls

cri-tools-1.13.0-0.x86_64.rpm kubectl-1.15.0-0.x86_64.rpm kubernetes-cni-0.7.5-0.x86_64.rpm

kubeadm-1.15.0-0.x86_64.rpm kubelet-1.15.0-0.x86_64.rpm

[root@server3 ~]# yum install * -y

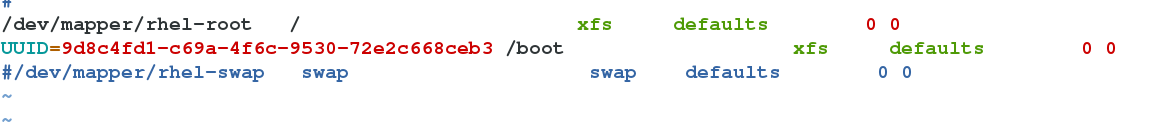

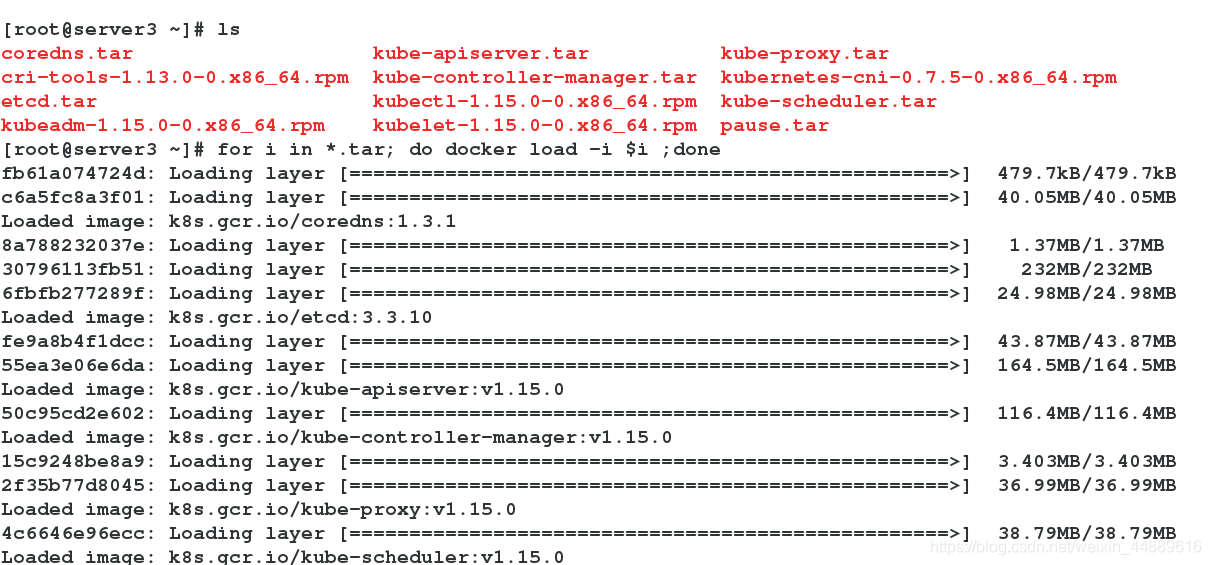

3. Load the required images on all nodes

[root@server1 ~]# for i in *.tar; do docker load -i $i ;done

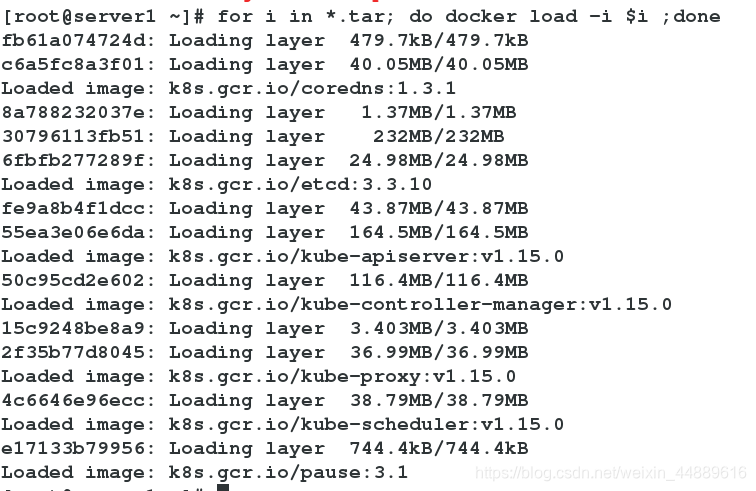

4. Copy the images to server2 and server3 and load them there

[root@server1 ~]# scp *.tar server2:

[root@server1 ~]# scp *.tar server3:

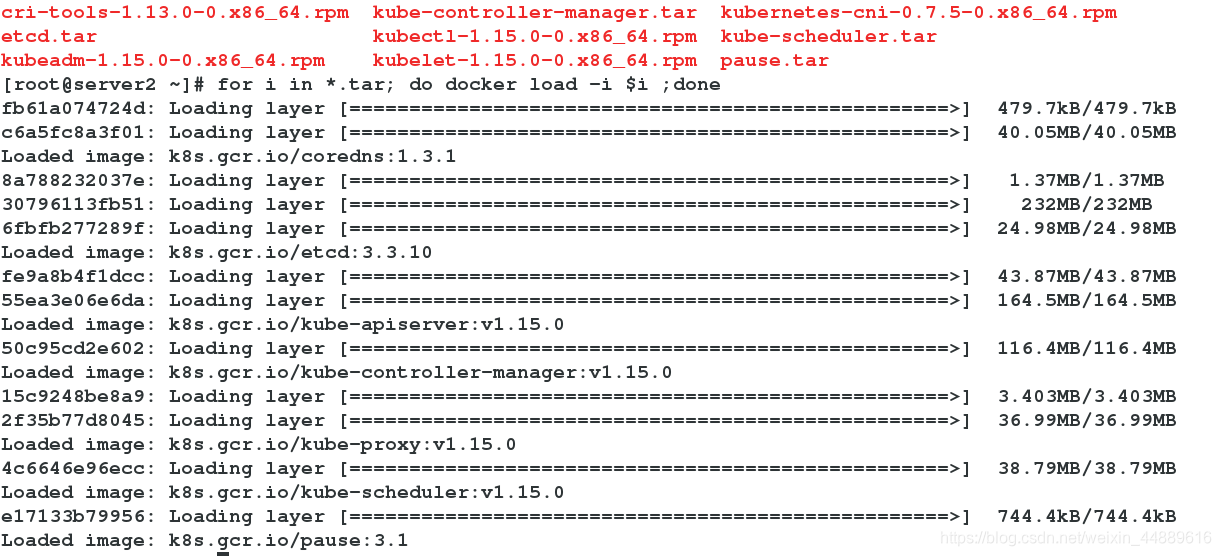

[root@server2 ~]# for i in *.tar; do docker load -i $i ;done

[root@server3 ~]# for i in *.tar; do docker load -i $i ;done

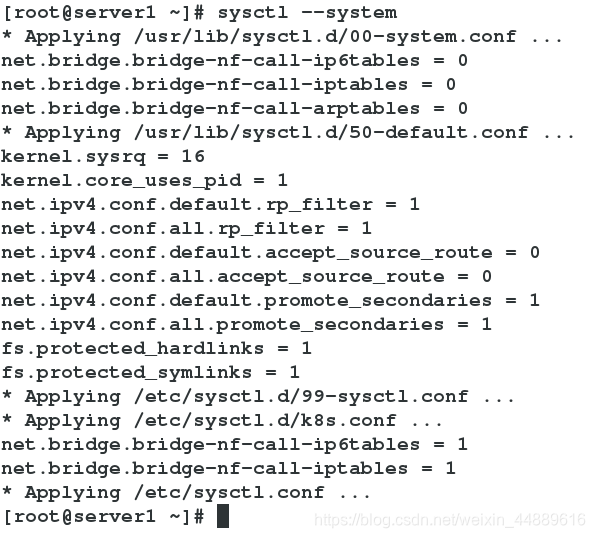

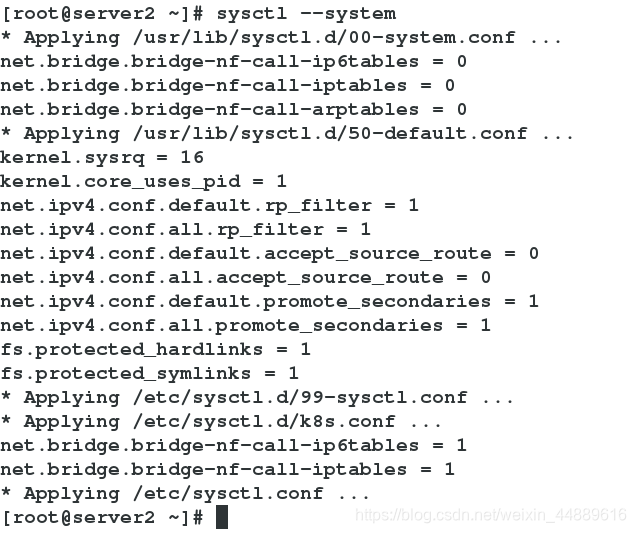

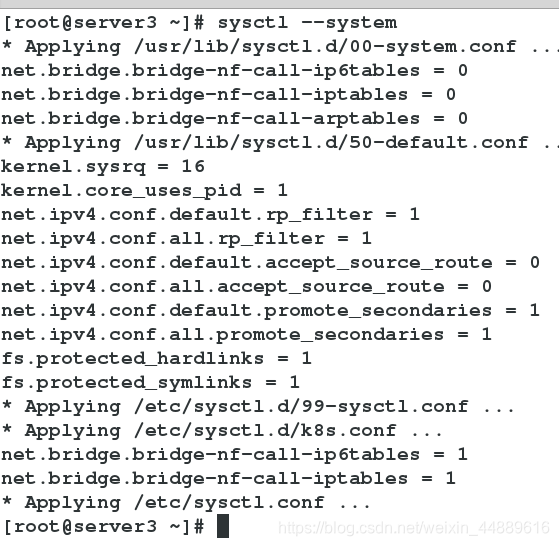

5. On all nodes, write the following settings to /etc/sysctl.d/k8s.conf and verify them

[root@server1 ~]# vim /etc/sysctl.d/k8s.conf

[root@server1 ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@server1 ~]# scp /etc/sysctl.d/k8s.conf server2:/etc/sysctl.d/

k8s.conf 100% 79 0.1KB/s 00:00

[root@server1 ~]# scp /etc/sysctl.d/k8s.conf server3:/etc/sysctl.d/

k8s.conf

[root@server1 ~]# sysctl --system

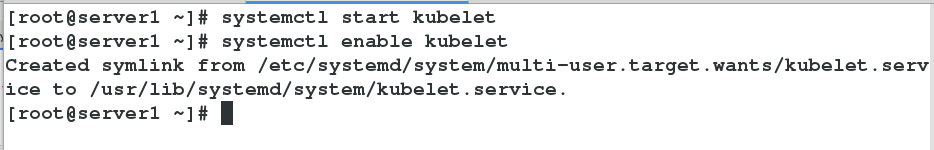

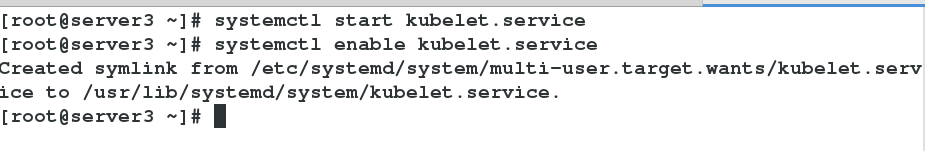
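The two `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, so on a freshly booted node `sysctl --system` may fail to apply them. A sketch that writes the same file non-interactively; `CONF_DIR` is a hypothetical variable that defaults to a scratch directory here so the snippet is safe to try, and would be `/etc/sysctl.d` on a real node.

```shell
#!/bin/sh
# Target directory: a scratch directory by default (assumption for safe testing).
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# Same two settings as in the k8s.conf shown above.
cat > "$CONF_DIR/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

cat "$CONF_DIR/k8s.conf"

# On a real node, as root (not executed here):
#   modprobe br_netfilter
#   sysctl --system
```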

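6. Start and enable the kubelet service on server1, server2, and server3 (its status still shows it as not running at this point; it will not stay up until kubeadm init or kubeadm join has configured the node)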

[root@server1 ~]# systemctl start kubelet

[root@server1 ~]# systemctl enable kubelet

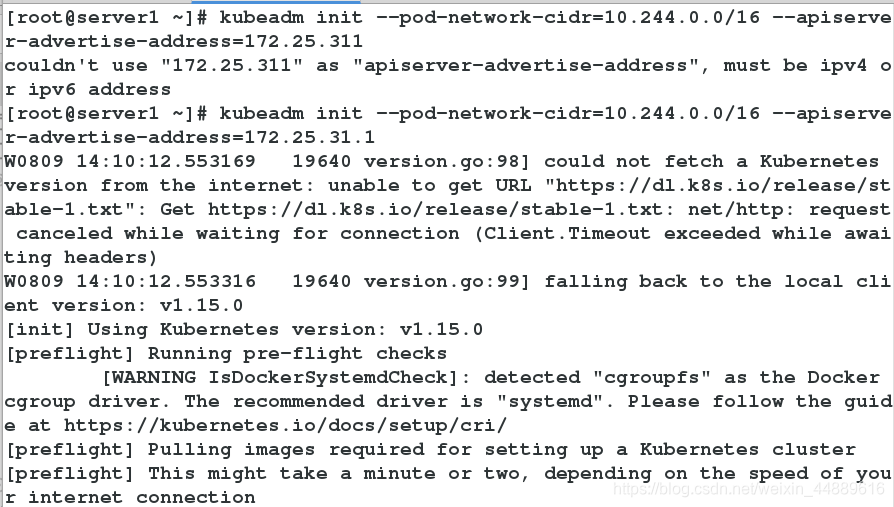

6. Initialize the master node

[root@server1 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=172.25.31.1

Note: if the first cluster initialization fails, run "kubeadm reset" to reset the node, then run the initialization command again.

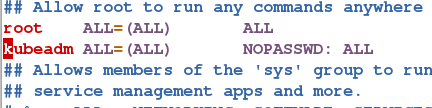

7. Create the user kubeadm and grant it full sudo privileges in /etc/sudoers

[root@server1 k8s]# useradd kubeadm

[root@server1 k8s]# vim /etc/sudoers

8. Switch to the kubeadm user, create the .kube directory, and copy the admin configuration into it

[root@server1 ~]# su - kubeadm

[kubeadm@server1 ~]$ mkdir -p $HOME/.kube

[kubeadm@server1 ~]$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[kubeadm@server1 ~]$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

[kubeadm@server1 ~]$ exit

logout

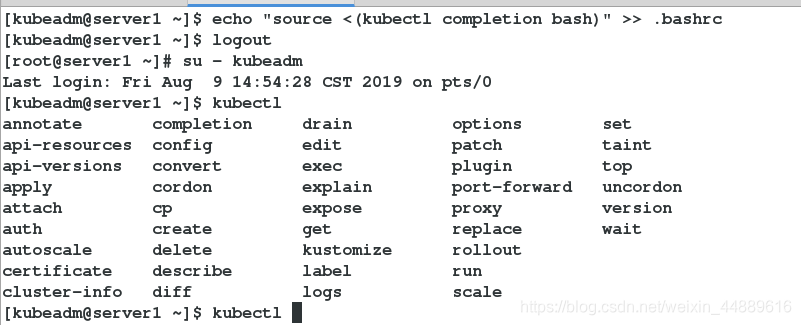

9. Configure kubectl command completion on server1:

[kubeadm@server1 ~]$ echo "source <(kubectl completion bash)" >> .bashrc

[kubeadm@server1 ~]$ logout

[root@server1 ~]# su - kubeadm

10. On server1, copy kube-flannel.yml to /home/kubeadm. The file originally sits under /root, which the unprivileged kubeadm user cannot access

[root@server1 ~]# cp kube-flannel.yml /home/kubeadm/

[root@server1 ~]# su - kubeadm

Last login: Fri Aug 9 14:25:33 CST 2019 on pts/0

[kubeadm@server1 ~]$ ls

kube-flannel.yml

[kubeadm@server1 ~]$ kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created
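The `--pod-network-cidr=10.244.0.0/16` passed to `kubeadm init` has to match the `Network` value in the `net-conf.json` section of kube-flannel.yml (10.244.0.0/16 is flannel's default). A sketch that extracts that value from a manifest so the two can be compared; the function name `flannel_network` is illustrative, and the sample fragment below stands in for the real file.

```shell
#!/bin/sh
# Print the first "Network" value found in a flannel manifest.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Sample fragment standing in for kube-flannel.yml (assumption: default layout).
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
EOF

# The CIDR given to kubeadm init in this article.
POD_CIDR="10.244.0.0/16"
if [ "$(flannel_network "$manifest")" = "$POD_CIDR" ]; then
    echo "pod CIDR matches flannel Network"
else
    echo "pod CIDR differs from flannel Network" >&2
fi
```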

11. Load the flannel image on all nodes

[root@server1 ~]# docker load -i flannel.tar

cd7100a72410: Loading layer 4.403MB/4.403MB

3b6c03b8ad66: Loading layer 4.385MB/4.385MB

93b0fa7f0802: Loading layer 158.2kB/158.2kB

4165b2148f36: Loading layer 36.33MB/36.33MB

b883fd48bb96: Loading layer 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.10.0-amd64

[root@server1 ~]# scp flannel.tar server2:

flannel.tar 100% 43MB 43.2MB/s 00:00

[root@server1 ~]# scp flannel.tar server3:

flannel.tar 100% 43MB 43.2MB/s 00:0

server2:

[root@server2 ~]# docker load -i flannel.tar

cd7100a72410: Loading layer 4.403MB/4.403MB

3b6c03b8ad66: Loading layer 4.385MB/4.385MB

93b0fa7f0802: Loading layer 158.2kB/158.2kB

4165b2148f36: Loading layer 36.33MB/36.33MB

b883fd48bb96: Loading layer 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.10.0-amd64

server3:

[root@server3 ~]# docker load -i flannel.tar

cd7100a72410: Loading layer 4.403MB/4.403MB

3b6c03b8ad66: Loading layer 4.385MB/4.385MB

93b0fa7f0802: Loading layer 158.2kB/158.2kB

4165b2148f36: Loading layer 36.33MB/36.33MB

b883fd48bb96: Loading layer 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.10.0-amd64

11. Load the ipvs kernel modules on server2 and server3 (effective only until the next reboot)

[root@server2 ~]# modprobe ip_vs_wrr

[root@server2 ~]# modprobe ip_vs_rr

[root@server2 ~]# modprobe ip_vs_sh

[root@server2 ~]# modprobe ip_vs

[root@server3 ~]# modprobe ip_vs_wrr

[root@server3 ~]# modprobe ip_vs_rr

[root@server3 ~]# modprobe ip_vs_sh

[root@server3 ~]# modprobe ip_vs

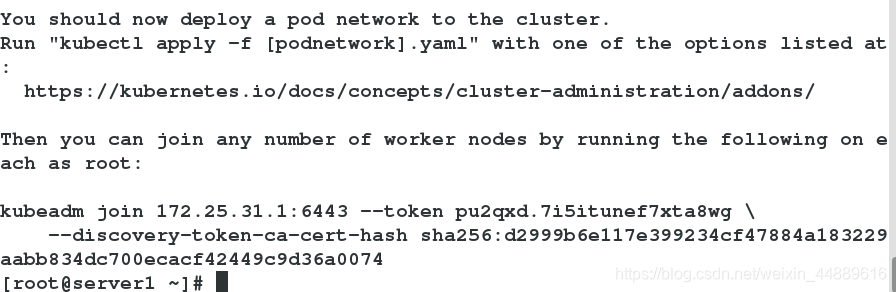

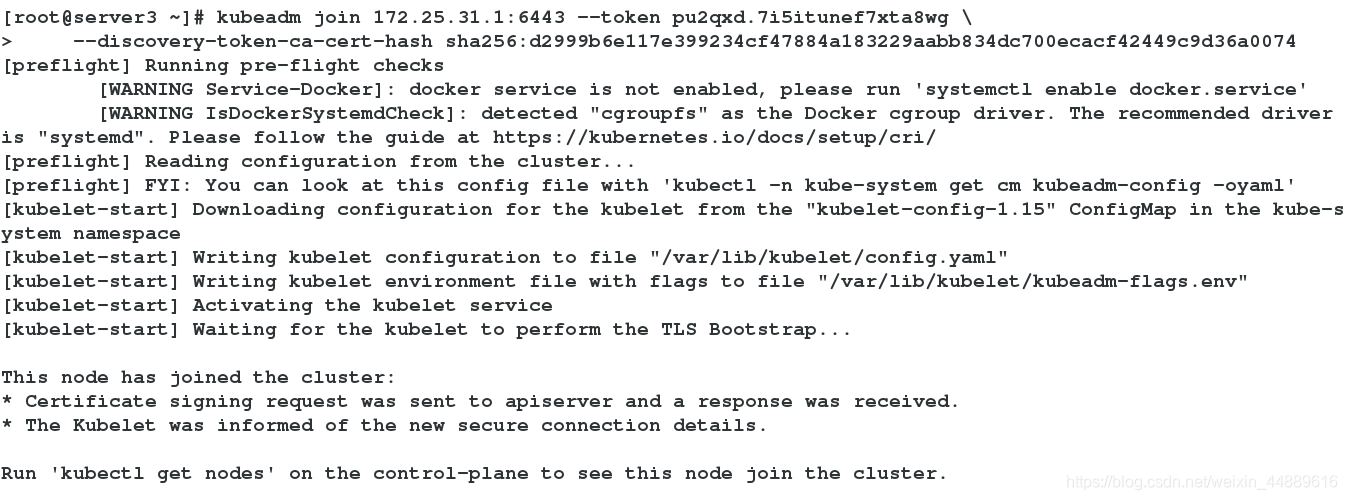

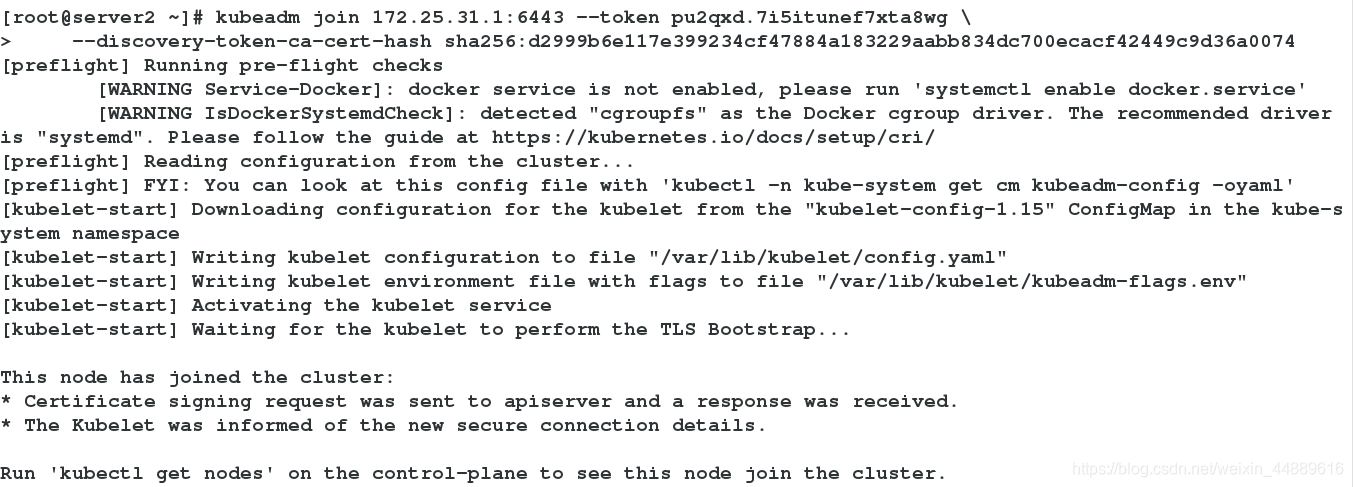
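`modprobe` loads the modules only for the current boot. One common way to have them loaded again at boot on systemd-based systems is a file under `/etc/modules-load.d/` with one module name per line. A sketch under that assumption; `MODULES_DIR` is a hypothetical variable pointing at a scratch directory so the snippet can be tried without root, and the file name `ipvs.conf` is an arbitrary choice.

```shell
#!/bin/sh
# Target directory: a scratch directory by default (assumption for safe testing);
# on a real node this would be /etc/modules-load.d.
MODULES_DIR="${MODULES_DIR:-$(mktemp -d)}"

# The same four modules loaded with modprobe above.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh"

# Write one module name per line.
: > "$MODULES_DIR/ipvs.conf"
for mod in $IPVS_MODULES; do
    echo "$mod" >> "$MODULES_DIR/ipvs.conf"
done
cat "$MODULES_DIR/ipvs.conf"

# On a real node, as root, the modules would also be loaded immediately:
#   for mod in $IPVS_MODULES; do modprobe "$mod"; done
#   lsmod | grep ip_vs
```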

12. Join server2 and server3 to the cluster

[root@server3 ~]# kubeadm join 172.25.31.1:6443 --token pu2qxd.7i5itunef7xta8wg \

> --discovery-token-ca-cert-hash sha256:d2999b6e117e399234cf47884a183229aabb834dc700ecacf42449c9d36a0074

[root@server2 ~]# kubeadm join 172.25.31.1:6443 --token pu2qxd.7i5itunef7xta8wg \

> --discovery-token-ca-cert-hash sha256:d2999b6e117e399234cf47884a183229aabb834dc700ecacf42449c9d36a0074

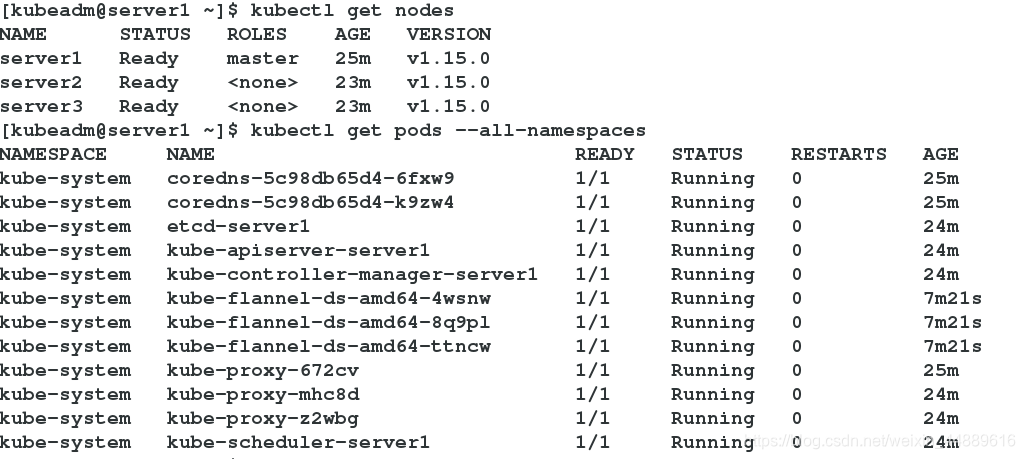
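The token and CA hash in the join command come from the output of `kubeadm init`. A bootstrap token has the form of six characters, a dot, and sixteen characters (lowercase letters and digits), and the hash is `sha256:` followed by 64 hexadecimal digits. A small sketch that checks both values before they are pasted onto each worker; the function name `valid_join_args` is illustrative, and the sample values are the ones shown above.

```shell
#!/bin/sh
# Return 0 if the token and CA cert hash look well-formed, 1 otherwise.
valid_join_args() {
    token="$1"
    hash="$2"
    echo "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
    echo "$hash"  | grep -Eq '^sha256:[0-9a-f]{64}$' || return 1
}

TOKEN="pu2qxd.7i5itunef7xta8wg"
CA_HASH="sha256:d2999b6e117e399234cf47884a183229aabb834dc700ecacf42449c9d36a0074"

# Only print the join command; running it is left to the operator.
if valid_join_args "$TOKEN" "$CA_HASH"; then
    echo "kubeadm join 172.25.31.1:6443 --token $TOKEN --discovery-token-ca-cert-hash $CA_HASH"
else
    echo "token or hash is malformed" >&2
fi
```

Bootstrap tokens expire after 24 hours by default. If the token is no longer valid, running `kubeadm token create --print-join-command` on the master prints a fresh join command.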

13. Check that every node is in the Ready state and that the pods in all namespaces are Running

[kubeadm@server1 ~]$ kubectl get nodes

[kubeadm@server1 ~]$ kubectl get pods --all-namespaces

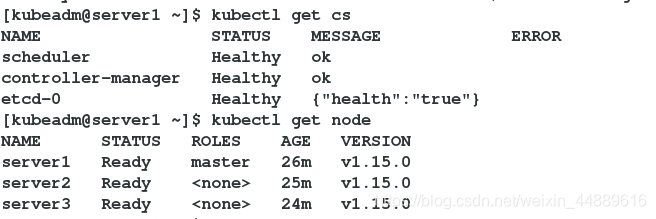

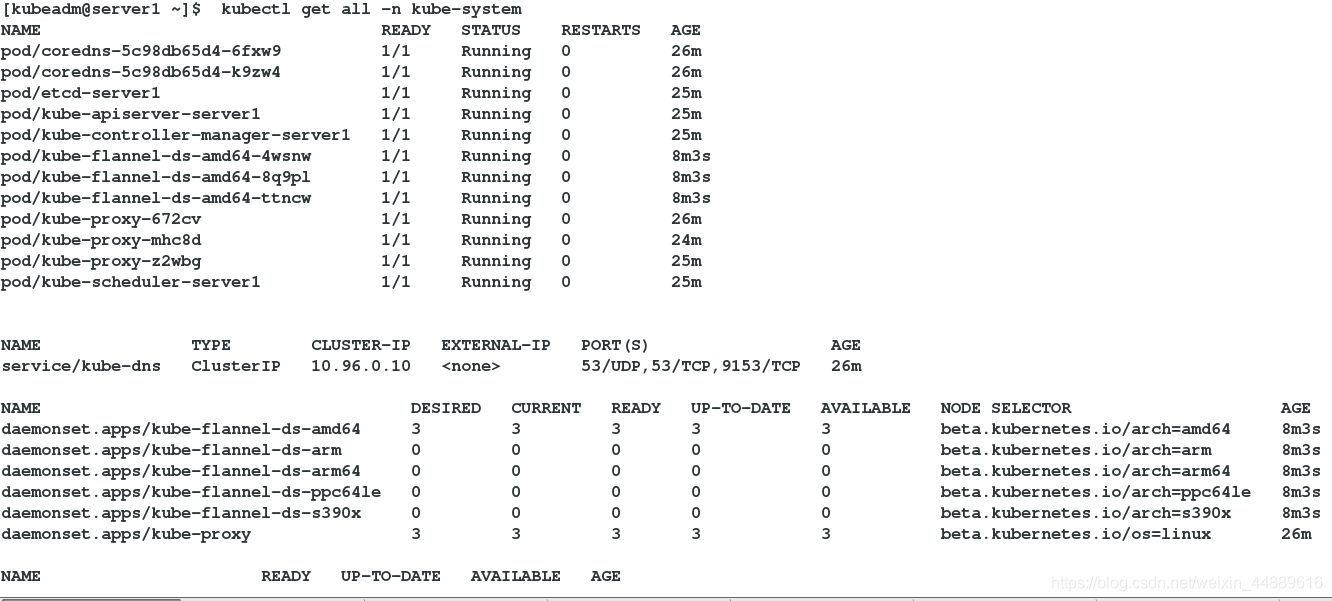

14. Check the status of the master

[kubeadm@server1 ~]$ kubectl get cs

[kubeadm@server1 ~]$ kubectl get node

[kubeadm@server1 ~]$ kubectl get all -n kube-system

Note:

1. The master node requires at least 2 CPUs

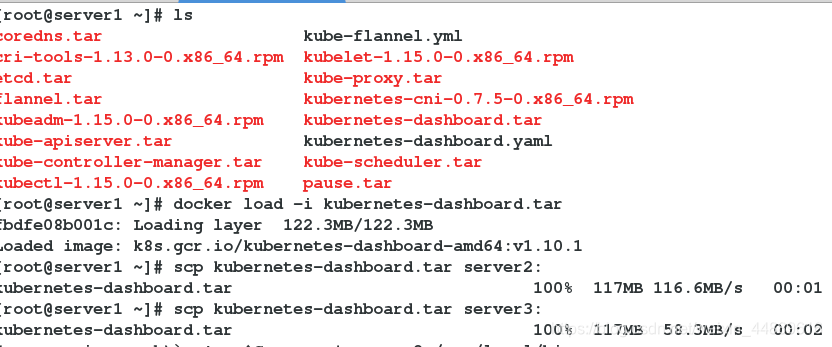

III. Deploying the UI (Kubernetes Dashboard)

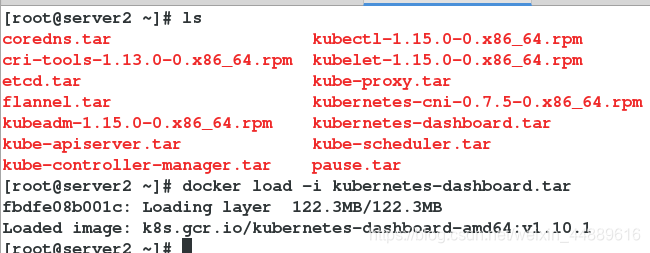

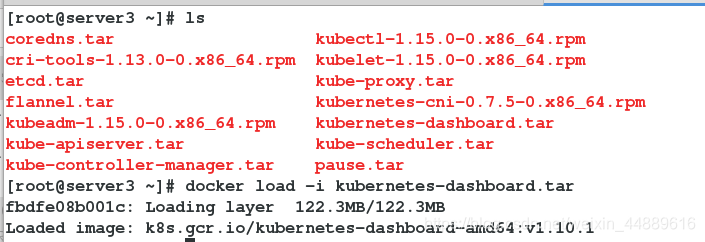

1. Load the kubernetes-dashboard.tar image on all nodes

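server1:

[root@server1 ~]# docker load -i kubernetes-dashboard.tar

server2:

[root@server2 ~]# docker load -i kubernetes-dashboard.tar

server3:

[root@server3 ~]# docker load -i kubernetes-dashboard.tar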

2. Obtain kubernetes-dashboard.yaml (or write it yourself) and copy it to /home/kubeadm/

[root@server1 ~]# cp kubernetes-dashboard.yaml /home/kubeadm/

[root@server1 ~]# cd /home/kubeadm/

[root@server1 kubeadm]# ls

kube-flannel.yml kubernetes-dashboard.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

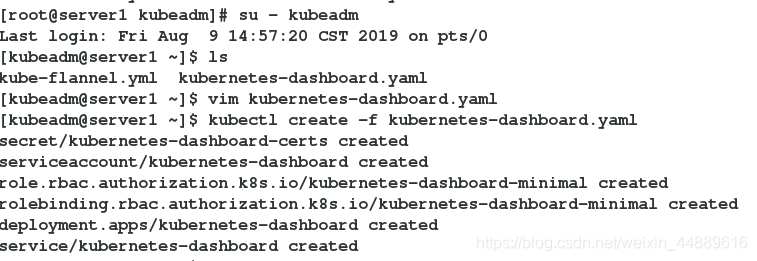

3. Switch to the kubeadm user and create the Dashboard resources

[root@server1 kubeadm]# su - kubeadm

Last login: Fri Aug 9 14:57:20 CST 2019 on pts/0

[kubeadm@server1 ~]$ ls

kube-flannel.yml kubernetes-dashboard.yaml

[kubeadm@server1 ~]$ vim kubernetes-dashboard.yaml

[kubeadm@server1 ~]$ kubectl create -f kubernetes-dashboard.yaml

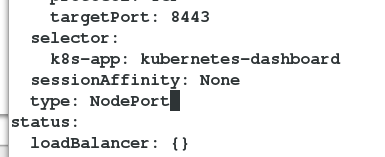

4. Change the Service type

[kubeadm@server1 ~]$ kubectl edit service kubernetes-dashboard -n kube-system

service/kubernetes-dashboard edited

## In the editor, set the Service type to NodePort (type: NodePort)

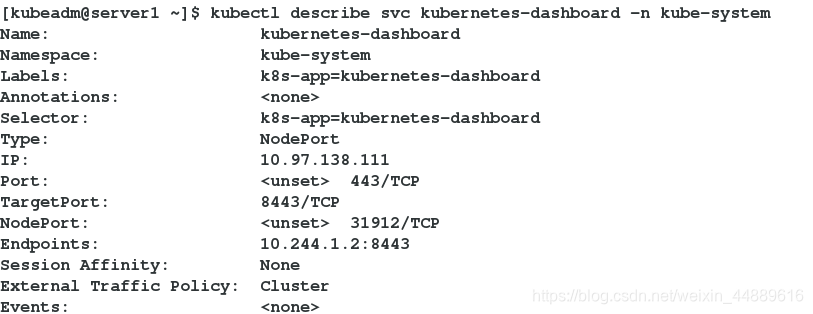

5. Get the NodePort

[kubeadm@server1 ~]$ kubectl describe svc kubernetes-dashboard -n kube-system

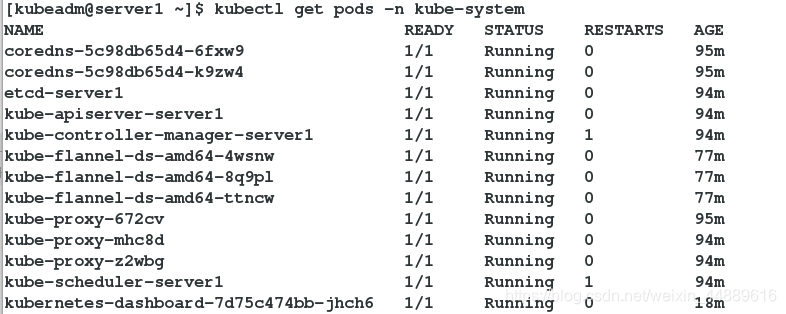

[kubeadm@server1 ~]$ kubectl get pods -n kube-system
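The NodePort can also be pulled out of the `kubectl describe svc` output with a short filter instead of being read off by eye. A sketch, assuming the output contains a line of the form `NodePort: <unset> 31912/TCP`; the function name `nodeport_from_describe` and the sample text are illustrative (31912 is the port used in the browser test at the end of this article).

```shell
#!/bin/sh
# Read `kubectl describe svc ...` output on stdin and print the node port number.
nodeport_from_describe() {
    awk '$1 == "NodePort:" { split($NF, parts, "/"); print parts[1] }'
}

# Sample input standing in for real output (assumption about the layout).
sample_output='Type:                     NodePort
Port:                     <unset>  443/TCP
TargetPort:               8443/TCP
NodePort:                 <unset>  31912/TCP'

echo "$sample_output" | nodeport_from_describe

# Against a live cluster (not executed here):
#   kubectl describe svc kubernetes-dashboard -n kube-system | nodeport_from_describe
```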

6. Create a new user for managing the UI

[kubeadm@server1 ~]$ vim dashboard-admin.yaml

[kubeadm@server1 ~]$ kubectl create -f dashboard-admin.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

Contents of dashboard-admin.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

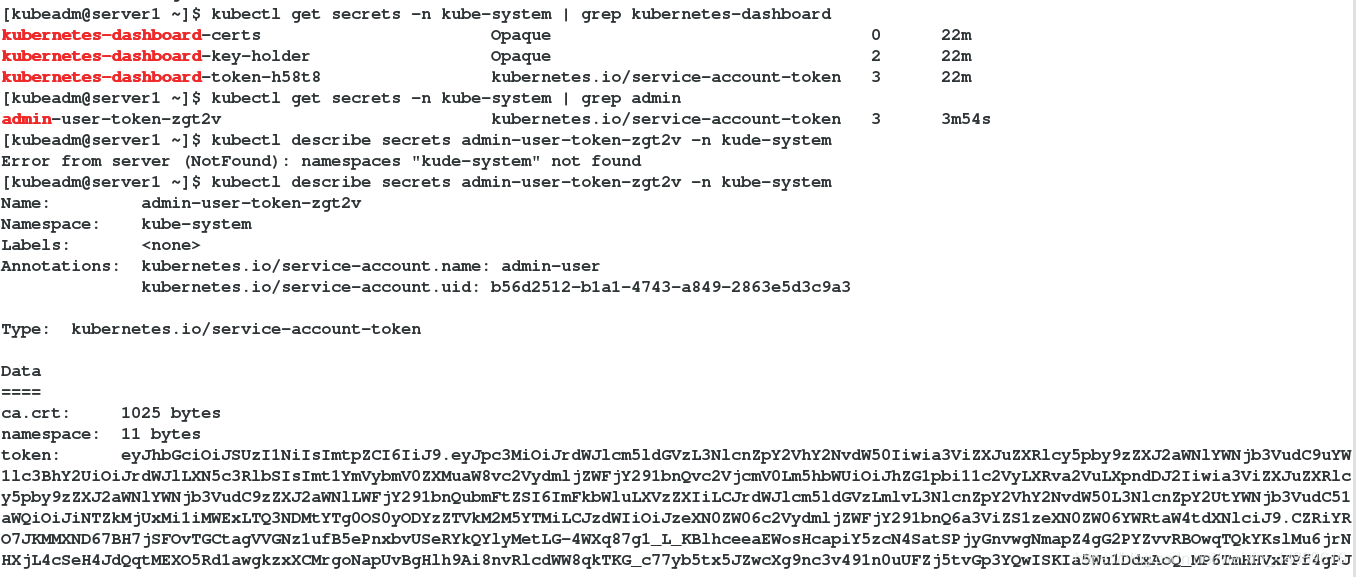

7. Get the token

[kubeadm@server1 ~]$ kubectl get secrets -n kube-system | grep admin

admin-user-token-zgt2v kubernetes.io/service-account-token 3 3m54s

[kubeadm@server1 ~]$ kubectl describe secrets admin-user-token-zgt2v -n kube-system

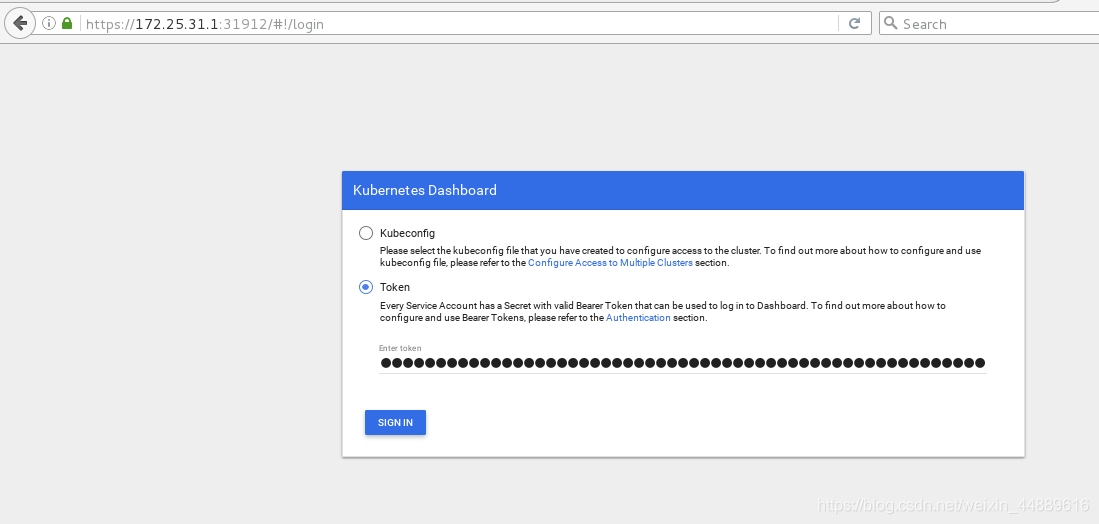

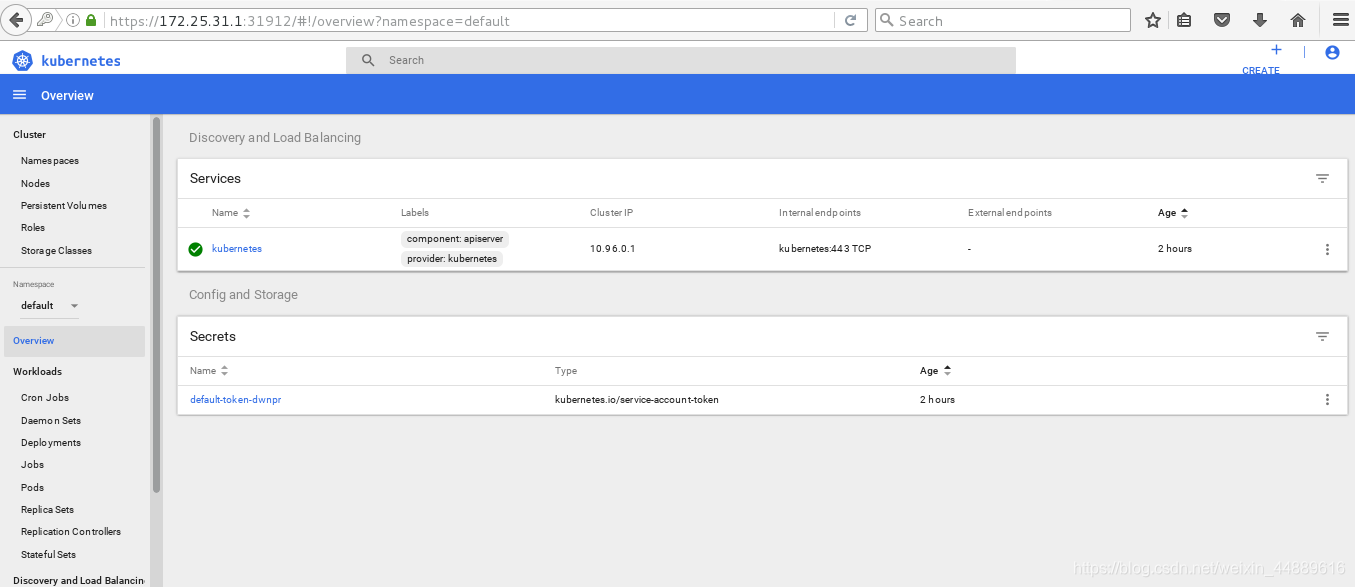
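The two commands above can be chained so that the randomly generated secret suffix does not have to be copied by hand. A sketch that picks the secret name out of the `kubectl get secrets` listing; `admin_secret_name` is an illustrative helper, and the sample line is the one shown above.

```shell
#!/bin/sh
# Read `kubectl get secrets` output on stdin and print the first
# secret whose name starts with admin-user-token-.
admin_secret_name() {
    awk '$1 ~ /^admin-user-token-/ { print $1; exit }'
}

# Sample listing line taken from the output above.
listing='admin-user-token-zgt2v   kubernetes.io/service-account-token   3   3m54s'
echo "$listing" | admin_secret_name

# Against a live cluster (not executed here):
#   name="$(kubectl get secrets -n kube-system | admin_secret_name)"
#   kubectl describe secrets "$name" -n kube-system
```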

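Test:

In a browser, open: https://172.25.31.1:31912

Note:

- Make sure the images have been loaded on every node

- Every node needs a gateway and Internet access (connectivity is required at the start)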