Code:

Properties connectionProperties = new Properties();

connectionProperties.put("user", Constants.PG_USERNAME);

connectionProperties.put("password", Constants.PG_PASSWD);

connectionProperties.put("fetchsize", "10000");

spark.read().jdbc(Constants.PG_JDBC, "tb_grid_export", "google_gci", 2669989, 2682906, 10, connectionProperties)

.write().mode(SaveMode.Overwrite).saveAsTable("tb_grid_export");

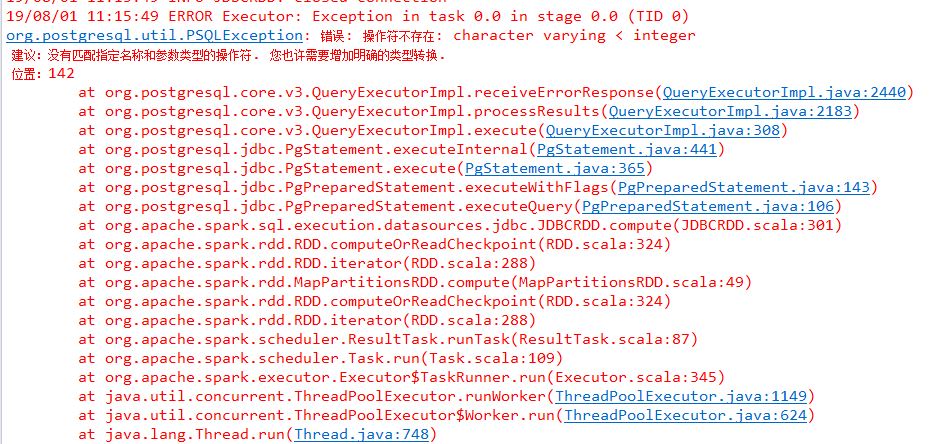

Cause:

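When Spark reads over JDBC in parallel, it generates one WHERE condition per partition from the specified column (`google_gci` here) and the specified lower and upper bounds (2669989 and 2682906, split into 10 partitions). The bounds are written into those conditions as numeric literals. The error occurs because the column chosen for partitioning is stored as a string type in the PG database, so the numeric comparisons cannot be applied to it.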
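To make the generated conditions concrete, here is a minimal sketch in plain Java of how the per-partition predicates can be derived from the column name, the bounds, and the partition count. It is a simplified model of the behavior described above, not Spark's actual source; the class name `PartitionPredicates` and the method `build` are hypothetical, and the exact stride arithmetic may differ between Spark versions.

```java
import java.util.ArrayList;
import java.util.List;

public class PartitionPredicates {

    // Simplified model (an assumption, not Spark's code): split the range
    // [lower, upper) into numPartitions strides and emit one WHERE clause each.
    static List<String> build(String column, long lower, long upper, int numPartitions) {
        List<String> clauses = new ArrayList<>();
        if (numPartitions <= 1) {
            return clauses; // a single partition needs no predicate
        }
        long stride = upper / numPartitions - lower / numPartitions;
        long current = lower;
        for (int i = 0; i < numPartitions; i++) {
            // The first partition has no lower bound, the last has no upper bound,
            // so rows outside [lower, upper) are still read.
            String lo = (i != 0) ? column + " >= " + current : null;
            current += stride;
            String hi = (i != numPartitions - 1) ? column + " < " + current : null;
            if (hi == null) {
                clauses.add(lo);
            } else if (lo == null) {
                // NULL values are assigned to the first partition.
                clauses.add(hi + " or " + column + " is null");
            } else {
                clauses.add(lo + " AND " + hi);
            }
        }
        return clauses;
    }

    public static void main(String[] args) {
        // Same column, bounds, and partition count as in the failing call.
        for (String clause : build("google_gci", 2669989L, 2682906L, 10)) {
            System.out.println(clause);
        }
    }
}
```

With the values from the failing call, the first clause in this sketch is `google_gci < 2671281 or google_gci is null`. The bound is an unquoted number, so PostgreSQL is asked to compare a string column against an integer, which is where the query breaks.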

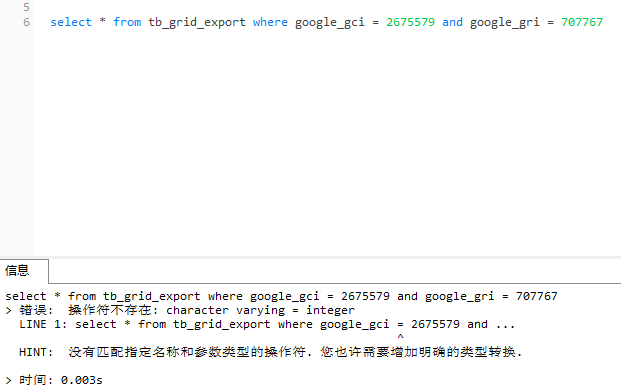

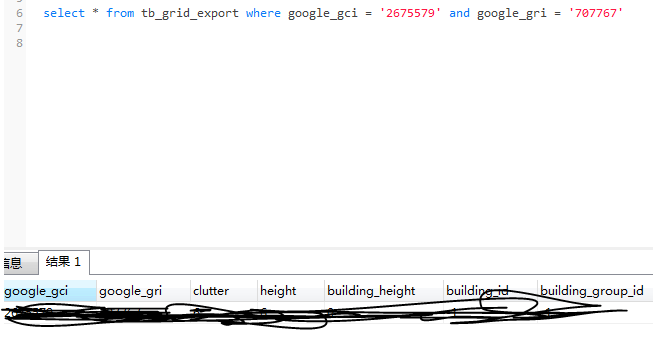

The following test confirms this: