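Architecture in brief: nginx + Logstash + single-node Redis + Logstash + Elasticsearch cluster + Grafana. A first Logstash instance ships nginx logs into Redis, and a second one moves them from Redis into the Elasticsearch cluster. Kibana is not used here; Grafana handles the visualization instead.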

I. nginx log format

```nginx
log_format main '$remote_addr [$time_local] "$request" '
                '$request_body $status $body_bytes_sent "$http_referer" "$http_user_agent" '
                '$request_time $upstream_response_time';
```

Sample log entry:

```text
172.16.16.132 [21/Jul/2019:09:56:12 -0400] "GET /favicon.ico HTTP/1.1" - 404 555 "http://172.16.16.74/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36" 0.000 -
```
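The following minimal Python sketch is not part of the original setup. It shows how each variable in the `main` format lines up with the sample entry, using a regular expression that mirrors the `log_format` field by field. The function name `parse_main` is hypothetical. The sketch assumes `$request_body` contains no spaces; it is `-` for requests without a body, as in the sample.

```python
import re
from typing import Optional

# One named group per variable of the log_format "main", in the same order.
# Assumption: $request_body contains no spaces ("-" when there is no body).
MAIN_FORMAT = re.compile(
    r'(?P<remote_addr>\S+) '
    r'\[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" '
    r'(?P<request_body>\S+) '
    r'(?P<status>\d{3}) '
    r'(?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" '
    r'"(?P<http_user_agent>[^"]*)" '
    r'(?P<request_time>\S+) '
    r'(?P<upstream_response_time>\S+)$'
)


def parse_main(line: str) -> Optional[dict]:
    """Return the fields of one access-log line, or None if it does not match."""
    match = MAIN_FORMAT.match(line.rstrip("\n"))
    return match.groupdict() if match else None


if __name__ == "__main__":
    sample = ('172.16.16.132 [21/Jul/2019:09:56:12 -0400] "GET /favicon.ico HTTP/1.1" - 404 555 '
              '"http://172.16.16.74/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36" 0.000 -')
    for name, value in parse_main(sample).items():
        print(f"{name:24} {value}")
```

Here `upstream_response_time` comes out as `-` because the favicon request was served by nginx itself, with no upstream involved.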

II. Elasticsearch cluster setup

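Note that Elasticsearch cannot be run as root, and some system settings need adjusting. When it fails to start, check its log for the specific error and search for that message (e.g. on Baidu).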

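Only the following settings need to change. I run two Elasticsearch instances on one machine, so 172.16.16.80 appears twice in the seed list, as "172.16.16.80:9300" and "172.16.16.80:9301". Note that the HTTP port of that third instance is 9201.

Give each of the three Elasticsearch nodes its own node.name.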

```yaml
# elasticsearch.yml as used on node-1; change node.name on the other two nodes
cluster.name: my-application
node.name: node-1
network.host: 0.0.0.0
http.port: 9200
discovery.seed_hosts: ["172.16.16.74:9300", "172.16.16.80:9300", "172.16.16.80:9301"]
cluster.initial_master_nodes: ["node-1"]
```
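The three nodes differ only in `node.name` and ports. A small Python sketch can therefore render each node's settings from one table and catch duplicate names or addresses before startup. Several details are assumptions for illustration:

- The names `node-2` and `node-3` are hypothetical.
- The port assignments are read from the description above.
- The explicit `transport.port` line pins the port rather than relying on Elasticsearch picking the next free one, as the original config does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EsNode:
    name: str
    host: str
    http_port: int
    transport_port: int


# Assumed layout: one node on .74 and two on .80; node-2/node-3 are hypothetical names.
NODES = [
    EsNode("node-1", "172.16.16.74", 9200, 9300),
    EsNode("node-2", "172.16.16.80", 9200, 9300),
    EsNode("node-3", "172.16.16.80", 9201, 9301),
]


def validate(nodes):
    """Raise ValueError if two nodes share a node.name or bind the same host:port."""
    names = [n.name for n in nodes]
    if len(set(names)) != len(names):
        raise ValueError(f"duplicate node.name in {names}")
    addrs = [(n.host, n.http_port) for n in nodes] + [(n.host, n.transport_port) for n in nodes]
    if len(set(addrs)) != len(addrs):
        raise ValueError("two nodes bind the same host:port")


def render_config(node, nodes, cluster_name="my-application", initial_master="node-1"):
    """Build the elasticsearch.yml settings for one node; seeds list every transport address."""
    seeds = ", ".join(f'"{n.host}:{n.transport_port}"' for n in nodes)
    lines = [
        f"cluster.name: {cluster_name}",
        f"node.name: {node.name}",
        "network.host: 0.0.0.0",
        f"http.port: {node.http_port}",
        f"transport.port: {node.transport_port}",  # assumption: pinned explicitly
        f"discovery.seed_hosts: [{seeds}]",
        f'cluster.initial_master_nodes: ["{initial_master}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    validate(NODES)
    for node in NODES:
        print(f"# elasticsearch.yml for {node.name}")
        print(render_config(node, NODES))
```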

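If the cluster does not come up, delete the `nodes` directory under `data/` and restart. Beyond that, look up the specific error; usually it complains about running as root or about settings that need changing.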

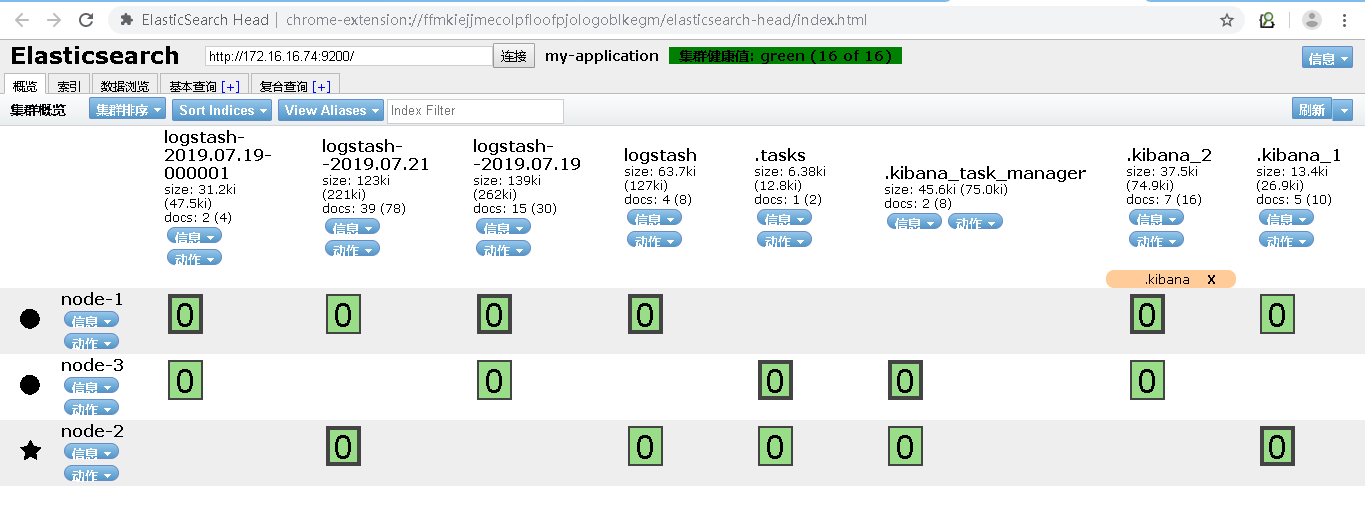

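How to check the cluster:

The elasticsearch-head extension for Google Chrome shows the cluster's nodes and which one is master.

The master was originally node-1. The screenshot looks different because I tested the cluster afterwards.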
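The cluster state can also be checked through Elasticsearch's `_cluster/health` REST endpoint, without elasticsearch-head. The Python sketch below fetches that endpoint using only the standard library. It flags a status other than `green` or fewer nodes than expected (three here). The base URL default and the helper names `fetch_health` and `health_problems` are assumptions for illustration.

```python
import json
import urllib.request


def fetch_health(base_url="http://172.16.16.74:9200"):
    """GET /_cluster/health from any node and return the parsed JSON."""
    with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=5) as resp:
        return json.load(resp)


def health_problems(health, expected_nodes=3):
    """Return human-readable problems found in a _cluster/health response."""
    problems = []
    status = health.get("status")
    if status != "green":
        problems.append(f"cluster status is {status}")
    nodes = health.get("number_of_nodes", 0)
    if nodes < expected_nodes:
        problems.append(f"only {nodes} of {expected_nodes} nodes joined")
    return problems
```

Usage against the live cluster: `print(health_problems(fetch_health()) or "cluster OK")`. The same data is available by hand with `curl 'http://172.16.16.74:9200/_cluster/health?pretty'`.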

III. Collect logs with Logstash and push them to Redis

1. After extracting Logstash, create logstash_in.conf in its conf directory:

```conf
input {
  file {
    type => "nginx"
    path => "/usr/local/nginx/logs/host.access.log"   # nginx access log file
    start_position => "beginning"
  }
}

output {
  stdout { codec => rubydebug }   # print each event to the console
  if [type] == "nginx" {
    redis {
      host => "172.16.16.74"
      data_type => "list"
      key => "logstash-service_name"
    }
  }
}
```

2. Start Logstash in the background from its bin directory: `nohup ./logstash -f ../conf/logstash_in.conf &`

3. Run `tail -f nohup.out` to check for errors.
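With `data_type => "list"`, the redis output in the shipper config above appends each event to the Redis list `logstash-service_name`; the plugin documents this as RPUSH. The redis input on the indexer side pops from the head of the same list (BLPOP), so Redis acts as a FIFO buffer between the two Logstash instances. Below is a minimal Python sketch of that queue behavior. It assumes a redis-py style client exposing `rpush`/`lpop`. The hypothetical in-memory `FakeRedis` stands in for a server so the example runs on its own; against the real server you would pass `redis.Redis(host="172.16.16.74")` instead.

```python
import json


def ship(client, key, event):
    """Shipper side: append the event as JSON to the tail of the list."""
    client.rpush(key, json.dumps(event))


def consume(client, key):
    """Indexer side: take the oldest event from the head of the list, or None if empty."""
    raw = client.lpop(key)
    return None if raw is None else json.loads(raw)


class FakeRedis:
    """Hypothetical in-memory stand-in implementing only rpush/lpop on lists."""

    def __init__(self):
        self.lists = {}

    def rpush(self, key, value):
        self.lists.setdefault(key, []).append(value)
        return len(self.lists[key])

    def lpop(self, key):
        items = self.lists.get(key)
        return items.pop(0) if items else None


if __name__ == "__main__":
    r = FakeRedis()
    key = "logstash-service_name"
    ship(r, key, {"type": "nginx", "message": "first line"})
    ship(r, key, {"type": "nginx", "message": "second line"})
    print(consume(r, key)["message"])  # first line
    print(consume(r, key)["message"])  # second line
    print(consume(r, key))             # None
```

Because the list only grows while the indexer is down, it also works as a buffer. The list's length (`LLEN logstash-service_name` in redis-cli) shows how far the indexer is behind.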

IV. Use Logstash to move logs from Redis into Elasticsearch

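1. A grok pattern parses the nginx log lines here, and the urldecode filter fixes URLs containing Chinese characters, which arrive percent-encoded. Getting the grok pattern right takes some trial and error. This site helps with debugging it: http://grokdebug.herokuapp.com/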

```conf
input {
  redis {
    host => "172.16.16.74"
    type => "nginx"
    data_type => "list"
    key => "logstash-service_name"
  }
}

filter {
  grok {
    match => { "message" => "%{IPORHOST:clientip} \[%{HTTPDATE:time}\] \"%{WORD:verb} %{URIPATHPARAM:request} HTTP/%{NUMBER:httpversion}\" \- %{NUMBER:http_status_code} %{NUMBER:bytes} \"(?<http_referer>\S+)\" \"(?<http_user_agent>(\S+\s+)*\S+)\".* %{BASE16FLOAT:request_time}" }
  }
  urldecode {
    all_fields => true
  }
  date {
    # grok stores the log timestamp in "time", so the date filter must match that field
    match => [ "time", "dd/MMM/YYYY:HH:mm:ss Z" ]
  }
}

output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => ["172.16.16.74:9200", "172.16.16.80:9200", "172.16.16.80:9201"]
    index => "logstash--%{+YYYY.MM.dd}"
  }
}
```

Check nohup.out: the printed events should now include the fields extracted by grok.
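The Python sketch below is a rough approximation of the filter chain above, not Logstash itself. It is meant for checking what one line should turn into:

- **grok:** a regex modeled on the grok pattern.
- **urldecode:** percent-decoding of every captured field, in the spirit of `all_fields => true`.
- **date:** parsing the grok `time` field into `@timestamp`.
- **index:** the daily index name that `%{+YYYY.MM.dd}` yields (Logstash formats it from `@timestamp` in UTC).

The sub-patterns are simplified: `\S+` replaces `URIPATHPARAM`, and a plain decimal replaces `BASE16FLOAT`. The tail is also anchored to `$request_time $upstream_response_time`. With the pattern's greedy `.*`, a numeric upstream time would be captured as `request_time` instead. The function name `process` and the `/search?q=...` test URL are hypothetical.

```python
import re
from datetime import datetime, timezone
from urllib.parse import unquote

# Simplified stand-in for the grok pattern; tail anchored to the two timing fields.
GROK_LIKE = re.compile(
    r'(?P<clientip>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" - '
    r'(?P<http_status_code>\d+) (?P<bytes>\d+) '
    r'"(?P<http_referer>\S+)" "(?P<http_user_agent>[^"]*)" '
    r'(?P<request_time>\d+(?:\.\d+)?) (?P<upstream_response_time>.+)$'
)


def process(line):
    """Approximate grok + urldecode + date for one line; None means no match."""
    match = GROK_LIKE.match(line)
    if match is None:
        return None  # Logstash would tag such an event with _grokparsefailure
    # like urldecode with all_fields => true: percent-decode every captured field
    event = {name: unquote(value) for name, value in match.groupdict().items()}
    # like the date filter: parse "time" and keep it as @timestamp in UTC
    ts = datetime.strptime(event["time"], "%d/%b/%Y:%H:%M:%S %z").astimezone(timezone.utc)
    event["@timestamp"] = ts.isoformat()
    # daily index that index => "logstash--%{+YYYY.MM.dd}" would choose for this event
    event["index"] = "logstash--" + ts.strftime("%Y.%m.%d")
    return event
```

For the sample entry from part I, `process(line)["index"]` is `logstash--2019.07.21`, since 09:56:12 -0400 is 13:56:12 UTC. A request logged late in the evening at -0400 lands in the next day's index.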

V. Visualizing with Grafana

1. First, configure the data source.

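The error shown here, `No date field named @timestamp found`, occurs because the Elasticsearch version selected in the data source has to match the cluster's version. I had selected a 2.x version, which caused the error.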
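If selecting the matching version does not clear the error, confirm that the indices really map `@timestamp` as a `date`. The minimal Python sketch below does that check. It assumes Elasticsearch 7.x or later, which the `discovery.seed_hosts` setting implies. On those versions, `GET /<index>/_mapping` returns typeless `mappings.properties`. The index pattern `logstash--*` follows the Logstash output config, and the helper names are hypothetical.

```python
import json
import urllib.request


def fetch_mapping(base_url="http://172.16.16.74:9200", pattern="logstash--*"):
    """GET /<pattern>/_mapping and return the parsed JSON (one key per index)."""
    with urllib.request.urlopen(f"{base_url}/{pattern}/_mapping", timeout=5) as resp:
        return json.load(resp)


def indices_missing_date_field(mapping, field="@timestamp"):
    """List indices whose mapping lacks `field` or maps it to a non-date type."""
    missing = []
    for index, body in mapping.items():
        props = body.get("mappings", {}).get("properties", {})
        if props.get(field, {}).get("type") != "date":
            missing.append(index)
    return sorted(missing)
```

Usage: `print(indices_missing_date_field(fetch_mapping()))`. An empty list means every matching index has a date-typed `@timestamp` for Grafana to use as its time field.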