
What it does

torch.nn.functional.normalize(input, p=2, dim=1, eps=1e-12, out=None)

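In essence, it computes the p-norm of the input along one dimension and divides each vector along that dimension by its norm. The norm is clamped from below by eps to avoid division by zero. p is the order of the norm (p=2 gives the 2-norm, i.e. the Euclidean norm). dim is the dimension along which the norm is computed (1 by default, which is usually the channel dimension).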
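Here is a minimal sketch of the same computation written out by hand. It assumes a recent PyTorch where `Tensor.norm` and `Tensor.clamp_min` are available. `manual_normalize` is a hypothetical helper name, not part of PyTorch. The sketch checks the result against `F.normalize` for two (p, dim) choices and shows what eps does for an all-zero row.

```python
import torch
import torch.nn.functional as F


def manual_normalize(x: torch.Tensor, p: float = 2.0, dim: int = 1, eps: float = 1e-12) -> torch.Tensor:
    # p-norm of each vector along `dim`; keepdim=True keeps a size-1 axis so the division broadcasts
    norm = x.norm(p=p, dim=dim, keepdim=True)
    # Clamp the norm from below so an all-zero vector is divided by eps instead of 0
    return x / norm.clamp_min(eps)


torch.manual_seed(0)
x = torch.randn(3, 4)

# Same result as the built-in for the default L2 norm along dim=1 (each row)...
print(torch.allclose(manual_normalize(x), F.normalize(x)))  # True
# ...and for the L1 norm along dim=0 (each column)
print(torch.allclose(manual_normalize(x, p=1, dim=0), F.normalize(x, p=1, dim=0)))  # True

# After L2 normalization along dim=1, every row has (approximately) unit Euclidean length
print(F.normalize(x).norm(dim=1))

# An all-zero row stays all zeros (0 / eps) instead of becoming NaN
z = torch.zeros(1, 4)
print(F.normalize(z))
```

The clamp is what separates this from a plain `x / x.norm(...)`. Without it, a zero vector would produce `0 / 0 = NaN`.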

Official references

Official API: https://pytorch.org/docs/stable/nn.html#normalize

Source code:

def normalize(input, p=2, dim=1, eps=1e-12, out=None):

# type: (Tensor, float, int, float, Optional[Tensor]) -> Tensor

r"""Performs :math:`L_p` normalization of inputs over specified dimension.

For a tensor :attr:`input` of sizes :math:`(n_0, ..., n_{dim}, ..., n_k)`, each

:math:`n_{dim}` -element vector :math:`v` along dimension :attr:`dim` is transformed as

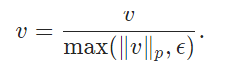

.. math::

v = \frac{v}{\max(\lVert v \rVert_p, \epsilon)}.

With the default arguments it uses the Euclidean norm over vectors along dimension :math:`1` for normalization.

Args:

input: input tensor of any shape

p (float): the exponent value in the norm formulation. Default: 2

dim (int): the dimension to reduce. Default: 1

eps (float): small value to avoid division by zero. Default: 1e-12

out (Tensor, optional): the output tensor. If :attr:`out` is used, this

operation won't be differentiable.

"""

if out is None:

denom = input.norm(p, dim, True).clamp_min(eps).expand_as(input)

ret = input / denom

else:

denom = input.norm(p, dim, True).clamp_min(eps).expand_as(input)

ret = torch.div(input, denom, out=out)

return ret实例

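```python
import torch

input_ = torch.randn((3, 4))
# Softmax over dim=1, so each row sums to 1. Passing dim explicitly avoids the implicit-dim warning.
a = torch.nn.Softmax(dim=1)(input_)
# L2-normalize each row (defaults: p=2, dim=1)
b = torch.nn.functional.normalize(a)
```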

The output of a:

```
tensor([[0.2074, 0.2850, 0.1973, 0.3103],
        [0.2773, 0.1442, 0.3652, 0.2132],
        [0.3244, 0.3206, 0.0216, 0.3334]])
```

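The output of b:

```
tensor([[0.4071, 0.5595, 0.3874, 0.6092],
        [0.5274, 0.2743, 0.6945, 0.4054],
        [0.5738, 0.5671, 0.0381, 0.5896]])
```

The 0.4071 in b is simply the 0.2074 in a divided by the L2 norm of its row:

0.2074 / sqrt(0.2074² + 0.2850² + 0.1973² + 0.3103²) ≈ 0.4071

Every row of b therefore has unit Euclidean length.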
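The arithmetic above can be double-checked without PyTorch. The pure-Python sketch below recomputes b from the printed values of a. It works from the four-decimal printed values, so agreement is only up to rounding. The helper name `l2_normalize` is hypothetical.

```python
import math

# Rows of `a` and `b` copied from the printed output above (already rounded to 4 decimals)
a = [[0.2074, 0.2850, 0.1973, 0.3103],
     [0.2773, 0.1442, 0.3652, 0.2132],
     [0.3244, 0.3206, 0.0216, 0.3334]]
b = [[0.4071, 0.5595, 0.3874, 0.6092],
     [0.5274, 0.2743, 0.6945, 0.4054],
     [0.5738, 0.5671, 0.0381, 0.5896]]


def l2_normalize(row):
    """Divide every entry by the row's Euclidean (p=2) norm."""
    norm = math.sqrt(sum(v * v for v in row))
    return [v / norm for v in row]


recomputed = [l2_normalize(row) for row in a]

# The worked value: 0.2074 / sqrt(0.2074^2 + 0.2850^2 + 0.1973^2 + 0.3103^2)
print(recomputed[0][0])

# Every recomputed entry matches `b` up to the rounding of the printed `a`
max_err = max(abs(x - y) for r, s in zip(recomputed, b) for x, y in zip(r, s))
print(max_err < 1e-3)  # True

# Each row of `b` has (approximately) unit L2 norm
print([round(math.sqrt(sum(v * v for v in row)), 3) for row in b])  # [1.0, 1.0, 1.0]
```

Small last-digit differences (for example 0.40717 versus the printed 0.4071) come from `a` being shown rounded, not from the normalization itself.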