CentOS 使用二进制部署 Kubernetes 1.13集群

一、概述

kubernetes 1.13 已发布,这是 2018 年年内第四次也是最后一次发布新版本。Kubernetes 1.13 是迄今为止发布间隔最短的版本之一(与上一版本间隔十周),主要关注 Kubernetes 的稳定性与可扩展性,其中存储与集群生命周期相关的三项主要功能已逐步实现普遍可用性。

Kubernetes 1.13 的核心特性包括:利用 kubeadm 简化集群管理、容器存储接口(CSI )以及将 CoreDNS 作为默认 DNS 。

利用 kubeadm 简化集群管理功能

大多数与 Kubernetes 接触频繁的人或多或少都会亲自动手使用 kubeadm ,它是管理集群生命周期的重要工具,能够帮助从创建到配置再到升级的整个流程。;随着 1.13 版本的发布,kubeadm 功能进入 GA 版本,正式普遍可用。kubeadm 处理现有硬件上的生产集群的引导,并以最佳实践方式配置核心 Kubernetes 组件,以便为新节点提供安全而简单的连接流程并支持轻松升级。

该 GA 版本中最值得注意的是已经毕业的高级功能,尤其是可插拔性和可配置性。kubeadm 旨在为管理员与高级自动化系统提供一套工具箱,如今已迈出重要一步。

容器存储接口(CSI)

容器存储接口最初于 1.9 版本中作为 alpha 测试功能引入,在 1.10 版本中进入 beta 测试,如今终于进入 GA 阶段正式普遍可用。在 CSI 的帮助下,Kubernetes 卷层将真正实现可扩展性。通过 CSI ,第三方存储供应商将可以直接编写可与 Kubernetes 互操作的代码,而无需触及任何 Kubernetes 核心代码。事实上,相关规范也已经同步进入 1.0 阶段。

随着 CSI 的稳定,插件作者将能够按照自己的节奏开发核心存储插件,详见 CSI 文档。

CoreDNS 成为 Kubernetes 的默认 DNS 服务器

在 1.11 版本中,开发团队宣布 CoreDNS 已实现基于 DNS 服务发现的普遍可用性。在最新的 1.13 版本中,CoreDNS 正式取代 kuber-dns 成为 Kubernetes 中的默认 DNS 服务器。CoreDNS 是一种通用的、权威的 DNS 服务器,能够提供与 Kubernetes 向下兼容且具备可扩展性的集成能力。由于 CoreDNS 自身单一可执行文件与单一进程的特性,因此 CoreDNS 的活动部件数量会少于之前的 DNS 服务器,且能够通过创建自定义 DNS 条目来支持各类灵活的用例。此外,由于 CoreDNS 采用 Go 语言编写,它具有强大的内存安全性。

CoreDNS 现在是 Kubernetes 1.13 及后续版本推荐的 DNS 解决方案,Kubernetes 已将常用测试基础设施架构切换为默认使用 CoreDNS ,因此,开发团队建议用户也尽快完成切换。KubeDNS 仍将至少支持一个版本,但现在是时候开始规划迁移了。另外,包括 1.11 中 Kubeadm 在内的许多 OSS 安装工具也已经进行了切换。

1、安装环境准备:

部署节点说明

一、官方文档二、下载链接三、角色划分四、Master部署4.1 下载软件 4.2 cfssl安装 4.3 创建etcd证书 1)etcd ca配置 2)etcd ca证书 3)etcd server证书 4)生成etcd ca证书和私钥 初始化ca 生成server证书 4.4 etcd安装 1)解压缩 2)配置etcd主文件 3)配置etcd启动文件 4)启动 注意启动前etcd02、etcd03同样配置下 5)服务检查 4.5 生成kubernets证书与私钥 1)制作kubernetes ca证书 2)制作apiserver证书 3)制作kube-proxy证书 4.6部署kubernetes server

1)解压缩文件 2)部署kube-apiserver组件 创建TLS Bootstrapping Token 创建Apiserver配置文件 创建apiserver systemd文件 启动服务 3)部署kube-scheduler组件 创建kube-scheduler配置文件 参数备注: –address:在 127.0.0.1:10251 端口接收 http /metrics 请求;kube-scheduler 目前还不支持接收 https 请求; –kubeconfig:指定 kubeconfig 文件路径,kube-scheduler 使用它连接和验证 kube-apiserver; –leader-elect=true:集群运行模式,启用选举功能;被选为 leader 的节点负责处理工作,其它节点为阻塞状态; 创建kube-scheduler systemd文件 启动服务 4)部署kube-controller-manager组件 创建kube-controller-manager配置文件 创建kube-controller-manager systemd文件 启动服务 4.7 验证kubeserver服务 设置环境变量 查看master服务状态 五、Node部署

5.1 Docker环境安装 5.2 部署kubelet组件

1)安装二进制文件 2)复制相关证书到node节点 3)创建kubelet bootstrap kubeconfig文件 通过脚本实现 执行脚本 4)创建kubelet参数配置模板文件 5)创建kubelet配置文件 6)创建kubelet systemd文件 7)将kubelet-bootstrap用户绑定到系统集群角色 注意这个默认连接localhost:8080端口,可以在master上操作 8)启动服务 systemctl daemon-reload systemctl enable kubelet systemctl start kubelet 9)Master接受kubelet CSR请求 可以手动或自动 approve CSR 请求。推荐使用自动的方式,因为从 v1.8 版本开始,可以自动轮转approve csr 后生成的证书,如下是手动 approve CSR请求操作方法 查看CSR列表 接受node 再查看CSR 5.3部署kube-proxy组件 kube-proxy 运行在所有 node节点上,它监听 apiserver 中 service 和 Endpoint 的变化情况,创建路由规则来进行服务负载均衡 1)创建 kube-proxy 配置文件 2)创建kube-proxy systemd文件 3)启动服务 systemctl daemon-reload systemctl enable kube-proxy systemctl start kube-proxy 4)查看集群状态 5)同样操作部署node 10.2.8.34并认证csr,认证后会生成kubelet-client证书

六 Flanneld网络部署

6.1 etcd注册网段 flanneld 当前版本 (v0.10.0) 不支持 etcd v3,故使用 etcd v2 API 写入配置 key 和网段数据; 写入的 Pod 网段 ${CLUSTER_CIDR} 必须是 /16 段地址,必须与 kube-controller-manager 的 –cluster-cidr 参数值一致; 6.2 flannel安装 1)解压安装 2)配置flanneld 创建flanneld systemd文件 注意

3)配置Docker启动指定子网 修改EnvironmentFile=/run/flannel/subnet.env,ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS即可 4)启动服务 注意启动flannel前要关闭docker及相关的kubelet这样flannel才会覆盖docker0网桥 5)验证服务 |

||||

|---|---|---|---|---|

k8s安装包下载

链接:https://pan.baidu.com/s/1wO6T7byhaJYBuu2JlhZvkQ

提取码:pm9u

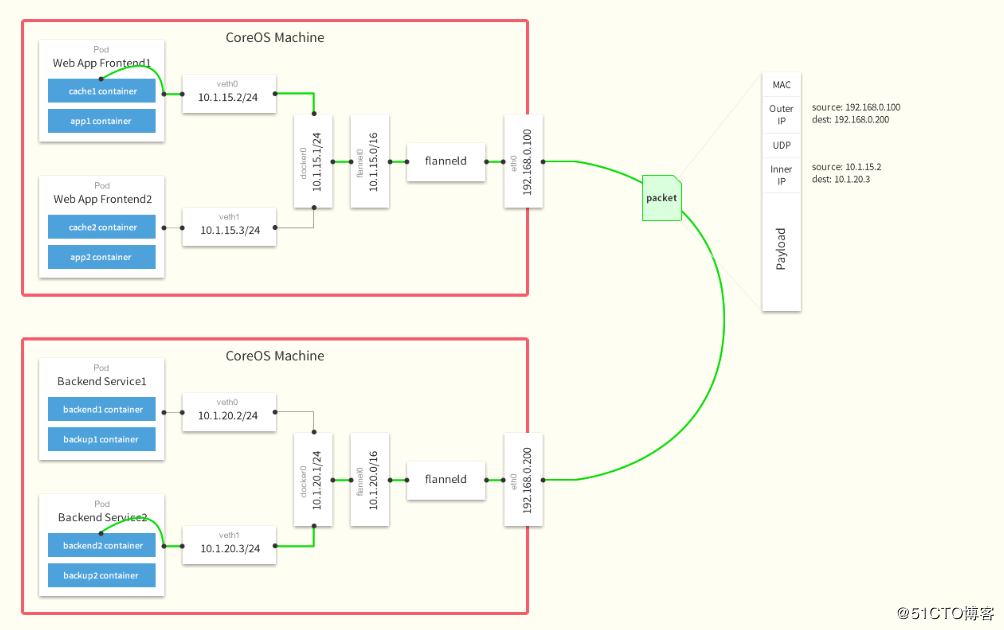

部署网络说明

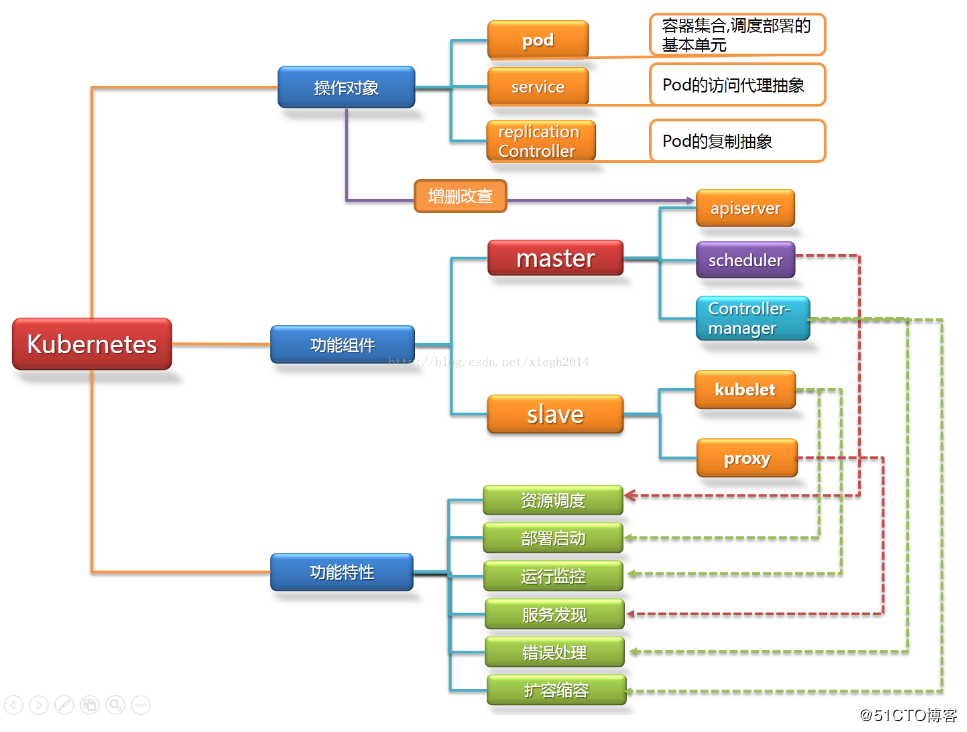

2、架构图

Kubernetes 架构图

Flannel网络架构图

- 数据从源容器中发出后,经由所在主机的docker0虚拟网卡转发到flannel0虚拟网卡,这是个P2P的虚拟网卡,flanneld服务监听在网卡的另外一端。

- Flannel通过Etcd服务维护了一张节点间的路由表,在稍后的配置部分我们会介绍其中的内容。

- 源主机的flanneld服务将原本的数据内容UDP封装后根据自己的路由表投递给目的节点的flanneld服务,数据到达以后被解包,然后直接进入目的节点的flannel0虚拟网卡,

然后被转发到目的主机的docker0虚拟网卡,最后就像本机容器通信一下的有docker0路由到达目标容器。

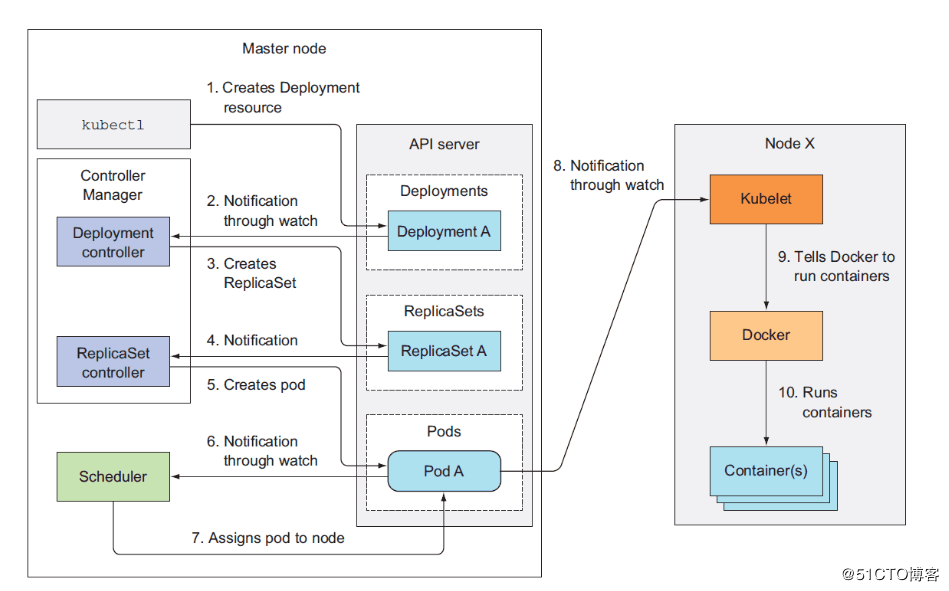

3、 Kubernetes工作流程

集群功能各模块功能描述:

Master节点:

Master节点上面主要由四个模块组成,APIServer,schedule,controller-manager,etcd

APIServer: APIServer负责对外提供RESTful的kubernetes API的服务,它是系统管理指令的统一接口,任何对资源的增删该查都要交给APIServer处理后再交给etcd,如图,kubectl(kubernetes提供的客户端工具,该工具内部是对kubernetes API的调用)是直接和APIServer交互的。

schedule: schedule负责调度Pod到合适的Node上,如果把scheduler看成一个黑匣子,那么它的输入是pod和由多个Node组成的列表,输出是Pod和一个Node的绑定。 kubernetes目前提供了调度算法,同样也保留了接口。用户根据自己的需求定义自己的调度算法。

controller manager: 如果APIServer做的是前台的工作的话,那么controller manager就是负责后台的。每一个资源都对应一个控制器。而control manager就是负责管理这些控制器的,比如我们通过APIServer创建了一个Pod,当这个Pod创建成功后,APIServer的任务就算完成了。

etcd:etcd是一个高可用的键值存储系统,kubernetes使用它来存储各个资源的状态,从而实现了Restful的API。

Node节点:

每个Node节点主要由三个模板组成:kublet, kube-proxy

kube-proxy: 该模块实现了kubernetes中的服务发现和反向代理功能。kube-proxy支持TCP和UDP连接转发,默认基Round Robin算法将客户端流量转发到与service对应的一组后端pod。服务发现方面,kube-proxy使用etcd的watch机制监控集群中service和endpoint对象数据的动态变化,并且维护一个service到endpoint的映射关系,从而保证了后端pod的IP变化不会对访问者造成影响,另外,kube-proxy还支持session affinity。

kublet:kublet是Master在每个Node节点上面的agent,是Node节点上面最重要的模块,它负责维护和管理该Node上的所有容器,但是如果容器不是通过kubernetes创建的,它并不会管理。本质上,它负责使Pod的运行状态与期望的状态一致。

二、Kubernetes 安装及配置

1、初始化环境

1.1、设置关闭防火墙及SELINUX

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

vi /etc/selinux/config

SELINUX=disabled1.2、关闭Swap

swapoff -a && sysctl -w vm.swappiness=0

vi /etc/fstab

#UUID=7bff6243-324c-4587-b550-55dc34018ebf swap swap defaults 0 01.3、设置Docker所需参数

cat << EOF | tee /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf1.4、安装 Docker

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum list docker-ce --showduplicates | sort -r

yum install docker-ce -y

systemctl start docker && systemctl enable docker1.5、创建安装目录

mkdir /k8s/etcd/{bin,cfg,ssl} -p

mkdir /k8s/kubernetes/{bin,cfg,ssl} -p1.6、安装及配置CFSSL

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo1.7、创建认证证书

创建 ETCD 证书

cat << EOF | tee ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF创建 ETCD CA 配置文件

cat << EOF | tee ca-csr.json

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen"

}

]

}

EOF创建 ETCD Server 证书

cat << EOF | tee server-csr.json

{

"CN": "etcd",

"hosts": [

"172.16.8.100",

"172.16.8.101",

"172.16.8.102"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen"

}

]

}

EOF生成 ETCD CA 证书和私钥

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server1.8、 ssh-key认证

# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:FQjjiRDp8IKGT+UDM+GbQLBzF3DqDJ+pKnMIcHGyO/o root@qas-k8s-master01

The key's randomart image is:

+---[RSA 2048]----+

|o.==o o. .. |

|ooB+o+ o. . |

|B++@o o . |

|=X**o . |

|o=O. . S |

|..+ |

|oo . |

|* . |

|o+E |

+----[SHA256]-----+

# ssh-copy-id -i /root/.ssh/id_rsa.pub [email protected]

# ssh-copy-id -i /root/.ssh/id_rsa.pub [email protected]2 、部署ETCD

解压安装文件

tar -xvf etcd-v3.3.10-linux-amd64.tar.gz

cd etcd-v3.3.10-linux-amd64/

cp etcd etcdctl /k8s/etcd/bin/vim /k8s/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.8.100:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.8.100:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.8.100:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.8.100:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.16.8.100:2380,etcd02=https://172.16.8.101:2380,etcd03=https://172.16.8.102:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"创建 etcd的 systemd unit 文件

vim/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/k8s/etcd/cfg/etcd

ExecStart=/k8s/etcd/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=/k8s/etcd/ssl/server.pem \

--key-file=/k8s/etcd/ssl/server-key.pem \

--peer-cert-file=/k8s/etcd/ssl/server.pem \

--peer-key-file=/k8s/etcd/ssl/server-key.pem \

--trusted-ca-file=/k8s/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/k8s/etcd/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target拷贝证书文件

cp ca*pem server*pem /k8s/etcd/ssl启动ETCD服务

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd将启动文件、配置文件拷贝到 节点1、节点2

cd /k8s/

scp -r etcd 172.16.8.101:/k8s/

scp -r etcd 172.16.8.102:/k8s/

scp /usr/lib/systemd/system/etcd.service 172.16.8.101:/usr/lib/systemd/system/etcd.service

scp /usr/lib/systemd/system/etcd.service 172.16.8.102:/usr/lib/systemd/system/etcd.service

vim /k8s/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.8.101:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.8.101:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.8.101:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.8.101:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.16.8.100:2380,etcd02=https://172.16.8.101:2380,etcd03=https://172.16.8.102:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

vim /k8s/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.8.102:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.8.102:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.8.102:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.8.102:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.16.8.100:2380,etcd02=https://172.16.8.101:2380,etcd03=https://172.16.8.102:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"验证集群是否正常运行

./etcdctl \

--ca-file=/k8s/etcd/ssl/ca.pem \

--cert-file=/k8s/etcd/ssl/server.pem \

--key-file=/k8s/etcd/ssl/server-key.pem \

--endpoints="https://172.16.8.100:2379,\

https://172.16.8.101:2379,\

https://172.16.8.102:2379" cluster-health

member 5db3ea816863435 is healthy: got healthy result from https://172.16.8.102:2379

member 991b5845cecb31b is healthy: got healthy result from https://172.16.8.101:2379

member c67ee2780d64a0d4 is healthy: got healthy result from https://172.16.8.100:2379

cluster is healthy

注意:

启动ETCD集群同时启动二个节点,启动一个节点集群是无法正常启动的;3、部署Flannel网络

向 etcd 写入集群 Pod 网段信息

cd /k8s/etcd/ssl/

/k8s/etcd/bin/etcdctl \

--ca-file=ca.pem --cert-file=server.pem \

--key-file=server-key.pem \

--endpoints="https://172.16.8.100:2379,\

https://172.16.8.101:2379,https://172.16.8.102:2379" \

set /coreos.com/network/config '{ "Network": "172.18.0.0/16", "Backend": {"Type": "vxlan"}}'- flanneld 当前版本 (v0.10.0) 不支持 etcd v3,故使用 etcd v2 API 写入配置 key 和网段数据;

- 写入的 Pod 网段 ${CLUSTER_CIDR} 必须是 /16 段地址,必须与 kube-controller-manager 的 –cluster-cidr 参数值一致;

解压安装

tar -xvf flannel-v0.10.0-linux-amd64.tar.gz

mv flanneld mk-docker-opts.sh /k8s/kubernetes/bin/配置Flannel

vim /k8s/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://172.16.8.100:2379,https://172.16.8.101:2379,https://172.16.8.102:2379 -etcd-cafile=/k8s/etcd/ssl/ca.pem -etcd-certfile=/k8s/etcd/ssl/server.pem -etcd-keyfile=/k8s/etcd/ssl/server-key.pem"创建 flanneld 的 systemd unit 文件

vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/k8s/kubernetes/cfg/flanneld

ExecStart=/k8s/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/k8s/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target- mk-docker-opts.sh 脚本将分配给 flanneld 的 Pod 子网网段信息写入 /run/flannel/docker 文件,后续 docker 启动时 使用这个文件中的环境变量配置 docker0 网桥;

- flanneld 使用系统缺省路由所在的接口与其它节点通信,对于有多个网络接口(如内网和公网)的节点,可以用 -iface 参数指定通信接口,如上面的 eth0 接口;

- flanneld 运行时需要 root 权限;

配置Docker启动指定子网段

vim /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target配置Docker启动指定子网段

vim /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

BindsTo=containerd.service

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target将flanneld systemd unit 文件到所有节点

cd /k8s/

scp -r kubernetes 172.16.8.101:/k8s/

scp -r kubernetes 172.16.8.102:/k8s/

scp /k8s/kubernetes/cfg/flanneld 172.16.8.102:/k8s/kubernetes/cfg/flanneld

scp /k8s/kubernetes/cfg/flanneld 172.16.8.102:/k8s/kubernetes/cfg/flanneld

scp /usr/lib/systemd/system/docker.service 172.16.8.101:/usr/lib/systemd/system/docker.service

scp /usr/lib/systemd/system/docker.service 172.16.8.102:/usr/lib/systemd/system/docker.service

scp /usr/lib/systemd/system/flanneld.service 172.16.8.101:/usr/lib/systemd/system/flanneld.service

scp /usr/lib/systemd/system/flanneld.service 172.16.8.102:/usr/lib/systemd/system/flanneld.service

启动服务

systemctl daemon-reload

systemctl start flanneld

systemctl enable flanneld

systemctl restart docker查看是否生效

ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:e3:57:a4 brd ff:ff:ff:ff:ff:ff

inet 172.16.8.101/24 brd 172.16.8.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee3:57a4/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:cf:5d:a7:af brd ff:ff:ff:ff:ff:ff

inet 172.18.25.1/24 brd 172.18.25.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 0e:bf:c5:3b:4d:59 brd ff:ff:ff:ff:ff:ff

inet 172.18.25.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::cbf:c5ff:fe3b:4d59/64 scope link

valid_lft forever preferred_lft forever4、部署 master 节点

kubernetes master 节点运行如下组件:

- kube-apiserver

- kube-scheduler

- kube-controller-manager

kube-scheduler 和 kube-controller-manager 可以以集群模式运行,通过 leader 选举产生一个工作进程,其它进程处于阻塞模式。

将二进制文件解压拷贝到master 节点

tar -xvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin/

cp kube-scheduler kube-apiserver kube-controller-manager kubectl /k8s/kubernetes/bin/创建 Kubernetes CA 证书

cat << EOF | tee ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOFcat << EOF | tee ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -拷贝认证

cp *pem /k8s/kubernetes/ssl/部署 kube-apiserver 组件

生成API_SERVER证书

cat << EOF | tee server-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"172.16.8.100",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server创建 TLS Bootstrapping Token

# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

2366a641f656a0a025abb4aabda4511b

vim /k8s/kubernetes/cfg/token.csv

2366a641f656a0a025abb4aabda4511b,kubelet-bootstrap,10001,"system:kubelet-bootstrap"创建apiserver配置文件

vim /k8s/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://172.16.8.100:2379,https://172.16.8.101:2379,https://172.16.8.102:2379 \

--bind-address=172.16.8.100 \

--secure-port=6443 \

--advertise-address=172.16.8.100 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth \

--token-auth-file=/k8s/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/k8s/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/k8s/kubernetes/ssl/ca.pem \

--service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/k8s/etcd/ssl/ca.pem \

--etcd-certfile=/k8s/etcd/ssl/server.pem \

--etcd-keyfile=/k8s/etcd/ssl/server-key.pem"创建 kube-apiserver systemd unit 文件

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/k8s/kubernetes/cfg/kube-apiserver

ExecStart=/k8s/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target启动服务

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver查看apiserver是否运行

ps -ef |grep kube-apiserver

root 76300 1 45 08:57 ? 00:00:14 /k8s/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://172.16.8.100:2379,https://172.16.8.101:2379,https://172.16.8.102:2379 --bind-address=172.16.8.100 --secure-port=6443 --advertise-address=172.16.9.51 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --enable-bootstrap-token-auth --token-auth-file=/k8s/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/k8s/kubernetes/ssl/server.pem --tls-private-key-file=/k8s/kubernetes/ssl/server-key.pem --client-ca-file=/k8s/kubernetes/ssl/ca.pem --service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem --etcd-cafile=/k8s/etcd/ssl/ca.pem --etcd-certfile=/k8s/etcd/ssl/server.pem --etcd-keyfile=/k8s/etcd/ssl/server-key.pem

root 76357 4370 0 08:58 pts/1 00:00:00 grep --color=auto kube-apiserver部署kube-scheduler

创建kube-scheduler配置文件

vim /k8s/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect"- –address:在 127.0.0.1:10251 端口接收 http /metrics 请求;kube-scheduler 目前还不支持接收 https 请求;

- –kubeconfig:指定 kubeconfig 文件路径,kube-scheduler 使用它连接和验证 kube-apiserver;

- –leader-elect=true:集群运行模式,启用选举功能;被选为 leader 的节点负责处理工作,其它节点为阻塞状态;

创建kube-scheduler systemd unit 文件

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/k8s/kubernetes/cfg/kube-scheduler

ExecStart=/k8s/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target启动服务

systemctl daemon-reload

systemctl enable kube-scheduler.service

systemctl restart kube-scheduler.service查看kube-scheduler是否运行

# ps -ef |grep kube-scheduler

root 77854 1 8 09:17 ? 00:00:02 /k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

root 77901 1305 0 09:18 pts/0 00:00:00 grep --color=auto kube-scheduler

# systemctl status kube-scheduler.service

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; disabled; vendor preset: disabled)

Active: active (running) since 三 2018-12-05 09:17:43 CST; 29s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 77854 (kube-scheduler)

Tasks: 13

Memory: 10.9M

CGroup: /system.slice/kube-scheduler.service

└─77854 /k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

12月 05 09:17:45 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:45.642632 77854 shared_informer.go:123] caches populated

12月 05 09:17:45 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:45.743297 77854 shared_informer.go:123] caches populated

12月 05 09:17:45 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:45.844554 77854 shared_informer.go:123] caches populated

12月 05 09:17:45 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:45.945332 77854 shared_informer.go:123] caches populated

12月 05 09:17:45 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:45.945434 77854 controller_utils.go:1027] Waiting for caches to sync for scheduler controller

12月 05 09:17:46 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:46.046385 77854 shared_informer.go:123] caches populated

12月 05 09:17:46 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:46.046427 77854 controller_utils.go:1034] Caches are synced for scheduler controller

12月 05 09:17:46 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:46.046574 77854 leaderelection.go:205] attempting to acquire leader lease kube-system/kube-scheduler...

12月 05 09:17:46 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:46.063185 77854 leaderelection.go:214] successfully acquired lease kube-system/kube-scheduler

12月 05 09:17:46 qas-k8s-master01 kube-scheduler[77854]: I1205 09:17:46.164498 77854 shared_informer.go:123] caches populated部署kube-controller-manager

创建kube-controller-manager配置文件

vim /k8s/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem \

--root-ca-file=/k8s/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem"创建kube-controller-manager systemd unit 文件

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/k8s/kubernetes/cfg/kube-controller-manager

ExecStart=/k8s/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target启动服务

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager查看kube-controller-manager是否运行

# systemctl status kube-controller-manager

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2018-12-05 09:35:00 CST; 3s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 79191 (kube-controller)

Tasks: 8

Memory: 15.2M

CGroup: /system.slice/kube-controller-manager.service

└─79191 /k8s/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0....

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.350599 79191 serving.go:318] Generated self-signed cert in-memory

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: W1205 09:35:01.762710 79191 authentication.go:235] No authentication-kubeconfig provided in order to lookup...on't work.

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: W1205 09:35:01.762767 79191 authentication.go:238] No authentication-kubeconfig provided in order to lookup...on't work.

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: W1205 09:35:01.762792 79191 authorization.go:146] No authorization-kubeconfig provided, so SubjectAcce***ev...on't work.

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.762827 79191 controllermanager.go:151] Version: v1.13.0

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.763446 79191 secure_serving.go:116] Serving securely on [::]:10257

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.763925 79191 deprecated_insecure_serving.go:51] Serving insecurely on 127.0.0.1:10252

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.764443 79191 leaderelection.go:205] attempting to acquire leader lease kube-system/kube-con...manager...

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.770798 79191 leaderelection.go:289] lock is held by qas-k8s-master01_fab3fbe9-f82d-11e8-9140...et expired

12月 05 09:35:01 qas-k8s-master01 kube-controller-manager[79191]: I1205 09:35:01.770817 79191 leaderelection.go:210] failed to acquire lease kube-system/kube-controller-manager

Hint: Some lines were ellipsized, use -l to show in full.

# ps -ef |grep kube-controller-manager

root 79191 1 10 09:35 ? 00:00:01 /k8s/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem --cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem --root-ca-file=/k8s/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem

root 79220 1305 0 09:35 pts/0 00:00:00 grep --color=auto kube-controller-manager将可执行文件路/k8s/kubernetes/ 添加到 PATH 变量中

vim /etc/profile

PATH=/k8s/kubernetes/bin:$PATH:$HOME/bin

source /etc/profile查看master集群状态

# kubectl get cs,nodes

NAME STATUS MESSAGE ERROR

componentstatus/scheduler Healthy ok

componentstatus/etcd-2 Healthy {"health":"true"}

componentstatus/etcd-1 Healthy {"health":"true"}

componentstatus/etcd-0 Healthy {"health":"true"}

componentstatus/controller-manager Healthy ok5、部署node 节点

kubernetes work 节点运行如下组件:

- docker 前面已经部署

- kubelet

- kube-proxy

部署 kubelet 组件

- kublet 运行在每个 worker 节点上,接收 kube-apiserver 发送的请求,管理 Pod 容器,执行交互式命令,如exec、run、logs 等;

- kublet 启动时自动向 kube-apiserver 注册节点信息,内置的 cadvisor 统计和监控节点的资源使用情况;

- 为确保安全,本文档只开启接收 https 请求的安全端口,对请求进行认证和授权,拒绝未授权的访问(如apiserver、heapster)。

将kubelet 二进制文件拷贝node节点

cp kubelet kube-proxy /k8s/kubernetes/bin/

scp kubelet kube-proxy 172.16.8.101:/k8s/kubernetes/bin/

scp kubelet kube-proxy 172.16.8.102:/k8s/kubernetes/bin/创建 kubelet bootstrap kubeconfig 文件

vim environment.sh

# 创建kubelet bootstrapping kubeconfig

BOOTSTRAP_TOKEN=2366a641f656a0a025abb4aabda4511b

KUBE_APISERVER="https://172.16.8.100:6443"

# 设置集群参数

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# 设置客户端认证参数

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# 设置上下文参数

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# 设置默认上下文

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# 创建kube-proxy kubeconfig文件

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig将bootstrap kubeconfig kube-proxy.kubeconfig 文件拷贝到所有 nodes节点

cp bootstrap.kubeconfig kube-proxy.kubeconfig /k8s/kubernetes/cfg/

scp bootstrap.kubeconfig kube-proxy.kubeconfig 172.16.8.101:/k8s/kubernetes/cfg/

scp bootstrap.kubeconfig kube-proxy.kubeconfig 172.16.8.102:/k8s/kubernetes/cfg/创建kubelet 参数配置文件拷贝到所有 nodes节点

创建 kubelet 参数配置模板文件:

vim /k8s/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 172.16.8.100

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS: ["10.0.0.2"]

clusterDomain: cluster.local.

failSwapOn: false

authentication:

anonymous:

enabled: true创建kubelet配置文件

vim /k8s/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=172.16.8.100 \

--kubeconfig=/k8s/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/k8s/kubernetes/cfg/bootstrap.kubeconfig \

--config=/k8s/kubernetes/cfg/kubelet.config \

--cert-dir=/k8s/kubernetes/ssl \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"创建kubelet systemd unit 文件

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/k8s/kubernetes/cfg/kubelet

ExecStart=/k8s/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target将kubelet-bootstrap用户绑定到系统集群角色

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap启动服务

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubeletapprove kubelet CSR 请求

可以手动或自动 approve CSR 请求。推荐使用自动的方式,因为从 v1.8 版本开始,可以自动轮转approve csr 后生成的证书。

手动 approve CSR 请求

查看 CSR 列表:

# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs 39m kubelet-bootstrap Pending

node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s 5m5s kubelet-bootstrap Pending

# kubectl certificate approve node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs

certificatesigningrequest.certificates.k8s.io/node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs

# kubectl certificate approve node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s

certificatesigningrequest.certificates.k8s.io/node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s approved

[

# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs 41m kubelet-bootstrap Approved,Issued

node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s 7m32s kubelet-bootstrap Approved,Issued- Requesting User:请求 CSR 的用户,kube-apiserver 对它进行认证和授权;

- Subject:请求签名的证书信息;

- 证书的 CN 是 system:node:kube-node2, Organization 是 system:nodes,kube-apiserver 的 Node 授权模式会授予该证书的相关权限;

查看集群状态

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.16.8.100 Ready <none> 39m v1.13.0

172.16.8.101 Ready <none> 25s v1.13.0

172.16.8.102 Ready <none> 13s v1.13.0部署 kube-proxy 组件

kube-proxy 运行在所有 node节点上,它监听 apiserver 中 service 和 Endpoint 的变化情况,创建路由规则来进行服务负载均衡。

创建 Kubernetes Proxy 证书

cat << EOF | tee kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy创建 kube-proxy 配置文件

vim /k8s/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=172.16.8.100 \

--cluster-cidr=10.0.0.0/24 \

--kubeconfig=/k8s/kubernetes/cfg/kube-proxy.kubeconfig"- bindAddress: 监听地址;

- clientConnection.kubeconfig: 连接 apiserver 的 kubeconfig 文件;

- clusterCIDR: kube-proxy 根据 –cluster-cidr 判断集群内部和外部流量,指定 –cluster-cidr 或 –masquerade-all 选项后 kube-proxy 才会对访问 Service IP 的请求做 SNAT;

- hostnameOverride: 参数值必须与 kubelet 的值一致,否则 kube-proxy 启动后会找不到该 Node,从而不会创建任何 ipvs 规则;

- mode: 使用 ipvs 模式;

创建kube-proxy systemd unit 文件

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/k8s/kubernetes/cfg/kube-proxy

ExecStart=/k8s/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target启动服务

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2018-12-05 22:49:31 CST; 7s ago

Main PID: 13848 (kube-proxy)

Tasks: 0

Memory: 11.1M

CGroup: /system.slice/kube-proxy.service

‣ 13848 /k8s/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=172.16.8.100 --cluster-cidr=10.0.0.0/24 --kubeconfig=/k8s/kubernetes/cfg/kube-proxy.kubecon...

12月 05 22:49:31 qas-k8s-master01 kube-proxy[13848]: I1205 22:49:31.989376 13848 iptables.go:391] running i集群状态

打node 或者master 节点的标签

kubectl label node 172.16.8.100 node-role.kubernetes.io/master='master'

kubectl label node 172.16.8.101 node-role.kubernetes.io/node='node'

kubectl label node 172.16.8.102 node-role.kubernetes.io/node='node'# kubectl get node,cs

NAME STATUS ROLES AGE VERSION

node/172.16.8.100 Ready master 137m v1.13.0

node/172.16.8.101 Ready node 114m v1.13.0

node/172.16.8.102 Ready node 93m v1.13.0

NAME STATUS MESSAGE ERROR

componentstatus/controller-manager Healthy ok

componentstatus/scheduler Healthy ok

componentstatus/etcd-0 Healthy {"health":"true"}

componentstatus/etcd-1 Healthy {"health":"true"}

componentstatus/etcd-2 Healthy {"health":"true"}作者:思考

原文:http://blog.51cto.com/10880347/2326146