CNN应用之性别、年龄识别

原文地址:http://blog.csdn.net/hjimce/article/details/49255013

作者:hjimce

一、相关理论

本篇博文主要讲解2015年一篇paper《Age and Gender Classification using Convolutional Neural Networks》,个人感觉这篇文献没啥难度,只要懂得Alexnet,实现这篇文献的算法,会比较容易。其实读完这篇paper之后,我一直在想paper的创新点在哪里?因为我实在没有看出paper的创新点在哪里,估计是自己水平太lower了,看文献没有抓到文献的创新点。难道是因为利用CNN做年龄和性别分类的paper很少吗?网上搜索了一下,性别预测,以前很多都是用SVM算法,用CNN搞性别分类就只搜索到这一篇文章。个人感觉利用CNN进行图片分类已经不是什么新鲜事了,年龄、和性别预测,随便搞个CNN网络,然后开始训练跑起来,也可以获得不错的精度。

性别分类自然而然是二分类问题,然而对于年龄怎么搞?年龄预测是回归问题吗?paper采用的方法是把年龄划分为多个年龄段,每个年龄段相当于一个类别,这样性别也就多分类问题了。所以我们不要觉得现在的一些APP,功能好像很牛逼,什么性别、年龄、衣服类型、是否佩戴眼镜等识别问题,其实这种识别对于CNN来说,基本上是松松搞定的事,当然你如果要达到非常高的识别精度,是另外一回事了,就需要各种调参了。

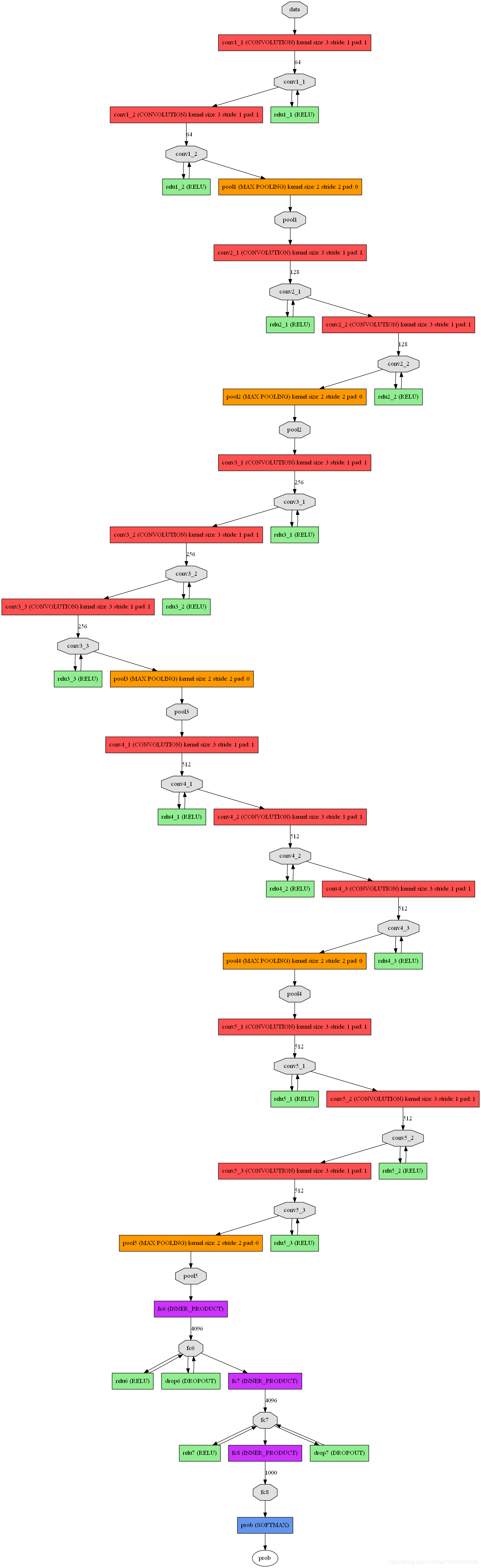

言归正传,下面开始讲解2015年paper《Age and Gender Classification using Convolutional Neural Networks》的网络结构,这篇文章没有什么新算法,只有调参,改变网络层数、卷积核大小等……所以如果已经对Alexnet比较熟悉的,可能会觉得看起来没啥意思,这篇papar的相关源码和训练数据,文献作者有给我们提供,可以到Caffe zoo model:https://github.com/BVLC/caffe/wiki/Model-Zoo 或者文献的主页:http://www.openu.ac.il/home/hassner/projects/cnn_agegender/。下载相关训练好的模型,paper性别、年龄预测的应用场景比较复杂,都是一些非常糟糕的图片,比较模糊的图片等,所以如果我们想要直接利用paper训练好的模型,用到我们自己的项目上,可能精度会比较低,后面我将会具体讲一下利用paper的模型进行fine-tuning,以适应我们的应用,提高我们自己项目的识别精度。

二、算法实现

tensorflow-GPU1.4+python3.5

VGG16特征提取和权重导入

权重:网盘链接:https://pan.baidu.com/s/1AB6sqLgsW6qZ_iMvphTIAQ

提取码:d61m

class vgg16:

def __init__(self, imgs, weights=None, sess=None):

self.imgs = imgs

self.convlayers()

self.fc_layers()

self.W=self.fc2

#计算softmax层输出

if weights is not None and sess is not None: #载入pre-training的权重

self.load_weights(weights, sess)

def convlayers(self):

self.parameters = []

# zero-mean input

# 去RGB均值操作(这里RGB均值为原数据集的均值)

with tf.name_scope('preprocess') as scope:

mean = tf.constant([123.68, 116.779, 103.939],

dtype=tf.float32, shape=[1, 1, 1, 3], name='img_mean')

images = self.imgs-mean

# conv1_1

with tf.name_scope('conv1_1') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 3, 64], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(images, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[64], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv1_1 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv1_2

with tf.name_scope('conv1_2') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 64, 64], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv1_1, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[64], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv1_2 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# pool1

self.pool1 = tf.nn.max_pool(self.conv1_2,

ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1],

padding='SAME',

name='pool1')

# conv2_1

with tf.name_scope('conv2_1') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 64, 128], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.pool1, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[128], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv2_1 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv2_2

with tf.name_scope('conv2_2') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 128, 128], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv2_1, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[128], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv2_2 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# pool2

self.pool2 = tf.nn.max_pool(self.conv2_2,

ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1],

padding='SAME',

name='pool2')

# conv3_1

with tf.name_scope('conv3_1') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 128, 256], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.pool2, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv3_1 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv3_2

with tf.name_scope('conv3_2') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv3_1, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv3_2 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv3_3

with tf.name_scope('conv3_3') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv3_2, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv3_3 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# pool3

self.pool3 = tf.nn.max_pool(self.conv3_3,

ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1],

padding='SAME',

name='pool3')

# conv4_1

with tf.name_scope('conv4_1') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 512], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.pool3, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[512], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv4_1 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv4_2

with tf.name_scope('conv4_2') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 512, 512], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv4_1, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[512], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv4_2 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv4_3

with tf.name_scope('conv4_3') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 512, 512], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv4_2, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[512], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv4_3 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# pool4

self.pool4 = tf.nn.max_pool(self.conv4_3,

ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1],

padding='SAME',

name='pool4')

# conv5_1

with tf.name_scope('conv5_1') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 512, 512], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.pool4, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[512], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv5_1 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv5_2

with tf.name_scope('conv5_2') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 512, 512], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv5_1, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[512], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv5_2 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# conv5_3

with tf.name_scope('conv5_3') as scope:

kernel = tf.Variable(tf.truncated_normal([3, 3, 512, 512], dtype=tf.float32,

stddev=1e-1), name='weights')

conv = tf.nn.conv2d(self.conv5_2, kernel, [1, 1, 1, 1], padding='SAME')

biases = tf.Variable(tf.constant(0.0, shape=[512], dtype=tf.float32),

trainable=True, name='biases')

out = tf.nn.bias_add(conv, biases)

self.conv5_3 = tf.nn.relu(out, name=scope)

self.parameters += [kernel, biases]

# pool5

self.pool5 = tf.nn.max_pool(self.conv5_3,

ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1],

padding='SAME',

name='pool4')

def fc_layers(self):

# fc1

with tf.name_scope('fc1') as scope:

# 取出shape中第一个元素后的元素 例如x=[1,2,3] -->x[1:]=[2,3]

# np.prod是计算数组的元素乘积 x=[2,3] np.prod(x) = 2*3 = 6

# 这里代码可以使用 shape = self.pool5.get_shape()

#shape = shape[1].value * shape[2].value * shape[3].value 代替

shape = int(np.prod(self.pool5.get_shape()[1:]))

fc1w = tf.Variable(tf.truncated_normal([shape, 4096],

dtype=tf.float32,

stddev=1e-1), name='weights')

fc1b = tf.Variable(tf.constant(1.0, shape=[4096], dtype=tf.float32),

trainable=True, name='biases')

pool5_flat = tf.reshape(self.pool5, [-1, shape])

fc1l = tf.nn.bias_add(tf.matmul(pool5_flat, fc1w), fc1b)

self.fc1 = tf.nn.relu(fc1l)

self.parameters += [fc1w, fc1b]

# fc2

with tf.name_scope('fc2') as scope:

fc2w = tf.Variable(tf.truncated_normal([4096, 4096],

dtype=tf.float32,

stddev=1e-1), name='weights')

fc2b = tf.Variable(tf.constant(1.0, shape=[4096], dtype=tf.float32),

trainable=True, name='biases')

fc2l = tf.nn.bias_add(tf.matmul(self.fc1, fc2w), fc2b)

self.fc2 = tf.nn.relu(fc2l)

self.parameters += [fc2w, fc2b]

def load_weights(self, weight_file, sess):

weights = np.load(weight_file)

keys = sorted(weights.keys())

keys=keys[0:len(keys)-2]

for i, k in enumerate(keys):

print(i, k, np.shape(weights[k]))

sess.run(self.parameters[i].assign(weights[k]))

if __name__ == '__main__':

sess = tf.Session()

imgs = tf.placeholder(tf.float32, [None, 224, 224, 3])

vgg = vgg16(imgs, 'E:/VGG16/vgg16_weights.npz', sess) # 载入预训练好的模型权重

img1 = imread('E:/VQA/VQA/data/test_img.jpg', mode='RGB') #载入需要判别的图片

img1 = imresize(img1, (224, 224))

img2 = imread('E:/VQA/VQA/data/test_img1.jpg', mode='RGB')

img2 = imresize(img2, (224, 224))

img3 = imread('E:/VQA/VQA/data/test_img2.jpg', mode='RGB')

img3 = imresize(img3, (224, 224))

#计算VGG16的softmax层输出(返回是列表,每个元素代表一个判别类型的数组)

prob = sess.run(vgg.W, feed_dict={vgg.imgs: [img1,img2,img3]})

prob.shape

prob[1].shape

print(prob)

MLP分类(当然也可以用CNN(softmax)直接分类)

如果需要在4096的特征值上加一些除图片之外的特征可以在建个模型MLP(简单暴力)

Batch 做样本的按分段提取(可以用shuffle打乱数据训练效果更好)

#batch

def n_batch(i,size):

batch_x=input_data[i*size:(i+1)*size,:]

batch_y=label[i*size:(i+1)*size,:]

return batch_x,batch_y

in_units = 4480 #输入节点数

h1_units = 1024 #隐含层节点数

out=100

W1 = tf.Variable(tf.truncated_normal([in_units, h1_units], stddev=0.1)) #初始化隐含层权重W1,服从默认均值为0,标准差为0.1的截断正态分布

b1 = tf.Variable(tf.zeros([h1_units])) #隐含层偏置b1全部初始化为0

W2 = tf.Variable(tf.zeros([h1_units, out]))

b2 = tf.Variable(tf.zeros([out]))

x = tf.placeholder(tf.float32, [None, in_units])

keep_prob = tf.placeholder(tf.float32) #Dropout失活率

#定义模型结构

hidden1 = tf.nn.relu(tf.matmul(x, W1) + b1)

hidden1_drop = tf.nn.dropout(hidden1, keep_prob)

y = tf.nn.softmax(tf.matmul(hidden1_drop, W2) + b2)

#训练部分

y_ = tf.placeholder(tf.float32, [None, out])

cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y+0.00000000000001), reduction_indices=[1]))

train_step = tf.train.AdamOptimizer(0.001).minimize(cross_entropy)

#定义一个InteractiveSession会话并初始化全部变量

correct_prediction = tf.equal(tf.arg_max(y, 1), tf.arg_max(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

saver = tf.train.Saver()

init=tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

for num in range(150):

for i in range(0,100):

batch_x,batch_y=n_batch(i,2000)

sess.run(train_step,feed_dict={x: batch_x, y_: batch_y, keep_prob: 0.75})

if i % 100 ==0:

#训练过程每200步在测试集上验证一下准确率,动态显示训练过程

ll=sess.run(accuracy,feed_dict={x:batch_x,y_:batch_y,keep_prob:1})

loss=sess.run(cross_entropy,feed_dict={x:batch_x,y_:batch_y,keep_prob:1})

print(num,i, 'training_arruracy:',ll,loss)

test_ll=sess.run(accuracy,feed_dict={x:tx[0:100],y_:ty[0:100],keep_prob:1})

test_loss=sess.run(cross_entropy,feed_dict={x:tx[0:100],y_:ty[0:100],keep_prob:1})

print(num,i, 'test:',test_ll,test_loss)

if num==99:

saver.save(sess, 'E:/mymodel2/model.ckpt', global_step=num)

print('final_accuracy:', ll,loss,test_ll,test_loss)