Background

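We often need to load large volumes of data into Redis so it can be queried. The example here imports 200 million mobile phone numbers into a Redis server, stored as Sets.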

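Take mobile numbers with the 177 prefix: in theory there are 100 million of them, from 17700000000 to 17799999999. We split these 100 million numbers into 5 equal parts of 20 million numbers each. The keys and their members are assigned as follows: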

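key 177:1: members 17700000000 to 17719999999, 20 million numbers in total

key 177:2: members 17720000000 to 17739999999, 20 million numbers in total

key 177:3: members 17740000000 to 17759999999, 20 million numbers in total

key 177:4: members 17760000000 to 17779999999, 20 million numbers in total

key 177:5: members 17780000000 to 17799999999, 20 million numbers in total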
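
The allocation above comes down to integer arithmetic on the number itself. As a minimal sketch (the function name key_for is hypothetical and not part of the original setup), the key for any 11-digit number could be derived like this, assuming a shell with 64-bit arithmetic:

```shell
# key_for NUMBER: print the Set key that an 11-digit phone number belongs to.
# Assumes the layout described above: a 3-digit prefix, with the remaining
# 100 million numbers split into 5 buckets of 20 million each.
key_for() (
  n=$1
  prefix=$(( n / 100000000 ))                  # first 3 digits, e.g. 177
  bucket=$(( n % 100000000 / 20000000 + 1 ))   # bucket number, 1 to 5
  echo "${prefix}:${bucket}"
)

key_for 17700000000   # prints 177:1
key_for 17739999999   # prints 177:2
key_for 17799999999   # prints 177:5
```

A lookup would then check membership with SISMEMBER against the key this function prints.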

Prerequisites

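Prepare a Linux host with enough free memory. Install the Redis server on it and make sure it is running. In this example its IP address is 192.168.7.214.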

Steps

1. Prepare the command files

Generate 177_1.txt, 177_2.txt, 177_3.txt, 177_4.txt and 177_5.txt. Part of 177_1.txt is listed here; the other files follow the same pattern.

sadd 177:1 17700000000

sadd 177:1 17700000001

sadd 177:1 17700000002

sadd 177:1 17700000003

sadd 177:1 17700000004

sadd 177:1 17700000005

....

sadd 177:1 17719999999

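Readers familiar with Redis commands will recognize these: each line adds one member to the Set stored at key 177:1. Assume the files above are saved in the /home/c7user directory.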
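
The original does not say how the command files were produced. One possible way, sketched here with a hypothetical helper named gen_sadd, is to let awk print the lines. The helper loops over the 8-digit suffix only, so the counter stays a small integer regardless of the awk implementation:

```shell
# gen_sadd KEY PREFIX LO HI: print one "sadd KEY PREFIX<8-digit suffix>" line
# for every suffix from LO to HI inclusive. The suffix is zero-padded to
# 8 digits, so PREFIX plus suffix forms an 11-digit number.
gen_sadd() {
  awk -v key="$1" -v prefix="$2" -v lo="$3" -v hi="$4" 'BEGIN {
    for (i = lo; i <= hi; i++) printf "sadd %s %s%08d\n", key, prefix, i
  }'
}

# Small demonstration: the first three lines of 177_1.txt.
gen_sadd 177:1 177 0 2

# Full files (20 million lines each), commented out because they take a while:
# gen_sadd 177:1 177 0        19999999 > /home/c7user/177_1.txt
# gen_sadd 177:2 177 20000000 39999999 > /home/c7user/177_2.txt
```

Each full file holds 20 million lines of roughly 23 bytes, so expect several hundred megabytes of disk per file.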

2. Write the shell script

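To take network bandwidth out of the picture, write the script on the same host that runs Redis. To measure how long it takes to import just the 20 million numbers in 177_1.txt, create a file named import.sh with the following content: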

echo $(date)

cat /home/c7user/177_1.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

echo $(date)

The line that pipes the file into redis-cli with the --pipe option is the core command.

Make import.sh executable with the command: chmod 755 import.sh
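
The script above prints two timestamps and leaves the subtraction to the reader. As an optional variation (the helper name timed is hypothetical), the elapsed seconds could be reported directly, assuming a date command that supports +%s:

```shell
# timed CMD [ARGS...]: run a command, then print the elapsed wall-clock
# seconds to stderr and return the command's exit status.
timed() {
  timed_start=$(date +%s)
  "$@"
  timed_status=$?
  echo "elapsed: $(( $(date +%s) - timed_start ))s" >&2
  return "$timed_status"
}

# Hypothetical usage, commented out because it needs a running Redis server:
# timed sh -c 'cat /home/c7user/177_1.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe'
```

Wrapping the pipeline in sh -c makes the timer cover both cat and redis-cli rather than only the first command.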

To import several files, append the corresponding lines to import.sh, for example:

cat /home/c7user/177_1.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/177_2.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/177_3.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/177_4.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/177_5.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/189_1.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/189_2.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/189_3.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/189_4.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe

cat /home/c7user/189_5.txt | ./redis-cli -h 192.168.7.214 -a xxxxx --pipe
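
The ten lines above differ only in the file name, so they could also be written as a loop. This is a sketch under the assumption that the files follow the <prefix>_<part>.txt naming shown above; the function name import_all is hypothetical, and the file is passed on stdin instead of through cat:

```shell
# import_all DIR CMD [ARGS...]: feed DIR/<prefix>_<part>.txt to CMD on stdin,
# one file at a time, stopping at the first command that fails.
# The prefixes and part numbers mirror the file names listed above.
import_all() {
  dir=$1
  shift
  for prefix in 177 189; do
    for part in 1 2 3 4 5; do
      "$@" < "$dir/${prefix}_${part}.txt" || return 1
    done
  done
}

# Hypothetical usage, commented out because it needs a running Redis server:
# import_all /home/c7user ./redis-cli -h 192.168.7.214 -a xxxxx --pipe
```

Stopping at the first failure keeps a partial import from going unnoticed, which the flat list of commands would not do.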

3. Run and observe

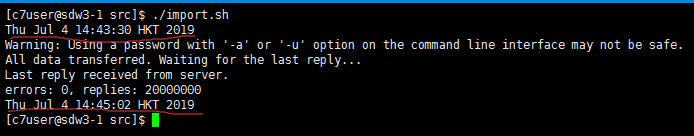

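Finally, run import.sh. As the screenshot shows, importing 20 million numbers into a single key took 92 seconds, which is very fast. Importing through a client API in a higher-level language such as Java or C would probably not be this fast, even with pipelining, although that is an estimate and not something measured here.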
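
To put the 92 seconds in perspective, the figure can be converted into commands per second. The helper name throughput below is hypothetical; it only does integer division on the two numbers reported above:

```shell
# throughput TOTAL SECONDS: print whole commands per second (integer division).
throughput() {
  echo $(( $1 / $2 ))
}

throughput 20000000 92   # prints 217391
```

That is roughly 217,000 sadd commands per second for this single run on this particular host.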

References

https://redis.io/topics/mass-insert#redis-mass-insertion

Redis Mass Insertion

Sometimes Redis instances need to be loaded with a big amount of preexisting or user generated data in a short amount of time, so that millions of keys will be created as fast as possible.

This is called a mass insertion, and the goal of this document is to provide information about how to feed Redis with data as fast as possible.

Use the protocol, Luke

Using a normal Redis client to perform mass insertion is not a good idea for a few reasons: the naive approach of sending one command after the other is slow because you have to pay for the round trip time for every command. It is possible to use pipelining, but for mass insertion of many records you need to write new commands while you read replies at the same time to make sure you are inserting as fast as possible.

Only a small percentage of clients support non-blocking I/O, and not all the clients are able to parse the replies in an efficient way in order to maximize throughput. For all these reasons the preferred way to mass import data into Redis is to generate a text file containing the Redis protocol, in raw format, in order to call the commands needed to insert the required data.
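
The command files in this article use plain inline commands, not the raw protocol the excerpt recommends. For comparison, here is a minimal sketch of how a single SADD could be encoded in the raw protocol from the shell. The function name resp_sadd is hypothetical, and it assumes ASCII arguments so that character counts equal byte counts:

```shell
# resp_sadd KEY MEMBER: print one SADD command in raw Redis protocol.
# Layout: "*3" (three arguments), then each argument as "$<length>" followed
# by the argument itself, with every line terminated by CRLF.
resp_sadd() {
  printf '*3\r\n$4\r\nSADD\r\n$%d\r\n%s\r\n$%d\r\n%s\r\n' \
    "${#1}" "$1" "${#2}" "$2"
}

# Show the encoding with the carriage returns stripped for readability.
resp_sadd 177:1 17700000000 | tr -d '\r'
```

The output of resp_sadd, with the carriage returns left in, can be piped into redis-cli --pipe in the same way as the text files above.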