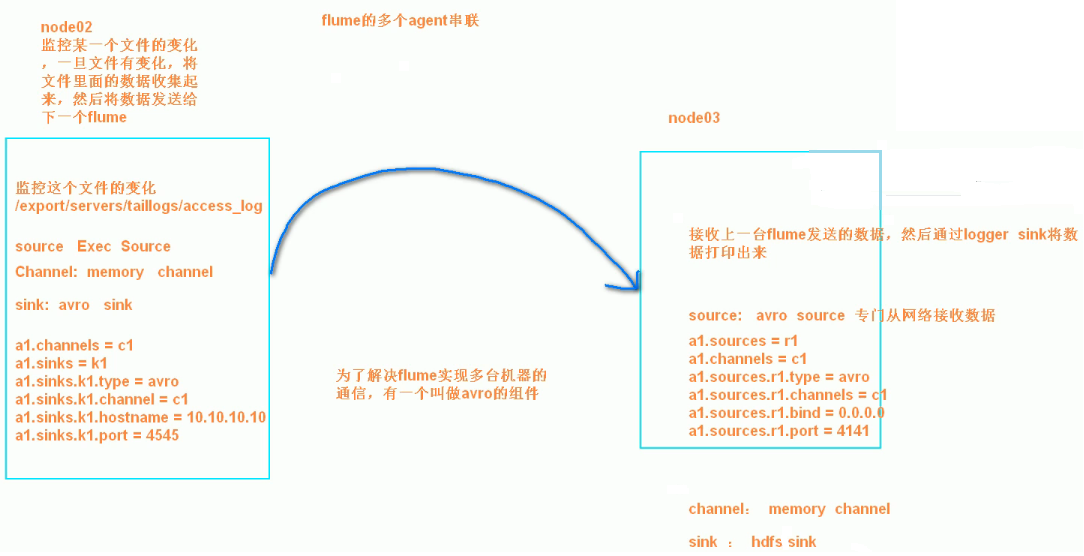

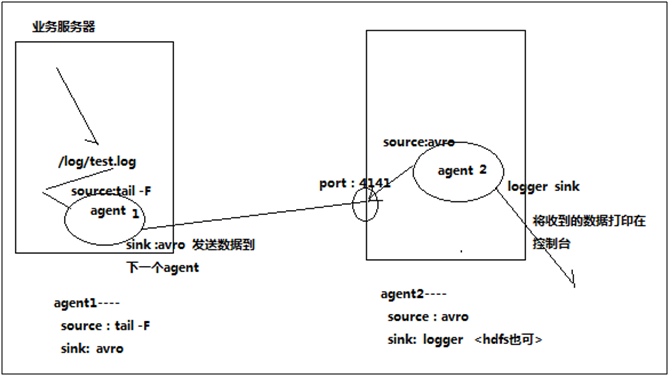

3、两个agent级联

需求分析:

第一个agent负责收集文件当中的数据,通过网络发送到第二个agent当中去,第二个agent负责接收第一个agent发送的数据,并将数据保存到hdfs上面去

第一步:node02安装flume

将node03机器上面解压后的flume文件夹拷贝到node02机器上面去

cd /export/servers

scp -r apache-flume-1.6.0-cdh5.14.0-bin/ node02:$PWD

第二步:node02配置flume配置文件

在node02机器配置我们的flume

cd /export/servers/apache-flume-1.6.0-cdh5.14.0-bin/conf

vim tail-avro-avro-logger.conf

##################

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /export/servers/taillogs/access_log

a1.sources.r1.channels = c1

# Describe the sink

##sink端的avro是一个数据发送者

a1.sinks = k1

a1.sinks.k1.type = avro

a1.sinks.k1.channel = c1

a1.sinks.k1.hostname = 192.168.52.120

a1.sinks.k1.port = 4141

a1.sinks.k1.batch-size = 10

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

第三步:node02开发脚本文件,往文件写入数据

直接将node03下面的脚本和数据拷贝到node02即可,node03机器上执行以下命令

cd /export/servers

scp -r shells/ taillogs/ node02:$PWD

第五步:node03开发flume配置文件

在node03机器上开发flume的配置文件

cd /export/servers/apache-flume-1.6.0-cdh5.14.0-bin/conf

vim avro-hdfs.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

##source中的avro组件是一个接收者服务

a1.sources.r1.type = avro

a1.sources.r1.channels = c1

a1.sources.r1.bind = 192.168.52.120

a1.sources.r1.port = 4141

# Describe the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://node01:8020/avro/hdfs/%y-%m-%d/%H%M/

a1.sinks.k1.hdfs.filePrefix = events-

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

a1.sinks.k1.hdfs.roundUnit = minute

a1.sinks.k1.hdfs.rollInterval = 3

a1.sinks.k1.hdfs.rollSize = 20

a1.sinks.k1.hdfs.rollCount = 5

a1.sinks.k1.hdfs.batchSize = 1

a1.sinks.k1.hdfs.useLocalTimeStamp = true

#生成的文件类型,默认是Sequencefile,可用DataStream,则为普通文本

a1.sinks.k1.hdfs.fileType = DataStream

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

第六步:顺序启动

node03机器启动flume进程

cd /export/servers/apache-flume-1.6.0-cdh5.14.0-bin

bin/flume-ng agent -c conf -f conf/avro-hdfs.conf -n a1 -Dflume.root.logger=INFO,console

node02机器启动flume进程

cd /export/servers/apache-flume-1.6.0-cdh5.14.0-bin/

bin/flume-ng agent -c conf -f conf/tail-avro-avro-logger.conf -n a1 -Dflume.root.logger=INFO,console

node02机器启shell脚本生成文件

cd /export/servers/shells

sh tail-file.sh