import tensorflow as tf TRAINING_STEPS = 10 LEARNING_RATE = 1 x = tf.Variable(tf.constant(5, dtype=tf.float32), name="x") y = tf.square(x) train_op = tf.train.GradientDescentOptimizer(LEARNING_RATE).minimize(y) with tf.Session() as sess: sess.run(tf.global_variables_initializer()) for i in range(TRAINING_STEPS): sess.run(train_op) x_value = sess.run(x) print( "After %s iteration(s): x%s is %f."% (i+1, i+1, x_value) )

TRAINING_STEPS = 1000 LEARNING_RATE = 0.001 x = tf.Variable(tf.constant(5, dtype=tf.float32), name="x") y = tf.square(x) train_op = tf.train.GradientDescentOptimizer(LEARNING_RATE).minimize(y) with tf.Session() as sess: sess.run(tf.global_variables_initializer()) for i in range(TRAINING_STEPS): sess.run(train_op) if i % 100 == 0: x_value = sess.run(x) print("After %s iteration(s): x%s is %f."% (i+1, i+1, x_value))

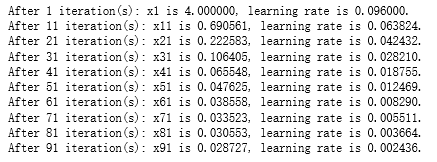

TRAINING_STEPS = 100 global_step = tf.Variable(0) LEARNING_RATE = tf.train.exponential_decay(0.1, global_step, 1, 0.96, staircase=True) x = tf.Variable(tf.constant(5, dtype=tf.float32), name="x") y = tf.square(x) train_op = tf.train.GradientDescentOptimizer(LEARNING_RATE).minimize(y, global_step=global_step) with tf.Session() as sess: sess.run(tf.global_variables_initializer()) for i in range(TRAINING_STEPS): sess.run(train_op) if i % 10 == 0: LEARNING_RATE_value = sess.run(LEARNING_RATE) x_value = sess.run(x) print ("After %s iteration(s): x%s is %f, learning rate is %f."% (i+1, i+1, x_value, LEARNING_RATE_value))