Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/bless2015/article/details/89839166

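First, define a function that builds one layer. It creates the weights and the biases, computes the linear combination, and applies the activation function.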

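import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.Session)
import numpy as np
import matplotlib.pyplot as plt

def add_layer(inputs, in_size, out_size, activation_function=None):
    # Weight matrix drawn from a standard normal distribution
    Weights = tf.Variable(tf.random_normal([in_size, out_size]), name='W')
    # Biases start at 0.1 rather than 0
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1, name='b')
    Wx_plus_b = tf.add(tf.matmul(inputs, Weights), biases)
    if activation_function is None:
        # No activation: the layer is purely linear
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs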

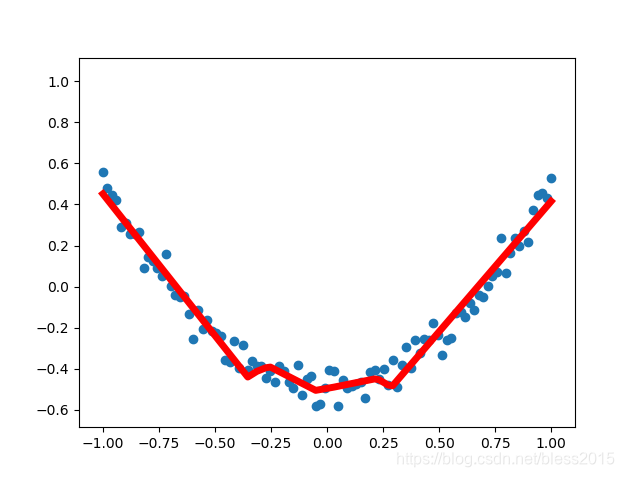
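To make the shapes concrete, here is a minimal NumPy sketch of the computation that `add_layer` describes. It is not the TensorFlow implementation: `dense_forward` and `relu` are hypothetical names introduced only for illustration, and the seed and the five-sample input are arbitrary assumptions.

```python
import numpy as np

def relu(z):
    # Element-wise max(0, z), the function that tf.nn.relu computes.
    return np.maximum(z, 0.0)

def dense_forward(inputs, weights, biases, activation=None):
    # inputs: (batch, in_size), weights: (in_size, out_size), biases: (1, out_size)
    z = inputs @ weights + biases  # biases broadcast across the batch dimension
    return z if activation is None else activation(z)

rng = np.random.default_rng(0)            # arbitrary seed (assumption)
x = np.linspace(-1, 1, 5)[:, np.newaxis]  # 5 samples, 1 feature each
w = rng.normal(size=(1, 10))              # mirrors tf.random_normal([1, 10])
b = np.zeros((1, 10)) + 0.1               # mirrors tf.zeros([1, 10]) + 0.1

hidden = dense_forward(x, w, b, activation=relu)
print(hidden.shape)  # (5, 10)
```

Each input row becomes one output row, so a batch of any size passes through the layer unchanged in its first dimension.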

Next, add some test data.

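# 100 evenly spaced inputs in [-1, 1], reshaped into a column
x_data = np.linspace(-1, 1, 100)[:, np.newaxis]
# Gaussian noise with mean 0 and standard deviation 0.05
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise
# Placeholders for the inputs and targets; None leaves the batch size open
xs = tf.placeholder(tf.float32, [None, 1], name='x_input')
ys = tf.placeholder(tf.float32, [None, 1], name='y_input')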
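The placeholders are declared with shape `[None, 1]`, so the data fed to them has to be two-dimensional with one feature per row. The short NumPy sketch below shows what `[:, np.newaxis]` does to the array shape. The names `x_flat`, `x_col`, and `y_clean` are introduced only for this illustration.

```python
import numpy as np

x_flat = np.linspace(-1, 1, 100)   # 1-D array of 100 values
x_col = x_flat[:, np.newaxis]      # 2-D column: one sample per row
print(x_flat.shape, x_col.shape)   # (100,) (100, 1)

# The noise-free target keeps the column shape, so it matches [None, 1] too.
y_clean = np.square(x_col) - 0.5
print(y_clean.shape)               # (100, 1)
```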

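# Define the layers: a hidden layer with 10 ReLU units and a linear output layer
l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
prediction = add_layer(l1, 10, 1, activation_function=None)
# Define the loss (mean squared error) and the optimizer
# reduction_indices is the older name for the axis argument
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), reduction_indices=[1]))
# Plain gradient descent with a learning rate of 0.1
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)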
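The loss sums the squared errors of each sample along axis 1 (written as `reduction_indices=[1]` in the code) and then averages over the batch. As a rough sketch of the same arithmetic in NumPy, with `mse_loss` being a hypothetical helper and the three-sample arrays being made-up values:

```python
import numpy as np

def mse_loss(y_true, y_pred):
    # Sum squared errors over the feature axis, then average over the batch.
    per_sample = np.sum(np.square(y_true - y_pred), axis=1)
    return np.mean(per_sample)

y_true = np.array([[1.0], [2.0], [3.0]])
y_pred = np.array([[1.5], [2.0], [2.0]])

# Squared errors are 0.25, 0.0 and 1.0, so the loss is 1.25 / 3.
print(mse_loss(y_true, y_pred))
```

With a single output column the inner sum has only one term per sample, so the result is the ordinary mean squared error.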

Once the graph is defined, run it with tf.Session().

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    # Visualization: scatter-plot the training data
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    ax.scatter(x_data, y_data)
    plt.ion()  # interactive mode, so plt.show() does not block
    plt.show()
    #------------------
    for i in range(2000):
        sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
        if i % 50 == 0:
            prediction_value = sess.run(prediction, feed_dict={xs: x_data})
            # Visualization: remove the previous fitted curve, then draw the new one
            try:
                lines[0].remove()
            except NameError:
                pass  # nothing has been drawn yet on the first pass
            lines = ax.plot(x_data, prediction_value, 'r-', lw=5)
            plt.pause(0.1)
    #--------------------
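To give a feel for what each `sess.run(train_step, ...)` call does, the sketch below trains the same 1 → 10 → 1 network with hand-written gradients in plain NumPy. It is an illustration under stated assumptions, not what TensorFlow executes internally: the gradient formulas are derived by hand for this specific network, the seed is arbitrary, and `forward` and `loss_fn` are hypothetical helper names. Plotting is left out.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary seed, chosen only for repeatability

# Same synthetic data as in the post: y = x^2 - 0.5 plus Gaussian noise.
x = np.linspace(-1, 1, 100)[:, np.newaxis]
y = np.square(x) - 0.5 + rng.normal(0, 0.05, x.shape)

# Parameters of the 1 -> 10 -> 1 network, initialised the way add_layer does.
w1, b1 = rng.normal(size=(1, 10)), np.full((1, 10), 0.1)
w2, b2 = rng.normal(size=(10, 1)), np.full((1, 1), 0.1)

def forward(inputs):
    z = inputs @ w1 + b1        # hidden pre-activation
    h = np.maximum(z, 0.0)      # ReLU
    return z, h, h @ w2 + b2    # linear output layer

def loss_fn(pred):
    return np.mean(np.sum(np.square(y - pred), axis=1))

learning_rate = 0.1
initial_loss = loss_fn(forward(x)[2])

for step in range(2000):
    z, h, pred = forward(x)
    # Backpropagate the gradient of the loss through both layers.
    d_pred = 2.0 * (pred - y) / len(x)
    d_w2 = h.T @ d_pred
    d_b2 = d_pred.sum(axis=0, keepdims=True)
    d_z = (d_pred @ w2.T) * (z > 0)   # ReLU passes gradient only where z > 0
    d_w1 = x.T @ d_z
    d_b1 = d_z.sum(axis=0, keepdims=True)
    # Plain gradient descent update on every parameter.
    w1 -= learning_rate * d_w1
    b1 -= learning_rate * d_b1
    w2 -= learning_rate * d_w2
    b2 -= learning_rate * d_b2

final_loss = loss_fn(forward(x)[2])
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

In the TensorFlow version these gradients are derived automatically from the graph, which is why the post only needs to call `minimize(loss)`.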