Introduction to Hue

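Hue is an open-source web UI for Apache Hadoop. It evolved from Cloudera Desktop, was contributed to the open-source community by Cloudera, and is built on the Python web framework Django. With Hue we can interact with a Hadoop cluster from a web console in the browser to analyze and process data: for example, working with data on HDFS, running MapReduce jobs, and running Hive queries.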

Building Hue

Install the dependency packages (as root):

[root@hadoop-01 ~]# yum install -y ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc \
    gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make mysql mysql-devel openldap-devel python-devel sqlite-devel gmp-devel
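As an optional preflight check before building, the Python sketch below reports which of the command-line tools installed above are still missing from PATH. It is not part of Hue: missing_tools and REQUIRED_TOOLS are hypothetical names, and it only covers packages that ship an executable. The -devel packages install headers and libraries, which this check does not look at.

```python
import shutil

# Commands that the packages installed above should provide. This only checks
# executables on PATH; -devel packages install headers/libraries, not commands.
REQUIRED_TOOLS = ["gcc", "g++", "make", "ant", "asciidoc", "mysql"]


def missing_tools(tools, which=shutil.which):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing build tools: " + ", ".join(missing))
    else:
        print("All build tools found on PATH.")
```

The which parameter defaults to shutil.which but can be swapped for a stub, which keeps the function easy to test without touching the real system.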

Set the environment variables

[hadoop@hadoop-01 ~]$ vim ./.bash_profile

export HUE_HOME=/home/hadoop/app/hue-3.9.0-cdh5.7.0
export PATH=$HUE_HOME/build/env/bin:$PATH

[hadoop@hadoop-01 ~]$ source ./.bash_profile
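After sourcing the profile, the Python sketch below checks that HUE_HOME is set and that at least one PATH entry points inside it. check_hue_env is a hypothetical helper that only inspects environment variables; it does not verify that the directory exists or that Hue has been built yet.

```python
import os


def check_hue_env(env=None):
    """Return a list of problems with HUE_HOME / PATH (empty list = looks OK)."""
    env = os.environ if env is None else env
    problems = []
    hue_home = env.get("HUE_HOME")
    if not hue_home:
        problems.append("HUE_HOME is not set")
        return problems
    # Accept any PATH entry located under HUE_HOME, whichever bin dir was exported.
    prefix = hue_home.rstrip("/") + "/"
    path_entries = env.get("PATH", "").split(os.pathsep)
    if not any(entry.startswith(prefix) for entry in path_entries):
        problems.append("PATH has no entry under " + hue_home)
    return problems


if __name__ == "__main__":
    for problem in check_hue_env() or ["environment looks OK"]:
        print(problem)
```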

Download and build Hue

# Download the tarball (into ~/software here) and extract it into ~/app
[hadoop@hadoop-01 software]$ wget http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.7.0.tar.gz
[hadoop@hadoop-01 ~]$ tar -zxvf ~/software/hue-3.9.0-cdh5.7.0.tar.gz -C ~/app/

# Build. make apps downloads many packages, so the build time depends on your network speed
[hadoop@hadoop-01 ~]$ cd ~/app/hue-3.9.0-cdh5.7.0/
[hadoop@hadoop-01 hue-3.9.0-cdh5.7.0]$ make apps

If the build ends with output like the following (a line ending in "N post-processed."), Hue was built successfully:

1190 static files copied to '/home/hadoop/app/hue-3.9.0-cdh5.7.0/build/static', 1190 post-processed.
make[1]: Leaving directory `/home/hadoop/app/hue-3.9.0-cdh5.7.0/apps'
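If the build output is long, the success line can be found programmatically. The Python sketch below searches a saved build log for the summary line shown above and returns its counts. STATIC_LINE and build_succeeded are hypothetical names, and the pattern assumes the exact wording shown above; other Django versions may print extra counts in that line.

```python
import re

# Matches the summary line printed at the end of `make apps`, e.g.
# "1190 static files copied to '/.../build/static', 1190 post-processed."
STATIC_LINE = re.compile(
    r"(?P<copied>\d+) static files copied to '(?P<dest>[^']+)', "
    r"(?P<processed>\d+) post-processed\."
)


def build_succeeded(log_text):
    """Return (copied, processed, dest) if the summary line is present, else None."""
    match = STATIC_LINE.search(log_text)
    if match is None:
        return None
    return int(match["copied"]), int(match["processed"]), match["dest"]
```

To use it, save the output with something like make apps 2>&1 | tee build.log (build.log is an arbitrary file name) and pass the file's contents to build_succeeded; None means the summary line never appeared.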

Edit the configuration file

Modify hue.ini ($HUE_HOME/desktop/conf/hue.ini):

[hadoop@hadoop-01 ~]$ cd $HUE_HOME/desktop/conf
[hadoop@hadoop-01 conf]$ ll
total 56
-rw-r--r-- 1 hadoop hadoop 49111 Apr 27 11:09 hue.ini
-rw-r--r-- 1 hadoop hadoop 1843 Mar 24 2016 log4j.properties
-rw-r--r-- 1 hadoop hadoop 1721 Mar 24 2016 log.conf
[hadoop@hadoop-01 conf]$ pwd
/home/hadoop/app/hue-3.9.0-cdh5.7.0/desktop/conf
[hadoop@hadoop-01 conf]$ vim hue.ini

[desktop]
# Set this to a random string, the longer the better.
# This is used for secure hashing in the session store.
secret_key=jFE93j;2[290-eiw.KEiwN2s3['d;/.q[eIW^y#e=+Iei*@Mn<qW5o

# Webserver listens on this address and port
# Set http_host to your own host name
http_host=hadoop-01
http_port=8888

# Time zone name
time_zone=Asia/Shanghai
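secret_key should be your own random string rather than the sample value. Hue loads hue.ini with the configobj library, which treats # in an unquoted value as the start of an inline comment and , as a list separator, so the sample key above (which contains a #) is likely cut short when parsed. The Python sketch below uses the standard secrets module to build a key from printable ASCII minus those characters, quotes and %; make_secret_key and the 60-character default are arbitrary, hypothetical choices.

```python
import secrets
import string

# Characters left out of the key: '#' starts an inline comment, ',' separates
# list items, quotes change how a value is parsed, and '%' is dropped to stay
# clear of any value interpolation.
UNSAFE = set("#'\",%")
ALPHABET = "".join(
    c for c in string.ascii_letters + string.digits + string.punctuation
    if c not in UNSAFE
)


def make_secret_key(length=60):
    """Return a random secret_key value that can be pasted into hue.ini unquoted."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print("secret_key=" + make_secret_key())
```

Paste the printed line over the sample secret_key in the [desktop] section.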

Hadoop integration settings

Modify the Hadoop configuration files ($HADOOP_HOME/etc/hadoop/):

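1. hdfs-site.xml

<!-- Enable the WebHDFS REST API, which Hue uses to access HDFS -->
<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
</property>
<!-- Turn off HDFS permission checking -->
<property>
  <name>dfs.permissions.enabled</name>
  <value>false</value>
</property>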

2. core-site.xml

<!-- Let the hue and hadoop users act as proxy users (impersonate other users) from any host, for any group -->
<property>
  <name>hadoop.proxyuser.hue.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hue.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hadoop.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hadoop.groups</name>
  <value>*</value>
</property>

3. yarn-site.xml

<!-- Enable aggregation of YARN application logs to HDFS -->
<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>
<!-- Maximum time, in seconds, to keep aggregated logs on HDFS: 259200 s = 3 days -->
<property>
  <name>yarn.log-aggregation.retain-seconds</name>
  <value>259200</value>
</property>

4. httpfs-site.xml

<property>
  <name>httpfs.proxyuser.hue.hosts</name>
  <value>*</value>
</property>
<property>
  <name>httpfs.proxyuser.hue.groups</name>
  <value>*</value>
</property>
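After editing the four files, a quick way to catch typos in property names or values is to parse each file and compare it with the settings listed above. The Python sketch below does that with the standard library's xml.etree.ElementTree. EXPECTED, read_properties and missing_or_wrong are hypothetical names, and the script assumes HADOOP_HOME is set and the files live in $HADOOP_HOME/etc/hadoop/.

```python
import os
import xml.etree.ElementTree as ET

# The properties added in the steps above, keyed by file name.
EXPECTED = {
    "hdfs-site.xml": {"dfs.webhdfs.enabled": "true",
                      "dfs.permissions.enabled": "false"},
    "core-site.xml": {"hadoop.proxyuser.hue.hosts": "*",
                      "hadoop.proxyuser.hue.groups": "*",
                      "hadoop.proxyuser.hadoop.hosts": "*",
                      "hadoop.proxyuser.hadoop.groups": "*"},
    "yarn-site.xml": {"yarn.log-aggregation-enable": "true",
                      # 3 days * 24 hours * 3600 seconds = 259200 seconds
                      "yarn.log-aggregation.retain-seconds": str(3 * 24 * 3600)},
    "httpfs-site.xml": {"httpfs.proxyuser.hue.hosts": "*",
                        "httpfs.proxyuser.hue.groups": "*"},
}


def read_properties(xml_data):
    """Parse a Hadoop *-site.xml document (str or bytes) into {name: value}."""
    root = ET.fromstring(xml_data)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name", default="").strip()
        value = prop.findtext("value", default="").strip()
        if name:
            props[name] = value
    return props


def missing_or_wrong(file_name, xml_data):
    """Return the expected properties that are absent or have another value."""
    actual = read_properties(xml_data)
    return {name: want for name, want in EXPECTED[file_name].items()
            if actual.get(name) != want}


if __name__ == "__main__":
    conf_dir = os.path.join(os.environ.get("HADOOP_HOME", ""), "etc", "hadoop")
    for file_name in EXPECTED:
        path = os.path.join(conf_dir, file_name)
        if not os.path.exists(path):
            print(file_name, "not found")
            continue
        # Read bytes so an <?xml ... encoding=...?> declaration is handled.
        with open(path, "rb") as f:
            print(file_name, missing_or_wrong(file_name, f.read()) or "OK")
```

Each file prints either OK or a dict of the properties that still need attention, with the value they are expected to have.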

Then modify hue.ini ($HUE_HOME/desktop/conf/hue.ini):

[hadoop]
# Configuration for HDFS NameNode
# ------------------------------------------------------------------------
[[hdfs_clusters]]
# HA support by using HttpFs
[[[default]]]
# Enter the filesystem uri
# Use your own host name and HDFS port (the value of fs.defaultFS in core-site.xml)
fs_defaultfs=hdfs://hadoop-01:9000
# NameNode logical name.
## logical_name=
# Use WebHdfs/HttpFs as the communication mechanism.
# Domain should be the NameNode or HttpFs host.
# Default port is 14000 for HttpFs.
# Use your own host name
webhdfs_url=http://hadoop-01:50070/webhdfs/v1

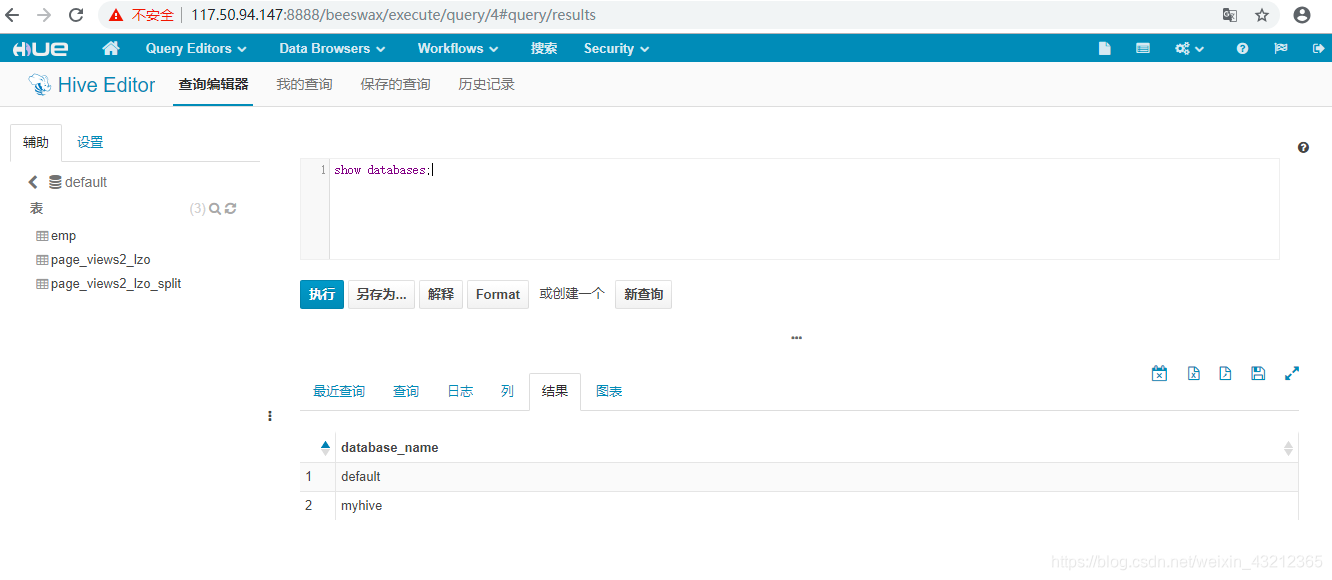
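Before starting Hue, it can help to confirm that the webhdfs_url value in hue.ini actually answers. The Python sketch below calls the WebHDFS LISTSTATUS operation with the user.name query parameter, which assumes simple (non-Kerberos) authentication. webhdfs_url and list_hdfs_dir are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request


def webhdfs_url(base_url, path="/", op="LISTSTATUS", user="hadoop"):
    """Build a WebHDFS REST URL from the webhdfs_url value in hue.ini."""
    query = urllib.parse.urlencode({"op": op, "user.name": user})
    return base_url.rstrip("/") + "/" + path.lstrip("/") + "?" + query


def list_hdfs_dir(base_url, path="/", user="hadoop", timeout=10):
    """Return the entry names WebHDFS reports for `path` (raises on HTTP errors)."""
    with urllib.request.urlopen(webhdfs_url(base_url, path, user=user),
                                timeout=timeout) as resp:
        data = json.load(resp)
    # LISTSTATUS responds with {"FileStatuses": {"FileStatus": [...]}}
    return [status["pathSuffix"] for status in data["FileStatuses"]["FileStatus"]]
```

For example, list_hdfs_dir("http://hadoop-01:50070/webhdfs/v1", "/") should return the names of the top-level HDFS entries when WebHDFS is enabled. An HTTP error or a refused connection points back at dfs.webhdfs.enabled or at the host and port in webhdfs_url.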

Integrating Hue with Hive

Modify the Hive configuration file ($HIVE_HOME/conf/hive-site.xml):

<property>
  <name>hive.server2.thrift.port</name>
  <value>10000</value>
</property>
<property>
  <name>hive.server2.thrift.bind.host</name>
  <value>hadoop-01</value>
</property>
<property>
  <name>hive.server2.long.polling.timeout</name>
  <value>5000</value>
</property>
<property>
  <name>hive.server2.authentication</name>
  <value>NONE</value>
</property>

Modify hue.ini ($HUE_HOME/desktop/conf/hue.ini):

[beeswax]
# Host where HiveServer2 is running.
# If Kerberos security is enabled, use fully-qualified domain name (FQDN).
hive_server_host=hadoop-01
# Port where HiveServer2 Thrift server runs on.
hive_server_port=10000
# Hive configuration directory, where hive-site.xml is located
hive_conf_dir=/home/hadoop/app/hive-1.1.0-cdh5.7.0/conf/

Configure MySQL. These settings belong to the RDBMS app, so edit the existing [librdbms] > [[databases]] section of hue.ini (not [beeswax]) and uncomment the [[[mysql]]] header by removing its leading ##:

[librdbms]
[[databases]]
# mysql, oracle, or postgresql configuration.
[[[mysql]]]
# Name to show in the UI.
nice_name="My SQL DB"
# For MySQL and PostgreSQL, name is the name of the database.
# For Oracle, Name is instance of the Oracle server. For express edition
# this is 'xe' by default.
name=mysqldb
# Database backend to use. This can be:
# 1. mysql
# 2. postgresql
# 3. oracle
engine=mysql
# IP or hostname of the database to connect to.
host=hadoop-01
# Port the database server is listening to. Defaults are:
# 1. MySQL: 3306
# 2. PostgreSQL: 5432
# 3. Oracle Express Edition: 1521
port=3306
# Username to authenticate with when connecting to the database.
user=root
# Password matching the username to authenticate with when
# connecting to the database.
password=123456
# Database options to send to the server when connecting.
# https://docs.djangoproject.com/en/1.4/ref/databases/
## options={}
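Because hue.ini nests sections with repeated brackets ([librdbms], [[databases]], [[[mysql]]]), a misplaced or still-commented header quietly moves settings into the wrong section. The Python sketch below is a deliberately simplified reader (not configobj, which Hue itself uses to load hue.ini) that maps each nested section path to its key/value pairs. It comes with a hypothetical check_hue_ini that looks for the [beeswax] keys and the [[[mysql]]] section. It assumes the stock layout, where [[[mysql]]] lives under [librdbms] > [[databases]], and it ignores quoting, lists and inline comments.

```python
import re

# "[a]", "[[b]]", "[[[c]]]": the number of brackets gives the nesting depth.
SECTION = re.compile(r"^\s*(\[+)\s*([^\[\]]+?)\s*(\]+)\s*$")


def read_hue_ini(text):
    """Parse hue.ini-style nested sections into {("a", "b"): {key: value}}."""
    path, sections = [], {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # blank or comment (incl. "## [[[x]]]")
            continue
        match = SECTION.match(line)
        if match:
            depth = len(match.group(1))
            path = path[:depth - 1] + [match.group(2)]
            sections.setdefault(tuple(path), {})
            continue
        if "=" in line:
            key, value = line.split("=", 1)
            sections.setdefault(tuple(path), {})[key.strip()] = value.strip()
    return sections


def check_hue_ini(sections):
    """Return problems with the Hive and MySQL settings configured in this guide."""
    problems = []
    beeswax = sections.get(("beeswax",), {})
    for key in ("hive_server_host", "hive_server_port", "hive_conf_dir"):
        if key not in beeswax:
            problems.append("[beeswax] missing " + key)
    if ("librdbms", "databases", "mysql") not in sections:
        problems.append("no [[[mysql]]] section under [librdbms] > [[databases]]")
    return problems
```

Reading $HUE_HOME/desktop/conf/hue.ini with read_hue_ini and passing the result to check_hue_ini should give an empty list once both sections are in place.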

Starting Hue

# Start Hadoop
[hadoop@hadoop-01 ~]$ start-all.sh

# Start HiveServer2
[hadoop@hadoop-01 ~]$ nohup hive --service hiveserver2 >~/app/hive-1.1.0-cdh5.7.0/console.log 2>&1 &
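HiveServer2 started with nohup can take a while before it accepts connections, so running beeline right away may fail. The Python sketch below polls the Thrift port until a TCP connection succeeds. wait_for_port is a hypothetical helper; an open port suggests, but does not guarantee, that HiveServer2 is ready to accept sessions.

```python
import socket
import time


def wait_for_port(host, port, timeout=120.0, interval=2.0):
    """Poll until a TCP connection to host:port succeeds; return True or False."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, wait_for_port("hadoop-01", 10000) returns True once the port set in hive.server2.thrift.port accepts connections, or False after the two-minute default timeout.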

Connect to HiveServer2 (HS2) with beeline. In -n hadoop, hadoop is the user name beeline connects as, i.e. the hadoop user that runs beeline.

[hadoop@hadoop-01 ~]$ beeline -u jdbc:hive2://hadoop-01:10000/default -n hadoop

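If the connection fails with the following error:
Error: Could not open client transport with JDBC Uri: jdbc:hive2://hadoop001:10000: null

Solution: the cause turned out to be the HiveServer2 authentication setting. In hive-site.xml, change the value of hive.server2.authentication from NOSASL (the previous value) to NONE, then restart HiveServer2.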
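The fix works because the client transport has to match the server's hive.server2.authentication mode: NONE still uses a SASL (PLAIN) transport, which is what beeline uses by default, while NOSASL expects a raw Thrift transport that the client has to request with the ;auth=noSasl URL parameter. The hypothetical helper below sketches how the JDBC URL would differ for the two modes; it only builds strings and does not connect.

```python
def hive_jdbc_url(host, port=10000, database="default", auth="NONE"):
    """Build a HiveServer2 JDBC URL for `beeline -u` that matches the server's
    hive.server2.authentication mode (only NONE and NOSASL are handled here)."""
    url = "jdbc:hive2://{}:{}/{}".format(host, port, database)
    if auth.upper() == "NOSASL":
        # A NOSASL server needs the client to skip SASL as well.
        url += ";auth=noSasl"
    return url
```

When passing a URL that contains ; to beeline -u, quote it, because ; is special in the shell: beeline -u "jdbc:hive2://hadoop-01:10000/default;auth=noSasl" -n hadoop.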

# Start Hue

[hadoop@hadoop-01 ~]$ ~/app/hue-3.9.0-cdh5.7.0/build/env/bin/supervisor

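Accessing Hue

Open http://ip:8888 in a browser, where ip is the address of the Hue host and 8888 is the http_port set in hue.ini.

The user created here (the first account, created when you first log in to Hue) must be named hadoop. Otherwise the file browser can only operate on the /user/XXX/ directory in HDFS, and Hive cannot be used either.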
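Since supervisor runs in the foreground and takes some time to start all of Hue's processes, it can help to check from another terminal whether the web server on port 8888 answers before opening the browser. hue_is_up is a hypothetical helper; it treats any HTTP response, including an error page, as "up", and only connection failures or timeouts as "down".

```python
import urllib.error
import urllib.request


def hue_is_up(url, timeout=5.0):
    """Return True if a web server at `url` answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An HTTP error status (4xx/5xx) still means the server is answering.
        return True
    except OSError:
        # URLError (refused connection, unknown host) and timeouts land here.
        return False
```

For example, hue_is_up("http://hadoop-01:8888/") returning False usually means supervisor is still starting, or http_host/http_port in hue.ini do not match the address being used.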