After hitting all sorts of pitfalls, I finally got this running. It is based on a GitHub project with about a thousand stars. Hats off to the sharer!

https://kiwenlau.com/2015/06/08/150608-hadoop-cluster-docker/

https://kiwenlau.com/2016/06/12/160612-hadoop-cluster-docker-update/

https://github.com/kiwenlau/hadoop-cluster-docker/blob/master/Dockerfile

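Preface

Here is the overall idea of the project:

First, take centos as the base image, install Java, Hadoop, and a set of basic tools, and build the Hadoop base image centos7-hadoop. Next, starting from centos7-hadoop, modify the configuration files, start the ssh service, and expose the master ports to build the cluster's master image hadoop-master. Then, again starting from centos7-hadoop, modify the configuration files, start the ssh service, and expose the slave ports to build the cluster's slave image. The master and slave images differ only in which ports they expose, so a later improvement may build a single image that exposes all the ports.

In more detail: when building centos7-hadoop, the JDK and Hadoop archives are kept in the build context folder, so they do not have to be downloaded during the build, which cuts the build time. When building the master and slave images, the context folder holds the configuration files that replace Hadoop's own. That step only uploads a few config files, so it builds quickly. Building the base image first and the master and slave images on top of it keeps the final image builds short.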

I. Build the base image centos7-hadoop

1. Prepare the build context

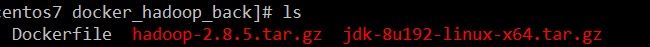

Create a folder, put the downloaded Hadoop and JDK archives in it, and create a Dockerfile there.

mkdir docker-hadoop-base

# Put the downloaded JDK and Hadoop archives in this folder

2. Edit the Dockerfile

vim Dockerfile

File contents:

###############################################
# Dockerfile to build hadoop container images
# Based on Centos
###############################################

# base image
FROM centos

# MainTainer
MAINTAINER neu_wj@163.com

# install java
RUN mkdir /usr/local/java
ADD jdk-8u192-linux-x64.tar.gz /usr/local/java/
RUN mv /usr/local/java/jdk1.8.0_192 /usr/local/java/jdk1.8
ENV JAVA_HOME /usr/local/java/jdk1.8
ENV JRE_HOME ${JAVA_HOME}/jre
ENV CLASSPATH .:${JAVA_HOME}/lib
ENV PATH ${JAVA_HOME}/bin:$PATH

# install hadoop
ADD hadoop-2.8.5.tar.gz /usr/local/
RUN mv /usr/local/hadoop-2.8.5 /usr/local/hadoop
ADD config/hadoop-env.sh /usr/local/hadoop/etc/hadoop/hadoop-env.sh
ENV HADOOP_HOME /usr/local/hadoop
ENV PATH ${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:$PATH

# install ntpdate
RUN yum install -y ntpdate && \
    ntpdate ntp1.aliyun.com

# install ssh
RUN yum install -y openssh-clients && \
    yum install -y openssh-server

# install which
RUN yum install -y which

# install net-tools
RUN yum install -y net-tools
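Before building, it can help to confirm that the context folder really contains every file the Dockerfile adds, since a missing archive only shows up as a failed ADD step partway through the build. The following is a minimal sketch, not part of the original project: the function name check_context is my own, and the file list is simply read off the ADD lines above.

```shell
# check_context DIR: print each required file that is missing from DIR
# and return non-zero if any is missing.
# The file names are taken from the ADD lines of the base Dockerfile;
# check_context itself is a hypothetical helper, not part of docker.
check_context() {
    dir=$1
    missing=0
    for f in Dockerfile \
             jdk-8u192-linux-x64.tar.gz \
             hadoop-2.8.5.tar.gz \
             config/hadoop-env.sh; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}
```

A typical call would be `check_context docker-hadoop-base && echo "context looks complete"`.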

3. Build the image from the Dockerfile

In the folder you just created, run the build command:

docker build -t "centos7-hadoop" .

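Parameters: -t sets the name and tag of the image being built; . sets the build context folder, which here is the current folder. If you want the details, this blog post explains the image build context well.

I will add notes on this Dockerfile later when I have time.

Once the build finishes, docker images shows the image you just built.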
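Beyond listing the image, a quick sanity check is to run a throwaway container and ask Java and Hadoop for their versions, which confirms that the PATH entries set by the ENV lines took effect. This is a sketch under the assumption that the image was tagged centos7-hadoop as above; the helper name verify_base_image is made up for illustration.

```shell
# verify_base_image IMAGE: start a temporary container from IMAGE and print
# the Java and Hadoop versions. The command fails if either binary is not
# on PATH inside the image. (verify_base_image is a hypothetical helper.)
verify_base_image() {
    docker run --rm "$1" sh -c 'java -version && hadoop version'
}
```

Usage: `verify_base_image centos7-hadoop`. The `--rm` flag removes the container once the command exits, so nothing is left behind.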

II. Build the master image

1. Prepare the build context

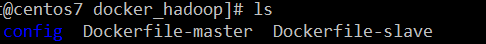

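Create a folder at the same level as the one from I-1 to hold the master build context. It needs a name of its own; docker-hadoop-master below is only an example name.

mkdir docker-hadoop-master

Inside that folder, create a config folder to hold the cluster configuration files:

mkdir config

Prepare the required configuration files in config:

ssh_config configures ssh to skip host key checking. Its contents: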

Host localhost
  StrictHostKeyChecking no

Host 0.0.0.0
  StrictHostKeyChecking no

Host hadoop-*
  StrictHostKeyChecking no
  UserKnownHostsFile=/dev/null

The rest are Hadoop configuration files. What goes in each depends on your own cluster; here is my example:

core-site.xml:

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop-0:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>~/hadoopdata/</value>
  </property>
</configuration>

# Append the following to the end of $HADOOP_HOME/etc/hadoop/hadoop-env.sh from the Hadoop distribution
# set JAVA_HOME for hadoop
export JAVA_HOME=/usr/local/java/jdk1.8

hdfs-site.xml (only the configuration element is shown):

<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>~/hadoopdata/namenode</value>
  </property>
  <!-- Directory where the NameNode stores its data -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>~/hadoopdata/datanode</value>
  </property>
  <!-- Directory where the DataNode stores its data -->
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <!-- Number of replicas -->
  <property>
    <name>dfs.secondary.http.address</name>
    <value>hadoop-2:50090</value>
  </property>
  <!-- Secondary NameNode -->
</configuration>

mapred-site.xml:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- Resource scheduling platform that MapReduce jobs run on -->
</configuration>

slaves:

# Add the hostnames of the slave nodes in your cluster as appropriate
hadoop-1
hadoop-2

yarn-site.xml:

<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop-0</value>
  </property>
  <!-- YARN master node -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Auxiliary service used by the compute framework being run -->
</configuration>
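Since the slave hostnames follow the pattern hadoop-1, hadoop-2, and so on, the slaves file can be generated instead of typed by hand when the number of slaves changes. A minimal sketch, assuming that naming pattern; gen_slaves and its optional prefix argument are names I made up for illustration.

```shell
# gen_slaves N [PREFIX]: print one slave hostname per line,
# PREFIX-1 through PREFIX-N. PREFIX defaults to "hadoop", matching the
# hostnames used in this post. (gen_slaves is a hypothetical helper.)
gen_slaves() {
    n=$1
    prefix=${2:-hadoop}
    i=1
    while [ "$i" -le "$n" ]; do
        echo "$prefix-$i"
        i=$((i + 1))
    done
}
```

For the two-slave setup in this post, `gen_slaves 2 > config/slaves` would write the same two hostnames as the example file.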

2. Edit the Dockerfile-master file

#####################################################
# Dockerfile to build hadoop master container images
# Based on centos7-hadoop
#####################################################

# base image
FROM centos7-hadoop

# MainTainer
MAINTAINER neu_wj@163.com

#WORKDIR /root

# ssh without key
RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

COPY config/* /tmp/

RUN cat /tmp/ssh_config >> /etc/ssh/ssh_config && \
    mv /tmp/core-site.xml $HADOOP_HOME/etc/hadoop/core-site.xml && \
    mv /tmp/hdfs-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && \
    mv /tmp/yarn-site.xml $HADOOP_HOME/etc/hadoop/yarn-site.xml && \
    mv /tmp/mapred-site.xml $HADOOP_HOME/etc/hadoop/mapred-site.xml && \
    mv /tmp/slaves $HADOOP_HOME/etc/hadoop/slaves

# SSH and SERF ports
EXPOSE 22 7373 7946

# HDFS ports
EXPOSE 9000 50070

# YARN ports
EXPOSE 8032 8088

CMD ["/usr/sbin/init"]

3. Build the image

docker build -f Dockerfile-master -t "hadoop-master" .

Here -f specifies which Dockerfile to use for the build.

III. Build the slave image

1. Still in the folder used for the master image, edit the Dockerfile-slave file with the following contents

####################################################
# Dockerfile to build hadoop slave container images
# Based on Centos7-hadoop
####################################################

# base image
FROM centos7-hadoop

# MainTainer
MAINTAINER neu_wj@163.com

#WORKDIR /root

# ssh without key
RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

COPY config/* /tmp/

RUN cat /tmp/ssh_config >> /etc/ssh/ssh_config && \
    mv /tmp/core-site.xml $HADOOP_HOME/etc/hadoop/core-site.xml && \
    mv /tmp/hdfs-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && \
    mv /tmp/yarn-site.xml $HADOOP_HOME/etc/hadoop/yarn-site.xml && \
    mv /tmp/mapred-site.xml $HADOOP_HOME/etc/hadoop/mapred-site.xml && \
    mv /tmp/slaves $HADOOP_HOME/etc/hadoop/slaves

# SSH and SERF ports
EXPOSE 22 7373 7946

# HDFS ports
EXPOSE 50090 50475 50010

CMD ["/usr/sbin/init"]

2. Build the hadoop-slave image

docker build -f Dockerfile-slave -t "hadoop-slave" .
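Because the master and slave images both start FROM centos7-hadoop, the three builds have to happen in order: base first, then master and slave. One way to capture that is a small wrapper that stops at the first failure. This is only a sketch: build_all is a name I chose, and the two folder arguments are assumptions about where you keep the base context and the master/slave context.

```shell
# build_all BASE_DIR CLUSTER_DIR: build the base image from BASE_DIR, then
# the master and slave images from CLUSTER_DIR. The && chain stops at the
# first build that fails. (build_all is a hypothetical helper.)
build_all() {
    base_dir=$1
    cluster_dir=$2
    docker build -t centos7-hadoop "$base_dir" &&
    docker build -f "$cluster_dir/Dockerfile-master" -t hadoop-master "$cluster_dir" &&
    docker build -f "$cluster_dir/Dockerfile-slave" -t hadoop-slave "$cluster_dir"
}
```

It would be called with the two context folders, for example `build_all docker-hadoop-base <master-and-slave-folder>`.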

IV. Start the cluster nodes

1. Create the hadoop network

docker network create hadoop
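Creating a network whose name is already taken makes docker report an error, which gets in the way when you re-run the setup. A small guard that creates the network only when it does not exist yet could look like the sketch below; ensure_network is a hypothetical helper name, while `docker network inspect` and `docker network create` are the standard subcommands.

```shell
# ensure_network NAME: create the docker network NAME unless
# `docker network inspect` already finds it.
# (ensure_network is a hypothetical helper.)
ensure_network() {
    docker network inspect "$1" >/dev/null 2>&1 ||
        docker network create "$1"
}
```

Usage: `ensure_network hadoop`.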

2. Start the master container

docker run -d --net=hadoop -P --privileged=true --name hadoop-0 -h hadoop-0 hadoop-master

3. Start the slave containers (start as many as you need, matching the slaves configuration file)

docker run -d --net=hadoop -P --privileged=true --name hadoop-1 -h hadoop-1 hadoop-slave

docker run -d --net=hadoop -P --privileged=true --name hadoop-2 -h hadoop-2 hadoop-slave
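With more than a couple of slaves, repeating the docker run line gets tedious. The same command can be put in a loop; the sketch below reuses exactly the flags shown above and only varies the number in the container name and hostname. The function name start_slaves is my own.

```shell
# start_slaves N: start slave containers hadoop-1 through hadoop-N with the
# same flags as the manual commands above. N should match the number of
# hostnames in the slaves file. (start_slaves is a hypothetical helper.)
start_slaves() {
    n=$1
    i=1
    while [ "$i" -le "$n" ]; do
        docker run -d --net=hadoop -P --privileged=true \
            --name "hadoop-$i" -h "hadoop-$i" hadoop-slave
        i=$((i + 1))
    done
}
```

For this post's cluster that would be `start_slaves 2`.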

4. Enter a container

docker exec -it hadoop-0 /bin/bash

5. Start ssh in every container (a later improvement will move this step into the Dockerfile)

systemctl start sshd.service
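Entering each container just to start sshd can be avoided by running the same systemctl command through docker exec from the host. A minimal sketch, assuming the containers are named hadoop-0 (master) through hadoop-N (slaves) as above; start_sshd_all is a made-up helper name.

```shell
# start_sshd_all N: run `systemctl start sshd.service` inside hadoop-0
# (the master) and hadoop-1 through hadoop-N (the slaves) via docker exec.
# (start_sshd_all is a hypothetical helper.)
start_sshd_all() {
    n=$1
    i=0
    while [ "$i" -le "$n" ]; do
        docker exec "hadoop-$i" systemctl start sshd.service
        i=$((i + 1))
    done
}
```

With one master and two slaves the call is `start_sshd_all 2`.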

V. Start the cluster

1. Format HDFS

On the NameNode node, i.e. the master:

hadoop namenode -format

2. Start HDFS

This can be run on any node:

start-dfs.sh

3. Start YARN

This must be run on the ResourceManager node, i.e. the master:

start-yarn.sh
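After both start scripts have run, jps (shipped with the JDK) lists the Java processes on a node, which is a quick way to see whether the expected daemons came up. The sketch below checks jps output for a list of daemon names. The helper missing_daemons is hypothetical, and which daemons belong on which node is my reading of the sample configuration above: NameNode and ResourceManager on hadoop-0, DataNode and NodeManager on the slaves, SecondaryNameNode on hadoop-2.

```shell
# missing_daemons JPS_OUTPUT NAME...: print every NAME that does not appear
# as the second column of JPS_OUTPUT (jps prints "<pid> <name>" per line).
# Matching the whole column keeps "NameNode" from matching
# "SecondaryNameNode". (missing_daemons is a hypothetical helper.)
missing_daemons() {
    jps_out=$1
    shift
    for name in "$@"; do
        if ! printf '%s\n' "$jps_out" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "$name"
        fi
    done
}
```

On the host this could be used as `missing_daemons "$(docker exec hadoop-0 jps)" NameNode ResourceManager`; empty output means both daemons were found.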

VI. Verify

Open "host-IP:50070" in a browser (the HDFS NameNode web UI).

Open "host-IP:8088" in a browser (the YARN ResourceManager web UI).
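One thing to watch: the containers were started with -P, which publishes each exposed port on a host port that docker picks, so the host port may not be 50070 or 8088. The `docker port` command shows the actual mapping. The sketch below wraps it to print just the host port number; host_port is a hypothetical helper, and it assumes docker port prints lines of the form address:port.

```shell
# host_port CONTAINER PORT: print the host port that docker mapped to PORT
# of CONTAINER, using the first mapping that `docker port` reports. Prints
# nothing if the port is not published. (host_port is a hypothetical helper.)
host_port() {
    docker port "$1" "$2" | head -n 1 | sed 's/.*://'
}
```

For example, `host_port hadoop-0 50070` gives the port to put after the host IP for the HDFS page, and `host_port hadoop-0 8088` does the same for YARN.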