IDEA上搭建spark开发环境

我本地系统是windows10,首先IDEA上要安装了scala插件。

1、下载winutils.exe文件

winutils.exe是在Windows系统上需要的hadoop调试环境工具,里面包含一些在Windows系统下调试hadoop、spark所需要的基本的工具类,另外在使用eclipse调试hadoop程序是,也需要winutils.exe,需要配置上面的环境变量

1)下载winutils,注意需要与hadoop的版本相对应。

hadoop2.2版本可以在这里下载https://github.com/srccodes/hadoop-common-2.2.0-bin

hadoop2.6版本可以在这里下载https://github.com/amihalik/hadoop-common-2.6.0-bin

2)配置环境变量

增加环境变量:HADOOP_HOME=“XXX\hadoop-common-2.2.0-bin”

添加path:%HADOOP_HOME%\bin

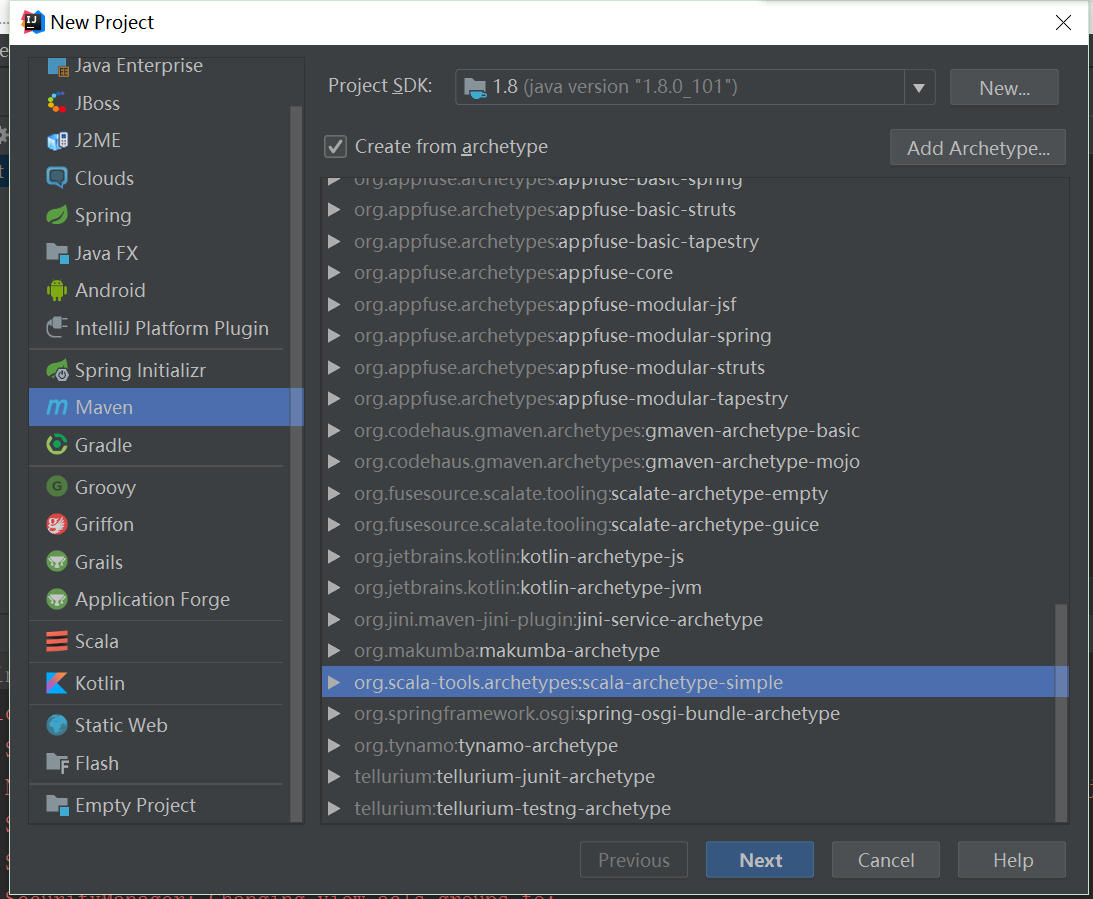

2、IDEA中新建maven工程:

配置pom.xml,选择对应的scala版本和spark版本:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>top.ruandb</groupId>

<artifactId>wordcount</artifactId>

<version>1.0-SNAPSHOT</version>

<inceptionYear>2008</inceptionYear>

<properties>

<scala.version>2.7.0</scala.version>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<scala.version>2.11.8</scala.version>

<spark.version>2.1.1</spark.version>

</properties>

<repositories>

<repository>

<id>scala-tools.org</id>

<name>Scala-Tools Maven2 Repository</name>

<url>http://scala-tools.org/repo-releases</url>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>scala-tools.org</id>

<name>Scala-Tools Maven2 Repository</name>

<url>http://scala-tools.org/repo-releases</url>

</pluginRepository>

</pluginRepositories>

<dependencies>

<!--scala-->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<!--spark-core-->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<!--junit-->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.4</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.specs</groupId>

<artifactId>specs</artifactId>

<version>1.2.5</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<sourceDirectory>src/main/scala</sourceDirectory>

<testSourceDirectory>src/test/scala</testSourceDirectory>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

<args>

<arg>-target:jvm-1.5</arg>

</args>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-eclipse-plugin</artifactId>

<configuration>

<downloadSources>true</downloadSources>

<buildcommands>

<buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>

</buildcommands>

<additionalProjectnatures>

<projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>

</additionalProjectnatures>

<classpathContainers>

<classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>

<classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>

</classpathContainers>

</configuration>

</plugin>

</plugins>

</build>

<reporting>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

</configuration>

</plugin>

</plugins>

</reporting>

</project>

3、wordcount测试

word.txt:

hello word hello hello

word lucy lucy english rdd

WordCountApp:

package top.ruandb import org.apache.spark.{SparkConf, SparkContext} object WordCountApp { def main(args: Array[String]): Unit = { //创建sparkConf,并设置app名称和运行模式 val sparkConf = new SparkConf() sparkConf.setAppName("WordCount").setMaster("local[2]") //创建spark context val sc = new SparkContext(sparkConf) //wordcount 处理逻辑 sc.textFile("D:\\spark\\word.txt").flatMap(_.split(" ")).map((_, 1)). reduceByKey(_ + _, 1).sortBy(_._2, false). saveAsTextFile("D:\\spark\\wordcount") //关闭spark context sc.stop() } }

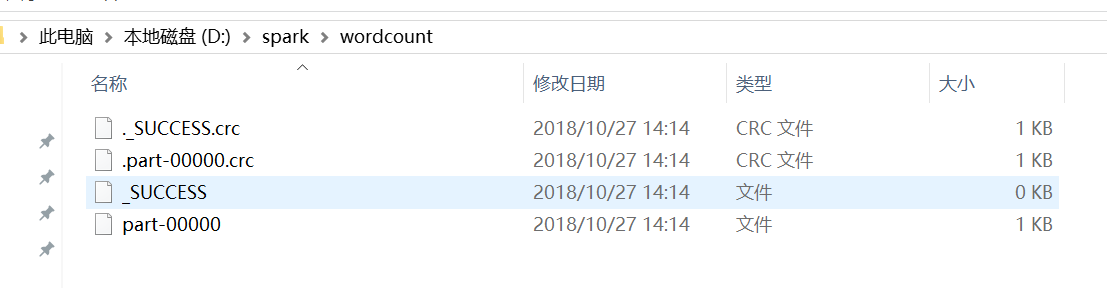

运行结果:

part-00000:

(hello,3) (word,2) (lucy,2) (rdd,1) (english,1)