I. Base environment configuration

| Component | Version |
|---|---|
| Debian 9 | Debian 4.9.144-3 (2019-02-02) x86_64 GNU/Linux |
| kubectl | 1.14.1 |
| kubeadm | 1.14.1 |
| docker | 18.09.4 |
| harbor | 1.6.5 |

| Hostname | IP address | Roles |
|---|---|---|
| k8s-master001-150 | 10.25.148.150 | master+etcd+keepalived+haproxy |
| k8s-master002-151 | 10.25.148.151 | master+etcd+keepalived+haproxy |
| k8s-master003-152 | 10.25.148.152 | master+etcd+keepalived+haproxy |
| k8s-node001-153 | 10.25.148.153 | docker+kubelet+kubeadm |
| k8s-node002-154 | 10.25.148.154 | docker+kubelet+kubeadm |
| k8s-node003-155 | 10.25.148.155 | docker+kubelet+kubeadm |
| k8s-node005-157 | 10.25.148.157 | docker+kubelet+kubeadm |
| k8s-node004-156 | 10.25.148.156 | docker+docker-compose+harbor |
| Vip-keepalive | 10.25.148.178 | VIP used for high availability |

II. Pre-installation preparation

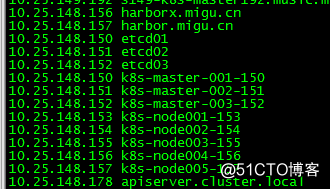

1. Set the hostname on each machine and add a hosts record for every server
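As a rough sketch of this step, the snippet below prints hosts records built from the host table above. The helper name `print_k8s_hosts` is hypothetical, and appending its output to /etc/hosts as root is an assumption about how the records get installed.

```shell
# Hypothetical helper: print one "IP hostname" record per machine,
# using the addresses and names from the host table.
print_k8s_hosts() {
  cat <<'EOF'
10.25.148.150 k8s-master001-150
10.25.148.151 k8s-master002-151
10.25.148.152 k8s-master003-152
10.25.148.153 k8s-node001-153
10.25.148.154 k8s-node002-154
10.25.148.155 k8s-node003-155
10.25.148.156 k8s-node004-156
10.25.148.157 k8s-node005-157
EOF
}

# Preview the records; nothing is modified here.
print_k8s_hosts

# On each machine, as root (assumed workflow):
#   hostnamectl set-hostname k8s-master001-150   # use that machine's own name
#   print_k8s_hosts >> /etc/hosts
```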

2. Stop the firewall (skipped here)

3. Disable swap

swapoff -a

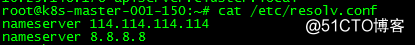
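Note that `swapoff -a` only lasts until the next reboot. The following is a minimal sketch of making the change persistent, assuming swap is declared in an fstab-style file. The function name `comment_out_swap` is made up for illustration, and the demo runs on a temporary file rather than the real /etc/fstab.

```shell
# Hypothetical helper: comment out every active swap entry in the
# fstab-style file given as $1, keeping a .bak copy (GNU sed assumed).
comment_out_swap() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demo on a throwaway file so the real /etc/fstab is untouched.
demo_fstab=$(mktemp)
printf '%s\n' \
  '/dev/sda1 / ext4 defaults 0 1' \
  '/dev/sda2 none swap sw 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"

# On a real node, as root (assumed): comment_out_swap /etc/fstab
```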

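4. Add DNS servers. CoreDNS reads resolv.conf, so the DNS server must not be set to 127.0.0.1 or to the local host itself; that easily creates a forwarding loop and CoreDNS fails to start. Running your own DNS server is recommended.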

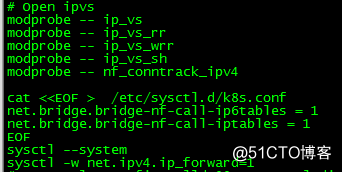
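A quick way to catch the loop condition described above is to scan resolv.conf for loopback resolvers before installing the cluster. This is only a sketch; the function name `has_loopback_nameserver` is hypothetical.

```shell
# Hypothetical check: succeed (exit 0) if the resolv.conf-style file in $1
# lists a loopback nameserver (127.x.x.x or ::1).
has_loopback_nameserver() {
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+(127\.|::1)' "$1" 2>/dev/null
}

resolv_file=/etc/resolv.conf
if has_loopback_nameserver "$resolv_file"; then
  echo "WARNING: $resolv_file points at a loopback resolver"
else
  echo "OK: no loopback nameserver found in $resolv_file"
fi
```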

5. Set kernel parameters (run on every machine)
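The article does not list the parameters themselves. The sketch below writes a set of sysctl values that are commonly used for kubeadm clusters; these values, the helper name `write_k8s_sysctl`, and the target file /etc/sysctl.d/k8s.conf are all assumptions, not the author's exact settings.

```shell
# Write commonly used Kubernetes sysctl settings to the file given as $1.
# These values are an assumption, not taken from the original article.
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}

# Preview in a temporary file.
sysctl_demo=$(mktemp)
write_k8s_sysctl "$sysctl_demo"
cat "$sysctl_demo"

# On each node, as root (assumed):
#   modprobe br_netfilter            # the net.bridge.* keys need this module
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system
```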

6. Install docker on every machine

7. Synchronize time

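III. Building a highly available etcd cluster

There are two ways to create a highly available cluster with kubeadm:

The etcd cluster is configured by kubeadm and runs as pods on the master nodes.

The etcd cluster is deployed separately.

Deploying etcd separately seems somewhat easier, so this guide deploys etcd on its own and then integrates it into the cluster.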

1. Install the CFSSL certificate management tools, which are used to create the CA certificates

mkdir -p /opt/bin

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /opt/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /opt/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /opt/bin/cfssl-certinfo

echo 'export PATH=/opt/bin:$PATH' > /etc/profile.d/k8s.sh

2. Put all k8s executables in the /opt/bin/ directory

3. Create the CA configuration file

root@k8s-master001-150:~# mkdir ssl

root@k8s-master001-150:~# cd ssl/

root@k8s-master001-150:~/ssl# cfssl print-defaults config > config.json

root@k8s-master001-150:~/ssl# cfssl print-defaults csr > csr.json

# Following the format of config.json, create the ca-config.json file below; the expiry is set to 87600h

root@k8s-master001-150:~/ssl# cat ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

4. Create the CA certificate signing request

root@k8s-master001-150:~/ssl# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GD",

"L": "SZ",

"O": "k8s",

"OU": "System"

}

]

}

5. Generate the CA certificate and private key

root@k8s-master001-150:~/ssl# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

root@k8s-master001-150:~/ssl# ls ca*

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

6. Copy the CA certificates to the corresponding directory on all nodes

root@k8s-master001-150:~/ssl# mkdir -p /etc/etcd/pki

root@k8s-master001-150:~/ssl# cp ca* /etc/etcd/pki

root@k8s-master001-150:~/ssl# scp -r /etc/etcd/pki 10.25.148.151:/etc/etcd/pki

root@k8s-master001-150:~/ssl# scp -r /etc/etcd/pki 10.25.148.152:/etc/etcd/pki

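7. Download etcd

With the CA certificates in place, etcd can now be configured.

wget https://github.com/coreos/etcd/releases/download/v3.3.12/etcd-v3.3.12-linux-amd64.tar.gz

tar -xzf etcd-v3.3.12-linux-amd64.tar.gz

cp etcd-v3.3.12-linux-amd64/etcd etcd-v3.3.12-linux-amd64/etcdctl /opt/bin/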

8. Create the etcd certificate: write the etcd certificate signing request file

root@k8s-master001-150:~/ssl# cat server.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.25.148.150",

"10.25.148.151",

"10.25.148.152"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GD",

"L": "SZ",

"O": "k8s",

"OU": "System"

}

]

}

# Important: the hosts field above must list the IPs of all etcd nodes; otherwise etcd will fail to start.
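Because a missing IP in the hosts field only shows up later as a startup failure, it can help to check the CSR file before signing. The sketch below does a plain text search for each IP; the helper name `check_csr_hosts` is hypothetical.

```shell
# Hypothetical check: verify that every IP passed after the file name
# appears as a quoted string in the CSR JSON file given as $1.
check_csr_hosts() {
  csr_file=$1
  shift
  for ip in "$@"; do
    if ! grep -Fq "\"$ip\"" "$csr_file"; then
      echo "missing host entry: $ip"
      return 1
    fi
  done
  echo "all etcd node IPs present"
}

# Example (run in ~/ssl, where server.json lives):
#   check_csr_hosts server.json 10.25.148.150 10.25.148.151 10.25.148.152
```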

9. Generate the etcd certificate and private key

cfssl gencert -ca=/etc/etcd/pki/ca.pem \

-ca-key=/etc/etcd/pki/ca-key.pem \

-config=/etc/etcd/pki/ca-config.json \

-profile=kubernetes server.json | cfssljson -bare server

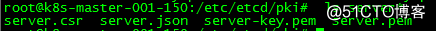

# Verify

10. Copy the certificates to the corresponding directory on all nodes

cp server*.pem /etc/etcd/pki/

scp -r /etc/etcd/pki/* 10.25.148.151:/etc/etcd/pki

scp -r /etc/etcd/pki/* 10.25.148.152:/etc/etcd/pki

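11. Create the etcd systemd unit file. With the certificates ready, the service file can now be configured.

root@k8s-master001-150:~# mkdir -p /var/lib/etcd # the etcd working directory must be created first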

root@k8s-master001-150:~# cat /lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

ExecStart=/opt/bin/etcd \

--name ${ETCD_NAME} \

--cert-file=/etc/etcd/pki/server.pem \

--key-file=/etc/etcd/pki/server-key.pem \

--peer-cert-file=/etc/etcd/pki/server.pem \

--peer-key-file=/etc/etcd/pki/server-key.pem \

--trusted-ca-file=/etc/etcd/pki/ca.pem \

--peer-trusted-ca-file=/etc/etcd/pki/ca.pem \

--initial-advertise-peer-urls ${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--listen-peer-urls ${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls ${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls ${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-cluster-token ${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster etcd01=https://10.25.148.150:2380,etcd02=https://10.25.148.151:2380,etcd03=https://10.25.148.152:2380 \

--initial-cluster-state new \

--data-dir=${ETCD_DATA_DIR}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# Start etcd

root@k8s-master001-150:~/ssl# systemctl daemon-reload

root@k8s-master001-150:~/ssl# systemctl enable etcd

root@k8s-master001-150:~/ssl# systemctl start etcd

# Copy the etcd unit file to the other two nodes, adjust the configuration, and start etcd there
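The unit file loads /etc/etcd/etcd.conf through `EnvironmentFile` and expands several `ETCD_*` variables from it, but the file itself is not shown. The following is a sketch of what it might contain per node. The helper name `render_etcd_conf`, the token value `etcd-cluster`, and the data directory /var/lib/etcd are assumptions; the member names and ports match the `member list` output in this guide.

```shell
# Hypothetical helper: print an etcd.conf for one member.
#   $1 = member name (etcd01, etcd02, etcd03), $2 = that node's IP
render_etcd_conf() {
  cat <<EOF
ETCD_NAME=$1
ETCD_DATA_DIR=/var/lib/etcd
ETCD_LISTEN_PEER_URLS=https://$2:2380
ETCD_LISTEN_CLIENT_URLS=https://$2:2379
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://$2:2380
ETCD_ADVERTISE_CLIENT_URLS=https://$2:2379
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
EOF
}

# Preview the file for the second member.
render_etcd_conf etcd02 10.25.148.151

# On each node, as root (assumed):
#   render_etcd_conf etcd02 10.25.148.151 > /etc/etcd/etcd.conf
#   systemctl daemon-reload && systemctl enable etcd && systemctl start etcd
```

Only the name and IP differ between nodes, which is why the unit file itself can be copied unchanged.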

# Check the cluster members

root@k8s-master-001-150:/etc/etcd/pki# etcdctl --key-file /etc/etcd/pki/server-key.pem --cert-file /etc/etcd/pki/server.pem --ca-file /etc/etcd/pki/ca.pem member list

5f239a2abdd6784f: name=etcd02 peerURLs=https://10.25.148.151:2380 clientURLs=https://10.25.148.151:2379 isLeader=true

73adbeabae2f400f: name=etcd03 peerURLs=https://10.25.148.152:2380 clientURLs=https://10.25.148.152:2379 isLeader=false

8e688fa3b00db5d7: name=etcd01 peerURLs=https://10.25.148.150:2380 clientURLs=https://10.25.148.150:2379 isLeader=false

# Check cluster health

root@k8s-master-001-150:/etc/etcd/pki# etcdctl --key-file /etc/etcd/pki/server-key.pem --cert-file /etc/etcd/pki/server.pem --ca-file /etc/etcd/pki/ca.pem cluster-health

member 5f239a2abdd6784f is healthy: got healthy result from https://10.25.148.151:2379

member 73adbeabae2f400f is healthy: got healthy result from https://10.25.148.152:2379

member 8e688fa3b00db5d7 is healthy: got healthy result from https://10.25.148.150:2379

cluster is healthy

12. Install and configure keepalived

Download the keepalived package and edit its configuration file

root@k8s-master-001-150:/etc/etcd/pki# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "curl -k https://10.25.148.178:8443"

interval 3

}

vrrp_instance VI_1 {

state MASTER

interface ens32

virtual_router_id 208

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass just0kk

}

virtual_ipaddress {

10.25.148.178/22

}

track_script {

check_haproxy

}

}

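# All three master nodes use the same configuration; on the other two, change priority to 90 and 80 and set state to BACKUP (make sure virtual_router_id does not clash with the id of any other machine on the internal network)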
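One way to derive the two BACKUP configurations from the MASTER file is a small sed filter, sketched below. The helper name `make_backup_conf` and the output file names are hypothetical; the priorities 90 and 80 come from the note above.

```shell
# Hypothetical helper: print a BACKUP variant of a keepalived.conf.
#   $1 = path to the MASTER node's config, $2 = priority for this node
make_backup_conf() {
  sed -E \
    -e 's/^([[:space:]]*state[[:space:]]+)MASTER/\1BACKUP/' \
    -e "s/^([[:space:]]*priority[[:space:]]+)[0-9]+/\1$2/" \
    "$1"
}

# Example (assumed workflow, run on the first master):
#   make_backup_conf /etc/keepalived/keepalived.conf 90 > keepalived-151.conf
#   make_backup_conf /etc/keepalived/keepalived.conf 80 > keepalived-152.conf
```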

13. Install and configure haproxy

root@k8s-master-001-150:/etc/etcd/pki# cat /etc/haproxy/haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

defaults

log global

mode http

#option httplog

#option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen stats

bind :1080

stats refresh 30s

stats uri /stats

stats realm Haproxy Manager

stats auth admin:admin

frontend kubernetes

bind :8443

mode tcp

default_backend kubernetes-master

#default_backend dynamic

backend kubernetes-master

mode tcp

balance roundrobin

server k8s-master-001-150 10.25.148.150:6443 check maxconn 2000

server k8s-master-002-151 10.25.148.151:6443 check maxconn 2000

server k8s-master-003-152 10.25.148.152:6443 check maxconn 2000

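# The configuration is identical on all three nodes; no changes are needed

13. Install the kubeadm, kubelet, and kubectl tools

This is straightforward: download the kubeadm, kubelet, and kubectl binaries, copy them to /usr/bin/, and make them executable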

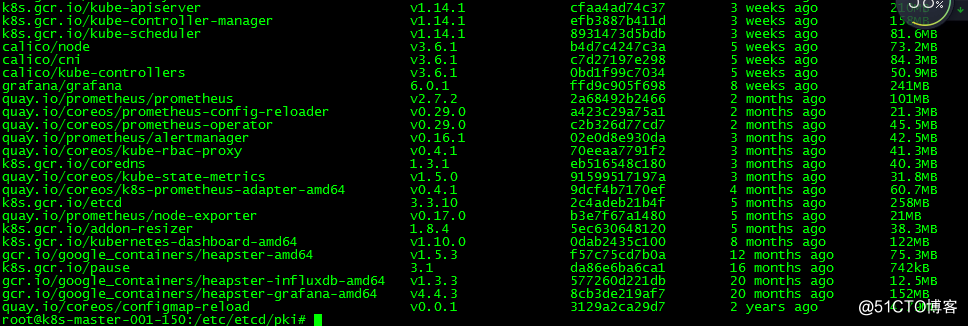

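14. Prepare the images needed to build the cluster (set up your own ××× to pull them)

15. Write the cluster init configuration file (install tools such as socat, ipset, and ipvsadm before running it)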

root@k8s-master-001-150:/home/kube/conf# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
#imageRepository: harborx.migu.cn/library
networking:
  podSubnet: 100.64.0.0/10
kubernetesVersion: v1.14.1
apiServer:
  certSANs:
  - "k8s-master-001-150"
  - "k8s-master-002-151"
  - "k8s-master-003-152"
  - "10.25.148.150"
  - "10.25.148.151"
  - "10.25.148.152"
  - "10.25.148.178"
  - "127.0.0.1"
controlPlaneEndpoint: "10.25.148.178:8443"
etcd:
  external:
    endpoints:
    - https://10.25.148.150:2379
    - https://10.25.148.151:2379
    -
    caFile: /etc/etcd/pki/ca.pem
    certFile: /etc/etcd/pki/server.pem
    keyFile: /etc/etcd/pki/server-key.pem
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
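Since the configuration selects the ipvs proxy mode, the matching kernel modules need to be loaded on every node in addition to the tools named in step 15. The sketch below writes a module list for systemd's modules-load.d. The module list (including `nf_conntrack_ipv4`, which applies to older kernels such as the 4.9 kernel used here), the helper name `write_ipvs_modules`, and the file path are assumptions.

```shell
# Write the kernel modules that kube-proxy's ipvs mode relies on to the
# modules-load.d style file given as $1 (module list is an assumption).
write_ipvs_modules() {
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 > "$1"
}

# Preview in a temporary file.
modules_demo=$(mktemp)
write_ipvs_modules "$modules_demo"
cat "$modules_demo"

# On each node, as root (assumed):
#   apt-get install -y socat ipset ipvsadm
#   write_ipvs_modules /etc/modules-load.d/ipvs.conf
#   for m in $(cat /etc/modules-load.d/ipvs.conf); do modprobe "$m"; done
```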

# Add the other two masters

scp -r /etc/kubernetes/pki 10.25.148.151:/etc/kubernetes/

scp -r /etc/kubernetes/pki 10.25.148.152:/etc/kubernetes/

# On each of them, run the initialization with the same kubeadm.yaml used on master001

# Verify

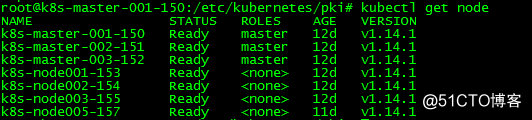

root@k8s-master-001-150:/etc/kubernetes/pki# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master-001-150 Ready master 12d v1.14.1

k8s-master-002-151 Ready master 12d v1.14.1

k8s-master-003-152 Ready master 12d v1.14.1

16. Add the worker nodes

kubeadm join 10.25.148.178:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c0a1021e5d63f509a0153724270985cdc22e46dc76e8e7b84d1fbb5e83566ea8
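The token and hash in the join command are printed when the first master is initialized. If that output is lost, the hash can be recomputed from the cluster CA certificate. The pipeline below follows the approach described in the kubeadm documentation; the function name `ca_cert_hash` is hypothetical, and /etc/kubernetes/pki/ca.crt is assumed to be the default kubeadm CA location.

```shell
# Hypothetical helper: print the sha256 hash of the public key in the
# CA certificate given as $1, in the form kubeadm join expects.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On a master node (assumed default kubeadm path):
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```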

# Verify

root@k8s-master-001-150:/etc/kubernetes/pki# kubectl get pod -n kube-system

coredns-fb8b8dccf-htzn6 1/1 Running 9 12d

coredns-fb8b8dccf-prsw9 1/1 Running 7 12d

heapster-5cf8f6d449-zgkld 1/1 Running 0 5d15h

kube-apiserver-k8s-master-001-150 1/1 Running 0 11d

kube-apiserver-k8s-master-002-151 1/1 Running 0 11d

kube-apiserver-k8s-master-003-152 1/1 Running 7 11d

kube-controller-manager-k8s-master-001-150 1/1 Running 2 12d

kube-controller-manager-k8s-master-002-151 1/1 Running 3 12d

kube-controller-manager-k8s-master-003-152 1/1 Running 11 11d

kube-proxy-6pfcj 1/1 Running 1 12d

kube-proxy-762pq 1/1 Running 1 12d

kube-proxy-8z6j7 1/1 Running 1 12d

kube-proxy-dcgqn 1/1 Running 9 11d

kube-proxy-jvrqt 1/1 Running 1 12d

kube-proxy-n6c8r 1/1 Running 6 11d

kube-proxy-t7jnr 1/1 Running 2 12d

kube-scheduler-k8s-master-001-150 1/1 Running 1 12d

kube-scheduler-k8s-master-002-151 1/1 Running 3 12d

kube-scheduler-k8s-master-003-152 1/1 Running 13 11d

17. Install the Calico network plugin

Download calico.yaml (this guide uses v3.6.1)

curl https://docs.projectcalico.org/v3.6.1/getting-started/kubernetes/installation/hosted/calico.yaml -O

# Configure

POD_CIDR="100.64.0.0/10"

sed -i -e "s?192.168.0.0/16?$POD_CIDR?g" calico.yaml

sed -i 's@.*etcd_endpoints:.*@\ \ etcd_endpoints:\ \"https://10.25.148.150:2379,https://10.25.148.151:2379,https://10.25.148.152:2379\"@gi' calico.yaml

export ETCD_CERT=$(cat /etc/etcd/pki/server.pem | base64 | tr -d '\n')

export ETCD_KEY=$(cat /etc/etcd/pki/server-key.pem | base64 | tr -d '\n')

export ETCD_CA=$(cat /etc/etcd/pki/ca.pem | base64 | tr -d '\n')

sed -i "s@.*etcd-cert:.*@\ \ etcd-cert:\ ${ETCD_CERT}@gi" calico.yaml

sed -i "s@.*etcd-key:.*@\ \ etcd-key:\ ${ETCD_KEY}@gi" calico.yaml

sed -i "s@.*etcd-ca:.*@\ \ etcd-ca:\ ${ETCD_CA}@gi" calico.yaml

sed -i 's@.*etcd_ca:.*@\ \ etcd_ca:\ "/calico-secrets/etcd-ca"@gi' calico.yaml

sed -i 's@.*etcd_cert:.*@\ \ etcd_cert:\ "/calico-secrets/etcd-cert"@gi' calico.yaml

sed -i 's@.*etcd_key:.*@\ \ etcd_key:\ "/calico-secrets/etcd-key"@gi' calico.yaml
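Because these substitutions rewrite whole lines in place, it can be worth previewing one of them on a sample line first. The sketch below runs the endpoints substitution without `-i`; the helper name `preview_etcd_endpoints` is hypothetical, and the placeholder line is only a stand-in for the corresponding line in calico.yaml.

```shell
# Hypothetical helper: print the manifest in $1 with its etcd_endpoints
# line replaced by the comma-separated endpoints in $2 (GNU sed assumed).
preview_etcd_endpoints() {
  sed "s@.*etcd_endpoints:.*@  etcd_endpoints: \"$2\"@I" "$1"
}

# Demo on a one-line sample instead of the real calico.yaml.
sample=$(mktemp)
printf '  etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"\n' > "$sample"
preview_etcd_endpoints "$sample" \
  "https://10.25.148.150:2379,https://10.25.148.151:2379,https://10.25.148.152:2379"

# After all substitutions, the manifest would typically be applied with:
#   kubectl apply -f calico.yaml
```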

18. Check the cluster

root@k8s-master-001-150:/home/admin# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-75569d87d7-spv4l 1/1 Running 5 12d

calico-node-fdlmb 1/1 Running 1 12d

calico-node-hkz4l 1/1 Running 6 11d

calico-node-hwdxf 1/1 Running 1 12d

calico-node-jvgjj 1/1 Running 1 12d

calico-node-jxb69 1/1 Running 11 12d

calico-node-k2ktm 1/1 Running 2 12d

calico-node-mnvwm 1/1 Running 1 12d

coredns-fb8b8dccf-htzn6 1/1 Running 9 12d

coredns-fb8b8dccf-prsw9 1/1 Running 7 12d

heapster-5cf8f6d449-zgkld 1/1 Running 0 5d16h

kube-apiserver-k8s-master-001-150 1/1 Running 0 11d

kube-apiserver-k8s-master-002-151 1/1 Running 0 11d

kube-apiserver-k8s-master-003-152 1/1 Running 7 11d

kube-controller-manager-k8s-master-001-150 1/1 Running 2 12d

kube-controller-manager-k8s-master-002-151 1/1 Running 3 12d

kube-controller-manager-k8s-master-003-152 1/1 Running 11 11d

kube-proxy-6pfcj 1/1 Running 1 12d

kube-proxy-762pq 1/1 Running 1 12d

kube-proxy-8z6j7 1/1 Running 1 12d

kube-proxy-dcgqn 1/1 Running 9 11d

kube-proxy-jvrqt 1/1 Running 1 12d

kube-proxy-n6c8r 1/1 Running 6 11d

kube-proxy-t7jnr 1/1 Running 2 12d

kube-scheduler-k8s-master-001-150 1/1 Running 1 12d

kube-scheduler-k8s-master-002-151 1/1 Running 3 12d

kube-scheduler-k8s-master-003-152 1/1 Running 13 11d

kubernetes-dashboard-5f57845f9d-87gn6 1/1 Running 1 12d