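Debugging

There are usually two ways to debug a Scrapy project: the scrapy shell, and the IDE's debugger. The shell is covered briefly first; the main focus here is the IDE debugger.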

Environment

- Language: Python 3.6

- IDE: VS Code

- Browser: Chrome

scrapy shell

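On the command line, run scrapy shell <URL of the page you want to visit>.

On success you are dropped into the scrapy shell, where you can work interactively:

Test your selectors with response.xpath('……').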

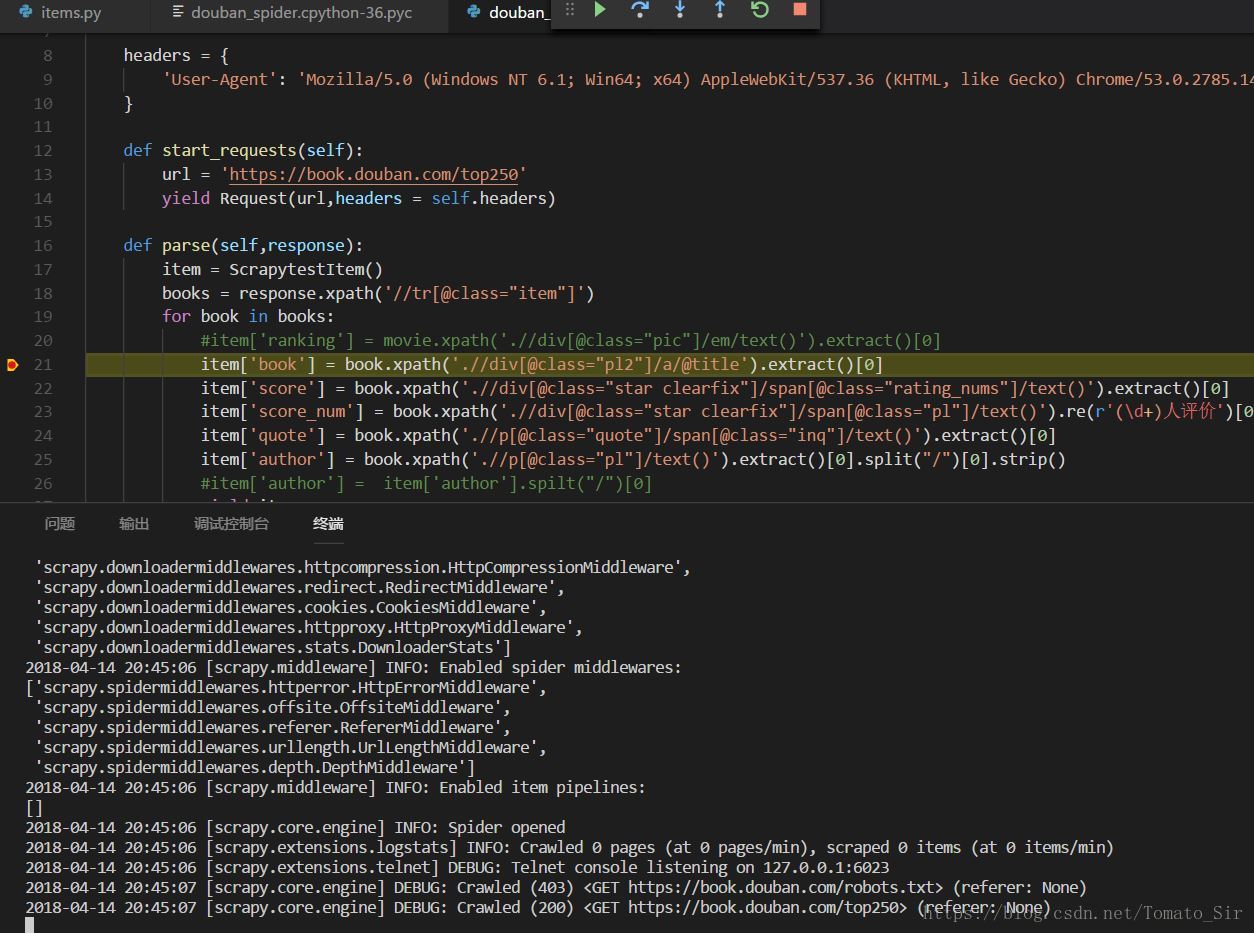

IDE Debug

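First, create run.py in the same directory as items.py, with the following code:

    from scrapy import cmdline

    # Must match the name attribute of the spider you want to debug.
    name = 'douban_book_top250'

    cmd = 'scrapy crawl {0}'.format(name)

    # Runs the crawl in this process, so IDE breakpoints are hit.
    cmdline.execute(cmd.split())

Here name is the name attribute of the spider defined earlier. Next, set breakpoints in the spider file (or wherever you need them), then start debugging run.py in VS Code. Execution pauses at each breakpoint, so you can inspect the relevant values.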
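The argument list that run.py hands to cmdline.execute can be sketched as below. The helper names build_crawl_argv and debug_crawl are made up for this illustration, and shlex.split is swapped in for str.split so that a quoted value would stay in one piece; for a plain spider name both give the same list.

```python
import shlex


def build_crawl_argv(spider_name):
    """Return the argv list for 'scrapy crawl <spider_name>'.

    build_crawl_argv is a hypothetical helper, not part of Scrapy.
    """
    # shlex.quote guards against spaces or shell metacharacters in the name.
    cmd = 'scrapy crawl {0}'.format(shlex.quote(spider_name))
    # shlex.split undoes the quoting and yields one list element per argument.
    return shlex.split(cmd)


def debug_crawl(spider_name):
    """Run the crawl in the current process so IDE breakpoints are hit."""
    # Imported lazily so build_crawl_argv can be tried without Scrapy installed.
    from scrapy import cmdline
    cmdline.execute(build_crawl_argv(spider_name))


print(build_crawl_argv('douban_book_top250'))
```

In run.py itself, calling debug_crawl('douban_book_top250') would replace the last three lines of the original script.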

Company information crawling project

CrawlSpider

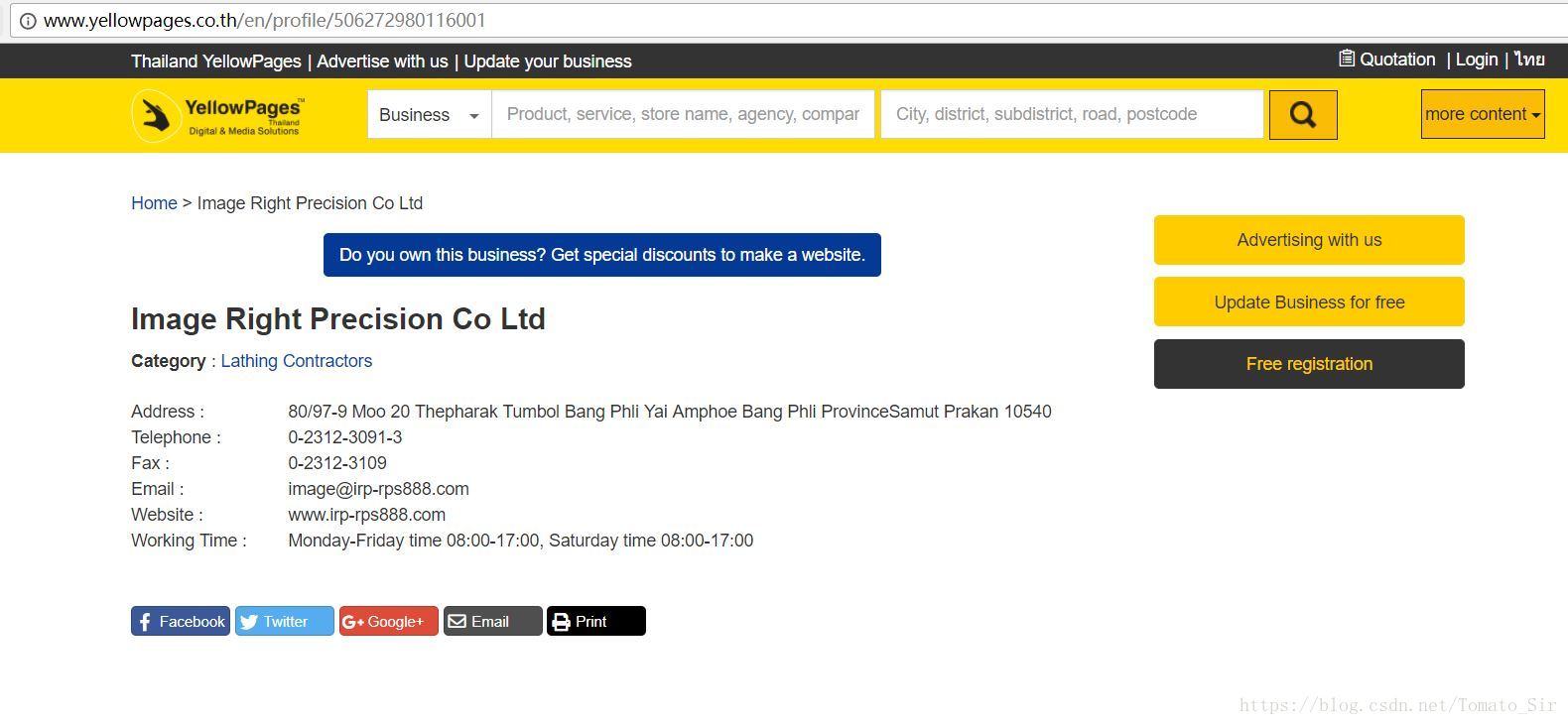

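I was given a task: crawl a Yellow Pages style site and collect each company's basic information (name, business category, telephone, email). Open the site and look at the relevant URLs:

The URLs of company pages almost all have the form …/en/profile/ followed by digits and letters. Inspired by 小白进阶, I used CrawlSpider, which is designed for crawling URLs that follow regular patterns. The code is as follows: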

    import re

    import scrapy
    from scrapy import Request  # Request is used by the second spider below
    from scrapy.spiders import CrawlSpider, Rule
    from scrapy.linkextractors import LinkExtractor

    from YellowPagesCrawler.items import YellowPagesCrawlerItem


    class YPCrawler(CrawlSpider):
        name = 'YPCrawler'
        allowed_domains = ['yellowpages.co.th']
        start_urls = ['http://www.yellowpages.co.th']

        # No allow pattern: follow every link inside allowed_domains.
        # The trailing comma makes rules a one-element tuple.
        rules = (
            Rule(LinkExtractor(allow=(), restrict_xpaths=('//a[@href]')),
                 callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            # Only company profile pages produce an item; the dots are escaped
            # so that they match literally.
            if not re.search(r'^http://www\.yellowpages\.co\.th/en/profile/\S+', response.url):
                return None
            print(response.url)
            item = YellowPagesCrawlerItem()
            item['CompanyURL'] = response.url
            # extract_first() returns None instead of raising when nothing matches.
            item['CompanyName'] = response.xpath('.//h2/text()').extract_first()
            item['CompanyCategory'] = response.xpath(
                './/strong/parent::*/following-sibling::*/text()').extract_first()

            # Phone numbers are either links or plain text after the label.
            telnumbers = response.xpath('.//div[contains(text(),"Telephone")]/following::*[1]/a/text()').extract()
            if not telnumbers:
                telnumbers = response.xpath('.//div[contains(text(),"Telephone")]/following::*[1]/text()').extract()
            item['CompanyTel'] = ' '.join(tel.strip() for tel in telnumbers)

            # The same two layouts exist for the email address.
            mail = response.xpath('.//div[contains(text(),"Email")]/following::*[1]/a/text()').extract()
            if not mail:
                mail = response.xpath('.//div[contains(text(),"Email")]/following::*[1]/text()').extract()
            item['CompanyMail'] = mail[0].strip() if mail else "no Email"
            return item

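The regular expression in parse_item was originally passed to allow. That did not work when crawling from the home page: the home page does not link directly to any company profile page, so after the allow filter no URL was left to follow, and follow=True had nothing to act on. allow is therefore left empty, which means every URL under allowed_domains is crawled, and parse_item filters the responses by response.url instead.

The Rule parameters: allow (a regular expression matched against the URL) and restrict_xpaths (the page regions from which links are extracted) both narrow down the links; callback names the function that processes each response; follow says whether links found in those responses are followed in turn.

One point needs special attention: rules must be an iterable of Rule objects (here a tuple), so a single Rule needs a trailing comma after it. Without the comma the parentheses only group the expression, rules becomes a bare Rule object, and Scrapy raises an error.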
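The two points above, filtering on response.url and the trailing comma, can be checked in isolation with the standard library alone. This is a minimal sketch: is_profile_url is a name made up here, the sample URLs are invented for illustration, and a plain string stands in for the Rule object.

```python
import re

# Same pattern as in parse_item, with the dots escaped so they match literally.
PROFILE_RE = re.compile(r'^http://www\.yellowpages\.co\.th/en/profile/\S+')


def is_profile_url(url):
    """Return True when url looks like a company profile page.

    is_profile_url is a hypothetical helper, not part of the project.
    """
    return PROFILE_RE.search(url) is not None


# Invented sample URLs: only the first should pass the filter.
samples = [
    'http://www.yellowpages.co.th/en/profile/123abc',
    'http://www.yellowpages.co.th/en/heading/Some-Category?page=0',
    'http://www.yellowpages.co.th/',
]
for url in samples:
    print(is_profile_url(url), url)

# A plain string stands in for a Rule object here.
rule = 'Rule(...)'
with_comma = (rule,)     # one-element tuple: the shape CrawlSpider.rules needs
without_comma = (rule)   # just the object itself; the parentheses only group

print(type(with_comma).__name__)
print(type(without_comma).__name__)
```

The last two lines print different type names, which is the whole difference the trailing comma makes.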

Saving items to Excel through an item pipeline

In Scrapy, items are processed and saved in a pipeline. Here the item contents are written to an Excel file. The code first:

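    from openpyxl import Workbook


    class YellowpagescrawlerPipeline(object):
        # The workbook is created once, when the class is defined.
        wb = Workbook()
        ws = wb.active
        # Header row: company name, business category, telephone, email, URL.
        ws.append(['公司名称', '业务分类', '联系电话', '电子邮件', '链接地址'])

        def process_item(self, item, spider):
            line = [item['CompanyName'], item['CompanyCategory'], item['CompanyTel'],
                    item['CompanyMail'], item['CompanyURL']]
            self.ws.append(line)
            # Saved after every item, so partial results survive an interrupted crawl.
            # Relative path: the file is written to the current working directory.
            self.wb.save('CompanyInfo.xlsx')
            return item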

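openpyxl is a third-party library, so it has to be installed separately (for example with pip install openpyxl). The code itself is straightforward.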
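The pipeline above rewrites the whole file for every item. Scrapy also calls the optional open_spider and close_spider methods on a pipeline, so the file can be opened once and closed once. The sketch below shows that lifecycle; to keep it runnable with the standard library alone it swaps openpyxl for the csv module, and the class name CsvExportPipeline, the FIELDS list and the output path are assumptions made for illustration.

```python
import csv
import os
import tempfile

# Column order of the output; the names are the item keys used above.
FIELDS = ['CompanyName', 'CompanyCategory', 'CompanyTel', 'CompanyMail', 'CompanyURL']


class CsvExportPipeline:
    """Hypothetical pipeline: opens the file once, writes one row per item."""

    def __init__(self, path='CompanyInfo.csv'):
        self.path = path
        self.file = None
        self.writer = None

    def open_spider(self, spider):
        # Called once when the spider starts.
        self.file = open(self.path, 'w', newline='', encoding='utf-8')
        self.writer = csv.writer(self.file)
        self.writer.writerow(FIELDS)

    def process_item(self, item, spider):
        # .get() leaves a cell empty instead of raising when a field was never set.
        self.writer.writerow([item.get(name, '') for name in FIELDS])
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes; flushes and closes the file.
        self.file.close()


# Demo run outside Scrapy: None stands in for the spider argument.
demo_path = os.path.join(tempfile.mkdtemp(), 'CompanyInfo.csv')
pipeline = CsvExportPipeline(demo_path)
pipeline.open_spider(None)
pipeline.process_item({'CompanyName': 'Example Co', 'CompanyTel': '02 000 0000'}, None)
pipeline.close_spider(None)

with open(demo_path, newline='', encoding='utf-8') as f:
    rows = list(csv.reader(f))
print(rows)
```

The trade-off is that nothing is guaranteed to be on disk until close_spider runs, whereas saving after every item keeps partial results at the cost of repeated writes.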

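To enable the pipeline, register it in the ITEM_PIPELINES setting in settings.py:

    ITEM_PIPELINES = {
        'YellowPagesCrawler.pipelines.YellowpagescrawlerPipeline': 200,
    }

Here 200 is the priority: the smaller the value, the higher the priority, so pipelines with smaller values process the item first.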
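How the numbers translate into an execution order can be sketched with a plain dictionary. The second entry, CleanupPipeline, is hypothetical and only there to give the sort something to order; the sorting mimics the rule that lower values run first and is not Scrapy's own code.

```python
# Hypothetical settings: CleanupPipeline does not exist in the project.
ITEM_PIPELINES = {
    'YellowPagesCrawler.pipelines.YellowpagescrawlerPipeline': 200,
    'YellowPagesCrawler.pipelines.CleanupPipeline': 100,
}

# Lower value = earlier in the chain.
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)

for position, path in enumerate(run_order, start=1):
    print(position, path, ITEM_PIPELINES[path])
```

With these values the made-up cleanup step would see each item before the Excel pipeline does.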

Crawl results:

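Changed requirement: crawl companies in one category

A new requirement came up during the project: crawl only the companies in a specific category, for example:

http://www.yellowpages.co.th/en/heading/Plastics-Specialties-Wholesales&Manufacturers?page=0

Each page lists a number of companies, and clicking a company opens its detail page, so the spider is changed as follows: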

    class YPSpider(scrapy.Spider):
        name = "YPSpider"
        allowed_domains = ['yellowpages.co.th']
        base_url = 'http://www.yellowpages.co.th/en/heading/Plastics-Specialties-Wholesales&Manufacturers?page='

        def start_requests(self):
            # One request per list page: page=0 .. page=76.
            for i in range(0, 77):
                url = self.base_url + str(i)
                print(url)
                yield Request(url, callback=self.parse)

        def parse(self, response):
            # Links and names come from the same <h3><a> elements and are
            # assumed to line up by index.
            urls = response.xpath('.//h3/a/@href').extract()
            CoNames = response.xpath('.//h3/a/text()').extract()
            for index in range(0, len(urls)):
                print(urls[index])
                yield Request(urls[index], callback=self.getItems, meta={'CoName': CoNames[index]})

        def getItems(self, response):
            # YPItem is not shown in this article; it is assumed to be an Item
            # imported from items.py with the fields used below.
            item = YPItem()
            item['CompanyName'] = str(response.meta['CoName'])
            item['CompanyURL'] = response.url
            # The two kinds of detail page are parsed separately.
            if re.search(r'^http://www\.yellowpages\.co\.th/en/profile/\S+', response.url):
                telnumbers = response.xpath('.//div[contains(text(),"Telephone")]/following::*[1]/a/text()').extract()
                if not telnumbers:
                    telnumbers = response.xpath('.//div[contains(text(),"Telephone")]/following::*[1]/text()').extract()
                telnumbers = [tel.strip() for tel in telnumbers]
                mail = response.xpath('.//div[contains(text(),"Email")]/following::*[1]/a/text()').extract()
                if not mail:
                    mail = response.xpath('.//div[contains(text(),"Email")]/following::*[1]/text()').extract()
                mail = [m.strip() for m in mail]
            else:
                telnumbers = response.xpath('.//a[contains(@href,"tel")]/nobr/text()').extract()
                mail = response.xpath('.//a[contains(@href,"mailto")]/text()').extract()
            # Fall back to a placeholder when nothing was found.
            item['CompanyTel'] = ' '.join(telnumbers) or "no Telephone"
            item['CompanyMail'] = mail[0] if mail else "no Email"
            return item

Note that different requests get different callback functions. Also, when yielding a Request, you can pass along any values you need through meta, so that the callback function can use them.

Passing the variable:

    yield Request(urls[index], callback=self.getItems, meta={'CoName': CoNames[index]})

Using it in the callback function:

    item['CompanyName'] = str(response.meta['CoName'])
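The pairing of each link with the company name that travels in meta can be sketched without Scrapy. This is an illustration under assumptions: build_detail_requests is a made-up helper, the sample hrefs and names are invented, and each (url, meta) pair stands for one Request(url, callback=self.getItems, meta=meta). It uses zip instead of an index loop, and urljoin so that relative hrefs would also resolve.

```python
from urllib.parse import urljoin


def build_detail_requests(page_url, hrefs, names):
    """Return (absolute_url, meta) pairs, one per company link.

    Hypothetical helper: each pair stands for one Request with that meta dict.
    """
    pairs = []
    # zip stops at the shorter list, so a missing name cannot raise IndexError.
    for href, name in zip(hrefs, names):
        absolute = urljoin(page_url, href)  # leaves absolute hrefs unchanged
        pairs.append((absolute, {'CoName': name.strip()}))
    return pairs


# Invented sample data standing in for the two XPath results in parse().
page = 'http://www.yellowpages.co.th/en/heading/Example?page=0'
hrefs = ['/en/profile/123abc', 'http://www.yellowpages.co.th/en/profile/456def']
names = [' Example Plastics Co. ', 'Sample Trading Ltd.']

for url, meta in build_detail_requests(page, hrefs, names):
    print(url, meta['CoName'])
```

Inside a real spider the same resolution is available as response.urljoin(href), and the meta dict is read back in the callback through response.meta.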