Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/QQB67G8COM/article/details/87369116

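I. Sqoop2 provides a command-line shell that talks to the Sqoop 2 server over its REST interface. The client can run in two modes: interactive mode and batch mode. Batch mode does not currently support the create, update, and clone commands. Interactive mode supports every available command.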
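A minimal sketch of batch mode follows. It assumes that:

- `sqoop2-shell` is on PATH and accepts a script file as its argument, as the Sqoop 1.99.x client documentation describes.
- The server listens on master:12000.
- A job named hdfsTojdbc (the one built in section III) already exists.

The script uses only commands that batch mode supports. The links and the job still have to be created interactively.

```bash
#!/usr/bin/env bash
# Sketch: run Sqoop2 shell commands in batch mode.
# Assumptions: sqoop2-shell is on PATH, the server listens on master:12000,
# and the job "hdfsTojdbc" was already created in interactive mode.
set -euo pipefail

# Write a batch script. create/update/clone are deliberately absent
# because batch mode does not support them.
write_batch_script() {
  local out="$1" job="$2"
  cat > "$out" <<EOF
set server --host master --port 12000 --webapp sqoop
show connector
show link
start job --name ${job}
EOF
}

script_file="$(mktemp "${TMPDIR:-/tmp}/sqoop-batch.XXXXXX")"
write_batch_script "$script_file" hdfsTojdbc

if command -v sqoop2-shell >/dev/null 2>&1; then
  sqoop2-shell "$script_file"
else
  echo "sqoop2-shell not found; batch script left at $script_file" >&2
fi
```

The same file can be reused unchanged, for example from cron, once the links and job exist.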

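II. Sqoop2 introduces connectors, which Sqoop1 does not have. Data transfers are set up by running the create, update, and clone commands on links and jobs built from these connectors. Below is a brief outline of the workflow, followed by a worked example.

First, you need the JAR for each connector involved. For example, to move data from HDFS into MySQL you need at least the official HDFS connector package and the JDBC connector package. You then create and configure two links, hdfs-link and jdbc-link. Next, you create a job from these two links and configure it. Finally, you start the job.

Seen from Java, the connector JAR is like a class, each link is like an object instantiated from that class, the job is like the main function, and start is running the program.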
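On the JDBC side, the Sqoop2 server also needs the MySQL JDBC driver JAR on its classpath. A minimal check is sketched below. It assumes two things, both of which are assumptions to adjust for your installation:

- The driver was copied into the directory named by `SQOOP_SERVER_EXTRA_LIB`, which is the variable the Sqoop 1.99.7 server reads for extra JARs such as JDBC drivers.
- The JAR's file name starts with `mysql-connector-java`.

```bash
#!/usr/bin/env bash
# Sketch: check that a MySQL JDBC driver jar is available to the Sqoop2 server.
# Assumptions: drivers live in $SQOOP_SERVER_EXTRA_LIB (or the directory given
# as $1) and the jar name starts with "mysql-connector-java".

find_mysql_driver() {
  local dir="${1:-${SQOOP_SERVER_EXTRA_LIB:-}}"
  if [ -z "$dir" ] || [ ! -d "$dir" ]; then
    echo "extra lib directory not set or missing: '$dir'" >&2
    return 2
  fi
  # Print every matching jar; succeed only if at least one exists.
  local found=1 jar
  for jar in "$dir"/mysql-connector-java*.jar; do
    [ -e "$jar" ] || continue   # the glob matched nothing
    echo "$jar"
    found=0
  done
  return "$found"
}

# Usage:
#   find_mysql_driver || echo "copy the driver jar there and restart sqoop2-server"
```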

III. Exporting HDFS data to a MySQL database

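1. Configure mapred-site.xml in Hadoop and start the JobHistory server. This mainly lets Sqoop2 query the status of its jobs, for example whether a job finished successfully.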

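```xml
<!-- add inside <configuration> in $HADOOP_HOME/etc/hadoop/mapred-site.xml -->
<property>
  <name>mapreduce.jobhistory.address</name>
  <value>master:10020</value>
</property>
```

```bash
$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver
```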
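The sketch below checks this setting before going further. It makes these assumptions:

- The config file is at the default location, `$HADOOP_HOME/etc/hadoop/mapred-site.xml`.
- A plain text match is good enough, so the XML is not really parsed.
- The JobHistory port can be probed with bash's `/dev/tcp` redirection plus coreutils `timeout`.

The host master and port 10020 are the values from the configuration above.

```bash
#!/usr/bin/env bash
# Sketch: verify the JobHistory settings Sqoop2 relies on for job status.

# Succeeds if mapred-site.xml declares mapreduce.jobhistory.address.
# Plain text match, not XML parsing: assumes <name>...</name> is on one line.
has_jobhistory_address() {
  local conf="${1:-${HADOOP_HOME:-}/etc/hadoop/mapred-site.xml}"
  [ -f "$conf" ] && grep -qF '<name>mapreduce.jobhistory.address</name>' "$conf"
}

# Succeeds if something accepts TCP connections on host:port within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Usage on the cluster:
#   has_jobhistory_address || echo "mapreduce.jobhistory.address is missing"
#   port_open master 10020 || echo "JobHistory server is not reachable"
```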

2. In the Sqoop2 client, point it at the Sqoop2 server (12000 is the default port):

```
sqoop:000> set server --host master --port 12000 --webapp sqoop
```
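If `set server` seems to work but later commands fail, it can help to check the REST endpoint directly. The sketch below assumes that:

- The server answers `GET /version` under the webapp path, as described in the Sqoop 2 REST API documentation.
- `curl` is installed.

The host, port, and webapp name are the values used in the command above.

```bash
#!/usr/bin/env bash
# Sketch: build the Sqoop2 REST base URL and probe the server with curl.

# Base URL from host, port, and webapp name, mirroring the arguments of
# "set server --host ... --port ... --webapp ...".
sqoop_base_url() {
  local host="$1" port="${2:-12000}" webapp="${3:-sqoop}"
  printf 'http://%s:%s/%s\n' "$host" "$port" "$webapp"
}

# Prints the server's version response. Fails if the server is unreachable
# or answers with an HTTP error (curl --fail).
check_sqoop_server() {
  curl --silent --show-error --fail --max-time 5 "$(sqoop_base_url "$@")/version"
}

# Usage: check_sqoop_server master 12000 sqoop
```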

3. Prepare the data on HDFS and in MySQL

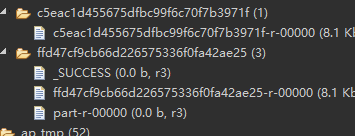

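On the HDFS side, make sure the directory contains only the one file that holds the data. Delete any other files.

On the MySQL side, note that my data is used for analysis, and for business reasons the table does not need a primary key.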
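Following the HDFS note above, the sketch below checks that a directory holds exactly one regular file and that the file is non-empty. It parses `hdfs dfs -ls` output and assumes the standard listing layout, in which field 1 holds the permissions and field 5 holds the size in bytes. Pass it the directory you will later enter as the job's Input directory.

```bash
#!/usr/bin/env bash
# Sketch: confirm an HDFS directory holds exactly one regular file, and that
# the file is non-empty, before using it as the job's input directory.

# Reads `hdfs dfs -ls` output on stdin. Regular files start with "-" in
# field 1; field 5 is the size. Directories ("d...") and the
# "Found N items" header line are ignored.
listing_has_single_data_file() {
  awk '$1 ~ /^-/ { files++; if ($5 > 0) nonempty++ }
       END { exit !(files == 1 && nonempty == 1) }'
}

check_input_dir() {
  local dir="$1"
  if hdfs dfs -ls "$dir" | listing_has_single_data_file; then
    echo "OK: $dir contains exactly one non-empty file"
  else
    echo "Clean up $dir: keep only the one data file" >&2
    return 1
  fi
}

# Usage: check_input_dir /tmp/user/<userid>
```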

4. Start Hadoop, the JobHistory server, and Sqoop2:

```bash
$ start-all.sh && $HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver && sqoop2-server start
```

5. List the connectors that ship with Sqoop2:

```
sqoop:000> show connector
+------------------------+---------+------------------------------------------------------------+----------------------+
| Name                   | Version | Class                                                      | Supported Directions |
+------------------------+---------+------------------------------------------------------------+----------------------+
| generic-jdbc-connector | 1.99.7  | org.apache.sqoop.connector.jdbc.GenericJdbcConnector       | FROM/TO              |
| kite-connector         | 1.99.7  | org.apache.sqoop.connector.kite.KiteConnector              | FROM/TO              |
| oracle-jdbc-connector  | 1.99.7  | org.apache.sqoop.connector.jdbc.oracle.OracleJdbcConnector | FROM/TO              |
| hdfs-connector         | 1.99.7  | org.apache.sqoop.connector.hdfs.HdfsConnector              | FROM/TO              |
| ftp-connector          | 1.99.7  | org.apache.sqoop.connector.ftp.FtpConnector                | TO                   |
| kafka-connector        | 1.99.7  | org.apache.sqoop.connector.kafka.KafkaConnector            | TO                   |
| sftp-connector         | 1.99.7  | org.apache.sqoop.connector.sftp.SftpConnector              | TO                   |
+------------------------+---------+------------------------------------------------------------+----------------------+
```

6. Create the HDFS and JDBC links. Mine already exist, so I use update to modify them.

```
# Create a link on hdfs-connector. Name, URI, and the Hadoop conf directory are required;
# fill in the other fields as your use case requires, or press Enter to leave them empty.
sqoop:000> create link --connector hdfs-connector

sqoop:000> update link --name hdfs-link
Updating link with name hdfs-link
Please update link:
Name: hdfs-link

HDFS cluster

URI: hdfs://master:8020
Conf directory: /hadoopeco/hadoop-2.8.5/etc/hadoop
Additional configs::
There are currently 0 values in the map:
entry#
link was successfully updated with status OK
```

```
# Create a link on generic-jdbc-connector. Name, Driver class, Connection String, Username,
# and Password are required; fill in the rest as needed, or press Enter to leave them empty.
sqoop:000> create link --connector generic-jdbc-connector

sqoop:000> update link --name jdbc-link
Updating link with name jdbc-link
Please update link:
Name: jdbc-link

Database connection

Driver class: com.mysql.jdbc.Driver
Connection String: jdbc:mysql://master:3306/AP_db
Username: root
Password: **********
Fetch Size:
Connection Properties:
There are currently 0 values in the map:
entry#

SQL Dialect

Identifier enclose:
link was successfully updated with status OK
```

List the links that have been created:

```
sqoop:000> show link
+-----------+------------------------+---------+
| Name      | Connector Name         | Enabled |
+-----------+------------------------+---------+
| hdfs-link | hdfs-connector         | true    |
| jdbc-link | generic-jdbc-connector | true    |
+-----------+------------------------+---------+
```

7. Create a job from the two links

```
sqoop:000> create job -f hdfs-link -t jdbc-link

sqoop:000> update job --name hdfsTojdbc
Updating job with name hdfsTojdbc
Please update job:
Name: hdfsTojdbc  # job name, required

Input configuration

Input directory:  # a directory on HDFS, e.g. /tmp/user/<userid>
Override null value:
Null value:

Incremental import

Incremental type:
  0 : NONE
  1 : NEW_FILES
Choose: 0  # choose 0; required
Last imported date:

Database target

Schema name:
Table name: t_c5eac1d455675dfbc99f6c70f7b3971f  # table name, required
Column names:
There are currently 0 values in the list:
element#
Staging table:
Clear stage table:
2019-02-15T12:04:49,413 INFO [UpdateThread] org.apache.sqoop.repository.JdbcRepositoryTransaction - Attempting transaction commit

Throttling resources

Extractors:
Loaders:

Classpath configuration

Extra mapper jars:
There are currently 0 values in the list:
element#
Job was successfully updated with status OK
```

8. Run the job and check its status

Start the job:

```
sqoop:000> start job --name "hdfsTojdbc"
Submission details
Job Name: hdfsTojdbc
Server URL: http://localhost:12000/sqoop/
Created by: ljj
Creation date: 2019-02-15 12:27:46 EST
Lastly updated by: ljj
External ID: job_1550244884040_0002
  http://master:8088/proxy/application_1550244884040_0002/
2019-02-15 12:27:46 EST: BOOTING - Progress is not available  # no progress reported yet
```

Check the job status. If mapreduce.jobhistory.address is not configured, the status cannot be queried, because Sqoop2 cannot obtain the status of the underlying MapReduce job:

```
sqoop:000> status job -name "hdfsTojdbc"
Submission details
Job Name: hdfsTojdbc
Server URL: http://localhost:12000/sqoop/
Created by: ljj
Creation date: 2019-02-15 12:30:30 EST
Lastly updated by: ljj
External ID: job_1550244884040_0002
  http://master:8088/proxy/application_1550244884040_0002/
2019-02-15 12:31:56 EST: SUCCEEDED
Counters:
  org.apache.hadoop.mapreduce.FileSystemCounter
    FILE_LARGE_READ_OPS: 0
    FILE_WRITE_OPS: 0
    HDFS_READ_OPS: 59
    HDFS_BYTES_READ: 47691
    HDFS_LARGE_READ_OPS: 0
    FILE_READ_OPS: 0
    FILE_BYTES_WRITTEN: 3090006
    FILE_BYTES_READ: 0
    HDFS_WRITE_OPS: 0
    HDFS_BYTES_WRITTEN: 0
  org.apache.hadoop.mapreduce.lib.output.FileOutputFormatCounter
    BYTES_WRITTEN: 0
  org.apache.hadoop.mapreduce.lib.input.FileInputFormatCounter
    BYTES_READ: 0
  org.apache.hadoop.mapreduce.JobCounter
    TOTAL_LAUNCHED_MAPS: 9
    MB_MILLIS_MAPS: 846051328
    VCORES_MILLIS_MAPS: 826222
    SLOTS_MILLIS_MAPS: 826222
    OTHER_LOCAL_MAPS: 9
    MILLIS_MAPS: 826222
  org.apache.sqoop.submission.counter.SqoopCounters
    ROWS_READ: 183
    ROWS_WRITTEN: 183
  org.apache.hadoop.mapreduce.TaskCounter
    SPILLED_RECORDS: 0
    MERGED_MAP_OUTPUTS: 0
    VIRTUAL_MEMORY_BYTES: 19212435456
    MAP_INPUT_RECORDS: 0
    SPLIT_RAW_BYTES: 2202
    MAP_OUTPUT_RECORDS: 183
    FAILED_SHUFFLE: 0
    PHYSICAL_MEMORY_BYTES: 2263040000
    GC_TIME_MILLIS: 138501
    CPU_MILLISECONDS: 71780
    COMMITTED_HEAP_BYTES: 1480065024
Job executed successfully
```
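When checking a job from a script (for example, batch mode piping `status job` output), the two things worth reading in the output above are the final state line and whether ROWS_READ equals ROWS_WRITTEN. The sketch below parses that text. It makes these assumptions:

- The state names (BOOTING, RUNNING, SUCCEEDED, FAILED, FAILURE_ON_SUBMIT, NEVER_EXECUTED, UNKNOWN) are Sqoop2's submission status values.
- The output follows the "timestamp: STATE" and "NAME: value" layout shown above.

```bash
#!/usr/bin/env bash
# Sketch: interpret the text printed by `status job`.
# Assumption: the state appears as "<timestamp>: STATE" (as in
# "2019-02-15 12:31:56 EST: SUCCEEDED") and counters as "NAME: value".

# Prints the last submission state found on stdin (empty if none).
job_state() {
  grep -Eo ': (BOOTING|RUNNING|SUCCEEDED|FAILED|FAILURE_ON_SUBMIT|NEVER_EXECUTED|UNKNOWN)' \
    | tail -n 1 | cut -c3-
}

# Prints the value of counter $1 (e.g. ROWS_READ) from stdin.
counter_value() {
  awk -v key="$1:" '$1 == key { print $2; exit }'
}

# Succeeds only if the job SUCCEEDED and every row read was also written.
transfer_ok() {
  local out state rows_read rows_written
  out="$(cat)"
  state="$(printf '%s\n' "$out" | job_state)"
  rows_read="$(printf '%s\n' "$out" | counter_value ROWS_READ)"
  rows_written="$(printf '%s\n' "$out" | counter_value ROWS_WRITTEN)"
  [ "$state" = SUCCEEDED ] && [ -n "$rows_read" ] && [ "$rows_read" = "$rows_written" ]
}

# Usage (status.sqoop is a hypothetical batch file containing
# "set server --host master --port 12000 --webapp sqoop" and
# "status job --name hdfsTojdbc"):
#   sqoop2-shell status.sqoop | transfer_ok && echo "all rows transferred"
```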

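At this point the data has been inserted into MySQL successfully.

Safe-updates mode is enabled by default in some MySQL clients, such as MySQL Workbench. In that mode, UPDATE and DELETE statements are rejected unless the WHERE clause uses a key column or a LIMIT clause is given. Because this table has no primary key, you must turn safe-updates mode off before deleting data:

```sql
SET SQL_SAFE_UPDATES = 0;
```

The setting applies only to the current session. Afterwards, for the safety of the database, be sure to set it back to 1 with `SET SQL_SAFE_UPDATES = 1;`.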

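IV. Troubleshooting

Be sure to configure mapred-site.xml. Otherwise, you will not be able to view a job's status. In addition, stopping a job with `stop job -name jobname` will throw an exception, because Sqoop2 cannot obtain the MapReduce job's status.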