Paper

Abstract

- Despite effectiveness, FM can be hindered by its modelling of all feature interactions with the same weight, as not all feature interactions are equally useful and predictive.

- In this work, we improve FM by discriminating the importance of different feature interactions.

- Empirically, it is shown that on the regression task AFM betters FM with an 8.6% relative improvement, and consistently outperforms the state-of-the-art deep learning methods Wide&Deep [Cheng et al., 2016] and DeepCross [Shan et al., 2016] with a much simpler structure and fewer model parameters.

1 Introduction

- the key problem with PR (and other similar cross feature-based solutions, such as the wide component of Wide&Deep [Cheng et al., 2016]) is that for sparse datasets where only a few cross features are observed, the parameters for unobserved cross features cannot be estimated.

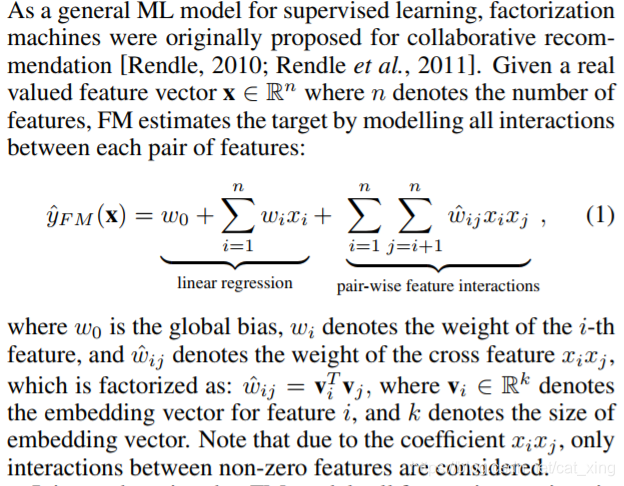

- To address the generalization issue of PR, factorization machines (FMs) were proposed [Rendle, 2010], which parameterize the weight of a cross feature as the inner product of the embedding vectors of the constituent features.

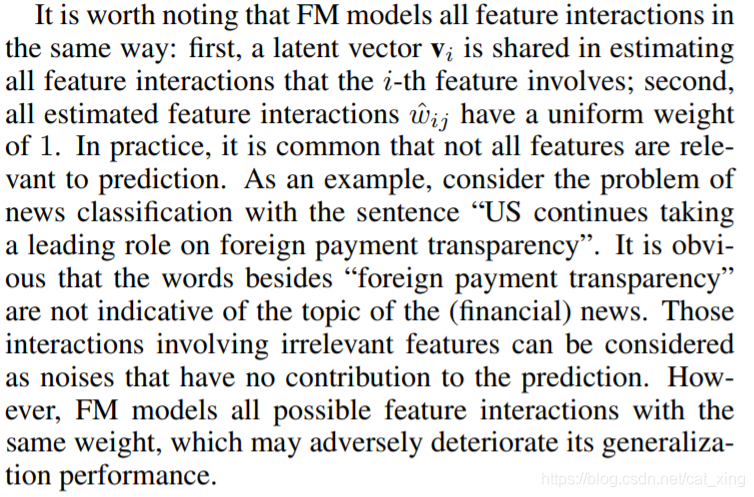
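To make the FM formulation above concrete, here is a minimal sketch in plain Python. It assumes the standard second-order FM form (global bias, linear terms, and pairwise terms weighted by embedding inner products). The names `fm_predict`, `w0`, `w`, and `V` are illustrative and not taken from the paper, and the toy numbers are made up.

```python
from itertools import combinations


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def fm_predict(x, w0, w, V):
    """Score one example with a second-order factorization machine.

    x  : feature values (mostly zeros for sparse data)
    w0 : global bias
    w  : per-feature linear weights
    V  : per-feature embedding vectors, V[i] has length k
    """
    nonzero = [i for i, xi in enumerate(x) if xi != 0]
    linear = sum(w[i] * x[i] for i in nonzero)
    # The weight of cross feature (i, j) is <V[i], V[j]>, so it is defined
    # even when i and j never co-occurred in the training data.
    pairwise = sum(dot(V[i], V[j]) * x[i] * x[j]
                   for i, j in combinations(nonzero, 2))
    return w0 + linear + pairwise


# Toy example (values are illustrative only).
x = [1, 0, 1, 1]
w0 = 0.5
w = [0.1, 0.2, 0.3, 0.4]
V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 2.0]]
score = fm_predict(x, w0, w, V)
```

Note that every pairwise term enters the sum with the same implicit weight of 1, which is the limitation the notes discuss next.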

- Despite great promise, we argue that FM can be hindered by its modelling of all factorized interactions with the same weight.

- the interactions with less useful features should be assigned a lower weight as they contribute less to the prediction.

- Nevertheless, FM lacks such capability of differentiating the importance of feature interactions, which may result in suboptimal prediction.

- In this work, we improve FM by discriminating the importance of feature interactions.

- We devise a novel model named AFM, which utilizes a recent advance in neural network modelling, the attention mechanism [Chen et al., 2017a; 2017b], to enable feature interactions to contribute differently to the prediction.

- More importantly, the importance of a feature interaction is automatically learned from data without any human domain knowledge.

- Extensive experiments show that our use of attention on FM serves two benefits: it not only leads to better performance, but also provides insight into which feature interactions contribute more to the prediction.

2 Factorization Machines

3 Attentional Factorization Machines

3.1 Model

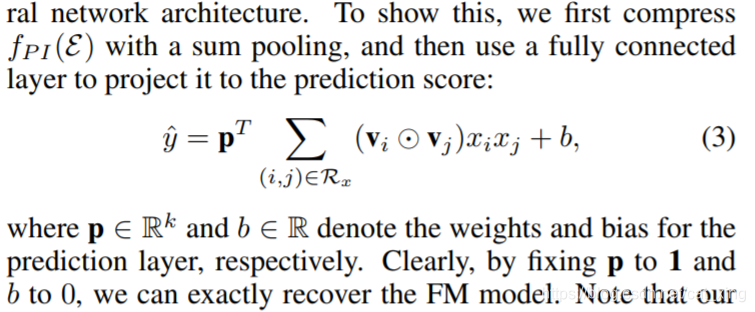

- the pair-wise interaction layer and the attention-based pooling layer are the main contribution of this paper.

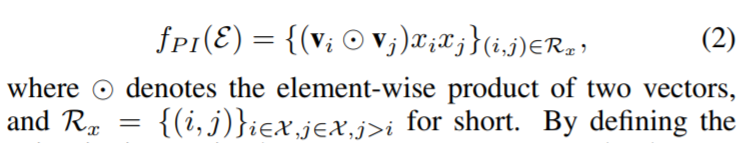

Pair-wise Interaction Layer


- It expands m vectors to m(m − 1)/2 interacted vectors, where each interacted vector is the element-wise product of two distinct vectors to encode their interaction.

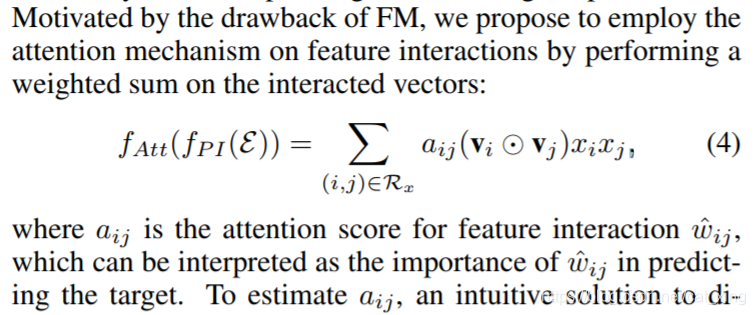
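The expansion described above can be sketched in a few lines of plain Python. This is an illustration only: the function name `pairwise_interactions` is hypothetical, and the input embeddings are assumed to be already scaled by their feature values.

```python
from itertools import combinations


def pairwise_interactions(embeddings):
    """Return the element-wise product of every pair of distinct vectors."""
    return [[a * b for a, b in zip(u, v)]
            for u, v in combinations(embeddings, 2)]


# Three embedded non-zero features (m = 3), embedding size k = 2.
embeddings = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
interacted = pairwise_interactions(embeddings)
m = len(embeddings)
```

With m = 3 this yields 3 = m(m − 1)/2 interacted vectors. Summing all entries of these vectors gives the pairwise term of a plain FM, so the layer keeps the same information but exposes each interaction separately.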

Attention-based Pooling Layer

- The idea is to allow different parts to contribute differently when compressing them to a single representation.

- For features that have never co-occurred in the training data, the attention scores of their interactions cannot be estimated.

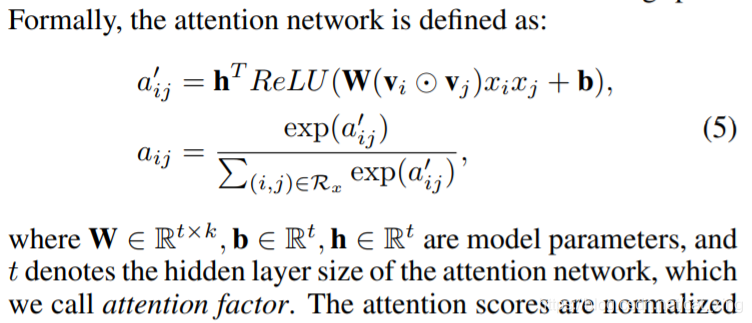

To address the generalization problem, we further parameterize the attention score with a multi-layer perceptron (MLP), which we call the attention network.

The attention network is used to compute the attention score of each feature interaction.
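As a rough illustration of attention-based pooling, the sketch below scores each interacted vector with a small MLP and uses the normalized scores as weights in a weighted sum. The specific shape (one hidden layer with ReLU, followed by a softmax over interactions) is an assumption made here for illustration. The parameters `W`, `b`, and `h` are hypothetical toy values, not learned ones.

```python
import math


def relu(z):
    return [max(0.0, t) for t in z]


def attention_scores(interacted, W, b, h):
    """Score each interacted vector with a one-hidden-layer MLP, then softmax."""
    raw = []
    for vec in interacted:
        hidden = relu([sum(wr * t for wr, t in zip(row, vec)) + bi
                       for row, bi in zip(W, b)])
        raw.append(sum(hj * t for hj, t in zip(h, hidden)))
    # Softmax, shifted by the max for numerical stability, so scores sum to 1.
    top = max(raw)
    exps = [math.exp(r - top) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(interacted, scores):
    """Weighted sum of the interacted vectors, one weight per interaction."""
    k = len(interacted[0])
    return [sum(s * vec[d] for s, vec in zip(scores, interacted))
            for d in range(k)]


interacted = [[3.0, 8.0], [5.0, 12.0], [15.0, 24.0]]
W = [[0.1, 0.0], [0.0, 0.1]]   # hypothetical hidden-layer weights
b = [0.0, 0.0]                 # hypothetical hidden-layer bias
h = [1.0, -1.0]                # hypothetical projection vector
scores = attention_scores(interacted, W, b, h)
pooled = attention_pool(interacted, scores)
```

Because the score is a function of the interacted vector rather than a free parameter per feature pair, a pair that never co-occurred in training still receives a score.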

3.2 Learning

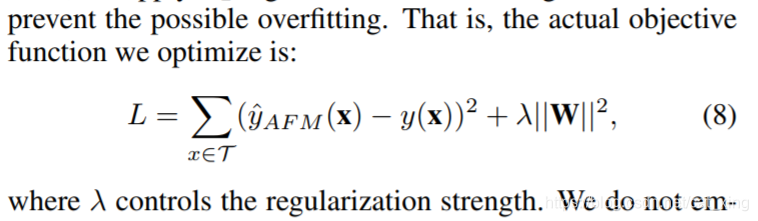

- In this paper, we focus on the regression task and optimize the squared loss.

- To optimize the objective function, we employ stochastic gradient descent (SGD).
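For intuition about optimizing a squared loss with SGD, here is a generic sketch of a single update. For brevity it uses a plain linear model rather than AFM itself; the function name `sgd_step` and the learning rate are illustrative assumptions.

```python
def sgd_step(weights, x, y, lr):
    """One SGD update for a linear model under the squared loss (pred - y)^2."""
    pred = sum(w * xi for w, xi in zip(weights, x))
    err = pred - y
    # d/dw_i (pred - y)^2 = 2 * err * x_i
    return [w - lr * 2.0 * err * xi for w, xi in zip(weights, x)]


def squared_loss(weights, x, y):
    return (sum(w * xi for w, xi in zip(weights, x)) - y) ** 2


weights = [0.0, 0.0]
x, y = [1.0, 2.0], 1.0
loss_before = squared_loss(weights, x, y)
weights = sgd_step(weights, x, y, lr=0.05)
loss_after = squared_loss(weights, x, y)
```

In AFM the same loss is applied to the model's prediction, and the gradients flow through the attention network and the embeddings as well.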

Overfitting Prevention

As AFM has a stronger representation ability than FM, it may be even easier to overfit the training data.

- Here we consider two techniques to prevent overfitting that have been widely used in neural network models: dropout and L2 regularization.

- The idea of dropout is to randomly drop some neurons (along with their connections) during training [Srivastava et al., 2014].

- We do not employ dropout on the attention network, as we find that the joint use of dropout on both the interaction layer and the attention network leads to stability issues and degrades the performance.
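The two techniques can be sketched as follows: dropout is applied to the output of the pair-wise interaction layer, and an L2 penalty is applied to the attention network's weight matrix. The "inverted" rescaling used here, which keeps the expected activation unchanged, is a common implementation choice assumed for illustration. The names `dropout`, `l2_penalty`, and `lam` are hypothetical.

```python
import random


def dropout(vectors, rate, rng):
    """Inverted dropout: zero each entry with probability `rate`, rescale the rest."""
    keep = 1.0 - rate
    return [[(t / keep if rng.random() < keep else 0.0) for t in vec]
            for vec in vectors]


def l2_penalty(W, lam):
    """lam times the squared Frobenius norm of a weight matrix."""
    return lam * sum(t * t for row in W for t in row)


rng = random.Random(0)  # seeded only to make the sketch repeatable
interacted = [[3.0, 8.0], [5.0, 12.0], [15.0, 24.0]]
dropped = dropout(interacted, rate=0.5, rng=rng)   # training time only
penalty = l2_penalty([[0.1, 0.0], [0.0, 0.1]], lam=2.0)
```

At test time dropout is disabled and the full set of interacted vectors is used. The penalty term is simply added to the training loss.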

4 Related Work

FMs [Rendle, 2010] are mainly used for supervised learning under sparse settings; for example, in situations where categorical variables are converted to a sparse feature vector via one-hot encoding.

- By directly extending FM with the attention mechanism that learns the importance of each feature interaction, our AFM is more interpretable and empirically demonstrates superior performance over Wide&Deep and DeepCross.

5 Experiments

5.1 Experimental Settings

Datasets.

- Frappe: The Frappe dataset has been used for context-aware recommendation, and contains 96,203 app usage logs of users under different contexts. The eight context variables are all categorical, including weather, city, daytime and so on. We convert each log (user ID, app ID and context variables) to a feature vector via one-hot encoding, obtaining 5,382 features.

- MovieLens: The MovieLens data has been used for personalized tag recommendation, and contains 668,953 tag applications of users on movies. We convert each tag application (user ID, movie ID and tag) to a feature vector and obtain 90,445 features.
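The conversion of a log into a one-hot feature vector can be sketched as below. The field names and values are made up for illustration and are not taken from either dataset; `build_index` and `one_hot` are hypothetical helper names.

```python
def build_index(logs):
    """Map every distinct (field, value) pair to a column of the feature vector."""
    index = {}
    for log in logs:
        for field, value in log.items():
            index.setdefault((field, value), len(index))
    return index


def one_hot(log, index):
    """Set a 1 in the column of each (field, value) pair present in the log."""
    vec = [0] * len(index)
    for field, value in log.items():
        vec[index[(field, value)]] = 1
    return vec


# Toy logs (illustrative only).
logs = [
    {"user": "u1", "app": "a1", "weather": "sunny"},
    {"user": "u2", "app": "a1", "weather": "rainy"},
]
index = build_index(logs)
vectors = [one_hot(log, index) for log in logs]
```

The total number of features equals the number of distinct (field, value) pairs, and each vector has exactly one non-zero entry per field, which is why the resulting data is very sparse.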

Evaluation Protocol

Baselines.

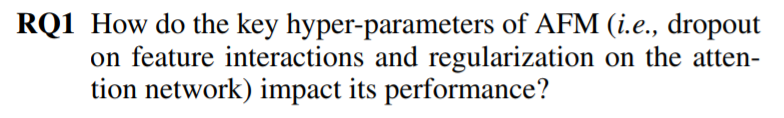

5.2 Hyper-parameter Investigation (RQ1)

First, we explore the effect of dropout on the pair-wise interaction layer.

- By setting the dropout ratio to a proper value, both AFM and FM can be significantly improved.

- Our implementation of FM offers better performance than LibFM (our implementation adapts the learning rate for each parameter based on its frequency and employs dropout).

- AFM outperforms FM and LibFM by a large margin.

We then study whether L2 regularization on the attention network is beneficial to AFM.

- Simply using dropout on the pair-wise interaction layer is insufficient to prevent overfitting for AFM. More importantly, tuning the attention network can further improve the generalization of AFM.

5.3 Impact of the Attention Network (RQ2)

We now focus on analyzing the impact of the attention network on AFM.

- We observe that AFM converges faster than FM.

- On Frappe, both the training and test error of AFM are much lower than those of FM, indicating that AFM can better fit the data and lead to more accurate prediction.

- On MovieLens, although AFM achieves a slightly higher training error than FM, the lower test error shows that AFM generalizes better to unseen data.

Micro-level Analysis

Besides the improved performance, another key advantage of AFM is that it is more explainable through interpreting the attention score of each feature interaction.

5.4 Performance Comparison (RQ3)

- First, we see that AFM achieves the best performance among all methods.

- Second, HOFM improves over FM, which is attributed to its modelling of higher-order feature interactions. However, the slight improvements are based on the rather expensive cost of almost doubling the number of parameters.

- Lastly, DeepCross performs the worst, due to the severe problem of overfitting.

6 Conclusion and Future Work

- Our AFM enhances FM by learning the importance of feature interactions with an attention network, which not only improves the representation ability but also the interpretability of an FM model.

- As AFM has a relatively high complexity, quadratic in the number of non-zero features, we will consider improving its learning efficiency, for example by using learning to hash [Zhang et al., 2016b; Shen et al., 2015] and data sampling [Wang et al., 2017b] techniques.