I. THUCNews Dataset

Download

Link: https://pan.baidu.com/s/1lziUTaCF7VfnuAKXrGftTw (extraction code: saag)

Overview

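This dataset is a subset of the THUCNews news text classification dataset released by the Tsinghua University NLP group. The full dataset has about 740,000 documents and takes a long time to train on.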

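This experiment uses 10 of its categories: sports (体育), finance (财经), real estate (房产), home (家居), education (教育), technology (科技), fashion (时尚), politics (时政), games (游戏) and entertainment (娱乐). Each category has 6,500 articles, for 65,000 news articles in total.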

The dataset is split as follows:

- cnews.train.txt: training set (50,000 articles)

- cnews.val.txt: validation set (5,000 articles)

- cnews.test.txt: test set (10,000 articles)

1. Preprocessing

cnews_loader.py is the data preprocessing module. It provides:

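- read_file(): reads the data file;
- build_vocab(): builds a character-level vocabulary and saves it to disk, so it does not have to be rebuilt on every run;
- read_vocab(): reads the saved vocabulary and converts it to a {word: id} mapping;
- read_category(): fixes the list of categories and converts it to a {category: id} mapping;
- to_words(): converts a sequence of ids back to text;
- process_file(): converts the dataset from text to fixed-length id sequences;
- batch_iter(): prepares shuffled mini-batches for training the network.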
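A minimal pure-Python sketch of what build_vocab(), read_vocab() and process_file() do together, assuming Keras' pad_sequences defaults (padding='pre', truncating='pre', pad value 0). The helper names build_char_vocab, pad_pre and texts_to_ids are hypothetical, and the two toy texts, vocab_size=5 and max_length=4 stand in for cnews.train.txt, 5000 and 600:

```python
from collections import Counter


def build_char_vocab(texts, vocab_size):
    """Character-level vocabulary: '<PAD>' gets id 0, then the most frequent characters."""
    counter = Counter(ch for text in texts for ch in text)
    words = ['<PAD>'] + [ch for ch, _ in counter.most_common(vocab_size - 1)]
    return words, {w: i for i, w in enumerate(words)}


def pad_pre(seq, max_length, value=0):
    """Mimic pad_sequences' defaults: keep the LAST max_length ids, pad on the left."""
    if len(seq) > max_length:
        seq = seq[len(seq) - max_length:]  # truncating='pre' drops ids from the front
    return [value] * (max_length - len(seq)) + seq


def texts_to_ids(texts, word_to_id, max_length):
    # Characters missing from the vocabulary are silently dropped, as in process_file()
    return [pad_pre([word_to_id[ch] for ch in text if ch in word_to_id], max_length)
            for text in texts]


texts = ['体育新闻', '财经新闻报道']
words, word_to_id = build_char_vocab(texts, vocab_size=5)
print(words)
print(texts_to_ids(texts, word_to_id, max_length=4))
```

Two consequences are worth knowing: characters outside the top vocab_size - 1 are dropped without warning, and with 'pre' truncation an article longer than max_length loses its beginning rather than its end.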

```python
# -*- coding: utf-8 -*-

import sys
from collections import Counter

import numpy as np
import tensorflow.contrib.keras as kr

# Detect the Python major version
if sys.version_info[0] > 2:
    is_py3 = True
else:
    reload(sys)
    sys.setdefaultencoding("utf-8")
    is_py3 = False


def native_word(word, encoding="utf-8"):
    """If a model trained under Python 3 is used under Python 2, call this to convert the character encoding."""
    if not is_py3:
        return word.encode(encoding)
    else:
        return word


def native_content(content):
    """Under Python 2, decode UTF-8 byte strings to unicode so that list() splits characters, not bytes."""
    if not is_py3:
        return content.decode('utf-8')
    else:
        return content


def open_file(filename, mode='r'):
    """
    Common file helper that works under both Python 2 and Python 3.
    mode: 'r' or 'w' for read or write
    """
    if is_py3:
        return open(filename, mode, encoding='utf-8', errors='ignore')
    else:
        return open(filename, mode)


def read_file(filename):
    """Read the data file; each line is 'label<TAB>content'."""
    contents, labels = [], []
    with open_file(filename) as f:
        for line in f:
            try:
                label, content = line.strip().split('\t')
                if content:
                    contents.append(list(native_content(content)))
                    labels.append(native_content(label))
            except ValueError:
                # Skip malformed lines (not exactly one tab, or undecodable text)
                pass
    return contents, labels


def build_vocab(train_dir, vocab_dir, vocab_size=5000):
    """Build a character-level vocabulary from the training set and save it."""
    data_train, _ = read_file(train_dir)

    all_data = []
    for content in data_train:
        # extend() appends every element of an iterable to the list:
        # a = [1, 2]; a.extend([3, 4])  ->  a == [1, 2, 3, 4]
        all_data.extend(content)

    counter = Counter(all_data)
    count_pairs = counter.most_common(vocab_size - 1)  # top-N (character, count) pairs
    words, _ = list(zip(*count_pairs))
    # Add <PAD> (id 0) so that all texts can be padded to the same length
    words = ['<PAD>'] + list(words)
    with open_file(vocab_dir, mode='w') as f:
        f.write('\n'.join(words) + '\n')


def read_vocab(vocab_dir):
    """Read the vocabulary and convert it to a {word: id} mapping."""
    with open_file(vocab_dir) as fp:
        # Strip only the newline: strip() would turn a space character into ''
        words = [native_content(_.rstrip('\n')) for _ in fp.readlines()]
    word_to_id = dict(zip(words, range(len(words))))
    return words, word_to_id


def read_category():
    """Fix the category list and convert it to a {category: id} mapping."""
    categories = ['体育', '财经', '房产', '家居', '教育', '科技', '时尚', '时政', '游戏', '娱乐']
    categories = [native_content(x) for x in categories]
    cat_to_id = dict(zip(categories, range(len(categories))))
    return categories, cat_to_id


def to_words(content, words):
    """Convert an id sequence back to text."""
    return ''.join(words[x] for x in content)


def process_file(filename, word_to_id, cat_to_id, max_length=600):
    """Convert a data file into fixed-length id sequences and one-hot labels."""
    contents, labels = read_file(filename)

    data_id, label_id = [], []
    for i in range(len(contents)):
        data_id.append([word_to_id[x] for x in contents[i] if x in word_to_id])
        label_id.append(cat_to_id[labels[i]])

    # Use Keras' pad_sequences to pad every text to a fixed length
    x_pad = kr.preprocessing.sequence.pad_sequences(data_id, max_length)
    # Convert the labels to one-hot vectors
    y_pad = kr.utils.to_categorical(label_id, num_classes=len(cat_to_id))
    return x_pad, y_pad


def batch_iter(x, y, batch_size=64):
    """Generate shuffled mini-batches."""
    data_len = len(x)
    num_batch = int((data_len - 1) / batch_size) + 1

    indices = np.random.permutation(np.arange(data_len))
    x_shuffle = x[indices]
    y_shuffle = y[indices]

    for i in range(num_batch):
        start_id = i * batch_size
        end_id = min((i + 1) * batch_size, data_len)
        yield x_shuffle[start_id:end_id], y_shuffle[start_id:end_id]
```

After preprocessing, the data have the following shapes:

| Data | Shape | Data | Shape |
|---|---|---|---|
| x_train | [50000, 600] | y_train | [50000, 10] |
| x_val | [5000, 600] | y_val | [5000, 10] |
| x_test | [10000, 600] | y_test | [10000, 10] |
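The labels have shape [N, 10] because process_file() one-hot encodes them, and batch_iter() slices the shuffled data into ceil(N / batch_size) mini-batches, so the last batch can be smaller. A stdlib-only sketch of both steps; one_hot and shuffled_batches are hypothetical stand-ins for kr.utils.to_categorical and batch_iter(), and the toy data are made up:

```python
import math
import random


def one_hot(label_ids, num_classes):
    """Stand-in for to_categorical: one row per label, a single 1 at the label's index."""
    return [[1 if j == i else 0 for j in range(num_classes)] for i in label_ids]


def shuffled_batches(x, y, batch_size, seed=0):
    """Same slicing logic as batch_iter(), using a seeded random permutation."""
    indices = list(range(len(x)))
    random.Random(seed).shuffle(indices)
    num_batch = (len(x) - 1) // batch_size + 1  # equals ceil(len(x) / batch_size)
    for i in range(num_batch):
        batch = indices[i * batch_size:(i + 1) * batch_size]
        # x and y are indexed with the same permutation, so pairs stay aligned
        yield [x[k] for k in batch], [y[k] for k in batch]


print(one_hot([0, 2], num_classes=3))
sizes = [len(bx) for bx, _ in shuffled_batches(list(range(10)), list(range(10)), 4)]
print(sizes)
# Batches per epoch for the 50,000-sample training set with batch_size=64
print(math.ceil(50000 / 64))
```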

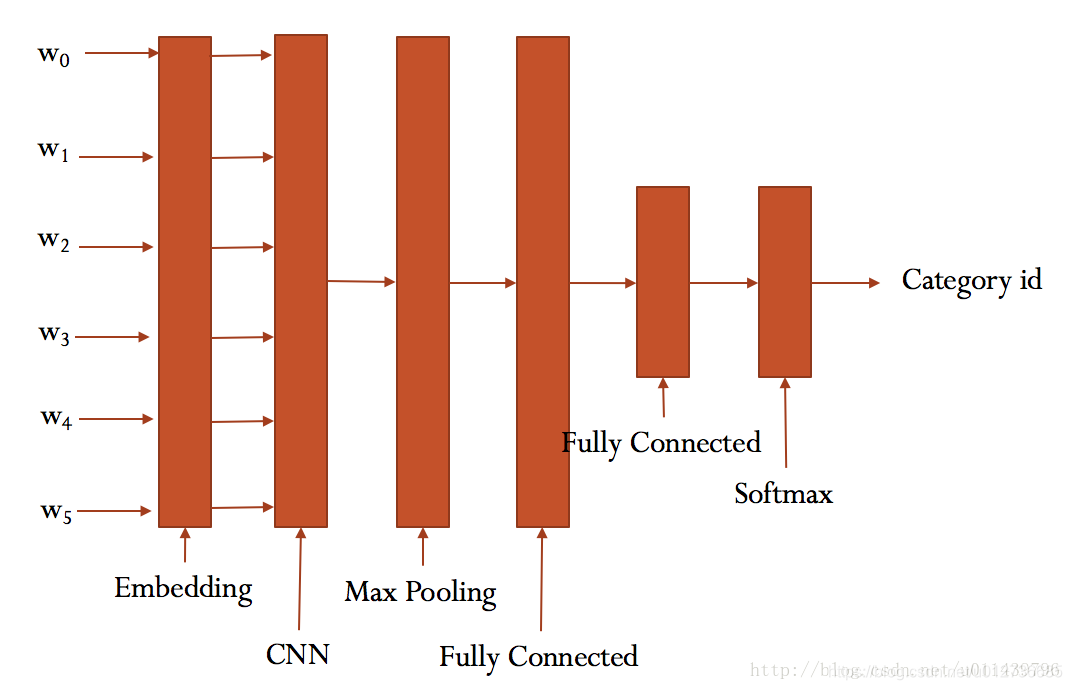

2. CNN (Convolutional Neural Network)

The CNN configuration parameters and network structure are defined in cnn_model.py.

The CNN network structure is illustrated in the schematic diagram.

```python
import tensorflow as tf


class TCNNConfig(object):
    """CNN configuration parameters"""

    embedding_dim = 64       # word embedding dimension
    seq_length = 600         # sequence length
    num_classes = 10         # number of classes
    num_filters = 128        # number of convolution kernels
    kernel_size = 5          # convolution kernel size
    vocab_size = 5000        # vocabulary size

    hidden_dim = 128         # neurons in the fully connected layer

    dropout_keep_prob = 0.5  # dropout keep probability
    learning_rate = 1e-3     # learning rate

    batch_size = 64          # batch size
    num_epochs = 10          # total number of epochs

    print_per_batch = 100    # print results every N batches
    save_per_batch = 10      # write to TensorBoard every N batches


class TextCNN(object):
    """CNN model for text classification"""

    def __init__(self, config):
        self.config = config

        # The three inputs that are fed in
        self.input_x = tf.placeholder(tf.int32, [None, self.config.seq_length], name='input_x')
        self.input_y = tf.placeholder(tf.float32, [None, self.config.num_classes], name='input_y')
        self.keep_prob = tf.placeholder(tf.float32, name='keep_prob')

        self.cnn()

    def cnn(self):
        """CNN model"""
        # Embedding lookup
        with tf.device('/gpu:0'):
            embedding = tf.get_variable('embedding', [self.config.vocab_size, self.config.embedding_dim])
            embedding_inputs = tf.nn.embedding_lookup(embedding, self.input_x)

        with tf.name_scope("cnn"):
            # CNN layer: the arguments are the input, the number of filters (num_filters)
            # and the kernel size (kernel_size)
            conv = tf.layers.conv1d(embedding_inputs, self.config.num_filters,
                                    self.config.kernel_size, name='conv')
            # Global max pooling: maximum over the sequence axis (axis 1) for each filter,
            # giving a [batch, num_filters] tensor
            gmp = tf.reduce_max(conv, axis=1, name='gmp')

        with tf.name_scope("score"):
            # Fully connected layer, followed by dropout and ReLU activation
            fc = tf.layers.dense(gmp, self.config.hidden_dim, name='fc1')
            fc = tf.contrib.layers.dropout(fc, self.keep_prob)
            fc = tf.nn.relu(fc)

            # Classifier
            self.logits = tf.layers.dense(fc, self.config.num_classes, name='fc2')
            self.y_pred_cls = tf.argmax(tf.nn.softmax(self.logits), 1)  # predicted class

        with tf.name_scope("optimize"):
            # Loss function: cross-entropy
            cross_entropy = tf.nn.softmax_cross_entropy_with_logits(logits=self.logits, labels=self.input_y)
            self.loss = tf.reduce_mean(cross_entropy)
            # Optimizer
            self.optim = tf.train.AdamOptimizer(learning_rate=self.config.learning_rate).minimize(self.loss)

        with tf.name_scope("accuracy"):
            # Accuracy (named acc, which is what run_cnn.py reads)
            correct_pred = tf.equal(tf.argmax(self.input_y, 1), self.y_pred_cls)
            self.acc = tf.reduce_mean(tf.cast(correct_pred, tf.float32))
```
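A quick back-of-the-envelope check of the tensor shapes and trainable-parameter counts in TextCNN, assuming tf.layers.conv1d's defaults (padding='valid', stride 1) and that every conv and dense layer has a bias (also the tf.layers default). This is pure arithmetic under those assumptions; no TensorFlow is needed:

```python
def textcnn_shapes(seq_length=600, embedding_dim=64, vocab_size=5000,
                   num_filters=128, kernel_size=5, hidden_dim=128, num_classes=10):
    # A 'valid' convolution with stride 1 shortens the sequence by kernel_size - 1
    conv_len = seq_length - kernel_size + 1
    shapes = {  # per-sample shapes; the batch dimension is added when printing
        'embedding_inputs': (seq_length, embedding_dim),
        'conv': (conv_len, num_filters),
        'gmp': (num_filters,),  # max over the sequence axis
        'fc1': (hidden_dim,),
        'logits': (num_classes,),
    }
    params = {
        'embedding': vocab_size * embedding_dim,
        'conv': kernel_size * embedding_dim * num_filters + num_filters,
        'fc1': num_filters * hidden_dim + hidden_dim,
        'fc2': hidden_dim * num_classes + num_classes,
    }
    return shapes, params


shapes, params = textcnn_shapes()
for name, shape in shapes.items():
    print(name, ('batch',) + shape)
print(params, sum(params.values()))
```

The embedding table dominates the parameter count. Because run_cnn.py resets config.vocab_size to len(words), the real embedding count depends on the size of the saved vocabulary file.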

3. Training and Validation

```python
# -*- coding: utf-8 -*-

import os
import sys
import time
from datetime import timedelta

import numpy as np
import tensorflow as tf
from sklearn import metrics

from cnn_model import TCNNConfig, TextCNN
from cnews_loader import read_vocab, read_category, batch_iter, process_file, build_vocab

base_dir = '/home/jie/Jie/codes/tf/NLP/cnews'
train_dir = os.path.join(base_dir, 'cnews.train.txt')
test_dir = os.path.join(base_dir, 'cnews.test.txt')
val_dir = os.path.join(base_dir, 'cnews.val.txt')
vocab_dir = os.path.join(base_dir, 'cnews.vocab.txt')

# Where the best validation checkpoint is saved
save_dir = 'checkpoints/textcnn'
save_path = os.path.join(save_dir, 'best_validation')


def get_time_dif(start_time):
    """Return the elapsed time."""
    end_time = time.time()
    time_dif = end_time - start_time
    return timedelta(seconds=int(round(time_dif)))


def feed_data(x_batch, y_batch, keep_prob):
    feed_dict = {
        model.input_x: x_batch,
        model.input_y: y_batch,
        model.keep_prob: keep_prob
    }
    return feed_dict


def evaluate(sess, x_, y_):
    """Evaluate accuracy and loss on a dataset."""
    data_len = len(x_)
    batch_eval = batch_iter(x_, y_, 128)
    total_loss = 0.0
    total_acc = 0.0
    for x_batch, y_batch in batch_eval:
        batch_len = len(x_batch)
        feed_dict = feed_data(x_batch, y_batch, 1.0)
        # loss and acc are per-batch means, so weight them by the batch size
        loss, acc = sess.run([model.loss, model.acc], feed_dict=feed_dict)
        total_loss += loss * batch_len
        total_acc += acc * batch_len

    return total_loss / data_len, total_acc / data_len


def train():
    print("Configuring TensorBoard and Saver...")
    # Configure TensorBoard. Delete the tensorboard folder before retraining,
    # otherwise the graphs will overlap
    tensorboard_dir = 'tensorboard/textcnn'
    if not os.path.exists(tensorboard_dir):
        os.makedirs(tensorboard_dir)

    tf.summary.scalar("loss", model.loss)
    tf.summary.scalar("accuracy", model.acc)
    merged_summary = tf.summary.merge_all()
    writer = tf.summary.FileWriter(tensorboard_dir)

    # Configure the Saver
    saver = tf.train.Saver()
    if not os.path.exists(save_dir):
        os.makedirs(save_dir)

    print("Loading training and validation data...")
    start_time = time.time()
    x_train, y_train = process_file(train_dir, word_to_id, cat_to_id, config.seq_length)
    x_val, y_val = process_file(val_dir, word_to_id, cat_to_id, config.seq_length)
    time_dif = get_time_dif(start_time)
    print("Time usage:", time_dif)

    # Create the session
    sess = tf.Session()
    sess.run(tf.global_variables_initializer())
    writer.add_graph(sess.graph)

    print("Training and evaluation...")
    start_time = time.time()
    total_batch = 0             # total number of batches processed
    best_acc_val = 0.0          # best validation accuracy so far
    last_improved = 0           # batch at which validation accuracy last improved
    require_improvement = 1000  # stop early after more than 1000 batches without improvement

    flag = False
    for epoch in range(config.num_epochs):
        print('Epoch:', epoch + 1)
        batch_train = batch_iter(x_train, y_train, config.batch_size)
        for x_batch, y_batch in batch_train:
            feed_dict = feed_data(x_batch, y_batch, config.dropout_keep_prob)

            if total_batch % config.save_per_batch == 0:
                # Write the training scalars to TensorBoard every save_per_batch batches
                s = sess.run(merged_summary, feed_dict=feed_dict)
                writer.add_summary(s, total_batch)

            if total_batch % config.print_per_batch == 0:
                # Report performance on this training batch and on the validation set.
                # A separate feed dict with keep_prob=1.0 keeps dropout on for the training step below.
                loss_train, acc_train = sess.run([model.loss, model.acc],
                                                 feed_dict=feed_data(x_batch, y_batch, 1.0))
                loss_val, acc_val = evaluate(sess, x_val, y_val)

                if acc_val > best_acc_val:
                    # Save the best model so far
                    best_acc_val = acc_val
                    last_improved = total_batch
                    saver.save(sess=sess, save_path=save_path)
                    improved_str = '*'
                else:
                    improved_str = ''

                time_dif = get_time_dif(start_time)
                msg = ('Iter: {0:>6}, Train Loss: {1:>6.2}, Train Acc: {2:>7.2%}, '
                       'Val Loss: {3:>6.2}, Val Acc: {4:>7.2%}, Time: {5} {6}')
                print(msg.format(total_batch, loss_train, acc_train, loss_val, acc_val, time_dif, improved_str))

            sess.run(model.optim, feed_dict=feed_dict)  # one optimization step (with dropout)
            total_batch += 1

            if total_batch - last_improved > require_improvement:
                # Validation accuracy has not improved for a long time: stop early
                print("No optimization for a long time, auto-stopping...")
                flag = True
                break  # leave the batch loop
        if flag:  # and the epoch loop
            break


def test():
    print("Loading test data...")
    start_time = time.time()
    x_test, y_test = process_file(test_dir, word_to_id, cat_to_id, config.seq_length)

    sess = tf.Session()
    sess.run(tf.global_variables_initializer())
    saver = tf.train.Saver()
    saver.restore(sess=sess, save_path=save_path)  # load the saved model

    print("Testing")
    loss_test, acc_test = evaluate(sess, x_test, y_test)
    msg = 'Test Loss: {0:>6.2}, Test Acc: {1:>7.2%}'
    print(msg.format(loss_test, acc_test))

    batch_size = 128
    data_len = len(x_test)
    num_batch = int((data_len - 1) / batch_size) + 1

    y_test_cls = np.argmax(y_test, 1)
    y_pred_cls = np.zeros(shape=len(x_test), dtype=np.int32)  # holds the predictions
    for i in range(num_batch):  # predict batch by batch
        start_id = i * batch_size
        end_id = min((i + 1) * batch_size, data_len)
        feed_dict = {
            model.input_x: x_test[start_id:end_id],
            model.keep_prob: 1.0
        }
        y_pred_cls[start_id:end_id] = sess.run(model.y_pred_cls, feed_dict=feed_dict)

    # Evaluation
    print("Precision, Recall and F1-Score...")
    print(metrics.classification_report(y_test_cls, y_pred_cls, target_names=categories))

    # Confusion matrix
    print("Confusion Matrix...")
    cm = metrics.confusion_matrix(y_test_cls, y_pred_cls)
    print(cm)

    time_dif = get_time_dif(start_time)
    print("Time usage:", time_dif)


if __name__ == '__main__':
    if len(sys.argv) != 2 or sys.argv[1] not in ['train', 'test']:
        raise ValueError("""usage: python run_cnn.py [train / test]""")

    print('Configuring CNN model...')
    config = TCNNConfig()
    if not os.path.exists(vocab_dir):  # rebuild the vocabulary if it does not exist
        build_vocab(train_dir, vocab_dir, config.vocab_size)
    categories, cat_to_id = read_category()
    words, word_to_id = read_vocab(vocab_dir)
    config.vocab_size = len(words)
    model = TextCNN(config)

    if sys.argv[1] == 'train':
        train()
    else:
        test()
```
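Why evaluate() multiplies each batch's loss and accuracy by batch_len: model.loss and model.acc are means over one batch, and the last batch is usually smaller (5,000 validation samples in batches of 128 leave a final batch of 8), so a plain average of the batch means would over-weight it. A small pure-Python illustration; batch_means is a hypothetical helper and the per-sample losses are made up:

```python
def batch_means(values, batch_size):
    """Per-batch means plus batch sizes, like running sess.run(model.loss) on each batch."""
    out = []
    for start in range(0, len(values), batch_size):
        batch = values[start:start + batch_size]
        out.append((sum(batch) / len(batch), len(batch)))
    return out


losses = [1.0] * 4 + [5.0]  # made-up per-sample losses; the last batch holds 1 sample
stats = batch_means(losses, batch_size=4)

naive = sum(m for m, _ in stats) / len(stats)          # unweighted mean of batch means
weighted = sum(m * n for m, n in stats) / len(losses)  # what evaluate() computes
true_mean = sum(losses) / len(losses)
print(naive, weighted, true_mean)

# Size of the last batch when 5,000 validation samples are split into batches of 128
print(batch_means([0.0] * 5000, 128)[-1][1])
```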

(1) Training

Training output:

```
Training and evalution...
Epoch: 1
Iter: 0, Train Loss: 2.3, Train Acc: 9.38%,Val Loss: 2.3, Val Acc: 9.38%, Time: 0:00:01 *
Iter: 100, Train Loss: 0.91, Train Acc: 76.56%,Val Loss: 1.3, Val Acc: 76.56%, Time: 0:00:02 *
Iter: 200, Train Loss: 0.32, Train Acc: 89.06%,Val Loss: 0.71, Val Acc: 89.06%, Time: 0:00:04 *
Iter: 300, Train Loss: 0.4, Train Acc: 82.81%,Val Loss: 0.58, Val Acc: 82.81%, Time: 0:00:05 *
Iter: 400, Train Loss: 0.25, Train Acc: 89.06%,Val Loss: 0.4, Val Acc: 89.06%, Time: 0:00:06 *
...
Iter: 2800, Train Loss: 0.011, Train Acc: 100.00%,Val Loss: 0.23, Val Acc: 100.00%, Time: 0:00:33
Iter: 2900, Train Loss: 0.058, Train Acc: 98.44%,Val Loss: 0.2, Val Acc: 98.44%, Time: 0:00:34 *
Iter: 3000, Train Loss: 0.0062, Train Acc: 100.00%,Val Loss: 0.21, Val Acc: 100.00%, Time: 0:00:35
...
Iter: 3700, Train Loss: 0.00073, Train Acc: 100.00%,Val Loss: 0.27, Val Acc: 100.00%, Time: 0:00:43
Iter: 3800, Train Loss: 0.087, Train Acc: 95.31%,Val Loss: 0.3, Val Acc: 95.31%, Time: 0:00:44
Iter: 3900, Train Loss: 0.0085, Train Acc: 100.00%,Val Loss: 0.22, Val Acc: 100.00%, Time: 0:00:45
No optimization for a long time, auto-stopping...
```

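Training stopped early at iteration 3900, within the 5th epoch. The asterisks, which are set from the real validation accuracy, show that the last improvement came at iteration 2900. Note, however, that the Val Acc column in this log simply repeats the training-batch accuracy, because the format call that printed it passed acc_train where acc_val belonged; the best validation accuracy therefore cannot be read from this log.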
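The stopping point can be checked by hand. With 50,000 training samples and batch_size=64, one epoch is 782 batches; the last asterisk in the log is at iteration 2900, and train() stops once total_batch - last_improved > require_improvement (1000). The sketch below replays only that counter logic, with last_improved taken from the log; early_stop_point is a hypothetical helper and nothing is retrained:

```python
import math


def early_stop_point(last_improved, require_improvement=1000):
    """Replay train()'s counter: total_batch is incremented after each
    optimization step, then compared with last_improved."""
    total_batch = last_improved
    while True:
        total_batch += 1
        if total_batch - last_improved > require_improvement:
            return total_batch


batches_per_epoch = math.ceil(50000 / 64)
stop = early_stop_point(last_improved=2900)
# The last step executed was batch index stop - 1; convert it to a 1-based epoch
epoch = (stop - 1) // batches_per_epoch + 1
print(batches_per_epoch, stop, epoch)
```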

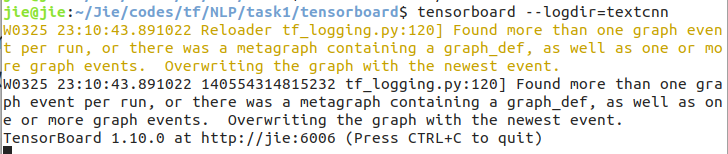

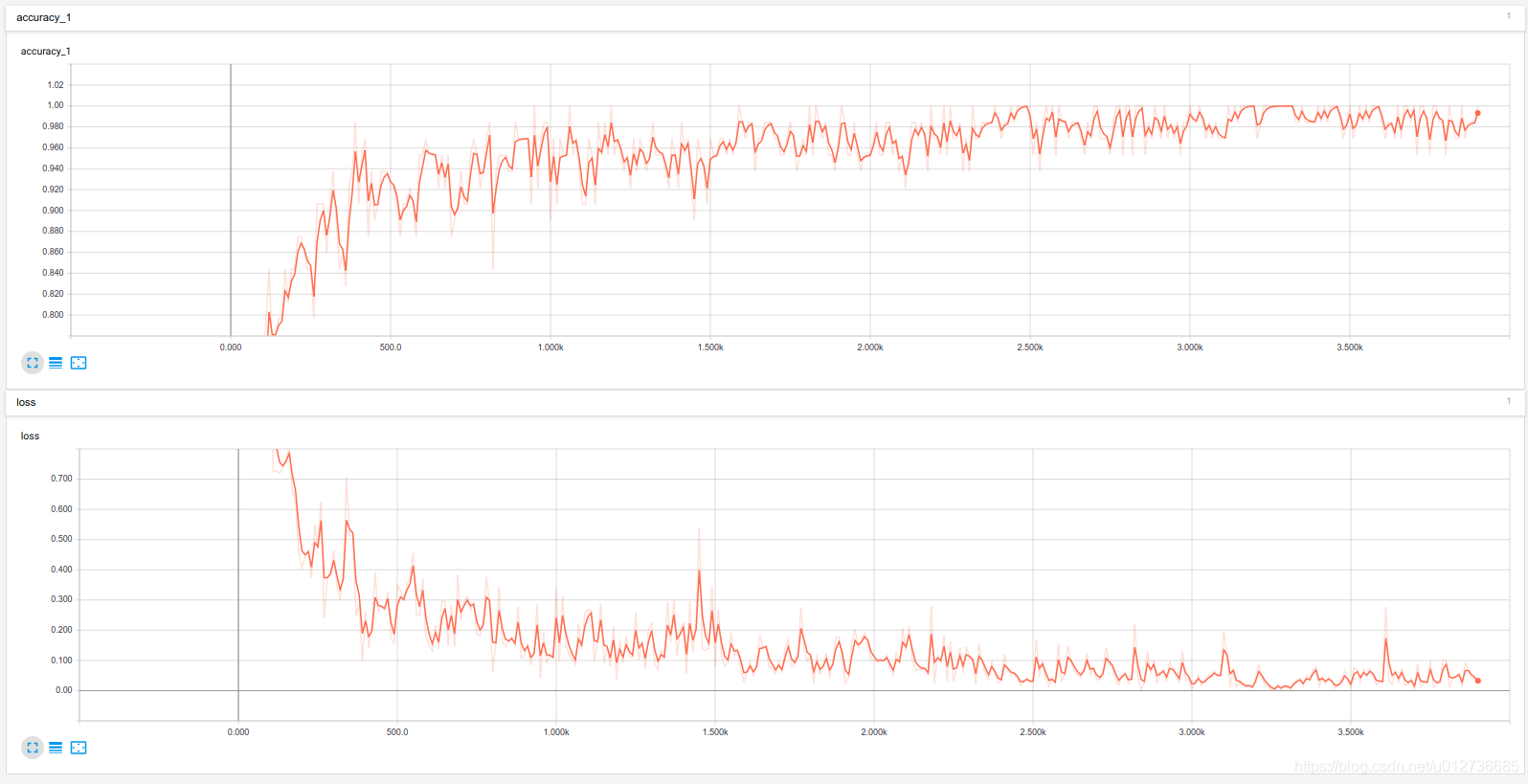

(2) Visualizing loss and accuracy

(3) Testing

```
Testing
Test Loss: 0.15, Test Acc: 95.78%
Precision, Recall and F1-Score...
              precision    recall  f1-score   support
          体育       0.99      0.99      0.99      1000
          财经       0.96      0.99      0.98      1000
          房产       1.00      1.00      1.00      1000
          家居       0.98      0.85      0.91      1000
          教育       0.86      0.95      0.91      1000
          科技       0.91      0.99      0.95      1000
          时尚       0.95      0.97      0.96      1000
          时政       0.96      0.94      0.95      1000
          游戏       1.00      0.94      0.97      1000
          娱乐       0.99      0.95      0.97      1000
   micro avg       0.96      0.96      0.96     10000
   macro avg       0.96      0.96      0.96     10000
weighted avg       0.96      0.96      0.96     10000
Confusion Matrix...
[[990   0   0   0   5   4   0   0   1   0]
 [  0 988   0   0   2   4   0   6   0   0]
 [  0   0 996   1   2   1   0   0   0   0]
 [  3  18   2 850  59  26  18  23   0   1]
 [  1   4   0   5 954  18  10   8   0   0]
 [  0   0   0   1   7 990   1   0   1   0]
 [  1   0   0   2  11   7 975   0   0   4]
 [  0  10   0   2  32  15   0 940   1   0]
 [  4   5   2   2  11  12  18   1 944   1]
 [  2   1   0   6  22   8   7   2   1 951]]
Time usage: 0:00:05
```

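Accuracy on the test set reaches 95.78%. Precision, recall and f1-score exceed 0.9 for nearly every class; the exceptions are the recall of 家居 (home, 0.85) and the precision of 教育 (education, 0.86).

The confusion matrix confirms that the classifier works well: every row is dominated by its diagonal entry, and the largest confusion is 59 家居 (home) articles predicted as 教育 (education).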
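The per-class numbers in classification_report can be recomputed from the confusion matrix above, where rows are true classes and columns are predicted classes (the sklearn.metrics.confusion_matrix convention): precision for class k is the diagonal entry divided by its column sum, and recall is the diagonal entry divided by its row sum. A pure-Python check using the printed matrix:

```python
cm = [
    [990, 0, 0, 0, 5, 4, 0, 0, 1, 0],
    [0, 988, 0, 0, 2, 4, 0, 6, 0, 0],
    [0, 0, 996, 1, 2, 1, 0, 0, 0, 0],
    [3, 18, 2, 850, 59, 26, 18, 23, 0, 1],
    [1, 4, 0, 5, 954, 18, 10, 8, 0, 0],
    [0, 0, 0, 1, 7, 990, 1, 0, 1, 0],
    [1, 0, 0, 2, 11, 7, 975, 0, 0, 4],
    [0, 10, 0, 2, 32, 15, 0, 940, 1, 0],
    [4, 5, 2, 2, 11, 12, 18, 1, 944, 1],
    [2, 1, 0, 6, 22, 8, 7, 2, 1, 951],
]
categories = ['体育', '财经', '房产', '家居', '教育', '科技', '时尚', '时政', '游戏', '娱乐']

n = len(cm)
total = sum(sum(row) for row in cm)
accuracy = sum(cm[k][k] for k in range(n)) / total
precision = [cm[k][k] / sum(cm[i][k] for i in range(n)) for k in range(n)]  # column sums
recall = [cm[k][k] / sum(cm[k]) for k in range(n)]                          # row sums
f1 = [2 * p * r / (p + r) for p, r in zip(precision, recall)]

print('accuracy: {:.4f}'.format(accuracy))
for name, p, r, f in zip(categories, precision, recall, f1):
    print('{}  P={:.2f}  R={:.2f}  F1={:.2f}'.format(name, p, r, f))
```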

II. IMDB Dataset

Download: http://ai.stanford.edu/~amaas/data/sentiment/

1. Downloading the Data

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# Load the dataset; a cached copy is used if it has already been downloaded
imdb = keras.datasets.imdb

# num_words=10000 keeps the 10,000 most frequent words in the training data.
# Rare words are discarded to keep the data size manageable.
(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)
```

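2. Exploring the Data

(1) First look

Each sample is an array of integers representing the words of a movie review. Each label is an integer, 0 or 1, where 0 means a negative review and 1 a positive review.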

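```python
# Number of samples
print("Training entries: {}, labels: {}".format(len(train_data), len(train_labels)))

# The first sample: the review text has been converted to integers,
# each integer standing for a specific word in a dictionary
print(train_data[0])

# Number of words in the first and second reviews.
# Reviews differ in length, but the inputs to a neural network must all have
# the same length, so this has to be handled before training.
print(len(train_data[0]), len(train_data[1]))
```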


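(2) Converting the integers back to words

imdb.get_word_index() returns a dictionary that maps words to integer indices; inverting it gives the integer-to-word mapping needed to decode a review.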

```python
# A dictionary mapping words to integer indices
word_index = imdb.get_word_index()

# The first indices are reserved, so shift every word index by 3
word_index = {k: (v + 3) for k, v in word_index.items()}
word_index["<PAD>"] = 0
word_index["<START>"] = 1
word_index["<UNK>"] = 2  # unknown
word_index["<UNUSED>"] = 3

reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])


def decode_review(text):
    return ' '.join([reverse_word_index.get(i, '?') for i in text])


print(decode_review(train_data[0]))
```
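Why the +3 shift: imdb.load_data(), with its defaults start_char=1, oov_char=2 and index_from=3, stores each word as its word_index rank plus 3, reserving 0 for padding, 1 for the start marker and 2 for out-of-vocabulary words. A self-contained toy version with a made-up three-word dictionary (toy_word_index is hypothetical), so it runs without downloading the dataset:

```python
# Hypothetical toy dictionary in the same {word: rank} form as imdb.get_word_index()
toy_word_index = {'the': 1, 'movie': 2, 'great': 3}

# Shift by 3 and add the reserved tokens, as in the code above
word_index = {k: v + 3 for k, v in toy_word_index.items()}
word_index.update({'<PAD>': 0, '<START>': 1, '<UNK>': 2, '<UNUSED>': 3})
reverse_word_index = {v: k for k, v in word_index.items()}


def decode_review(ids):
    # Unknown ids fall back to '?'
    return ' '.join(reverse_word_index.get(i, '?') for i in ids)


# An encoded review: start marker, "the movie", an unknown word, "great", then padding
encoded = [1, 4, 5, 2, 6, 0, 0]
print(decode_review(encoded))
```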

3. Preparing the Data

```python
# Pad every review to 256 tokens; padding='post' appends <PAD> (0) at the end
train_data = keras.preprocessing.sequence.pad_sequences(train_data, value=word_index["<PAD>"],
                                                        padding='post', maxlen=256)
test_data = keras.preprocessing.sequence.pad_sequences(test_data, value=word_index["<PAD>"],
                                                       padding='post', maxlen=256)
```
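The difference between padding='post' used here and the default padding='pre' used by process_file() in the THUCNews code, sketched in pure Python. The helper pad is hypothetical and mirrors only the padding, truncating and value arguments of pad_sequences. Truncation defaults to 'pre' in both cases, so an over-long review loses its beginning unless truncating='post' is passed:

```python
def pad(seq, maxlen, padding='pre', truncating='pre', value=0):
    """Hypothetical pure-Python mirror of pad_sequences for a single sequence."""
    if len(seq) > maxlen:
        # 'pre' keeps the last maxlen items, 'post' keeps the first maxlen items
        seq = seq[len(seq) - maxlen:] if truncating == 'pre' else seq[:maxlen]
    fill = [value] * (maxlen - len(seq))
    return fill + seq if padding == 'pre' else seq + fill


short, long_ = [1, 14, 22], [1, 14, 22, 16, 43, 530]
print(pad(short, 5, padding='post'))   # IMDB style
print(pad(short, 5))                   # THUCNews style (all defaults)
print(pad(long_, 4, padding='post'))   # truncation still defaults to 'pre'
```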

```python
# Input shape is the vocabulary size used for the movie reviews (10,000 words)
vocab_size = 10000

# keras.Sequential creates a sequential model. From the official docs:
# "The Sequential model is a linear stack of layers."
model = keras.Sequential()
# Embedding maps each word index (out of vocab_size) to a 16-dimensional dense vector.
# Dense word vectors have far fewer dimensions than one-hot vectors and can also
# capture relationships between words.
model.add(keras.layers.Embedding(vocab_size, 16))
# GlobalAveragePooling1D averages over the sequence dimension,
# giving one fixed-length vector per review
model.add(keras.layers.GlobalAveragePooling1D())
# Hidden fully connected layer with ReLU activation
model.add(keras.layers.Dense(16, activation=tf.nn.relu))
# Output layer: the sigmoid yields a probability between 0 and 1
model.add(keras.layers.Dense(1, activation=tf.nn.sigmoid))
# model.summary()

# Training configuration: optimizer, loss function and metrics to monitor
model.compile(optimizer=tf.train.AdamOptimizer(),
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Hold out the first 10,000 training samples as a validation set
x_val = train_data[:10000]
partial_x_train = train_data[10000:]
y_val = train_labels[:10000]
partial_y_train = train_labels[10000:]

# Train the model
history = model.fit(partial_x_train, partial_y_train,
                    epochs=40, batch_size=512,
                    validation_data=(x_val, y_val), verbose=1)
```
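What the four layers compute, shown as a tiny pure-Python forward pass for a single review. The weights are made-up constants, not trained values, and the dimensions are shrunk (2-dimensional embeddings and 2 hidden units instead of 16). The last lines count the real model's trainable parameters, assuming each Dense layer has a bias (the Keras default): Embedding 10000×16, Dense 16→16 and Dense 16→1.

```python
import math

# Hypothetical tiny weights: 4 word ids, 2-d embeddings, 2 hidden units
embedding = [[0.0, 0.0], [0.5, -0.5], [1.0, 0.0], [0.0, 1.0]]
w1, b1 = [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.1]  # Dense(2, relu); w1[i][j]: input i -> unit j
w2, b2 = [1.0, -2.0], 0.0                        # Dense(1, sigmoid)


def forward(ids):
    vectors = [embedding[i] for i in ids]                         # Embedding lookup
    pooled = [sum(col) / len(vectors) for col in zip(*vectors)]   # GlobalAveragePooling1D
    hidden = [max(0.0, sum(pooled[i] * w1[i][j] for i in range(2)) + b1[j])
              for j in range(2)]                                  # Dense + ReLU
    z = sum(h * w for h, w in zip(hidden, w2)) + b2               # Dense(1)
    return 1.0 / (1.0 + math.exp(-z))                             # sigmoid: P(positive)


prob = forward([1, 2, 3])

# Trainable parameters of the real model: embedding + hidden dense + output dense
params = 10000 * 16 + (16 * 16 + 16) + (16 * 1 + 1)
print(prob, params)
```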

```python
import matplotlib.pyplot as plt

# Evaluate the model on the test set; results is [loss, accuracy]
results = model.evaluate(test_data, test_labels)
# print(results)

# history.history holds one list per monitored quantity
history_dict = history.history
# print(history_dict.keys())

# Plot the results (in TF 2.x the keys are 'accuracy' and 'val_accuracy')
acc = history_dict['acc']
val_acc = history_dict['val_acc']
loss = history_dict['loss']
val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# "bo" is for "blue dot"
plt.plot(epochs, loss, 'bo', label='Training loss')
# "b" is for "solid blue line"
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()

plt.clf()  # clear the figure

plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()
```

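III. Evaluation Metrics

1. Basic Concepts

For a binary classification problem, comparing a prediction with the ground truth gives one of four cases.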

| Actual \ Predicted | Positive | Negative |
|---|---|---|
| Positive | TP (True Positive) | FN (False Negative) |
| Negative | FP (False Positive) | TN (True Negative) |

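A mnemonic: the first letter says whether the prediction is correct (T) or wrong (F); the second letter says what was predicted, P for positive and N for negative.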
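Counting the four cells from label lists, as a minimal pure-Python sketch (1 is the positive class, 0 the negative class; the toy labels are made up):

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FN, FP, TN) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fn, fp, tn


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(confusion_counts(y_true, y_pred))
```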

2. Accuracy

Accuracy is the proportion of all predictions that are correct: $Accuracy = \frac{TP + TN}{TP + TN + FP + FN}$.

3. Precision

Precision: of all samples predicted positive, the proportion that are actually positive: $Precision = \frac{TP}{TP + FP}$.

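4. Recall

Recall: of all actually positive samples, the proportion that are predicted positive: $Recall = \frac{TP}{TP + FN}$.

Recall matters, for example, in medical diagnosis, where missing a sick patient (a false negative) is costly.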

5. F1 Score

F1 score: the harmonic mean of precision and recall; the larger, the better.

$$F_1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$$
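The four metrics as a small pure-Python function; the counts in the example (TP=8, FP=2, FN=4, TN=86) are made up. Returning 0.0 when a denominator is zero is an added convenience (a common convention, not part of the definitions in this section):

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    def safe_div(a, b):
        return a / b if b else 0.0  # convention: 0.0 when the denominator is 0

    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)  # harmonic mean
    return accuracy, precision, recall, f1


acc, p, r, f1 = binary_metrics(tp=8, fp=2, fn=4, tn=86)
print('acc={:.3f} precision={:.3f} recall={:.3f} f1={:.3f}'.format(acc, p, r, f1))
```

With these counts accuracy looks high (0.94) because negatives dominate, while recall is only about 0.67, which is why recall and F1 are reported separately.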

6. ROC Curve vs. PR Curve

ROC curves and PR (Precision-Recall) curves are both commonly used to evaluate classifiers on class-imbalanced problems.

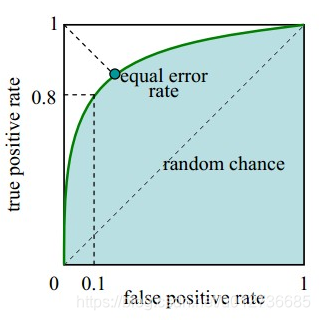

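(1) ROC curve

The ROC curve (receiver operating characteristic curve) combines sensitivity and specificity, treated as continuous variables, into one picture; each point on the curve corresponds to one decision threshold applied to the same classifier's scores.

It expresses the trade-off between the true positive rate (TPR) and the false positive rate (FPR): for each classification threshold, compute TPR and FPR and plot them on the vertical and horizontal axes respectively.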

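The figure shows an example ROC curve.

Horizontal axis: 1 - specificity, the false positive rate (FPR = FP / (FP + TN)), i.e. the proportion of all actual negatives that are predicted positive;

Vertical axis: sensitivity, the true positive rate (TPR = TP / (TP + FN)), i.e. the proportion of all actual positives that are predicted positive.

Ideally TPR is close to 1 and FPR close to 0, i.e. the point (0, 1). The closer the ROC curve gets to (0, 1), and the farther it bends away from the 45-degree diagonal, the better.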

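AUC (Area Under Curve) is defined as the area under the ROC curve. It lies in [0, 1]; a random classifier scores 0.5, so a useful classifier falls in [0.5, 1]. The larger the AUC, the more reliably the classifier ranks positive samples above negative ones, independently of any single threshold.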
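A minimal sketch of how ROC points and AUC are obtained from classifier scores: sweep the threshold from high to low, record (FPR, TPR) after each distinct score (tied scores share one threshold), and integrate with the trapezoidal rule. The scores and labels are made-up toy values; sklearn.metrics.roc_curve and roc_auc_score compute the same quantities in practice. The result is cross-checked against the equivalent probabilistic reading of AUC: the chance that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as one half.

```python
def roc_points(scores, labels):
    """(FPR, TPR) points for thresholds at each distinct score, highest first."""
    pos = sum(labels)
    neg = len(labels) - pos
    pairs = sorted(zip(scores, labels), key=lambda sl: sl[0], reverse=True)
    points, tp, fp, i = [(0.0, 0.0)], 0, 0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Everything scoring >= threshold is predicted positive; ties move together
        while i < len(pairs) and pairs[i][0] == threshold:
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def trapezoid_auc(points):
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def rank_auc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
pts = roc_points(scores, labels)
print(pts)
print(trapezoid_auc(pts), rank_auc(scores, labels))
```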

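(2) PR curve

The PR curve plots precision against recall. Like the ROC curve, it uses TPR (recall), and the area under it can also be used to measure classifier performance. The difference is that the ROC curve uses FPR while the PR curve uses precision, so both PR metrics focus on the positive class. In class-imbalanced problems, where the positive class is usually what matters, the PR curve is widely considered more informative than the ROC curve.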
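The PR curve comes from the same threshold sweep as the ROC curve, but records (recall, precision) instead of (FPR, TPR). A minimal sketch on made-up scores; the summary number here is average precision, AP = Σ (R_k - R_{k-1}) P_k, which is how sklearn.metrics.average_precision_score summarizes the curve (a step-wise sum rather than a trapezoidal area). The helpers pr_points and average_precision are hypothetical names:

```python
def pr_points(scores, labels):
    """(recall, precision) after each distinct threshold, highest score first."""
    pos = sum(labels)
    pairs = sorted(zip(scores, labels), key=lambda sl: sl[0], reverse=True)
    points, tp, fp, i = [], 0, 0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:  # ties share a threshold
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        points.append((tp / pos, tp / (tp + fp)))
    return points


def average_precision(points):
    """AP = sum over thresholds of (R_k - R_{k-1}) * P_k, starting from recall 0."""
    ap, prev_recall = 0.0, 0.0
    for recall, precision in points:
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
pts = pr_points(scores, labels)
print(pts)
print(average_precision(pts))
```

Neither precision nor recall involves TN, which is why the PR curve reacts to errors on a rare positive class even when a large pool of easy negatives keeps FPR, and thus the ROC curve, looking good.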