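To start with: KNN is written as a class containing 7 functions. Below I go through them one by one, explaining what each does and the idea behind its implementation.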

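KNN classifies samples by measuring the distance between their feature values.

The idea is this: if most of the k samples most similar to a given sample (that is, its k nearest neighbors in feature space) belong to one class, then the sample is assigned to that class as well. K is usually an integer no larger than 20. In KNN, the neighbors that get selected are all objects that have already been correctly classified. When deciding the class, the method relies only on the class of the nearest one or few samples.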

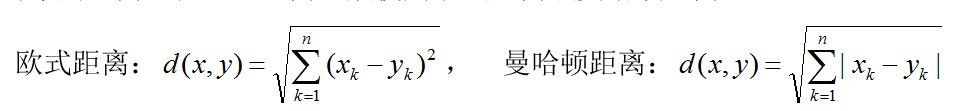

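- In KNN, the distance between objects serves as the measure of dissimilarity between them, which avoids the problem of matching objects against each other. The distance is usually the Euclidean distance or the Manhattan distance.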
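The two distances mentioned above can be sketched as follows. This is only a minimal illustration that assumes numpy; the helper names `euclidean_distance` and `manhattan_distance` are hypothetical and are not part of the KNN class discussed in this post.

```python
import numpy as np


def euclidean_distance(a, b):
    """L2 distance: square root of the summed squared differences."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return np.sqrt(np.sum((a - b) ** 2))


def manhattan_distance(a, b):
    """L1 distance: sum of the absolute differences."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return np.sum(np.abs(a - b))


p = [0.0, 0.0]
q = [3.0, 4.0]
print(euclidean_distance(p, q))  # 5.0
print(manhattan_distance(p, q))  # 7.0
```

The two metrics can rank neighbors differently, so the choice is worth treating as a hyperparameter alongside k.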

    def train(self, X, y):
        """
        Train the classifier. For k-nearest neighbors this is just
        memorizing the training data.

        Inputs:
        - X: A numpy array of shape (num_train, D) containing the training data
          consisting of num_train samples each of dimension D.
        - y: A numpy array of shape (num_train,) containing the training labels,
          where y[i] is the label for X[i].
        """
        self.X_train = X  # note the capital X
        self.y_train = y

- Classification works with a training set and a test set. As the names suggest, the model is trained on the training set and evaluated on the test set.

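- The core of KNN is computing the distance between each test point and each training point, then using those distances to predict the label of each test point. This post uses the Euclidean distance.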

- Computing the distances with two loops:

    def compute_distances_two_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a nested loop over both the training data and the
        test data.

        Inputs:
        - X: A numpy array of shape (num_test, D) containing test data.

        Returns:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          is the Euclidean distance between the ith test point and the jth training
          point.
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            for j in range(num_train):
                # TODO(Jaden): compute the distance.
                # Compute the l2 distance between the ith test point and the jth
                # training point, and store the result in dists[i, j]. You should
                # not use a loop over dimension.
                diff = X[i] - self.X_train[j]
                diff_2 = diff ** 2
                d = np.sqrt(np.sum(diff_2))
                dists[i, j] = d
        return dists

Anyone who has studied linear algebra will recognize this. In n-dimensional space, take:

Point A: (x1, x2, x3, ..., xn)

Point B: (y1, y2, y3, ..., yn)

Then the Euclidean distance between A and B is sqrt((x1-y1)^2 + (x2-y2)^2 + (x3-y3)^2 + ... + (xn-yn)^2).
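To make the formula concrete, here is a small sketch that applies it to a hand-checkable case. The standalone function `pairwise_two_loops` is a hypothetical stand-in for the class method (it takes the training data as an argument instead of reading `self.X_train`), and the sample points are made up for illustration.

```python
import numpy as np


def pairwise_two_loops(X_test, X_train):
    """Return a (num_test, num_train) matrix of Euclidean distances."""
    num_test, num_train = X_test.shape[0], X_train.shape[0]
    dists = np.zeros((num_test, num_train))
    for i in range(num_test):
        for j in range(num_train):
            # sqrt((x1-y1)^2 + ... + (xn-yn)^2) for test point i, training point j
            dists[i, j] = np.sqrt(np.sum((X_test[i] - X_train[j]) ** 2))
    return dists


X_train = np.array([[0.0, 0.0], [3.0, 4.0]])
X_test = np.array([[0.0, 0.0], [3.0, 0.0]])

print(pairwise_two_loops(X_test, X_train).tolist())
# [[0.0, 5.0], [3.0, 4.0]]
```

Row i of the result holds the distances from test point i to every training point, which is exactly the layout the later functions rely on.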

- Computing the distances with one loop:

    def compute_distances_one_loop(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a single loop over the test data.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            # TODO:
            # Compute the l2 distance between the ith test point and all training
            # points, and store the result in dists[i, :].
            diff = self.X_train - X[i]
            dist = np.sum(diff ** 2, axis=1)  # sum over each row
            dists[i, :] = np.sqrt(dist)
        return dists

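It took me quite a detour to understand this part, because my programming fundamentals are weak and I did not know numpy well enough.

At first glance `diff = self.X_train - X[i]` looks wrong: self.X_train has shape num_train x D, while X[i] is a single row, so it seems the two cannot be subtracted.

But trying it out settles the question: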

    >>> import numpy as np
    >>> a = np.arange(12).reshape((3,4))
    >>> a
    array([[ 0,  1,  2,  3],
           [ 4,  5,  6,  7],
           [ 8,  9, 10, 11]])
    >>> b = a  # b has the same number of rows as a
    >>> b - a[2]
    array([[-8, -8, -8, -8],
           [-4, -4, -4, -4],
           [ 0,  0,  0,  0]])
    >>> c = np.arange(24).reshape((6,4))  # c has a different number of rows than a
    >>> c
    array([[ 0,  1,  2,  3],
           [ 4,  5,  6,  7],
           [ 8,  9, 10, 11],
           [12, 13, 14, 15],
           [16, 17, 18, 19],
           [20, 21, 22, 23]])
    >>> c - a[2]
    array([[-8, -8, -8, -8],
           [-4, -4, -4, -4],
           [ 0,  0,  0,  0],
           [ 4,  4,  4,  4],
           [ 8,  8,  8,  8],
           [12, 12, 12, 12]])

Both cases show the same thing: as long as the number of columns matches, adding or subtracting a vector to or from a matrix applies the operation to every row of the matrix. This is numpy's broadcasting.

With that, the one-loop function is easy to understand.
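The same check can be run on shapes that mirror the one-loop function. The sketch below assumes randomly generated data; the array sizes and variable names are chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X_train = rng.normal(size=(5, 3))  # (num_train, D)
X_test = rng.normal(size=(2, 3))   # (num_test, D)

# (5, 3) - (3,) broadcasts the vector across every row, giving (5, 3).
diff = X_train - X_test[0]
print(diff.shape)  # (5, 3)

# One row of the distance matrix, computed without an inner loop.
row = np.sqrt(np.sum(diff ** 2, axis=1))

# The same row computed one training point at a time.
row_loop = np.array([np.sqrt(np.sum((X_test[0] - x) ** 2)) for x in X_train])
print(np.allclose(row, row_loop))  # True

# When the number of columns differs, numpy refuses to broadcast.
try:
    X_train - np.zeros(4)
except ValueError as err:
    print("shape mismatch:", type(err).__name__)
```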

- Computing the distances with no loops:

    def compute_distances_no_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using no explicit loops.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        # TODO:
        # Compute the l2 distance between all test points and all training
        # points without using any explicit loops, and store the result in
        # dists.
        #
        # You should implement this function using only basic array operations;
        # in particular you should not use functions from scipy.
        #
        # HINT: Try to formulate the l2 distance using matrix multiplication
        # and two broadcast sums.
        train_sq = np.sum(self.X_train ** 2, axis=1, keepdims=True)
        train_sq = np.broadcast_to(train_sq, shape=(num_train, num_test)).T
        test_sq = np.sum(X ** 2, axis=1, keepdims=True)
        test_sq = np.broadcast_to(test_sq, shape=(num_test, num_train))
        cross = np.dot(X, self.X_train.T)
        dists = np.sqrt(train_sq + test_sq - 2 * cross)
        return dists

The keepdims parameter of numpy's sum function keeps the number of dimensions unchanged: the axis being summed over stays in the result with length 1 instead of disappearing. For more detail, see the documentation of numpy.sum.
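A quick way to see what keepdims does is to compare the shapes with and without it. This is a small sketch on a made-up 3 x 4 array:

```python
import numpy as np

a = np.arange(12).reshape((3, 4))

row_sums = np.sum(a, axis=1)                      # axis 1 is dropped
row_sums_kept = np.sum(a, axis=1, keepdims=True)  # axis 1 kept with length 1

print(row_sums.shape)       # (3,)
print(row_sums_kept.shape)  # (3, 1)
print(row_sums.tolist())    # [6, 22, 38]
```

Keeping the result as a column of shape (3, 1) is what lets it be broadcast against a matrix with 3 rows later on.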

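numpy's broadcast_to function, the one called in the code above, broadcasts an array to a new shape. For details, see the documentation of numpy.broadcast_to.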
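The no-loop version rests on the identity ||x - y||^2 = ||x||^2 + ||y||^2 - 2 x·y, applied to all pairs at once. The sketch below, on made-up random data, shows what `np.broadcast_to` produces and checks the identity against a direct computation. It uses implicit broadcasting instead of explicit `np.broadcast_to` calls, and the `np.maximum` clamp is my own assumption, not part of the original code: it guards against tiny negative values that floating-point rounding can produce before the square root.

```python
import numpy as np

rng = np.random.default_rng(0)
X_train = rng.normal(size=(6, 4))  # (num_train, D)
X_test = rng.normal(size=(3, 4))   # (num_test, D)

# np.broadcast_to expands a (3, 1) column into a read-only (3, 6) view.
test_sq = np.sum(X_test ** 2, axis=1, keepdims=True)  # (3, 1)
expanded = np.broadcast_to(test_sq, (3, 6))
print(expanded.shape)  # (3, 6)

# Implicit broadcasting: (3, 1) + (6,) - (3, 6) -> (3, 6).
train_sq = np.sum(X_train ** 2, axis=1)  # (6,)
cross = X_test @ X_train.T               # (3, 6)
sq_dists = test_sq + train_sq - 2 * cross
# Assumption (not in the original code): clamp rounding-induced negatives.
dists = np.sqrt(np.maximum(sq_dists, 0.0))

# Reference: all pairwise differences via a (3, 1, 4) - (1, 6, 4) broadcast.
ref = np.sqrt(np.sum((X_test[:, None, :] - X_train[None, :, :]) ** 2, axis=2))
print(np.allclose(dists, ref))  # True
```

The reference computation builds a (num_test, num_train, D) intermediate array, which is why the matrix-multiplication form is preferred for large datasets.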

- The label prediction function:

    def predict_labels(self, dists, k=1):
        """
        Given a matrix of distances between test points and training points,
        predict a label for each test point.

        Inputs:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          gives the distance between the ith test point and the jth training point.

        Returns:
        - y: A numpy array of shape (num_test,) containing predicted labels for the
          test data, where y[i] is the predicted label for the test point X[i].
        """
        num_test = dists.shape[0]
        y_pred = np.zeros(num_test)
        for i in range(num_test):
            # A list of length k storing the labels of the k nearest neighbors to
            # the ith test point.
            closest_y = []
            # TODO(Jaden): Finish the predict_label function
            # Use the distance matrix to find the k nearest neighbors of the ith
            # testing point, and use self.y_train to find the labels of these
            # neighbors. Store these labels in closest_y.
            # Hint: Look up the function numpy.argsort.
            test_dist = dists[i]
            sort_dist = np.argsort(test_dist)
            valid_idx = sort_dist[:k]
            closest_y = self.y_train[valid_idx]
            # TODO:
            # Now that you have found the labels of the k nearest neighbors, you
            # need to find the most common label in the list closest_y of labels.
            # Store this label in y_pred[i]. Break ties by choosing the smaller
            # label.
            # np.unique keeps the distinct elements; with return_counts=True the
            # second return value is how many times each one occurs.
            y_unique, y_count = np.unique(closest_y, return_counts=True)
            common_idx = np.argmax(y_count)  # position of the most frequent label
            y_pred[i] = y_unique[common_idx]  # take the most frequent label as the prediction
        return y_pred

This one needs little explanation; it is easy to follow.
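The voting step can be tried on a single row of distances. In this sketch the helper `vote` is a hypothetical standalone version of the loop body, and the labels and distances are made up. It also shows why ties go to the smaller label: `np.unique` returns the labels in ascending order and `np.argmax` returns the first maximum.

```python
import numpy as np


def vote(dist_row, y_train, k):
    """Return the majority label among the k nearest training points."""
    nearest = np.argsort(dist_row)[:k]  # indices of the k smallest distances
    labels, counts = np.unique(y_train[nearest], return_counts=True)
    # labels are sorted ascending and argmax picks the first maximum,
    # so a tie is resolved in favor of the smaller label.
    return labels[np.argmax(counts)]


y_train = np.array([2, 1, 0, 1, 0])
dist_row = np.array([0.5, 0.1, 0.2, 0.9, 0.3])

print(vote(dist_row, y_train, k=1))  # 1  (nearest neighbor has label 1)
print(vote(dist_row, y_train, k=2))  # 0  (labels 1 and 0 tie, smaller wins)
print(vote(dist_row, y_train, k=3))  # 0  (labels 1, 0, 0)
```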