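I. Introduction to Data Skew

1>.What is data skew

A: When a disproportionate amount of data is sent to a single node (in MapReduce, usually a single reduce task), that node is overloaded while the others sit mostly idle. This imbalance is called data skew.

2>.Two ways to handle data skew

First: redesign the key (for example, append a random suffix to each key);

Second: use a random partitioner.

II. Simulating Data Skew

Data (contents of screw.txt):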

3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9 1 3 5 7 9
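To see why this input skews, here is a minimal pure-Java sketch of default hash partitioning. It assumes (from Hadoop's source as I understand it, not from this article) that `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks` and that `Text.hashCode()` hashes the UTF-8 bytes as `hash = 31 * hash + b`, starting from 1. The class `HashPartitionSketch` is hypothetical and does not depend on Hadoop.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;

public class HashPartitionSketch {

    // Assumed to mirror Text.hashCode(): hash = 31 * hash + byte, starting at 1.
    static int textHash(String word) {
        int hash = 1;
        for (byte b : word.getBytes(StandardCharsets.UTF_8)) {
            hash = 31 * hash + b;
        }
        return hash;
    }

    // Default hash partitioning: non-negative hash modulo the number of reduce tasks.
    static int partition(String word, int numReduceTasks) {
        return (textHash(word) & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Counts how many (word, 1) records each reduce task would receive.
    static Map<Integer, Integer> recordsPerPartition(String line, int numReduceTasks) {
        Map<Integer, Integer> counts = new TreeMap<>();
        for (int p = 0; p < numReduceTasks; p++) {
            counts.put(p, 0);
        }
        for (String word : line.split(" ")) {
            counts.merge(partition(word, numReduceTasks), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // '1','3','5','7','9' have odd byte values, so 31 + b is even: every key hashes to partition 0.
        System.out.println(recordsPerPartition("3 5 7 9 1 3 5 7 9 1", 2)); // {0=10, 1=0}
    }
}
```

Under these assumptions, with two reduce tasks all five distinct keys map to the same reducer, so one task receives every record and the other receives none.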

1>.App (driver) code

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class ScrewApp {
    public static void main(String[] args) throws Exception {
        // Instantiate a Configuration; it automatically loads fs.defaultFS from core-site.xml
        // on the classpath (putting the file in the project's resources directory is enough).
        Configuration conf = new Configuration();
        // Run against the local file system: this overrides the fs.defaultFS value read from core-site.xml.
        conf.set("fs.defaultFS", "file:///");
        // Obtain the FileSystem for fs.defaultFS (the local file system here).
        FileSystem fs = FileSystem.get(conf);
        // Create the job; don't forget to pass conf in!
        Job job = Job.getInstance(conf);
        // Give the job a name
        job.setJobName("WordCount");
        // The class that contains main(), i.e. the current class
        job.setJarByClass(ScrewApp.class);
        // Our custom mapper
        job.setMapperClass(ScrewMapper.class);
        // Our custom reducer
        job.setReducerClass(ScrewReduce.class);
        // Output key type
        job.setOutputKeyClass(Text.class);
        // Output value type
        job.setOutputValueClass(IntWritable.class);
        // Delete the output directory if it exists; otherwise the job refuses to start
        Path localPath = new Path("D:\\10.Java\\IDE\\gpj\\MyHadoop\\MapReduce\\out");
        if (fs.exists(localPath)) {
            fs.delete(localPath, true);
        }
        // Input path: takes the job and the input path
        FileInputFormat.addInputPath(job, new Path("D:\\10.Java\\IDE\\gpj\\MyHadoop\\MapReduce\\screw.txt"));
        // Output path: takes the job and the output path
        FileOutputFormat.setOutputPath(job, localPath);
        // Use 2 reduce tasks
        job.setNumReduceTasks(2);
        // Wait for the job to finish; true prints progress to the console
        job.waitForCompletion(true);
    }
}
```

2>.Reducer code

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class ScrewReduce extends Reducer<Text, IntWritable, Text, IntWritable> {
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // Sum all counts received for this key
        int count = 0;
        for (IntWritable value : values) {
            count += value.get();
        }
        context.write(key, new IntWritable(count));
    }
}
```

3>.Mapper code

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class ScrewMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();

        // Split the line on spaces
        String[] arr = line.split(" ");

        // Emit (word, 1) for every word
        for (String word : arr) {
            context.write(new Text(word), new IntWritable(1));
        }
    }
}
```

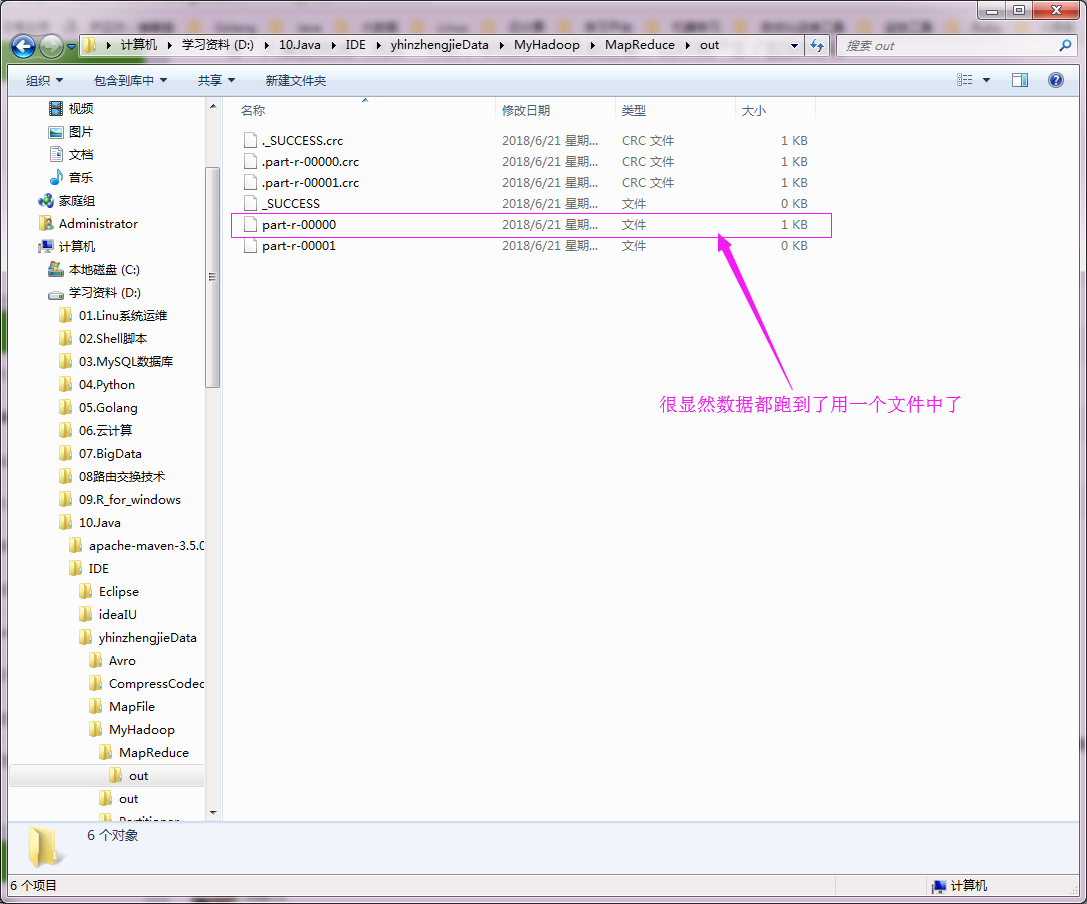

Running the code above produces the following output:

III. Fixing Data Skew by Redesigning the Key

1>.The code

First-stage mapper: appends a random suffix to every key so that one hot key becomes several distinct keys.

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;
import java.util.Random;

public class ScrewMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Number of reduce tasks configured for the job
    int reduces;

    // Random number generator used to pick the suffix
    Random r;

    /**
     * setup() runs once per map task, before any call to map(); use it to initialize fields.
     */
    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        // context.getNumReduceTasks() returns the number of reduce tasks set by the user
        reduces = context.getNumReduceTasks();
        // Create the random number generator
        r = new Random();
    }

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        String[] arr = line.split(" ");
        for (String word : arr) {
            // Pick a random int in [0, reduces)
            int randVal = r.nextInt(reduces);
            // Build the new key, e.g. "3_1"
            String newWord = word + "_" + randVal;
            // Send the new key to the reducers with a count of 1
            context.write(new Text(newWord), new IntWritable(1));
        }
    }
}
```
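A pure-Java sketch of what this salting does to the reducer load. It assumes the same formula as Hadoop's default `HashPartitioner` and a `Text.hashCode()` that hashes bytes as `31 * hash + b` (both assumptions, not taken from the article). `SaltingSketch` and its methods are hypothetical; the seed only makes runs repeatable.

```java
import java.nio.charset.StandardCharsets;
import java.util.Random;

public class SaltingSketch {

    // Assumed to mirror Text.hashCode(): hash = 31 * hash + byte, starting at 1.
    static int textHash(String s) {
        int hash = 1;
        for (byte b : s.getBytes(StandardCharsets.UTF_8)) {
            hash = 31 * hash + b;
        }
        return hash;
    }

    // Default hash partitioning formula.
    static int partition(String key, int numReduceTasks) {
        return (textHash(key) & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Appends a random suffix in [0, reduces), like the mapper above: "3" -> "3_0" or "3_1".
    static String salt(String word, int reduces, Random r) {
        return word + "_" + r.nextInt(reduces);
    }

    // Salts `records` copies of one hot key and counts how many land in each partition.
    static int[] recordsPerPartition(String hotKey, int records, int reduces, long seed) {
        Random r = new Random(seed);
        int[] counts = new int[reduces];
        for (int i = 0; i < records; i++) {
            counts[partition(salt(hotKey, reduces, r), reduces)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] counts = recordsPerPartition("3", 1000, 2, 42L);
        System.out.println("partition 0: " + counts[0] + ", partition 1: " + counts[1]);
    }
}
```

Salting turns one hot key into up to `reduces` distinct keys, so its records can spread over several reducers. The price is a second pass that removes the suffix and adds the partial counts back together.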

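Second-stage mapper: reads the first job's output, strips the suffix, and re-emits the original key with its partial count.

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class ScrewMapper2 extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Each input line comes from the first job's output, e.g. "1_1\t677" (salted key, tab, partial count)
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        // TextOutputFormat separates key and value with a tab by default
        String[] arr = line.split("\t");

        // New key: drop the "_<random>" suffix; lastIndexOf keeps words that contain '_' intact
        String saltedKey = arr[0];
        String newKey = saltedKey.substring(0, saltedKey.lastIndexOf('_'));

        // New value: the partial count computed by the first job
        int newVal = Integer.parseInt(arr[1]);

        context.write(new Text(newKey), new IntWritable(newVal));
    }
}
```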
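The second job's work can be sketched without Hadoop: strip the salt suffix and sum the partial counts per original key. This hypothetical `UnsaltMergeSketch` assumes the first job wrote `key_salt<TAB>count` lines (TextOutputFormat's default tab separator); the lines in `main` are made-up values for illustration, not results from the article's run.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class UnsaltMergeSketch {

    // Removes the "_<salt>" suffix; lastIndexOf keeps words that themselves contain '_' intact.
    static String stripSalt(String saltedKey) {
        int idx = saltedKey.lastIndexOf('_');
        return idx < 0 ? saltedKey : saltedKey.substring(0, idx);
    }

    // Parses "key_salt<TAB>count" lines and sums the partial counts per original key.
    static Map<String, Integer> merge(List<String> lines) {
        Map<String, Integer> totals = new TreeMap<>();
        for (String line : lines) {
            String[] parts = line.split("\t");
            totals.merge(stripSalt(parts[0]), Integer.parseInt(parts[1].trim()), Integer::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        // Hypothetical first-job output lines
        List<String> firstJobOutput = List.of("1_0\t3", "3_1\t4", "1_1\t2", "3_0\t5");
        System.out.println(merge(firstJobOutput)); // {1=5, 3=9}
    }
}
```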

Reducer (used by both jobs): sums the counts for each key.

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class ScrewReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int count = 0;
        for (IntWritable value : values) {
            count += value.get();
        }
        context.write(key, new IntWritable(count));
    }
}
```

Driver: runs the first job with 2 reducers, then feeds its output into a second job with a single reducer.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class ScrewApp {
    public static void main(String[] args) throws Exception {
        // Instantiate a Configuration; it automatically loads fs.defaultFS from core-site.xml
        // on the classpath (putting the file in the project's resources directory is enough).
        Configuration conf = new Configuration();
        // Run against the local file system: this overrides the fs.defaultFS value read from core-site.xml.
        conf.set("fs.defaultFS", "file:///");
        // Obtain the FileSystem for fs.defaultFS (the local file system here).
        FileSystem fs = FileSystem.get(conf);
        // Create the job; don't forget to pass conf in!
        Job job = Job.getInstance(conf);
        // Give the job a name
        job.setJobName("WordCount");
        // The class that contains main(), i.e. the current class
        job.setJarByClass(ScrewApp.class);
        // Our custom (salting) mapper
        job.setMapperClass(ScrewMapper.class);
        // Our custom reducer
        job.setReducerClass(ScrewReducer.class);
        // Output key type
        job.setOutputKeyClass(Text.class);
        // Output value type
        job.setOutputValueClass(IntWritable.class);
        Path localPath = new Path("D:\\10.Java\\IDE\\MyHadoop\\MapReduce\\out");
        if (fs.exists(localPath)) {
            fs.delete(localPath, true);
        }
        // Input path: takes the job and the input path
        FileInputFormat.addInputPath(job, new Path("D:\\10.Java\\IDE\\MyHadoop\\MapReduce\\screw.txt"));
        // Output path: takes the job and the output path
        FileOutputFormat.setOutputPath(job, localPath);
        // Use 2 reduce tasks
        job.setNumReduceTasks(2);
        // Wait for the first job (true prints progress); start the second job only if it succeeded
        if (job.waitForCompletion(true)) {
            // After the first MapReduce job finishes, launch a second one with similar settings
            Job job2 = Job.getInstance(conf);
            job2.setJobName("Wordcount2");
            job2.setJarByClass(ScrewApp.class);
            job2.setMapperClass(ScrewMapper2.class);
            job2.setReducerClass(ScrewReducer.class);
            job2.setOutputKeyClass(Text.class);
            job2.setOutputValueClass(IntWritable.class);
            Path p2 = new Path("D:\\10.Java\\IDE\\MyHadoop\\MapReduce\\out2");
            if (fs.exists(p2)) {
                fs.delete(p2, true);
            }
            // The first job's output directory is the second job's input
            FileInputFormat.addInputPath(job2, localPath);
            FileOutputFormat.setOutputPath(job2, p2);
            // A single reducer merges the output of the first job's 2 reducers
            job2.setNumReduceTasks(1);
            job2.waitForCompletion(true);
        }
    }
}
```

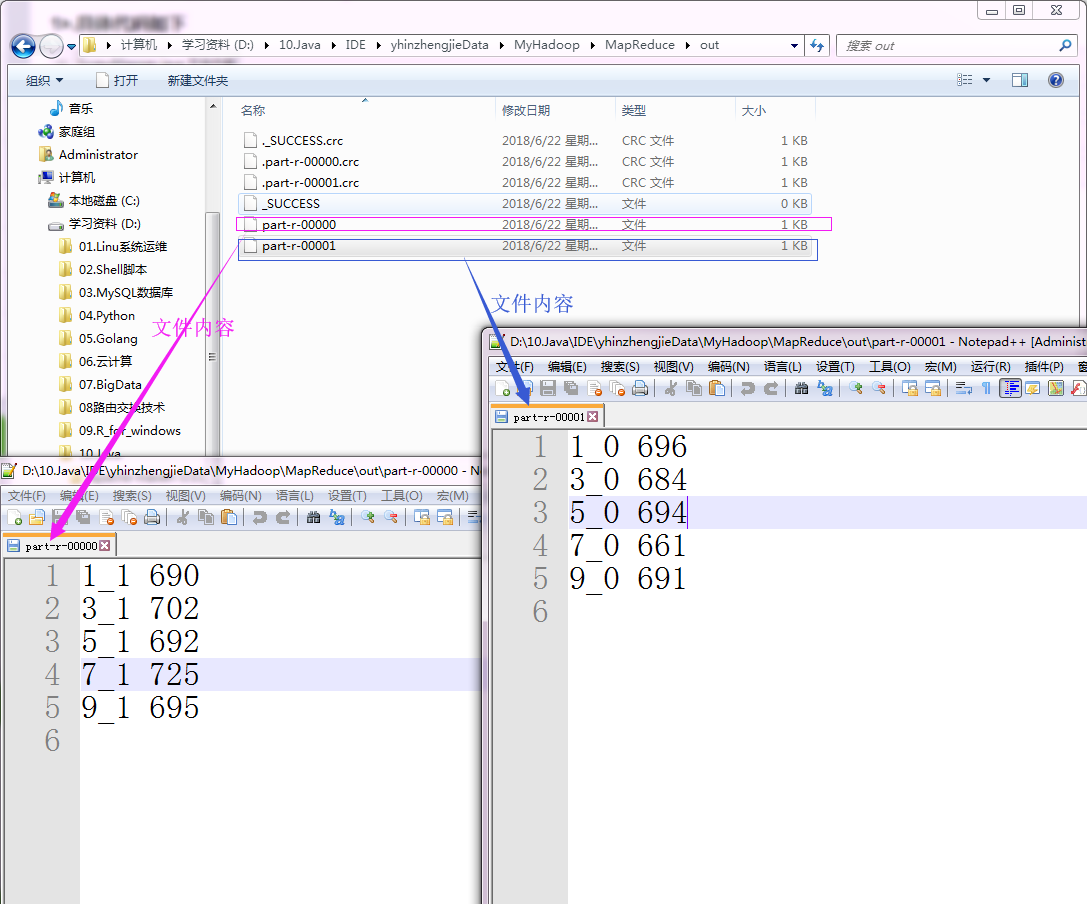

2>.Checking the results

Contents of the directory “D:\\10.Java\\IDE\\MyHadoop\\MapReduce\\out”:

Contents of the directory “D:\\10.Java\\IDE\\MyHadoop\\MapReduce\\out2”:

IV. Fixing Data Skew with a Random Partitioner

1>.The code

Mapper: emits (word, 1) for every word, exactly like the original word count.

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class Screw2Mapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        String[] arr = line.split(" ");
        for (String word : arr) {
            context.write(new Text(word), new IntWritable(1));
        }
    }
}
```

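Partitioner: sends each record to a random reducer.

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

import java.util.Random;

public class Screw2Partition extends Partitioner<Text, IntWritable> {
    // Create the generator once instead of on every call
    private final Random r = new Random();

    @Override
    public int getPartition(Text text, IntWritable intWritable, int numPartitions) {
        // Return a random partition ID in [0, numPartitions), ignoring the key.
        // The same key can therefore reach several reducers, each emitting a partial count.
        return r.nextInt(numPartitions);
    }
}
```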
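A random partitioner balances the records, but it gives up the MapReduce guarantee that all values for a key reach the same reducer. The hypothetical pure-Java `RandomPartitionSketch` simulates this: with two partitions, key `3` ends up in both, each holding a partial count, so the two output files have to be aggregated once more (for example by a second job with one reducer, as in section III) to get the true totals. The seed and record count are arbitrary choices for illustration.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.Random;
import java.util.TreeMap;

public class RandomPartitionSketch {

    // Sends each record to a random partition, ignoring the key, and returns
    // the per-partition counts each reducer would emit.
    static Map<Integer, Map<String, Integer>> partialCounts(String[] words, int numPartitions, long seed) {
        Random r = new Random(seed);
        Map<Integer, Map<String, Integer>> perPartition = new TreeMap<>();
        for (String word : words) {
            int p = r.nextInt(numPartitions);
            perPartition.computeIfAbsent(p, k -> new TreeMap<>()).merge(word, 1, Integer::sum);
        }
        return perPartition;
    }

    // Second aggregation step: sums each key's partial counts across all partitions.
    static Map<String, Integer> combine(Map<Integer, Map<String, Integer>> perPartition) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map<String, Integer> partial : perPartition.values()) {
            partial.forEach((word, count) -> totals.merge(word, count, Integer::sum));
        }
        return totals;
    }

    public static void main(String[] args) {
        String[] words = new String[1000];
        Arrays.fill(words, "3");
        Map<Integer, Map<String, Integer>> partial = partialCounts(words, 2, 42L);
        // Key "3" appears in both partitions, each with only part of the total
        System.out.println(partial);
        System.out.println(combine(partial)); // {3=1000}
    }
}
```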

Reducer: sums the counts it receives for each key.

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class Screw2Reducer extends Reducer<Text, IntWritable, Text, IntWritable> {
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }
        context.write(key, new IntWritable(sum));
    }
}
```

Driver:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Screw2App {
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "file:///");
        FileSystem fs = FileSystem.get(conf);
        Job job = Job.getInstance(conf);
        job.setJobName("Wordcount");
        job.setJarByClass(Screw2App.class);
        job.setMapperClass(Screw2Mapper.class);
        job.setReducerClass(Screw2Reducer.class);
        // Use the random partitioner instead of the default HashPartitioner
        job.setPartitionerClass(Screw2Partition.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        Path p = new Path("D:\\10.Java\\IDE\\yhinzhengjieData\\MyHadoop\\MapReduce\\out");
        if (fs.exists(p)) {
            fs.delete(p, true);
        }
        FileInputFormat.addInputPath(job, new Path("D:\\10.Java\\IDE\\yhinzhengjieData\\MyHadoop\\MapReduce\\screw.txt"));
        FileOutputFormat.setOutputPath(job, p);
        job.setNumReduceTasks(2);
        job.waitForCompletion(true);
    }
}
```

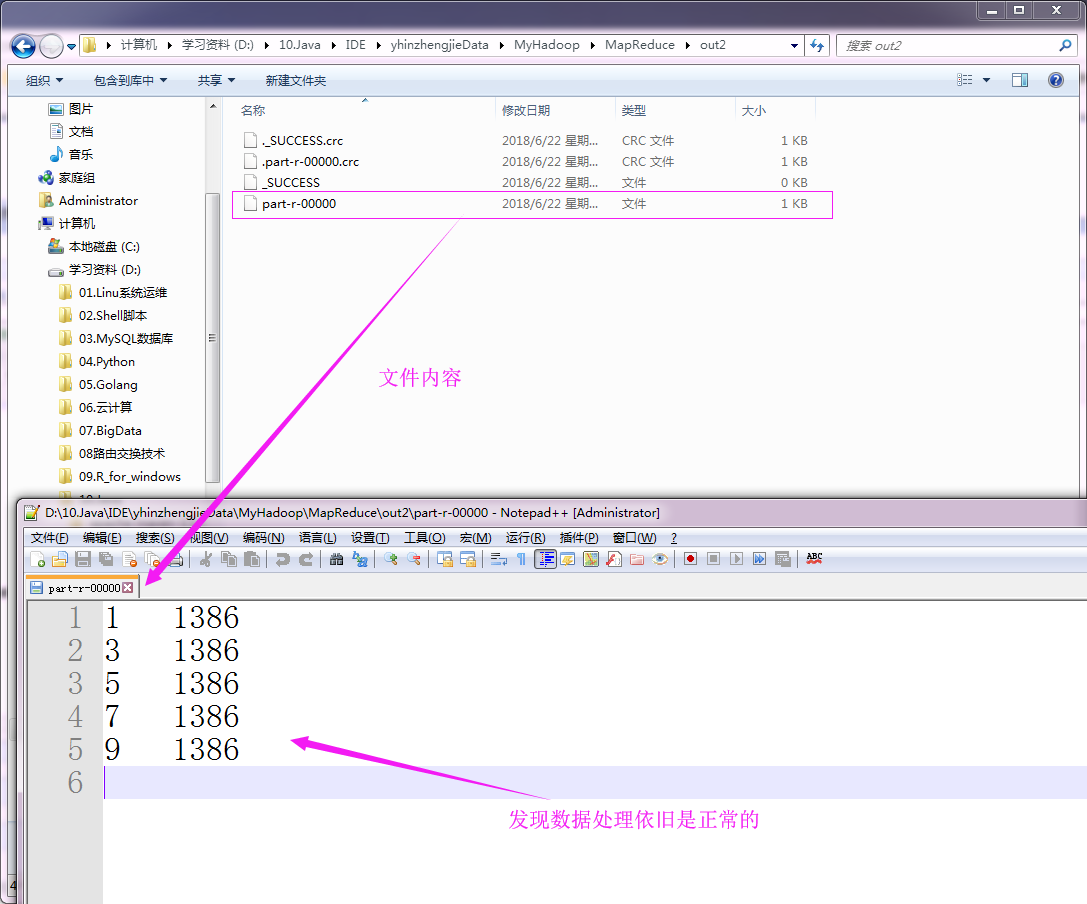

2>.Checking the results

Contents of the directory “D:\\10.Java\\IDE\\MyHadoop\\MapReduce\\out”: