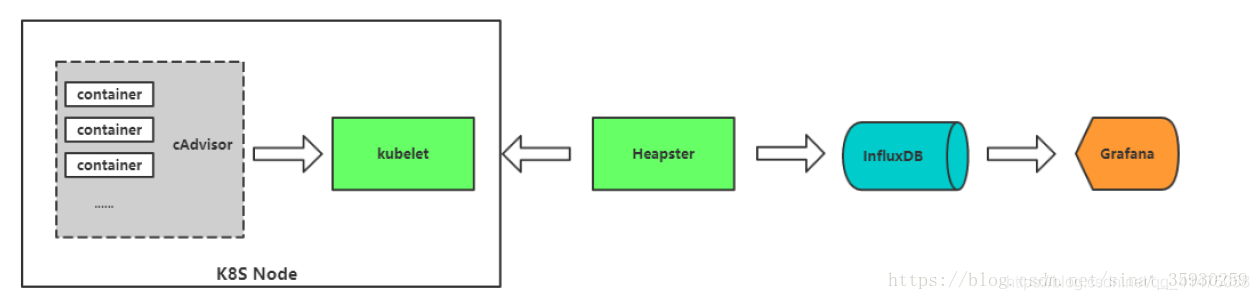

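I. Architecture Diagram

II. Component Roles

1. cAdvisor: container metrics collection.

cAdvisor collects information about the current node and its containers, chiefly network and disk I/O, filesystem size, and memory usage. However, cAdvisor only collects real-time data and does not persist anything.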
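"Real-time only, no persistence" can be pictured with a toy model: a collector that keeps only the newest few samples in memory. This is not cAdvisor's code. The `Sample` fields and the window size are hypothetical and only illustrate why a separate database (InfluxDB below) is needed for history.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp: float   # seconds since epoch (illustrative)
    memory_bytes: int  # container memory usage at that instant (illustrative)


class RecentStats:
    """Toy model: keep only the newest `max_samples` samples, in memory only.

    Old samples fall off the window, and a restart loses everything,
    which is what "real-time data, no persistence" means in practice.
    """

    def __init__(self, max_samples: int) -> None:
        # A deque with maxlen discards the oldest item when a new one is appended.
        self._window: deque[Sample] = deque(maxlen=max_samples)

    def record(self, sample: Sample) -> None:
        self._window.append(sample)

    def latest(self) -> Sample | None:
        return self._window[-1] if self._window else None

    def history(self) -> list[Sample]:
        return list(self._window)


stats = RecentStats(max_samples=3)
for t in range(5):
    stats.record(Sample(timestamp=float(t), memory_bytes=100 * t))
# Only the three newest samples (t = 2, 3, 4) are still available.
print([s.timestamp for s in stats.history()])  # [2.0, 3.0, 4.0]
```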

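2. Heapster: cluster-wide metrics collection; it aggregates the monitoring data from all nodes.

Heapster first obtains the list of all Nodes in the cluster from the apiserver. It then pulls the useful data from the kubelet on each of those Nodes. The kubelet itself gets its data from cAdvisor, so Heapster effectively collects each Node's cAdvisor data: CPU, memory, network, disk, and so on. Heapster aggregates the cAdvisor data from every Node and pushes everything it collects to its configured storage backend, from which the data can also be visualized.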
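The pull-and-aggregate round described above can be sketched as follows. This is a hypothetical model, not Heapster's implementation:

- `list_nodes`, `fetch_node_stats` and `sink` are placeholder callables. They stand in for the apiserver query, the kubelet request, and the storage backend.
- The second node name and all the numbers are made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class NodeStats:
    node: str
    cpu_millicores: int
    memory_bytes: int


def collect_cluster_stats(
    list_nodes: Callable[[], List[str]],
    fetch_node_stats: Callable[[str], NodeStats],
    sink: Callable[[List[NodeStats], Dict[str, int]], None],
) -> Dict[str, int]:
    """One collection round (hypothetical sketch).

    1. ask the apiserver for the node list          -> list_nodes
    2. pull each node's metrics from its kubelet,
       which reads them from cAdvisor               -> fetch_node_stats
    3. aggregate the per-node values into cluster totals
    4. push raw and aggregated data to the backend  -> sink
    """
    per_node = [fetch_node_stats(name) for name in list_nodes()]
    totals = {
        "cpu_millicores": sum(s.cpu_millicores for s in per_node),
        "memory_bytes": sum(s.memory_bytes for s in per_node),
    }
    sink(per_node, totals)
    return totals


# Stub data; "k8s-node2-103" is a hypothetical second node.
fake_stats = {
    "k8s-node1-102": NodeStats("k8s-node1-102", 250, 512 * 1024**2),
    "k8s-node2-103": NodeStats("k8s-node2-103", 750, 1024 * 1024**2),
}
stored = []
totals = collect_cluster_stats(
    list_nodes=lambda: list(fake_stats),
    fetch_node_stats=lambda name: fake_stats[name],
    sink=lambda nodes, agg: stored.append((nodes, agg)),
)
print(totals)  # {'cpu_millicores': 1000, 'memory_bytes': 1610612736}
```

Passing the three stages in as functions keeps the sketch testable without a cluster. In the real system each one is a network call.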

3. InfluxDB: a time-series database that stores the monitoring data.
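InfluxDB stores each point as a measurement name, a set of indexed tags, one or more fields, and a timestamp. Its plain-text write format is called line protocol.

Below is a minimal encoder sketch. The measurement name `cpu/usage_rate`, the tags `nodename`/`type`, and the timestamp are illustrative assumptions, not values taken from this setup.

```python
from __future__ import annotations


def _escape(text: str) -> str:
    # Tag keys, tag values and field keys must escape commas, equals signs and spaces.
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def _field_value(value: bool | int | float | str) -> str:
    if isinstance(value, bool):          # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"               # integers carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'                # strings are double-quoted


def to_line_protocol(measurement: str, tags: dict[str, str],
                     fields: dict[str, bool | int | float | str],
                     timestamp_ns: int | None = None) -> str:
    # Measurement names only need commas and spaces escaped.
    name = measurement.replace(",", r"\,").replace(" ", r"\ ")
    # InfluxDB recommends sorting tags by key for write performance.
    tag_part = "".join(f",{_escape(k)}={_escape(v)}" for k, v in sorted(tags.items()))
    field_part = ",".join(f"{_escape(k)}={_field_value(v)}" for k, v in fields.items())
    line = f"{name}{tag_part} {field_part}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


line = to_line_protocol("cpu/usage_rate",
                        {"type": "node", "nodename": "k8s-node1-102"},
                        {"value": 250},
                        1541000000000000000)
print(line)
# cpu/usage_rate,nodename=k8s-node1-102,type=node value=250i 1541000000000000000
```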

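4. Grafana: visualization and dashboards.

III. Data Flow

cAdvisor collects the status information of each node. Heapster requests the data collected by cAdvisor from every node via the kubelet and stores it in InfluxDB. Grafana reads the data from InfluxDB and displays it in the web front end.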

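IV. Addendum: this setup did not deploy successfully

1. In older versions, cAdvisor was built into the kubelet by default. The node exposed it on port 4194, through which you could reach its data and web UI. In newer versions, however, the kubelet's built-in cAdvisor no longer opens port 4194.

Checking the kubelet's listening ports on a node confirms that port 4194 is absent: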

```
[root@k8s-node1-102 ~]# netstat -tlunp | grep kubelet
tcp   0   0 127.0.0.1:10248    0.0.0.0:*   LISTEN   16192/kubelet
tcp   0   0 10.0.0.102:10250   0.0.0.0:*   LISTEN   16192/kubelet
tcp   0   0 127.0.0.1:33611    0.0.0.0:*   LISTEN   16192/kubelet
tcp   0   0 10.0.0.102:10255   0.0.0.0:*   LISTEN   16192/kubelet
```
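The same check can be scripted. The sketch below parses `netstat -tlunp` output, using the sample above as input, and reports which TCP ports the kubelet listens on. It assumes the standard column layout (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State, PID/Program name). On a live node you would feed it the real command output instead of the hard-coded sample.

```python
from __future__ import annotations

SAMPLE = """\
tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 16192/kubelet
tcp 0 0 10.0.0.102:10250 0.0.0.0:* LISTEN 16192/kubelet
tcp 0 0 127.0.0.1:33611 0.0.0.0:* LISTEN 16192/kubelet
tcp 0 0 10.0.0.102:10255 0.0.0.0:* LISTEN 16192/kubelet
"""


def kubelet_listen_ports(netstat_output: str) -> dict[int, str]:
    """Map each TCP port the kubelet listens on to its bind address."""
    ports: dict[int, str] = {}
    for line in netstat_output.splitlines():
        fields = line.split()
        # Proto Recv-Q Send-Q Local Foreign State PID/Program
        if len(fields) < 7 or not fields[0].startswith("tcp") or fields[5] != "LISTEN":
            continue
        if not fields[6].endswith("/kubelet"):
            continue
        # rpartition keeps IPv6 addresses such as ":::10250" intact
        address, _, port = fields[3].rpartition(":")
        ports[int(port)] = address
    return ports


ports = kubelet_listen_ports(SAMPLE)
print(sorted(ports))   # [10248, 10250, 10255, 33611]
print(4194 in ports)   # False: the built-in cAdvisor port is not exposed
```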

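2. Before version 1.12.0, the kubelet's cAdvisor port could still be enabled:

(1) Add the following parameter to /etc/kubernetes/kubelet:

```
CADVISOR="--cadvisor-port=4194 --storage-driver-db='cadvisor' --storage-driver-host='localhost:8086'"
```

(2) Edit /etc/systemd/system/kubelet.service and append `$CADVISOR` to the end of the `ExecStart=xxx` line, then restart the kubelet service.

3. From version 1.12.0 on, however, the `--cadvisor-port` parameter is no longer supported, so this monitoring approach probably no longer works. The reasoning is as follows:

- The kubelet's cAdvisor port can no longer be enabled.
- Without it, Heapster cannot collect and aggregate the cAdvisor data from all nodes through the kubelet.
- From what I found, Heapster itself has also been deprecated and is no longer maintained.

So this scheme should be considered obsolete and can no longer be used. My cluster runs Kubernetes 1.12.2, so the deployment did not succeed, but I am recording the steps here anyway.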
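The conclusion above boils down to a version cut-off: `--cadvisor-port` is usable before 1.12.0 and gone from 1.12.0 on. A small sketch of that check follows, with the cut-off taken from the text. It accepts strings in the form `kubelet --version` prints, for example `Kubernetes v1.12.2`. The function names are hypothetical.

```python
from __future__ import annotations

import re

# From the text: --cadvisor-port works before 1.12.0 and is unsupported from 1.12.0 on.
CADVISOR_PORT_REMOVED_IN = (1, 12)


def parse_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from strings like 'v1.12.2' or 'Kubernetes v1.12.2'."""
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    major, minor, patch = (int(g) for g in match.groups())
    return major, minor, patch


def supports_cadvisor_port_flag(kubelet_version: str) -> bool:
    major, minor, _ = parse_version(kubelet_version)
    return (major, minor) < CADVISOR_PORT_REMOVED_IN


print(supports_cadvisor_port_flag("Kubernetes v1.12.2"))  # False (the author's cluster)
print(supports_cadvisor_port_flag("v1.11.5"))             # True
```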

V. Deployment

```
mkdir monitor
cd monitor/
```

1. vim influxdb.yaml

```
[root@k8s-master-101 monitor]# cat influxdb.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: monitoring-influxdb
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: influxdb
    spec:
      containers:
      - name: influxdb
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-influxdb-amd64:v1.1.1
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      volumes:
      - name: influxdb-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-influxdb
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - port: 8086
    targetPort: 8086
  selector:
    k8s-app: influxdb
```

```
kubectl create -f influxdb.yaml
```
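The Service finds the InfluxDB pod purely by labels: its selector `k8s-app: influxdb` must be contained in the pod template's labels. The sketch below models that equality-based matching, with the labels copied from influxdb.yaml as plain dicts (no YAML parsing). It does not model the special case of a Service that has no selector at all.

```python
from __future__ import annotations


def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Copied from influxdb.yaml: Deployment pod template labels and Service selector.
influxdb_pod_labels = {"task": "monitoring", "k8s-app": "influxdb"}
influxdb_service_selector = {"k8s-app": "influxdb"}

print(selector_matches(influxdb_service_selector, influxdb_pod_labels))  # True
print(selector_matches({"k8s-app": "grafana"}, influxdb_pod_labels))    # False
```

A typo in either place (for example `k8s-app: influxDB`) would leave the Service with no endpoints, even though both objects are created without errors.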

2. vim heapster.yaml

```
[root@k8s-master-101 monitor]# cat heapster.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster
subjects:
  - kind: ServiceAccount
    name: heapster
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-amd64:v1.4.2
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:https://kubernetes.default
        - --sink=influxdb:http://monitoring-influxdb:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster
```

```
kubectl create -f heapster.yaml
```
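Heapster's `--source` and `--sink` flags in the manifest above both take the form `<kind>:<url>`. The sink URL uses the short Service name `monitoring-influxdb`, which resolves because Heapster runs in the same `kube-system` namespace. `kubernetes.default` is the `kubernetes` Service in the `default` namespace.

The sketch below splits those flags and builds a Service's fully qualified DNS name. The helper names are hypothetical, and the cluster domain `cluster.local` is an assumption (it is the common default).

```python
from __future__ import annotations

from urllib.parse import urlparse


def parse_heapster_flag(flag: str) -> tuple[str, str]:
    """Split '--sink=influxdb:http://host:8086' into ('influxdb', 'http://host:8086')."""
    _, _, value = flag.partition("=")
    kind, _, url = value.partition(":")  # only the first ':' separates kind from URL
    return kind, url


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """In-cluster DNS name of a Service; cluster_domain is an assumption."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


sink_kind, sink_url = parse_heapster_flag("--sink=influxdb:http://monitoring-influxdb:8086")
source_kind, source_url = parse_heapster_flag("--source=kubernetes:https://kubernetes.default")
sink = urlparse(sink_url)
print(sink_kind, sink.hostname, sink.port)         # influxdb monitoring-influxdb 8086
print(source_kind, urlparse(source_url).hostname)  # kubernetes kubernetes.default
print(service_fqdn(sink.hostname, "kube-system"))  # monitoring-influxdb.kube-system.svc.cluster.local
```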

3. vim grafana.yaml

```
[root@k8s-master-101 monitor]# cat grafana.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-grafana-amd64:v4.4.1
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GRAFANA_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          value: /api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
          # value: /
      volumes:
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
```

```
kubectl create -f grafana.yaml
```
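With `type: NodePort`, Kubernetes assigns the Grafana Service a port from the apiserver's `--service-node-port-range` (30000-32767 by default). By default kube-proxy opens that port on every node. The actual number is only known after creation, for example from `kubectl get svc monitoring-grafana -n kube-system`.

The sketch below builds the resulting URL. The port 31234 is a hypothetical placeholder. The range check assumes the cluster uses the default range.

```python
# Default value of kube-apiserver's --service-node-port-range (30000-32767, inclusive).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def grafana_nodeport_url(node_ip: str, node_port: int) -> str:
    """Build the Grafana URL for a NodePort Service (any node's IP should work)."""
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range")
    return f"http://{node_ip}:{node_port}/"


# 31234 is a hypothetical nodePort; read the real one from `kubectl get svc`.
print(grafana_nodeport_url("10.0.0.102", 31234))  # http://10.0.0.102:31234/
```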

六、访问测试

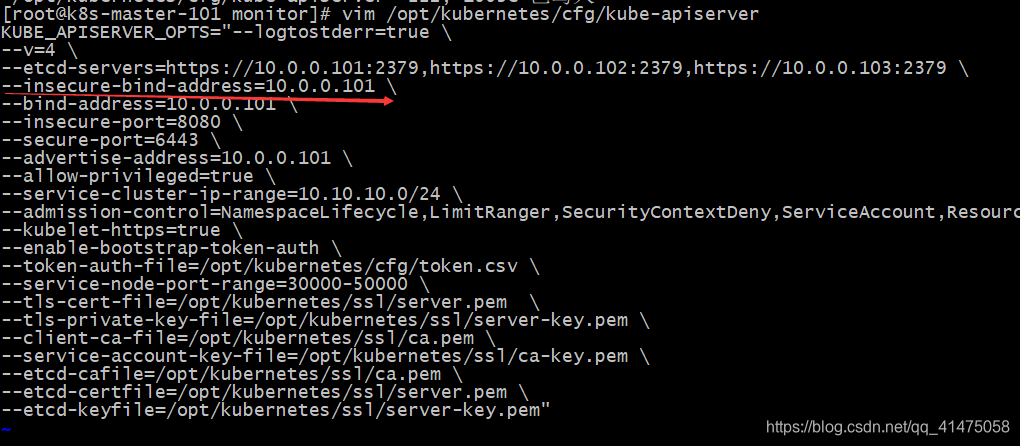

想要访问grafana有两种方式:第一种是通过apiserver的非安全端口进行访问,第二种是通过proxy代理的端口访问。

1、通过apiserver的非安全端口访问

apiserver的非安全端口就是在配置文件中定义的insecure。把–insecure-bind-address从127.0.0.1改为master地址10.0.0.101

2、重启apiserver

systemctl restart kube-apiserver.service

3、通过浏览器访问

http://10.0.0.101:8080/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
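Steps 1 and 3 above can be sketched in code: rewrite the bind address in the apiserver config text, then build the proxy URL in the same form as above. Port 8080 is taken from that URL.

The config line `KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"` is a hypothetical example. The real file and variable name depend on how the apiserver was installed.

```python
import re


def set_insecure_bind_address(config_text: str, new_address: str) -> str:
    """Rewrite every --insecure-bind-address=<addr> value in a config file's text."""
    # A function replacement avoids regex back-reference surprises such as "\110".
    return re.sub(
        r"(--insecure-bind-address=)[^\s\"']+",
        lambda m: m.group(1) + new_address,
        config_text,
    )


def grafana_proxy_url(host: str, port: int = 8080,
                      namespace: str = "kube-system",
                      service: str = "monitoring-grafana") -> str:
    """Build the apiserver proxy URL in the form used in this article."""
    return f"http://{host}:{port}/api/v1/proxy/namespaces/{namespace}/services/{service}/"


# Hypothetical config line (see the note above).
before = 'KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"'
after = set_insecure_bind_address(before, "10.0.0.101")
print(after)                            # KUBE_API_ADDRESS="--insecure-bind-address=10.0.0.101"
print(grafana_proxy_url("10.0.0.101"))  # http://10.0.0.101:8080/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
```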