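Environment: ESXi 6.5 virtualization

Host configuration: operating system Oracle Linux 7.3

CPU: 8 vCPUs

Memory: 16 GB

Local disk: 50 GB

Oracle Linux 7.3 was installed as a minimal installation, with defaults throughout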

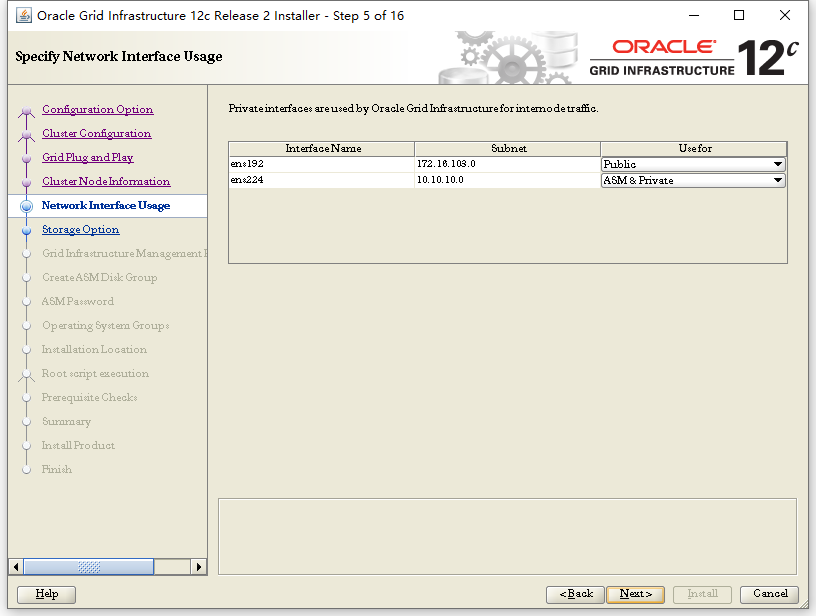

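Each node has two network interfaces: one for the public network and one for the private network. The public network is the one the host is really attached to and carries the real IP address; the private network is the interconnect over which the RAC nodes check each other's heartbeat.

The terminal tools used are Xshell, Xftp and Xmanager

Set the hostnames (avoid characters such as - _ . in the hostname and in the names used in the hosts file)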

hostnamectl set-hostname rac1

hostnamectl set-hostname rac2
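The naming advice above can be checked before a name is applied. The following is a minimal sketch, assuming the rule is simply "non-empty and free of - _ ."; the function name valid_rac_hostname is made up for this illustration.

```shell
# Returns 0 when the name is non-empty and contains none of the characters - _ .
# (valid_rac_hostname is a hypothetical helper, not part of any Oracle tooling.)
valid_rac_hostname() {
    case "$1" in
        ""|*[-_.]*) return 1 ;;
        *) return 0 ;;
    esac
}
```

It could be used as `valid_rac_hostname rac1 && hostnamectl set-hostname rac1`, so that a name such as rac1-vip is rejected before it reaches hostnamectl.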

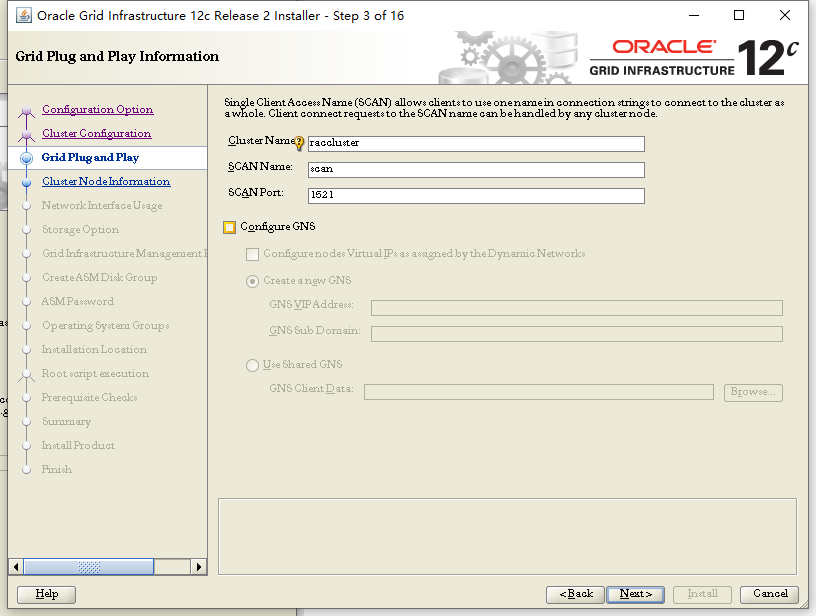

IP address plan

Contents of the hosts file on both nodes

#Public-IP

172.16.103.4 rac1

172.16.103.5 rac2

#Private-IP

10.10.10.1 rac1priv

10.10.10.2 rac2priv

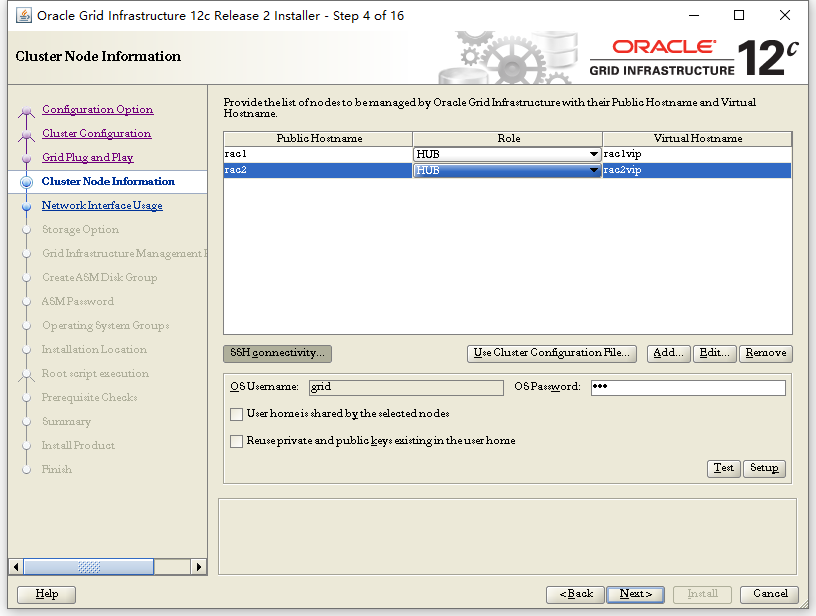

#Virtual-IP

172.16.103.6 rac1vip

172.16.103.7 rac2vip

#Scan-IP

172.16.103.8 scan

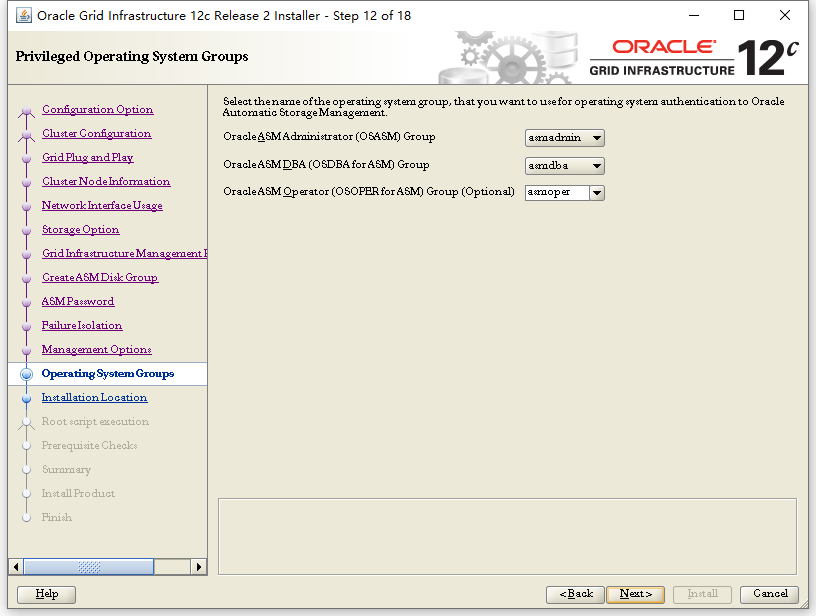
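Because this setup relies on the hosts file instead of DNS, a missing entry on one node only shows up later in the installer. As a rough sketch, a helper like the one below (missing_host_entries is a name invented here) prints every planned name that has no entry in a given hosts file.

```shell
# Prints every name from the argument list that has no entry in the given hosts file.
# Usage: missing_host_entries FILE NAME...
# (hypothetical helper; variables are global because plain sh has no "local")
missing_host_entries() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Skip comment lines; look for the name in any column after the address.
        awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file" || echo "$name"
    done
}
```

Running `missing_host_entries /etc/hosts rac1 rac2 rac1priv rac2priv rac1vip rac2vip scan` on each node should print nothing when the plan above has been entered completely.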

Add users and groups (run as root on all nodes)

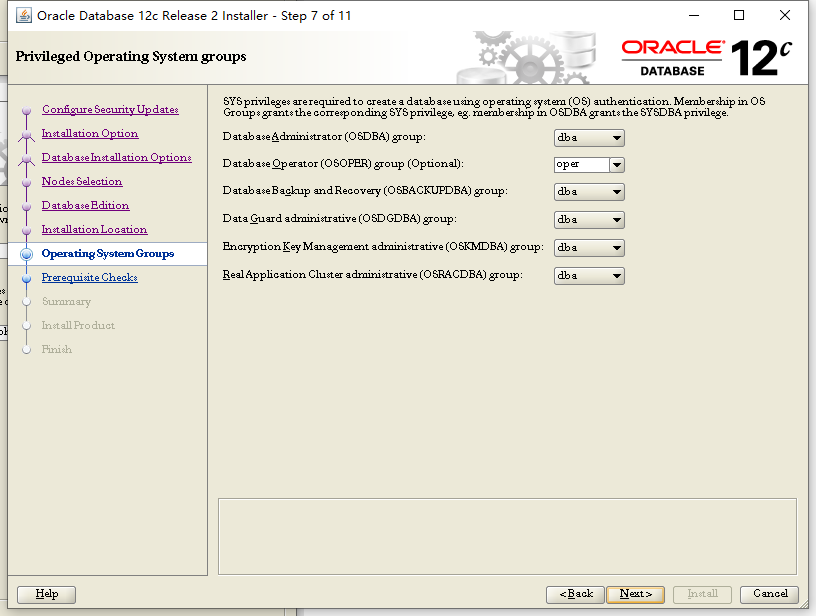

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54329 asmadmin

groupadd -g 54330 racdba

useradd -u 54321 -g oinstall -G dba,asmdba,oper oracle

useradd -u 54322 -g oinstall -G dba,oper,backupdba,dgdba,kmdba,asmdba,asmoper,asmadmin,racdba grid
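With this many groups it is easy to leave one out of a useradd line. A small sketch for double-checking membership follows; missing_groups is a hypothetical helper that compares a space-separated list (for example the output of `id -Gn`) against the groups a user is expected to have.

```shell
# Prints each required group that is absent from a space-separated group list.
# Usage: missing_groups "HAVE LIST" NEED...
# (hypothetical helper for illustration)
missing_groups() {
    have=" $1 "
    shift
    for g in "$@"; do
        case "$have" in
            *" $g "*) ;;          # group present, nothing to report
            *) echo "$g" ;;       # group missing
        esac
    done
}
```

For example, `missing_groups "$(id -Gn oracle)" oinstall dba asmdba oper` should print nothing after the commands above have run.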

Set the passwords for grid and oracle; here they are 123 and 123123 (run as root on all nodes)

passwd grid

passwd oracle

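Disable the firewall, ntp (if present), chronyd and SELinux. If ntpd or chronyd is installed on the system, be sure to remove the package with yum remove, otherwise the Grid configuration reports an error. Oracle has its own time synchronization feature, and if other time synchronization software is installed, the final Grid check fails with a message asking you to enable the ntp or chronyd service (run as root on all nodes)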

systemctl stop firewalld

systemctl disable firewalld

systemctl stop chronyd.service

systemctl disable chronyd.service

vi /etc/selinux/config

SELINUX=disabled
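The same edit can be made without opening an editor. This is a minimal sketch, assuming GNU sed (as shipped with Oracle Linux 7); disable_selinux_in is a name chosen for this example.

```shell
# Sets SELINUX=disabled in the given config file and leaves all other lines alone.
# Usage: disable_selinux_in FILE   (FILE would be /etc/selinux/config on the nodes)
# (hypothetical helper; relies on GNU sed's -i option)
disable_selinux_in() {
    # The pattern requires "=" directly after SELINUX, so SELINUXTYPE= is not touched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}
```

The setting in the file only takes effect after a reboot; until then `setenforce 0` switches a running system to permissive mode.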

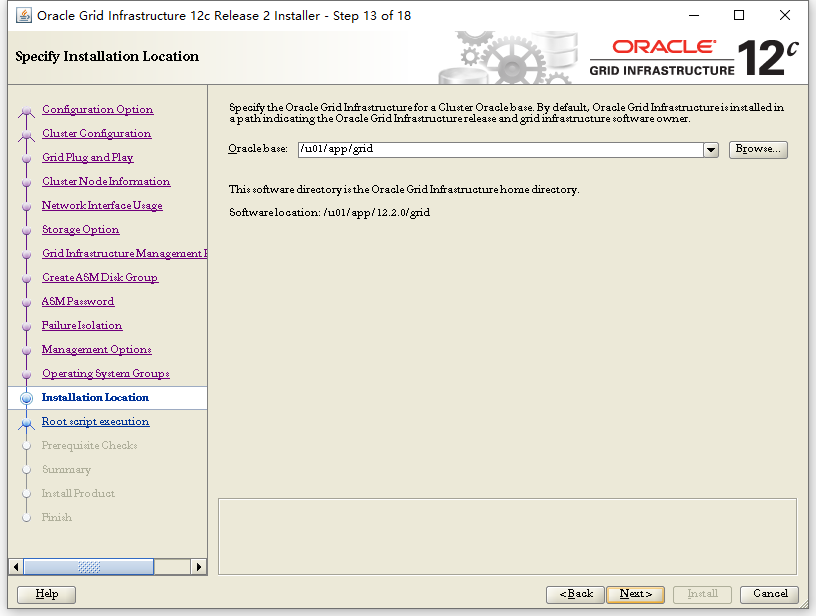

Create the directories (run as root on all nodes)

mkdir -p /u01/app/12.2.0/grid

mkdir -p /u01/app/grid

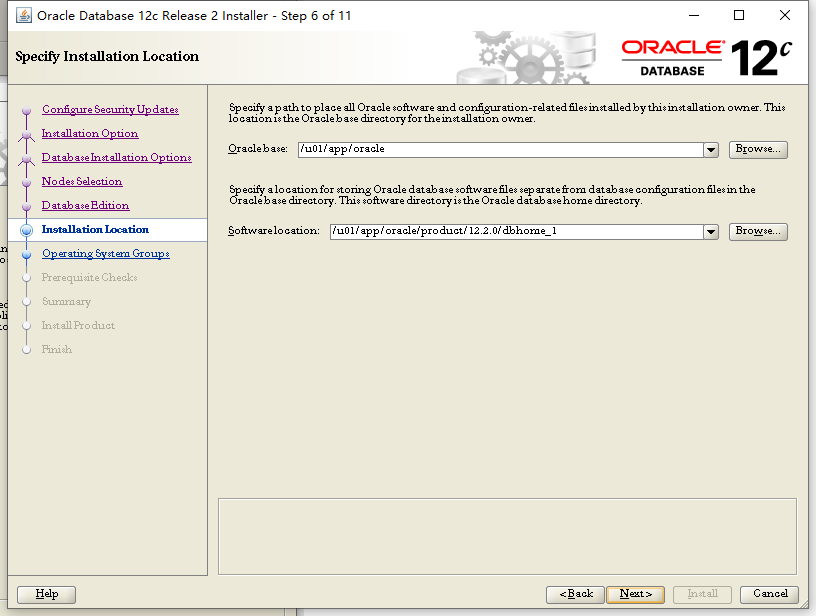

mkdir -p /u01/app/oracle/product/12.2.0/dbhome_1

chown -R grid:oinstall /u01

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

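Configure the environment variables of the oracle user (run as root on all nodes; on rac2, use the commented-out ORACLE_SID line instead)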

vi /home/oracle/.bash_profile

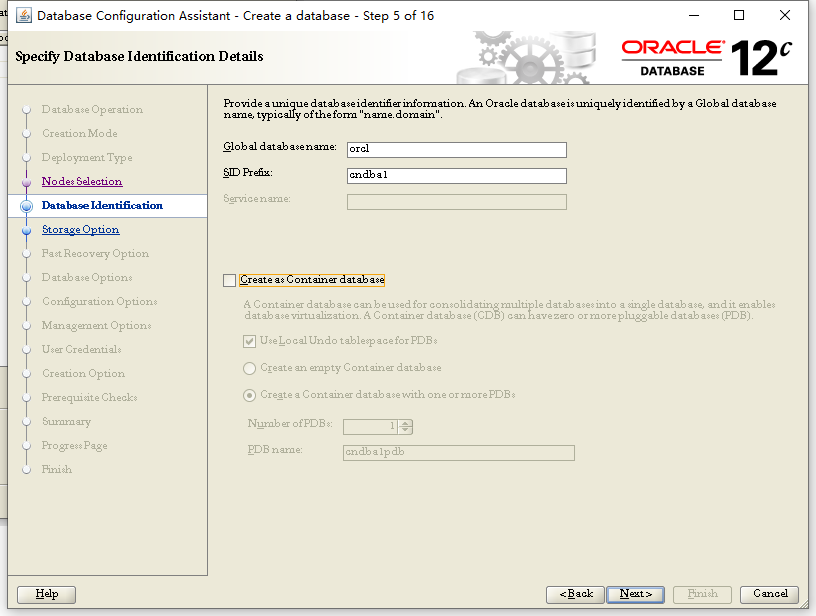

ORACLE_SID=cndba1;export ORACLE_SID

#ORACLE_SID=cndba2;export ORACLE_SID

ORACLE_UNQNAME=cndba;export ORACLE_UNQNAME

JAVA_HOME=/usr/local/java; export JAVA_HOME

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/12.2.0/dbhome_1; export ORACLE_HOME

ORACLE_TERM=xterm; export ORACLE_TERM

NLS_DATE_FORMAT="YYYY:MM:DD HH24:MI:SS"; export NLS_DATE_FORMAT

NLS_LANG=american_america.ZHS16GBK; export NLS_LANG

TNS_ADMIN=$ORACLE_HOME/network/admin; export TNS_ADMIN

ORA_NLS11=$ORACLE_HOME/nls/data; export ORA_NLS11

PATH=.:${JAVA_HOME}/bin:${PATH}:$HOME/bin:$ORACLE_HOME/bin:$ORA_CRS_HOME/bin

PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

LD_LIBRARY_PATH=$ORACLE_HOME/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export CLASSPATH

THREADS_FLAG=native; export THREADS_FLAG

export TEMP=/tmp

export TMPDIR=/tmp

umask 022

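Configure the environment variables of the grid user (run as root on all nodes; on rac2, use the commented-out ORACLE_SID line instead)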

vi /home/grid/.bash_profile

export ORACLE_SID=+ASM1

#export ORACLE_SID=+ASM2

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/12.2.0/grid

export PATH=$ORACLE_HOME/bin:$PATH:/usr/local/bin/:.

export TEMP=/tmp

export TMP=/tmp

export TMPDIR=/tmp

umask 022
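Both profiles differ between the nodes only in the trailing digit of ORACLE_SID. As a sketch, and assuming the hostnames end in the node number as they do here (rac1, rac2), the SID could be derived instead of edited by hand; sid_for_host is a made-up helper name.

```shell
# Derives the per-node SID by appending the trailing digits of the hostname to a prefix.
# Usage: sid_for_host PREFIX HOSTNAME
# (hypothetical helper; assumes the hostname ends in the node number)
sid_for_host() {
    # Strip everything up to and including the last non-digit character.
    n=$(printf '%s' "$2" | sed 's/.*[^0-9]//')
    printf '%s%s\n' "$1" "$n"
}
```

In a profile this would read `export ORACLE_SID=$(sid_for_host +ASM "$(hostname -s)")` for grid, or with the prefix cndba for oracle, so the same file can be copied to both nodes unchanged.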

Adjust the system resource limits (run as root on all nodes)

vi /etc/security/limits.conf

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728
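When these steps are repeated on a node, appending the same lines again leaves duplicates in the file. A possible way around that is sketched below; append_once is a hypothetical helper that adds a line only if an identical one is not already there.

```shell
# Appends LINE to FILE unless an identical line is already present.
# Usage: append_once FILE LINE
# (hypothetical helper for illustration)
append_once() {
    file=$1
    line=$2
    # -x matches whole lines, -F treats the line as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once /etc/security/limits.conf "grid soft nproc 2047"` can be run any number of times and still leaves exactly one such line.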

Configure NOZEROCONF (run as root on all nodes)

vi /etc/sysconfig/network

NOZEROCONF=yes

Adjust the kernel parameters (run as root on all nodes)

vi /etc/sysctl.conf

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

Apply the settings (run as root on all nodes)

sysctl -p
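To confirm that a value from the file really is the one in effect, the configured value can be read back and compared with the running kernel. A minimal sketch, with sysctl_file_value as an invented helper name:

```shell
# Prints the value configured for KEY in a sysctl-style file ("key = value").
# Usage: sysctl_file_value FILE KEY
# (hypothetical helper for illustration)
sysctl_file_value() {
    awk -F= -v k="$2" '
        /^[ \t]*#/ { next }
        {
            key = $1; val = $2
            # Trim the blanks around the key and the value.
            gsub(/^[ \t]+|[ \t]+$/, "", key)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (key == k) print val
        }
    ' "$1"
}
```

A comparison such as `[ "$(sysctl -n fs.file-max)" = "$(sysctl_file_value /etc/sysctl.conf fs.file-max)" ]` works for single-valued keys; multi-valued keys like kernel.sem are printed by sysctl with tabs between the numbers, so their whitespace would need normalizing first.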

Configure a local yum repository (run as root on all nodes)

mkdir -p /mnt/cdrom

mount /dev/cdrom /mnt/cdrom

cd /etc/yum.repos.d/

mv public-yum-ol7.repo public-yum-ol7.repo.bak

vi oracle.repo

[oracle]

name=oracle

baseurl=file:///mnt/cdrom

gpgcheck=0

enabled=1

yum clean all
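The repository file can also be written in one step rather than typed in vi. The sketch below assumes the same section name and options as shown above; write_local_repo is a hypothetical function name.

```shell
# Writes a yum repository definition that points at a locally mounted ISO.
# Usage: write_local_repo REPO_FILE MOUNT_POINT
# (hypothetical helper; MOUNT_POINT is expected to be an absolute path)
write_local_repo() {
    cat > "$1" <<EOF
[oracle]
name=oracle
baseurl=file://$2
gpgcheck=0
enabled=1
EOF
}
```

Calling `write_local_repo /etc/yum.repos.d/oracle.repo /mnt/cdrom` produces the same file content as the manual edit.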

Install the dependency packages

yum install -y binutils compat-libstdc++-33 gcc gcc-c++ glibc glibc.i686 glibc-devel ksh libgcc.i686 libstdc++-devel libaio libaio.i686 libaio-devel libaio-devel.i686 libXext libXext.i686 libXtst libXtst.i686 libX11 libX11.i686 libXau libXau.i686 libxcb libxcb.i686 libXi libXi.i686 make sysstat unixODBC unixODBC-devel zlib-devel zlib-devel.i686 compat-libcap1 nfs-utils smartmontools unzip xclock xterm xorg*

Locate cvuqdisk-1.0.10-1.rpm in the rpm directory of the database installation package, upload it to /tmp and install it

Install cvuqdisk (run as root on all nodes)

rpm -ivh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

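Enable SSH on the ESXi host, log in to the host as root, and create the virtual disks with the following commands (VMware desktop users should create the virtual disks with the corresponding VMware commands)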

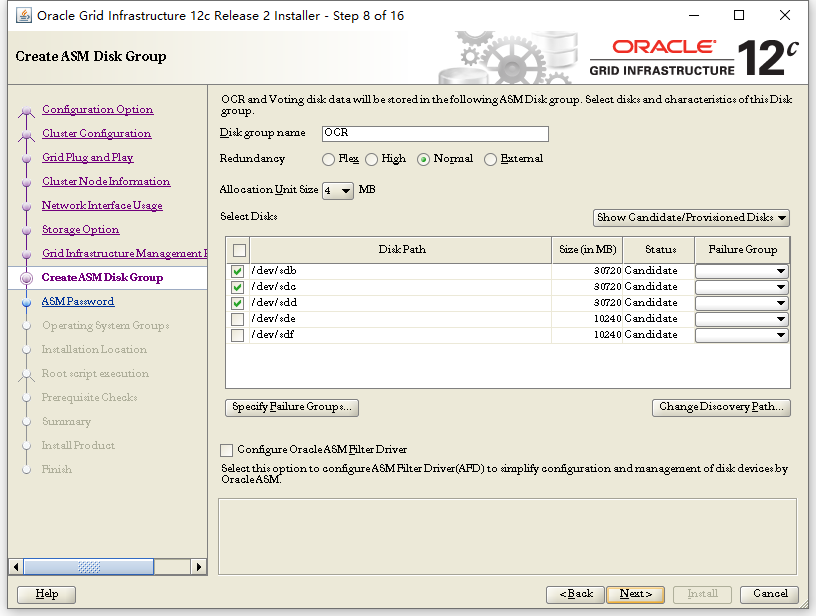

vmkfstools -c 30Gb -a lsilogic -d eagerzeroedthick /vmfs/volumes/datastore1/sharedisk/asm1.vmdk

vmkfstools -c 30Gb -a lsilogic -d eagerzeroedthick /vmfs/volumes/datastore1/sharedisk/asm2.vmdk

vmkfstools -c 30Gb -a lsilogic -d eagerzeroedthick /vmfs/volumes/datastore1/sharedisk/asm3.vmdk

vmkfstools -c 10Gb -a lsilogic -d eagerzeroedthick /vmfs/volumes/datastore1/sharedisk/asm4.vmdk

vmkfstools -c 10Gb -a lsilogic -d eagerzeroedthick /vmfs/volumes/datastore1/sharedisk/asm5.vmdk

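The first three 30 GB virtual disks hold the OCR and voting files in a normal-redundancy disk group; the last two 10 GB virtual disks are used as data disks

Modify the configuration of the virtual machines' shared disks

Shut down the virtual machine first. When adding an existing disk, there is no need to worry about whether the type is shown as thick provision, eagerly zeroed: disks created with the commands above are always thick provisioned and eagerly zeroed, the client simply does not display it that way while the disk is being added, and the type shows correctly once the disk has been added. What does need attention is the disk mode, which must be set to Independent - Persistent while adding the disk. This option is defined at add time, and it cannot be changed afterwards if the virtual machine has snapshots. For sharing, edit the virtual machine again after the disk has been added and select Multi-writer sharing

Add the existing disks; the final settings should be "thick provision, eagerly zeroed; Independent - Persistent; Multi-writer sharing"

After booting, use the following command to test whether the UUID can be read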

/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdc

36000c29392c541f9ef3e5f72aa62e0b8

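If no UUID is returned, add the following parameter to the virtual machine's configuration (on ESXi 6.5, select the virtual machine in the web console, then Actions, Edit settings, VM Options, Advanced, Edit Configuration, Add parameter; VMware desktop users can edit the virtual machine's .vmx file directly with a text editor)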

disk.EnableUUID = "TRUE"

Bind the shared disks with udev

for i in b c d e f

do

echo "KERNEL==\"sd?\",SUBSYSTEM==\"block\", PROGRAM==\"/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\",RESULT==\"`/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", SYMLINK+=\"asm-disk$i\",OWNER=\"grid\", GROUP=\"asmadmin\",MODE=\"0660\"" >>/etc/udev/rules.d/99-oracle-asmdevices.rules

done
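The escaped quotes in the loop above are hard to read and easy to break. As an alternative sketch, the rule text can be built with printf and single quotes; asm_udev_rule is a name invented for this example, and the rule it prints carries the same keys as the one above.

```shell
# Prints one udev rule that matches a disk by its SCSI id and
# exposes it as /dev/asm-disk<SUFFIX>, owned by grid:asmadmin.
# Usage: asm_udev_rule SUFFIX SCSI_ID
# (hypothetical helper; $name inside single quotes is left literal for udev to expand)
asm_udev_rule() {
    printf 'KERNEL=="sd?", SUBSYSTEM=="block", '
    printf 'PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", '
    printf 'RESULT=="%s", SYMLINK+="asm-disk%s", ' "$2" "$1"
    printf 'OWNER="grid", GROUP="asmadmin", MODE="0660"\n'
}
```

The rules file could then be generated with `for i in b c d e f; do asm_udev_rule "$i" "$(/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i)"; done > /etc/udev/rules.d/99-oracle-asmdevices.rules`. Redirecting with > instead of >> means a second run replaces the file rather than duplicating every rule.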

Re-read the partition tables

/sbin/partprobe /dev/sdb

/sbin/partprobe /dev/sdc

/sbin/partprobe /dev/sdd

/sbin/partprobe /dev/sde

/sbin/partprobe /dev/sdf

Test with udevadm

/sbin/udevadm test /sys/block/sdb

/sbin/udevadm test /sys/block/sdc

/sbin/udevadm test /sys/block/sdd

/sbin/udevadm test /sys/block/sde

/sbin/udevadm test /sys/block/sdf

Reload the udev rules

/sbin/udevadm control --reload-rules

Verify the disks bound by udev

ls -l /dev/as*

Output

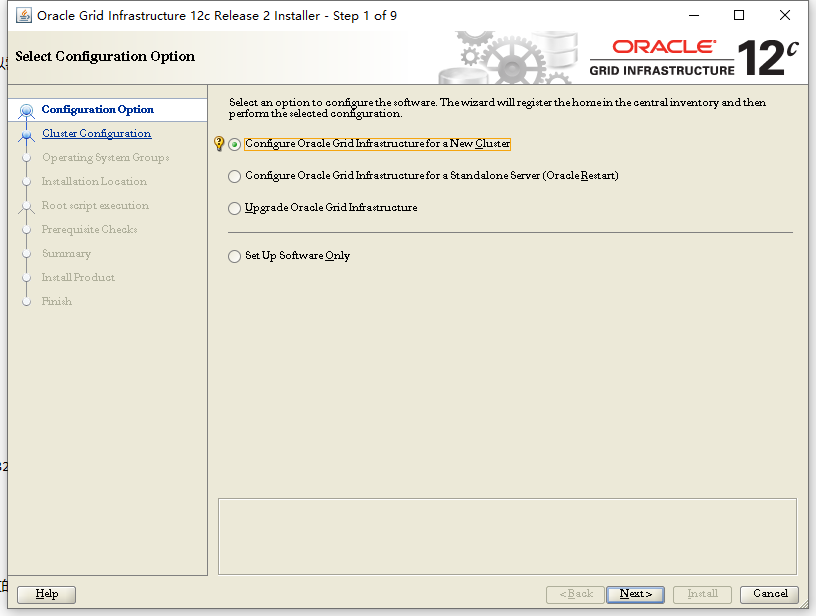

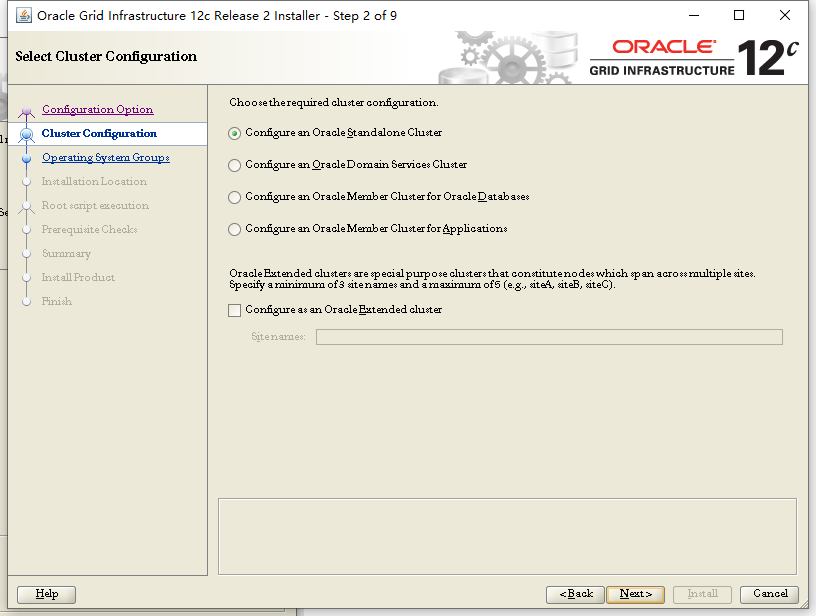

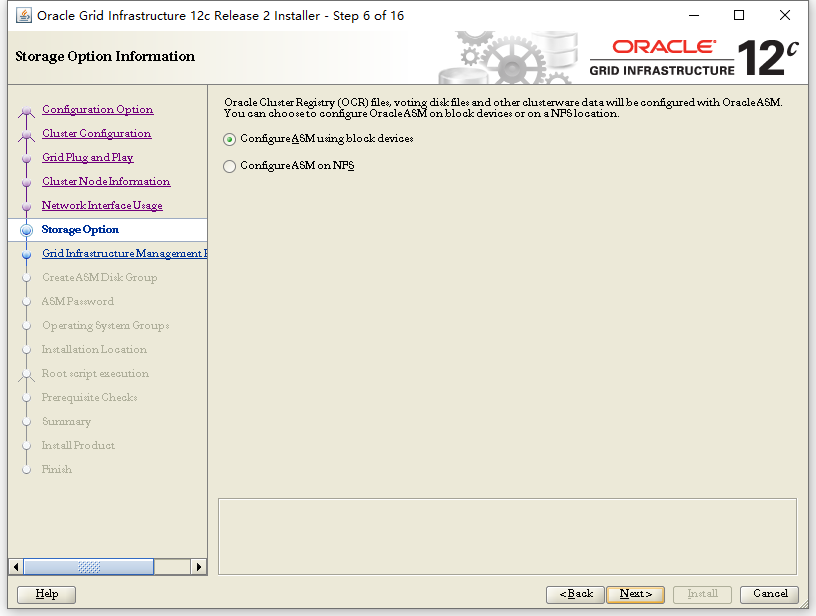

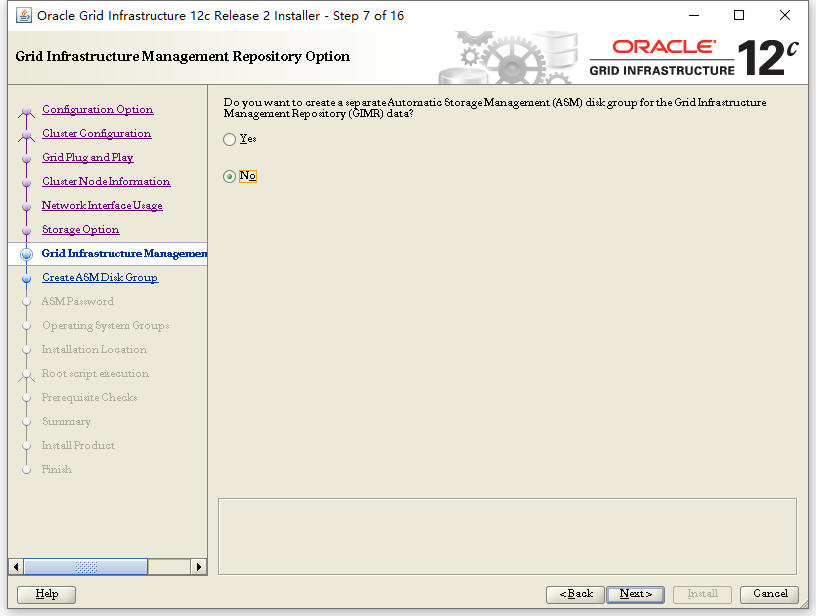

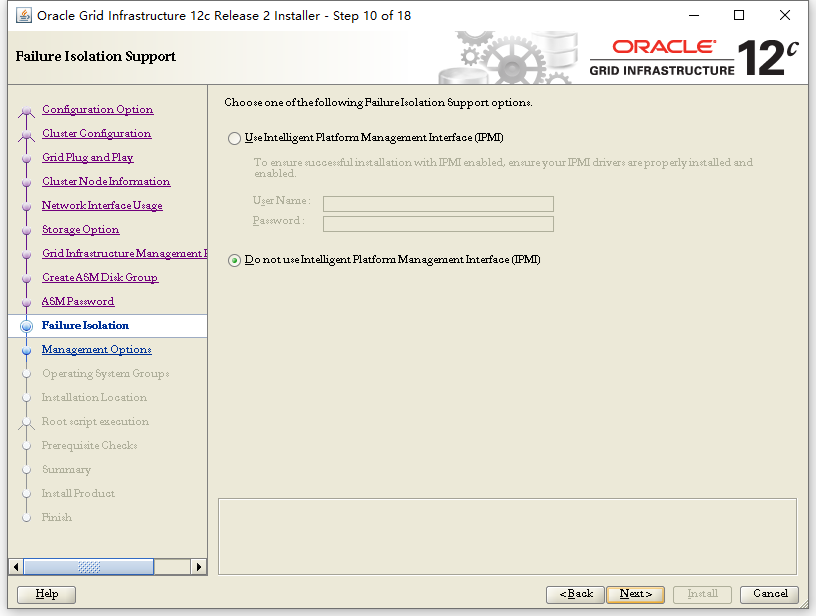

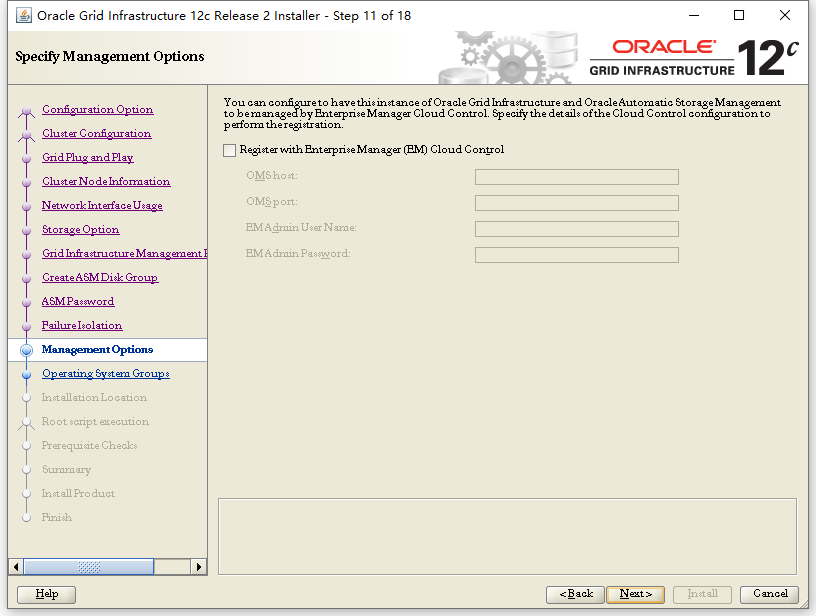

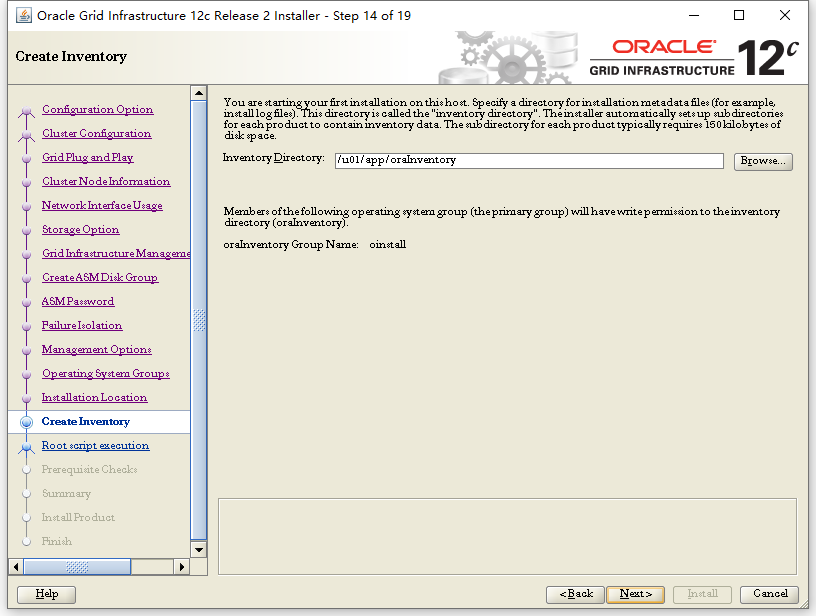

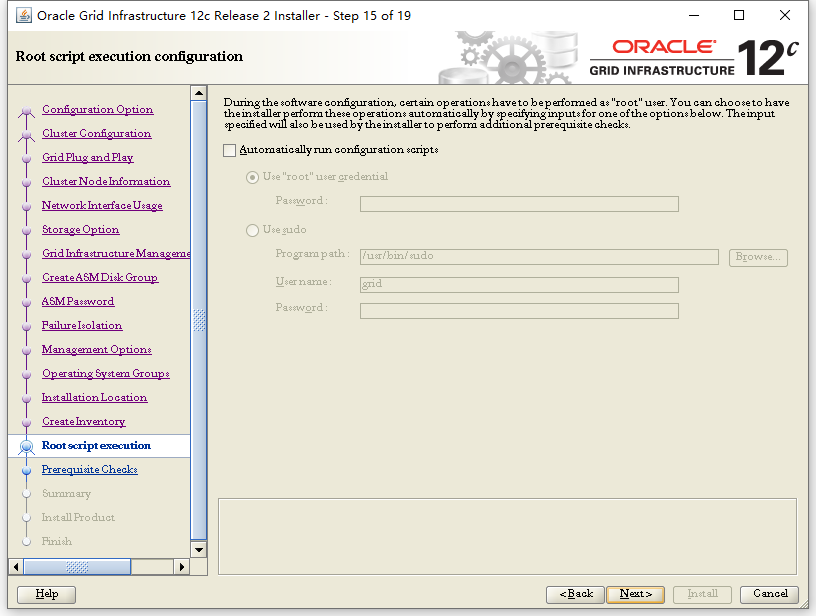

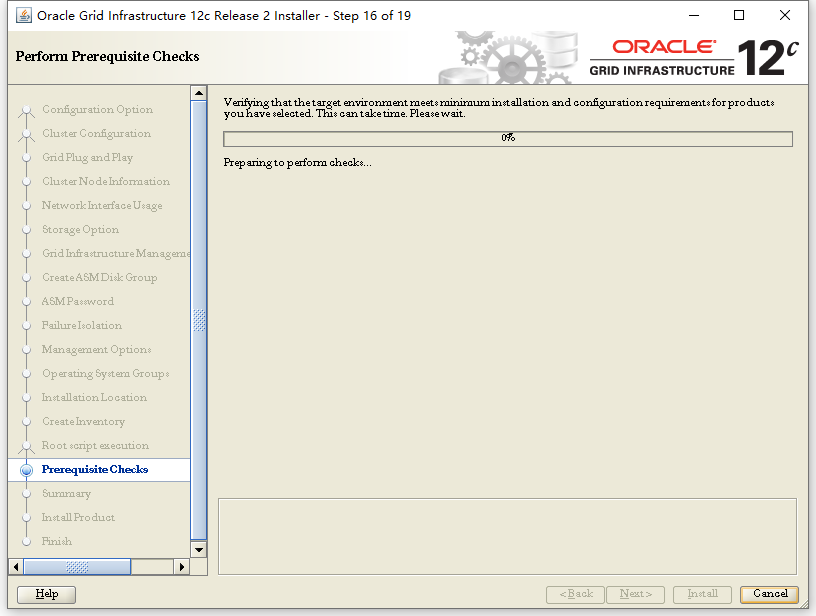

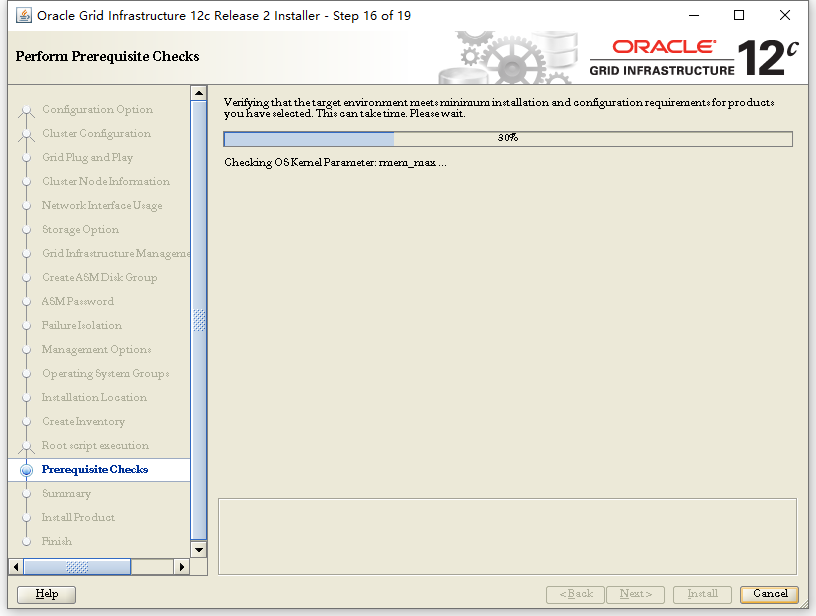

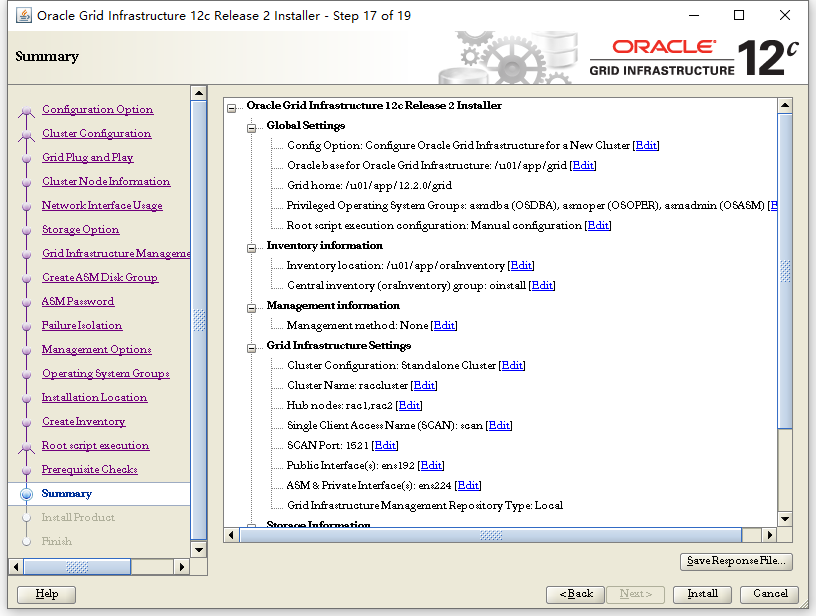

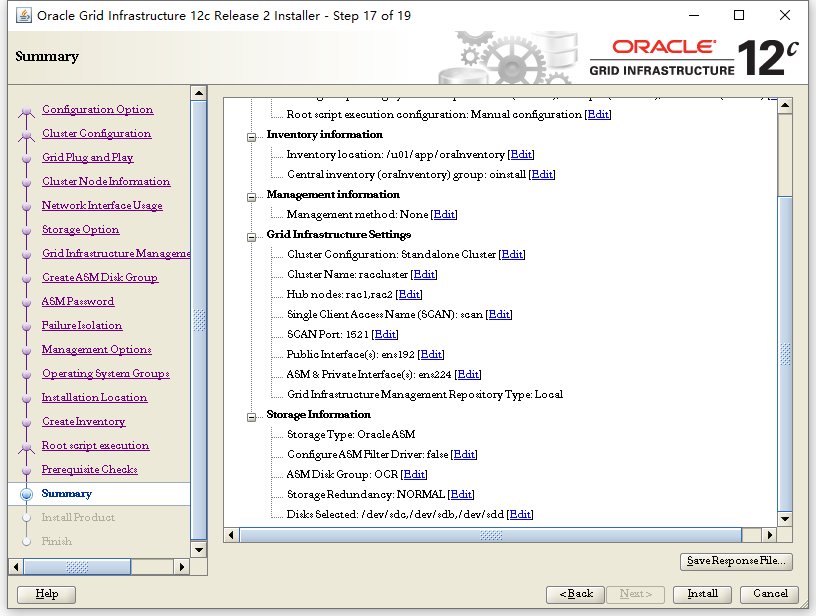

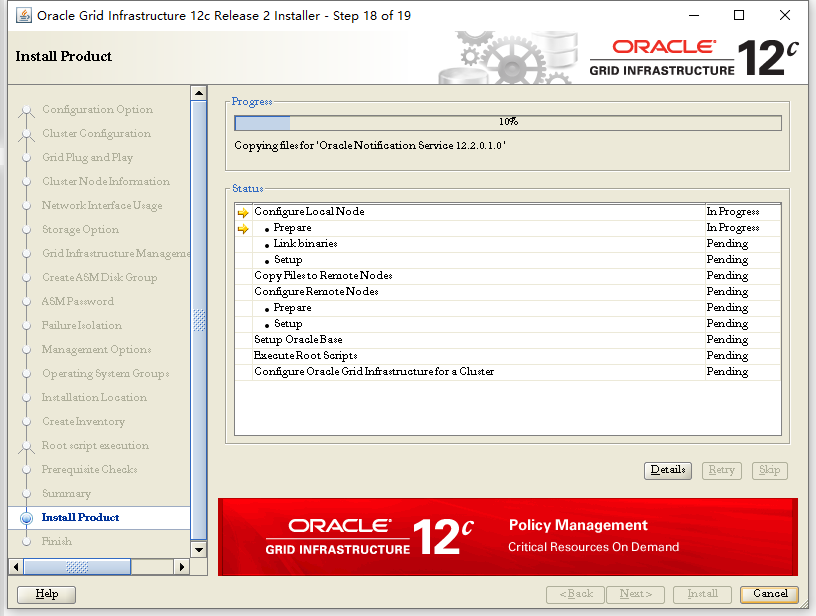

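Install Grid

Note: installing Grid Infrastructure 12cR2 differs from earlier releases, because the software is now simply unzipped into place. The installation media therefore has to be copied into the Grid home first and unzipped there; the archive must be inside the Grid home when it is extracted.

Log in as the grid user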

[grid@rac1 ~]$ echo $ORACLE_HOME

/u01/app/12.2.0/grid

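Upload the grid package to the /u01/app/12.2.0/grid directory, then install the unzip utility (as root)

Unzip the grid package (only on the node from which the installation is run; run as grid)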

unzip linuxx64_12201_grid_home.zip

Start the graphical installer (run as grid)

[grid@rac1 grid]$ ./gridSetup.sh

Launching Oracle Grid Infrastructure Setup Wizard…

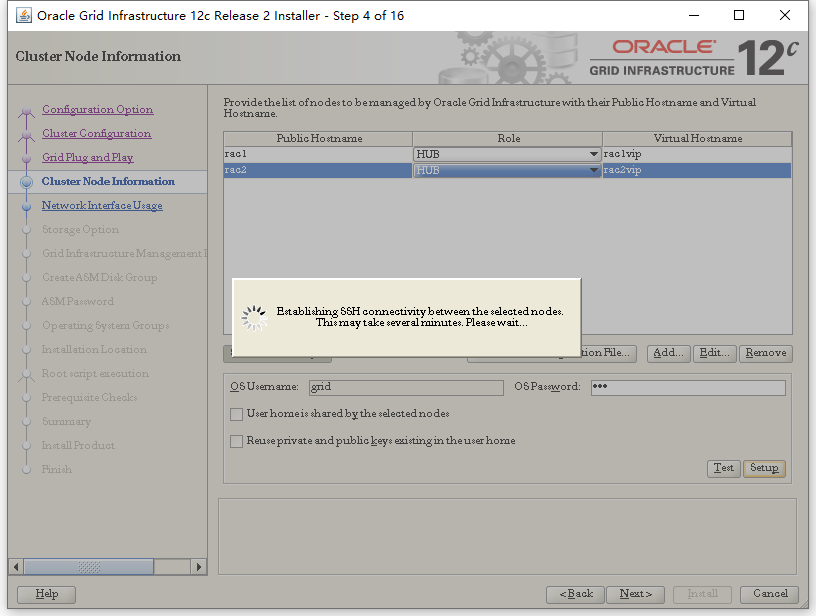

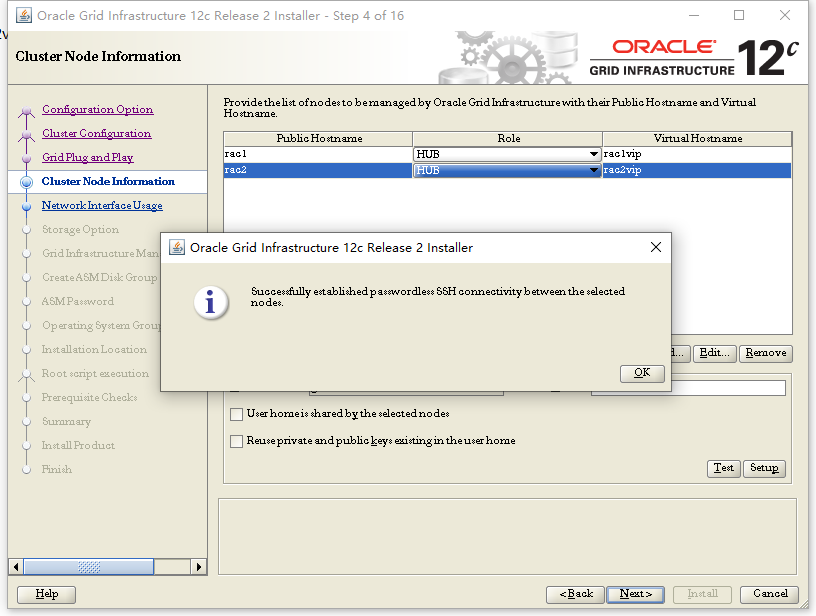

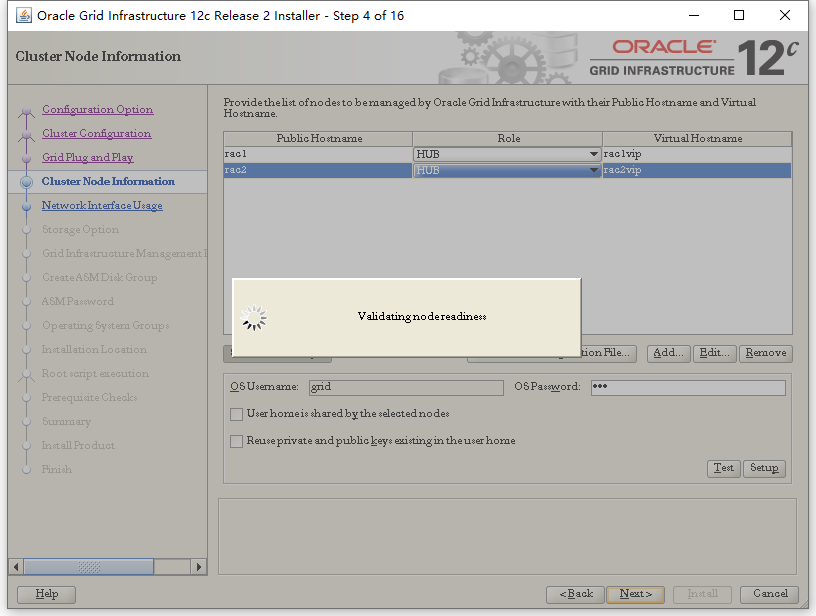

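Here, first click Edit on rac1 and change the default rac1-vip to rac1vip, removing the hyphen. Then click Add to add rac2: the name is rac2 and the virtual hostname is rac2vip, again without a hyphen. Next click SSH connectivity, enter the grid user's password in OS Password, and click Setup below

The following progress window appears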

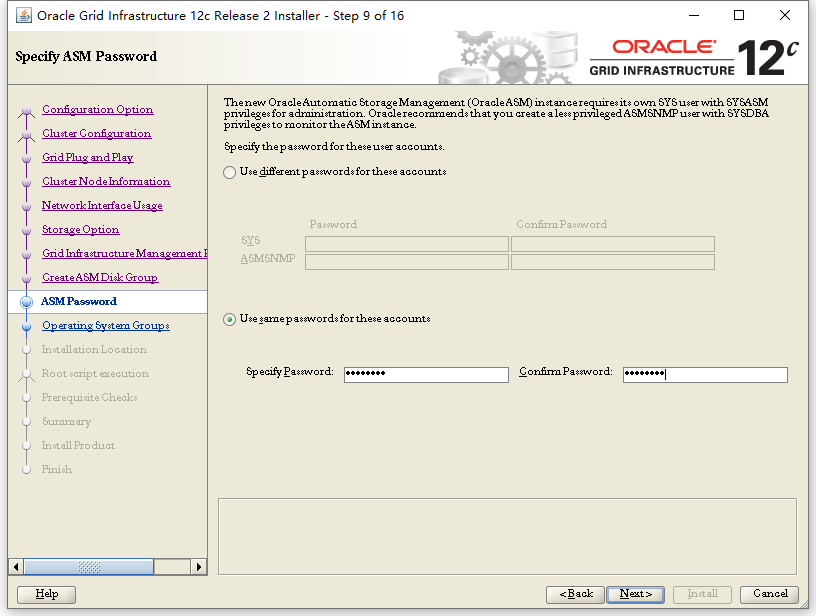

The password set in the step above is: Admin123

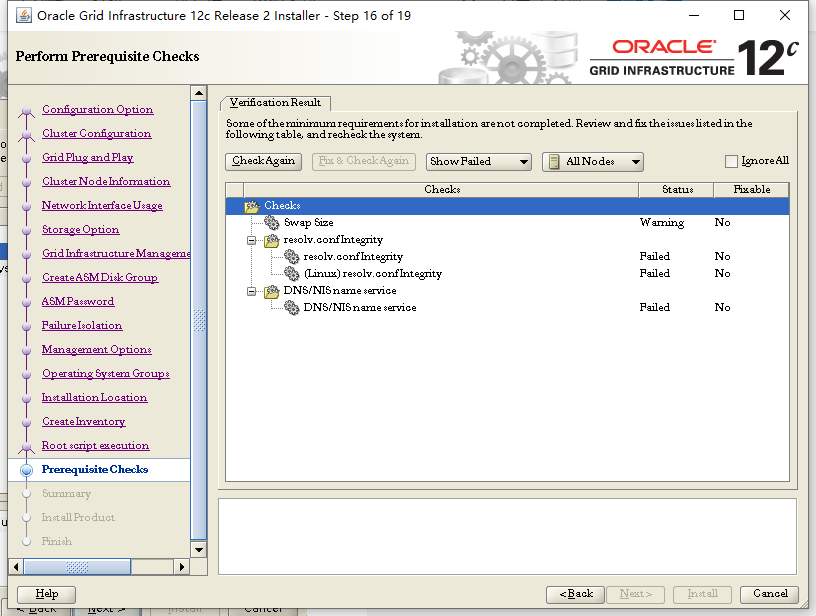

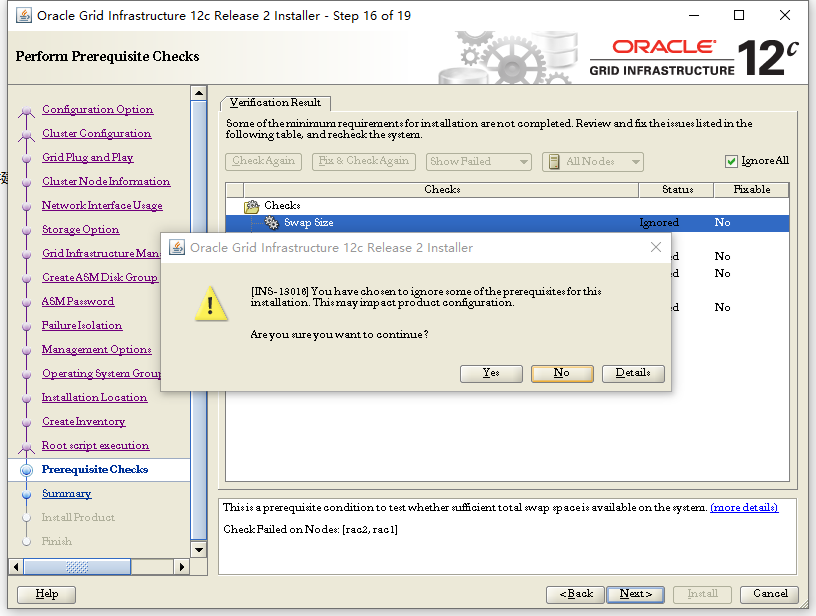

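DNS is not used to resolve hostnames, so the DNS and resolv.conf errors are ignored. The swap size can be defined when the system is installed. In my environment the physical memory is 16 GB and the Grid installer suggests 15.4188 GB of swap; I am not sure whether Oracle officially recommends a swap partition as large as physical memory. All of these were ignored: select Ignore All

Click Yes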

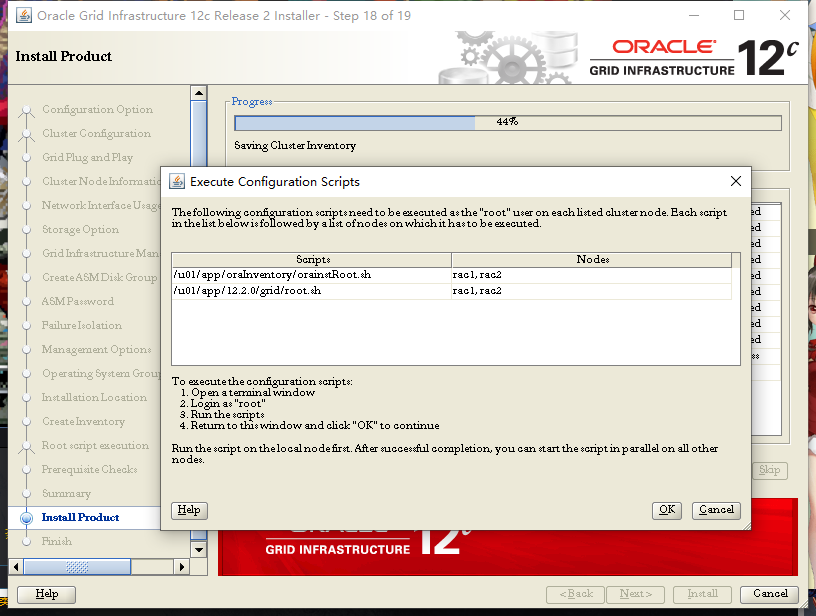

As root, run the scripts on each node: first run orainstRoot.sh and root.sh on node 1, then run them in the same order on node 2

Log from node 1

[root@rac1 grid]# whoami

root

[root@rac1 grid]# cd /u01/app/oraInventory/

[root@rac1 oraInventory]# ./orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac1 oraInventory]# cd /u01/app/12.2.0/grid/

[root@rac1 grid]# ./root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/12.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/12.2.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac1/crsconfig/rootcrs_rac1_2019-03-27_08-10-04AM.log

2019/03/27 08:10:06 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2019/03/27 08:10:06 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.

2019/03/27 08:10:30 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/03/27 08:10:30 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2019/03/27 08:10:34 CLSRSC-363: User ignored prerequisites during installation

2019/03/27 08:10:34 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2019/03/27 08:10:35 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2019/03/27 08:10:36 CLSRSC-594: Executing installation step 5 of 19: 'SaveParamFile'.

2019/03/27 08:10:41 CLSRSC-594: Executing installation step 6 of 19: 'SetupOSD'.

2019/03/27 08:10:42 CLSRSC-594: Executing installation step 7 of 19: 'CheckCRSConfig'.

2019/03/27 08:10:42 CLSRSC-594: Executing installation step 8 of 19: 'SetupLocalGPNP'.

2019/03/27 08:11:02 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2019/03/27 08:11:08 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2019/03/27 08:11:08 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2019/03/27 08:11:12 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2019/03/27 08:11:28 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2019/03/27 08:11:56 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2019/03/27 08:12:00 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac1'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2019/03/27 08:12:29 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2019/03/27 08:12:33 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac1'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2672: Attempting to start 'ora.evmd' on 'rac1'

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'

CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded

CRS-2676: Start of 'ora.evmd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'

CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'

CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded

Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-190327AM081309.log for details.

2019/03/27 08:13:54 CLSRSC-482: Running command: '/u01/app/12.2.0/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-2672: Attempting to start 'ora.crf' on 'rac1'

CRS-2672: Attempting to start 'ora.storage' on 'rac1'

CRS-2676: Start of 'ora.storage' on 'rac1' succeeded

CRS-2676: Start of 'ora.crf' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac1'

CRS-2676: Start of 'ora.crsd' on 'rac1' succeeded

CRS-4256: Updating the profile

Successful addition of voting disk ef2a7a3187914f83bf26540634ff2096.

Successful addition of voting disk db1b21a9ba0d4f24bfde4a009802bf7e.

Successful addition of voting disk aaaeba3168554feebf40b64df4adb643.

Successfully replaced voting disk group with +OCR.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE ef2a7a3187914f83bf26540634ff2096 (/dev/sdc) [OCR]

2. ONLINE db1b21a9ba0d4f24bfde4a009802bf7e (/dev/sdb) [OCR]

3. ONLINE aaaeba3168554feebf40b64df4adb643 (/dev/sdd) [OCR]

Located 3 voting disk(s).

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac1'

CRS-2673: Attempting to stop 'ora.crsd' on 'rac1'

CRS-2677: Stop of 'ora.crsd' on 'rac1' succeeded

CRS-2673: Attempting to stop 'ora.storage' on 'rac1'

CRS-2673: Attempting to stop 'ora.crf' on 'rac1'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac1'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac1'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac1'

CRS-2677: Stop of 'ora.drivers.acfs' on 'rac1' succeeded

CRS-2677: Stop of 'ora.crf' on 'rac1' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'rac1' succeeded

CRS-2677: Stop of 'ora.storage' on 'rac1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac1'

CRS-2677: Stop of 'ora.mdnsd' on 'rac1' succeeded

CRS-2677: Stop of 'ora.asm' on 'rac1' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac1'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac1' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'rac1'

CRS-2673: Attempting to stop 'ora.evmd' on 'rac1'

CRS-2677: Stop of 'ora.ctssd' on 'rac1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'rac1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rac1'

CRS-2677: Stop of 'ora.cssd' on 'rac1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'rac1'

CRS-2677: Stop of 'ora.gipcd' on 'rac1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

2019/03/27 08:15:03 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'

CRS-2672: Attempting to start 'ora.evmd' on 'rac1'

CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded

CRS-2676: Start of 'ora.evmd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'

CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'

CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'

CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac1'

CRS-2672: Attempting to start 'ora.ctssd' on 'rac1'

CRS-2676: Start of 'ora.ctssd' on 'rac1' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac1'

CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'rac1'

CRS-2676: Start of 'ora.storage' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.crf' on 'rac1'

CRS-2676: Start of 'ora.crf' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac1'

CRS-2676: Start of 'ora.crsd' on 'rac1' succeeded

CRS-6023: Starting Oracle Cluster Ready Services-managed resources

CRS-6017: Processing resource auto-start for servers: rac1

CRS-6016: Resource auto-start has completed for server rac1

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2019/03/27 08:17:40 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/03/27 08:17:40 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

CRS-2672: Attempting to start 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac1'

CRS-2676: Start of 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac1'

CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.OCR.dg' on 'rac1'

CRS-2676: Start of 'ora.OCR.dg' on 'rac1' succeeded

2019/03/27 08:19:18 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2019/03/27 08:19:57 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Log from node 2

[root@rac2 ~]# whoami

root

[root@rac2 ~]# cd /u01/app/oraInventory/

[root@rac2 oraInventory]# ./orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac2 oraInventory]# cd ../12.2.0/grid/

[root@rac2 grid]# ./root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/12.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/12.2.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac2/crsconfig/rootcrs_rac2_2019-03-27_08-22-39AM.log

2019/03/27 08:22:41 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2019/03/27 08:22:41 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.

2019/03/27 08:23:05 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/03/27 08:23:05 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2019/03/27 08:23:06 CLSRSC-363: User ignored prerequisites during installation

2019/03/27 08:23:06 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2019/03/27 08:23:07 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2019/03/27 08:23:07 CLSRSC-594: Executing installation step 5 of 19: 'SaveParamFile'.

2019/03/27 08:23:09 CLSRSC-594: Executing installation step 6 of 19: 'SetupOSD'.

2019/03/27 08:23:11 CLSRSC-594: Executing installation step 7 of 19: 'CheckCRSConfig'.

2019/03/27 08:23:11 CLSRSC-594: Executing installation step 8 of 19: 'SetupLocalGPNP'.

2019/03/27 08:23:13 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2019/03/27 08:23:14 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2019/03/27 08:23:14 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2019/03/27 08:23:15 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2019/03/27 08:23:31 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2019/03/27 08:23:56 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2019/03/27 08:23:57 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac2'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2019/03/27 08:24:24 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2019/03/27 08:24:25 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac2'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac2'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac2'

CRS-2677: Stop of 'ora.drivers.acfs' on 'rac2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

2019/03/27 08:24:53 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac2'

CRS-2672: Attempting to start 'ora.evmd' on 'rac2'

CRS-2676: Start of 'ora.mdnsd' on 'rac2' succeeded

CRS-2676: Start of 'ora.evmd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac2'

CRS-2676: Start of 'ora.gpnpd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'rac2'

CRS-2676: Start of 'ora.gipcd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac2'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac2'

CRS-2676: Start of 'ora.diskmon' on 'rac2' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac2'

CRS-2672: Attempting to start 'ora.ctssd' on 'rac2'

CRS-2676: Start of 'ora.ctssd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.crf' on 'rac2'

CRS-2676: Start of 'ora.crf' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac2'

CRS-2676: Start of 'ora.crsd' on 'rac2' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac2'

CRS-2676: Start of 'ora.asm' on 'rac2' succeeded

CRS-6017: Processing resource auto-start for servers: rac2

CRS-2672: Attempting to start 'ora.net1.network' on 'rac2'

CRS-2672: Attempting to start 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac2'

CRS-2676: Start of 'ora.net1.network' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.ons' on 'rac2'

CRS-2676: Start of 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac2'

CRS-2676: Start of 'ora.ons' on 'rac2' succeeded

CRS-2676: Start of 'ora.asm' on 'rac2' succeeded

CRS-6016: Resource auto-start has completed for server rac2

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2019/03/27 08:27:39 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/03/27 08:27:39 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2019/03/27 08:28:00 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2019/03/27 08:28:28 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... Succeeded

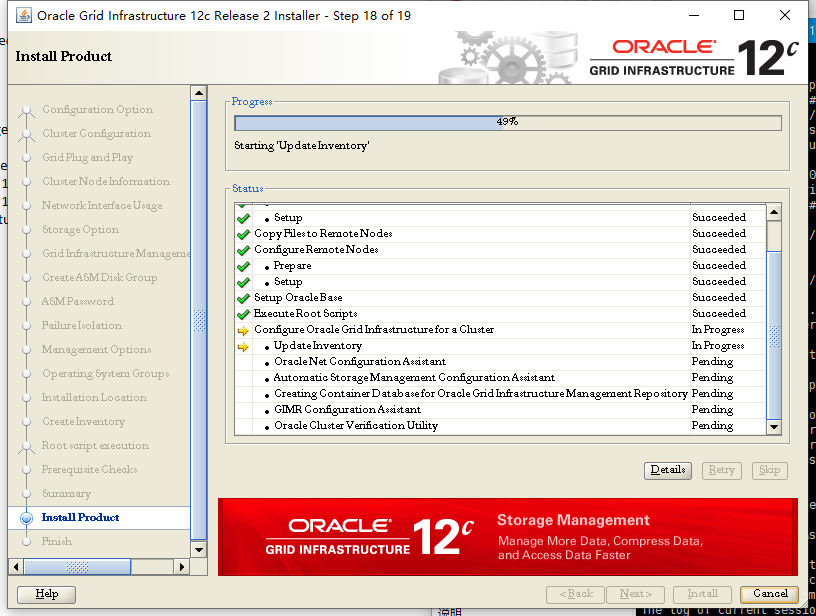

After both scripts have been run on both nodes, click OK so that the installer continues

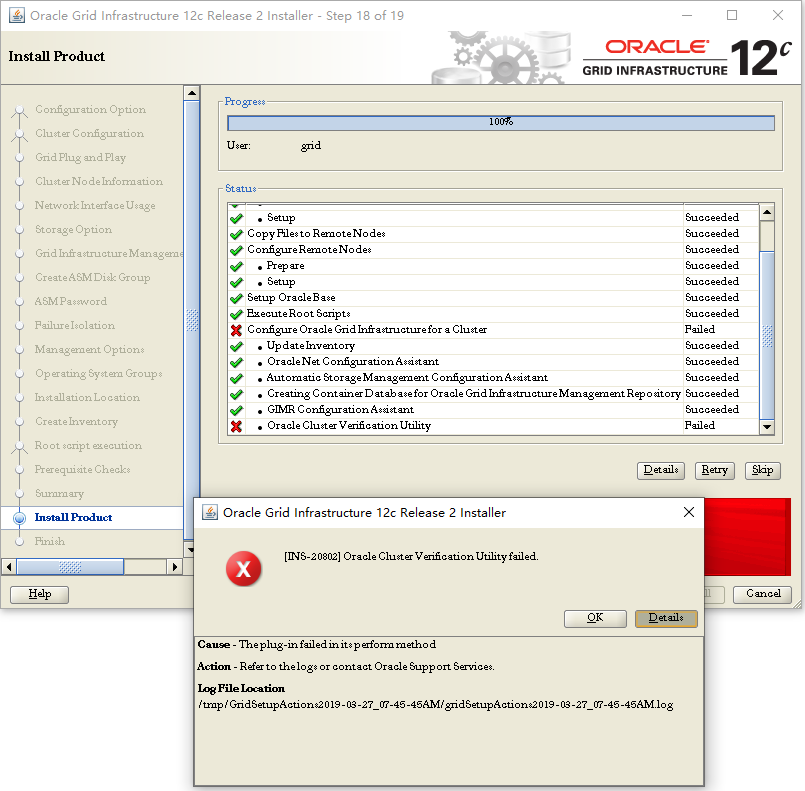

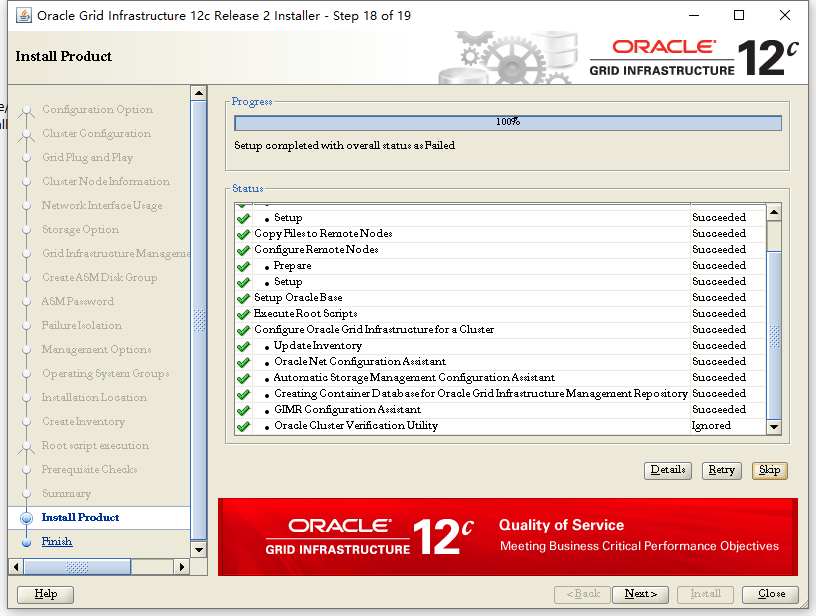

The installer reports an error at the end; check the log for the details

[root@rac1 GridSetupActions2019-03-27_07-45-45AM]# tail -n 50 gridSetupActions2019-03-27_07-45-45AM.log

INFO: [Mar 27, 2019 8:50:45 AM] Read:

INFO: [Mar 27, 2019 8:50:45 AM] Read: Verifying ASM best practices

INFO: [Mar 27, 2019 8:50:45 AM] Verifying ASM best practices

INFO: [Mar 27, 2019 8:50:45 AM] Read:

INFO: [Mar 27, 2019 8:50:49 AM] Read: Collecting ASM_POWER_LIMIT ...collected

INFO: [Mar 27, 2019 8:50:49 AM] Collecting ASM_POWER_LIMIT ...collected

INFO: [Mar 27, 2019 8:50:51 AM] Read: Collecting ASM disk I/O error ...collected

INFO: [Mar 27, 2019 8:50:51 AM] Collecting ASM disk I/O error ...collected

INFO: [Mar 27, 2019 8:50:53 AM] Read: Collecting Disks without disk_group ...collected

INFO: [Mar 27, 2019 8:50:53 AM] Collecting Disks without disk_group ...collected

INFO: [Mar 27, 2019 8:50:55 AM] Read: Collecting ASM SHARED_POOL_SIZE parameter ...collected

INFO: [Mar 27, 2019 8:50:55 AM] Collecting ASM SHARED_POOL_SIZE parameter ...collected

INFO: [Mar 27, 2019 8:50:57 AM] Read: Collecting ASM disk group free space ...collected

INFO: [Mar 27, 2019 8:50:57 AM] Collecting ASM disk group free space ...collected

INFO: [Mar 27, 2019 8:50:59 AM] Read: Collecting ASM disk rebalance operations in WAIT status ...collected

INFO: [Mar 27, 2019 8:50:59 AM] Collecting ASM disk rebalance operations in WAIT status ...collected

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read: Baseline collected.

INFO: [Mar 27, 2019 8:50:59 AM] Baseline collected.

INFO: [Mar 27, 2019 8:50:59 AM] Read: Collection report for this execution is saved in file "/u01/app/grid/crsdata/@global/cvu/baseline/install/grid_install_12.2.0.1.0.zip".

INFO: [Mar 27, 2019 8:50:59 AM] Collection report for this execution is saved in file "/u01/app/grid/crsdata/@global/cvu/baseline/install/grid_install_12.2.0.1.0.zip".

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read: Post-check for cluster services setup was unsuccessful.

INFO: [Mar 27, 2019 8:50:59 AM] Post-check for cluster services setup was unsuccessful.

INFO: [Mar 27, 2019 8:50:59 AM] Read: Checks did not pass for the following nodes:

INFO: [Mar 27, 2019 8:50:59 AM] Checks did not pass for the following nodes:

INFO: [Mar 27, 2019 8:50:59 AM] Read: rac2,rac1

INFO: [Mar 27, 2019 8:50:59 AM] rac2,rac1

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read: Failures were encountered during execution of CVU verification request "stage -post crsinst".

INFO: [Mar 27, 2019 8:50:59 AM] Failures were encountered during execution of CVU verification request "stage -post crsinst".

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read: Verifying Single Client Access Name (SCAN) ...FAILED

INFO: [Mar 27, 2019 8:50:59 AM] Verifying Single Client Access Name (SCAN) ...FAILED

INFO: [Mar 27, 2019 8:50:59 AM] Read: Verifying DNS/NIS name service 'scan' ...FAILED

INFO: [Mar 27, 2019 8:50:59 AM] Verifying DNS/NIS name service 'scan' ...FAILED

INFO: [Mar 27, 2019 8:50:59 AM] Read: PRVG-1101 : SCAN name "scan" failed to resolve

INFO: [Mar 27, 2019 8:50:59 AM] PRVG-1101 : SCAN name "scan" failed to resolve

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read:

INFO: [Mar 27, 2019 8:50:59 AM] Read: CVU operation performed: stage -post crsinst

INFO: [Mar 27, 2019 8:50:59 AM] CVU operation performed: stage -post crsinst

INFO: [Mar 27, 2019 8:50:59 AM] Read: Date: Mar 27, 2019 8:48:24 AM

INFO: [Mar 27, 2019 8:50:59 AM] Date: Mar 27, 2019 8:48:24 AM

INFO: [Mar 27, 2019 8:50:59 AM] Read: CVU home: /u01/app/12.2.0/grid/

INFO: [Mar 27, 2019 8:50:59 AM] CVU home: /u01/app/12.2.0/grid/

INFO: [Mar 27, 2019 8:50:59 AM] Read: User: grid

INFO: [Mar 27, 2019 8:50:59 AM] User: grid

INFO: [Mar 27, 2019 8:50:59 AM] Completed Plugin named: Oracle Cluster Verification Utility

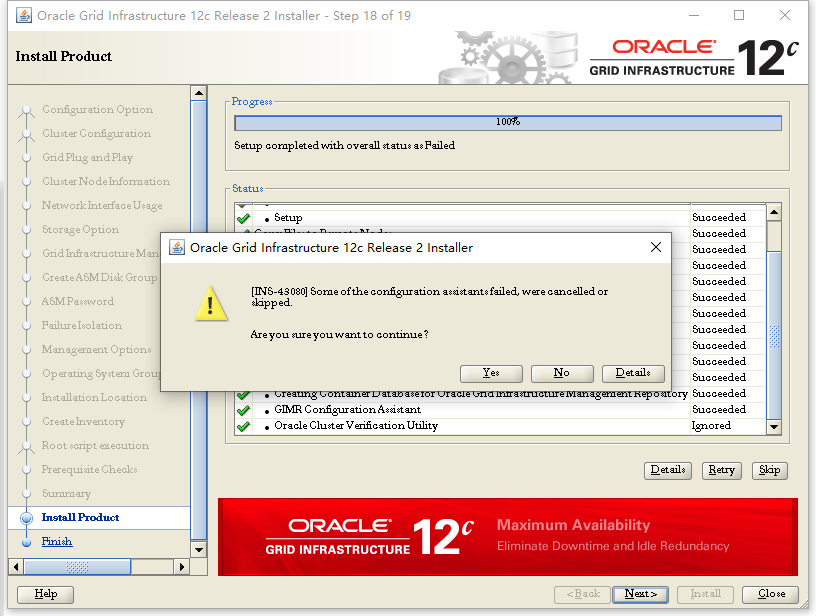

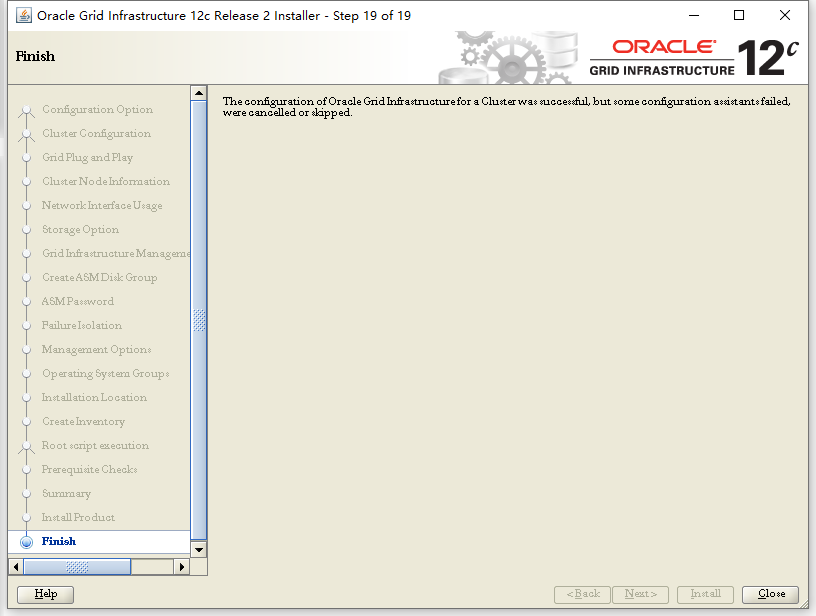

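The log shows that the SCAN name could not be resolved. No DNS server is configured, so it cannot be resolved through DNS; because name resolution here goes through the hosts file, this error can be ignored

Click OK, then click Skip

Click Yes

Log in to both nodes as the grid user and check the cluster status

Node 1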

[grid@rac1 grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.OCR.dg

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac1 169.254.171.182 10.1

0.10.1,STABLE

ora.asm

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac1 Open,STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

Node 2

[grid@rac2 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.OCR.dg

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac1 169.254.171.182 10.1

0.10.1,STABLE

ora.asm

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac1 Open,STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

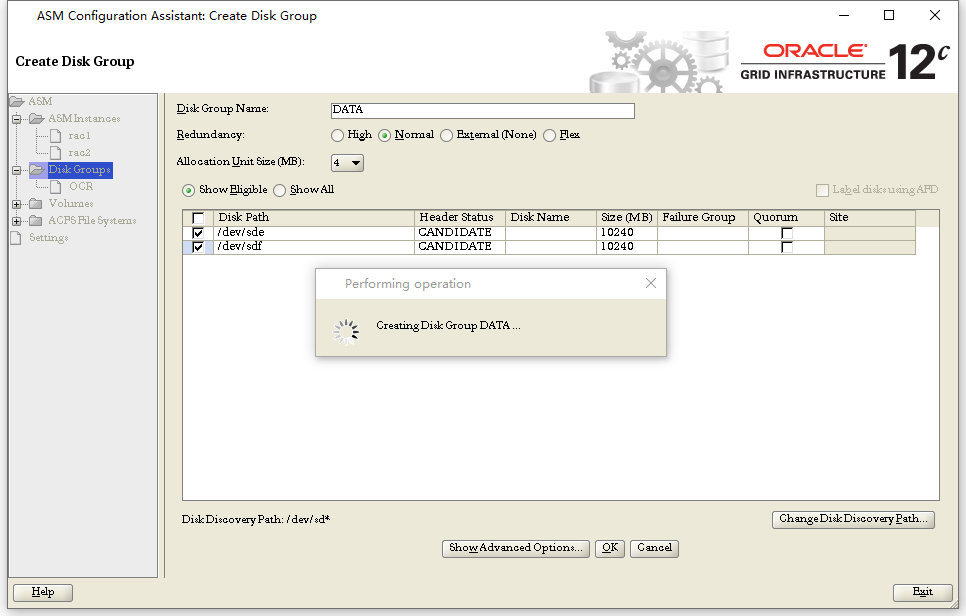

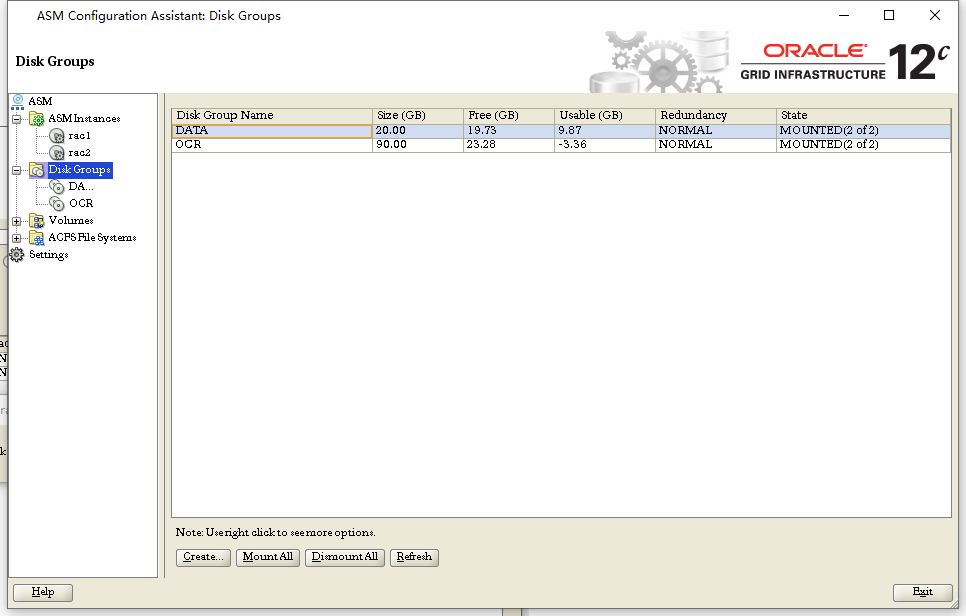

Check and configure ASM

Run as the grid user

[grid@rac1 grid]$ asmca

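Check both nodes. Pay attention to the disk group mount state and to whether each node is alive; the information shown on the two nodes must match.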
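The same check can be sketched on the command line instead of in the asmca GUI. This is a minimal sketch, assuming the grid user's environment puts `asmcmd` on the PATH; `asmcmd lsdg` lists the mounted disk groups with the state in the first column and the name (with a trailing `/`) in the last. The helper name `missing_diskgroups` is hypothetical, and the disk group names passed to it (OCR and DATA here) should be replaced with your own.

```shell
#!/bin/sh
# Hypothetical helper: read `asmcmd lsdg` output on stdin and print each
# expected disk group (given as arguments) that is not listed as MOUNTED.
missing_diskgroups() {
    lsdg_output=$(cat)
    for dg in "$@"; do
        printf '%s\n' "$lsdg_output" |
            awk -v dg="$dg" '
                # First field is the state, last field is the name plus "/".
                $1 == "MOUNTED" && $NF == (dg "/") { found = 1 }
                END { exit (found ? 0 : 1) }
            ' || printf '%s\n' "$dg"
    done
}

# Run only where asmcmd is available, for example as grid on rac1 and rac2.
if command -v asmcmd >/dev/null 2>&1; then
    asmcmd lsdg | missing_diskgroups OCR DATA
fi
```

Running it on both nodes and getting no output on either suggests that the listed disk groups are mounted on both.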

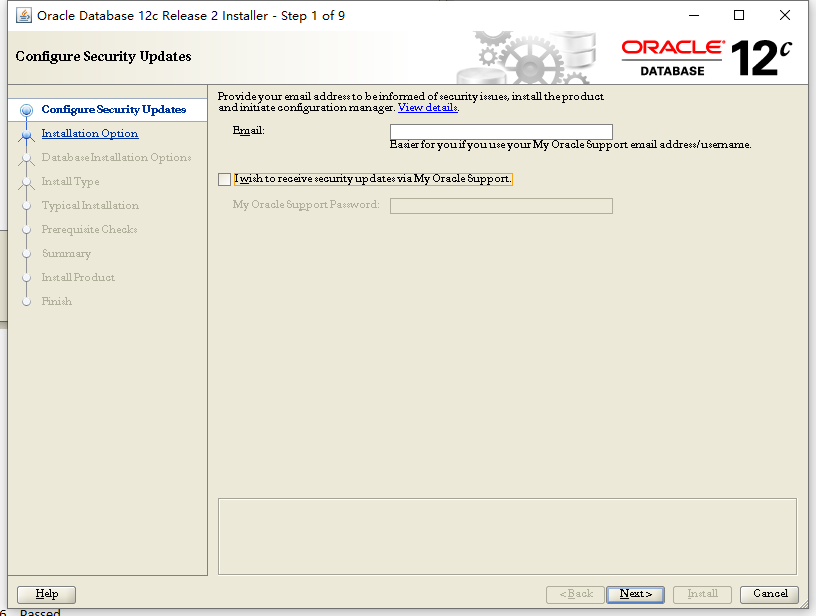

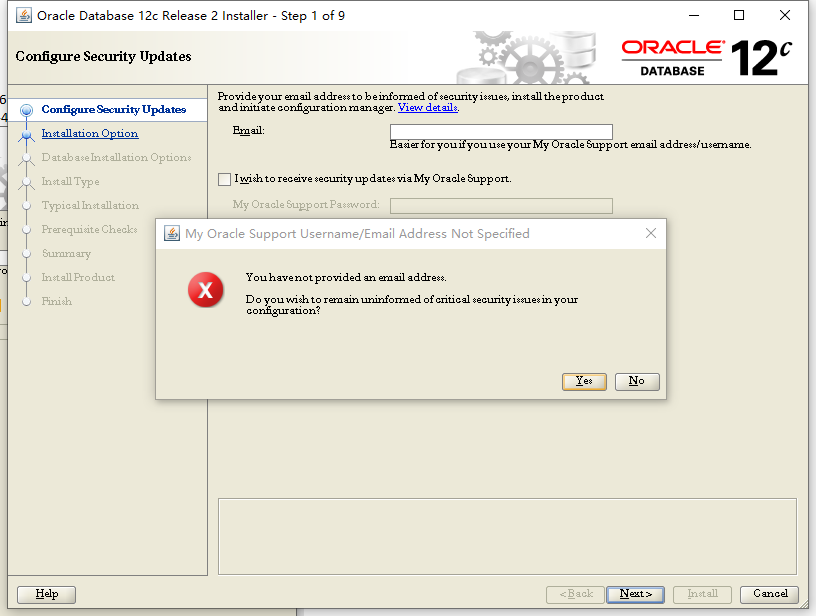

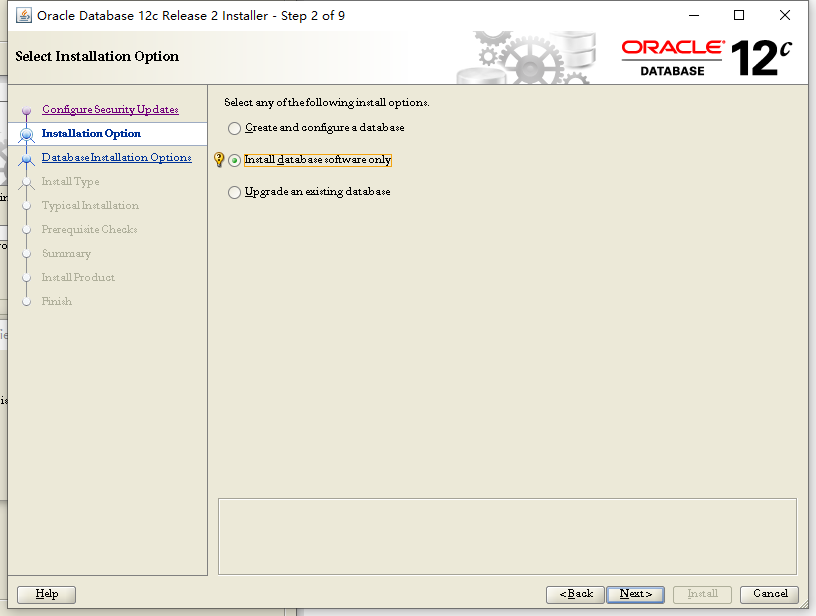

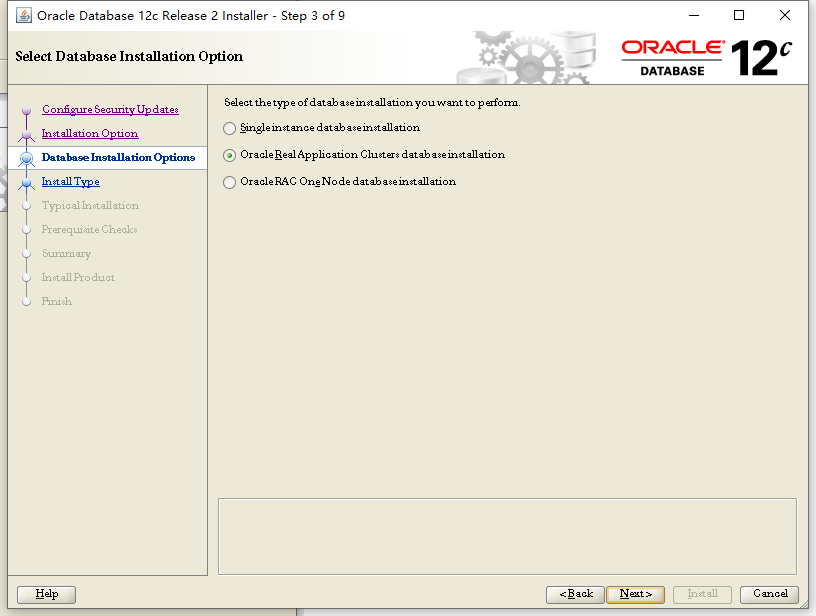

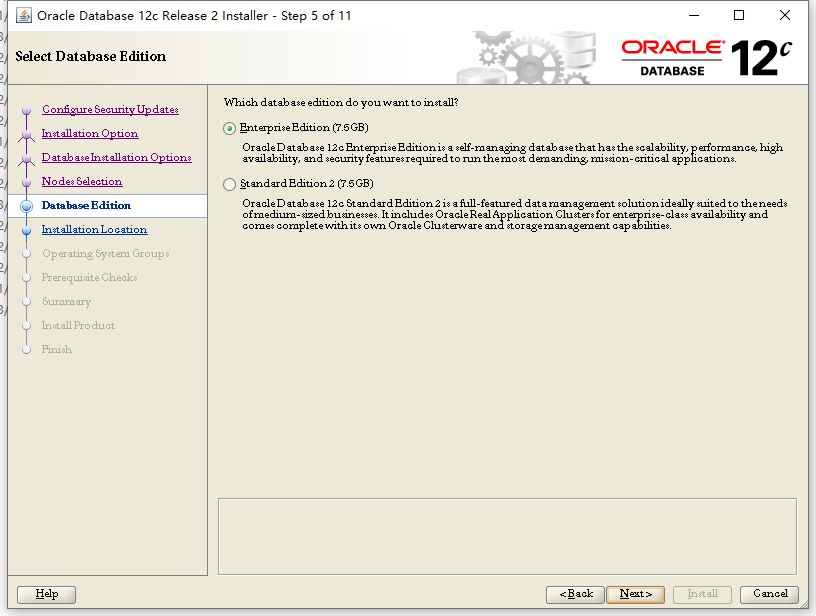

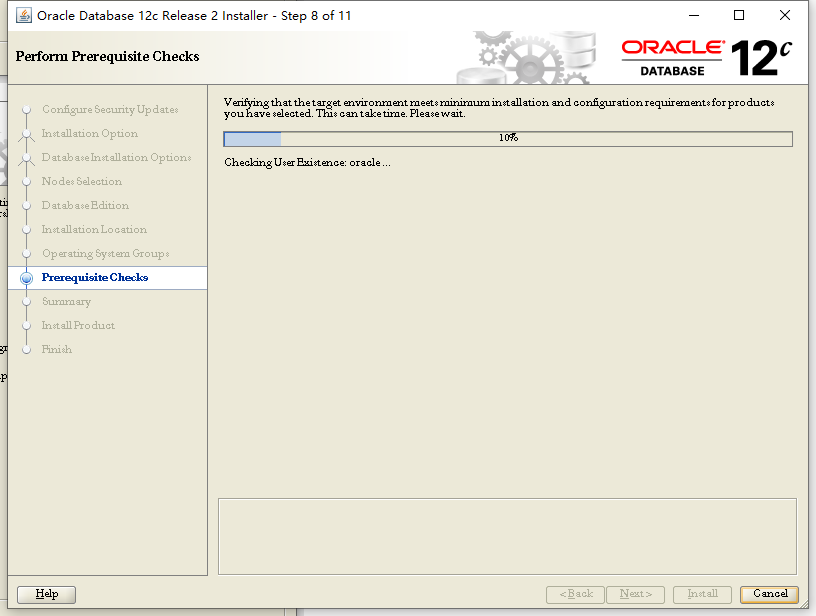

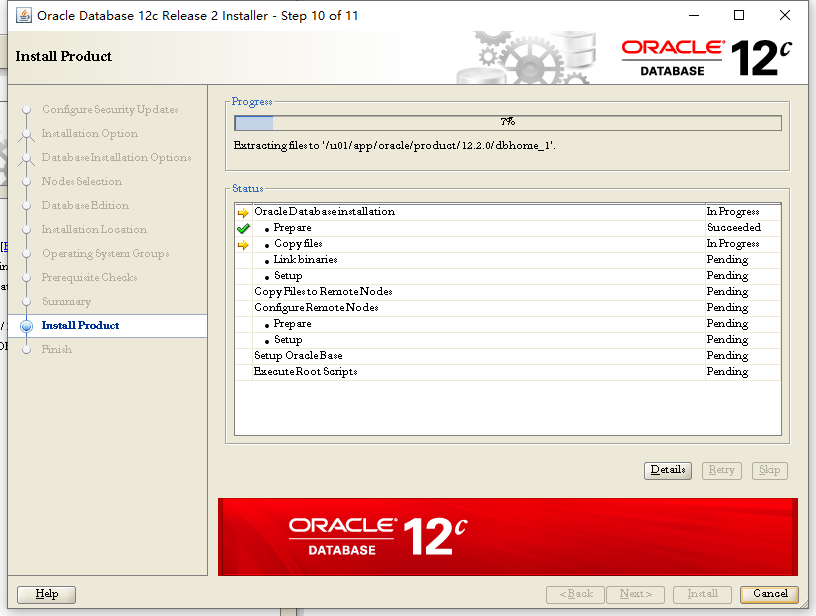

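Next, install the database software

Use xftp to upload the database software package to the /u01/app/oracle directory (run as root)

Log in as the oracle user and unzip the database software package (run as oracle)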

unzip linuxx64_12201_database.zip

Change into the database directory and run runInstaller to start the installation

[oracle@rac1 oracle]$ cd database/

[oracle@rac1 database]$ ./runInstaller

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 500 MB. Actual 24214 MB Passed

Checking swap space: must be greater than 150 MB. Actual 5115 MB Passed

Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2019-03-27_09-22-45AM. Please wait ...[o

Yes

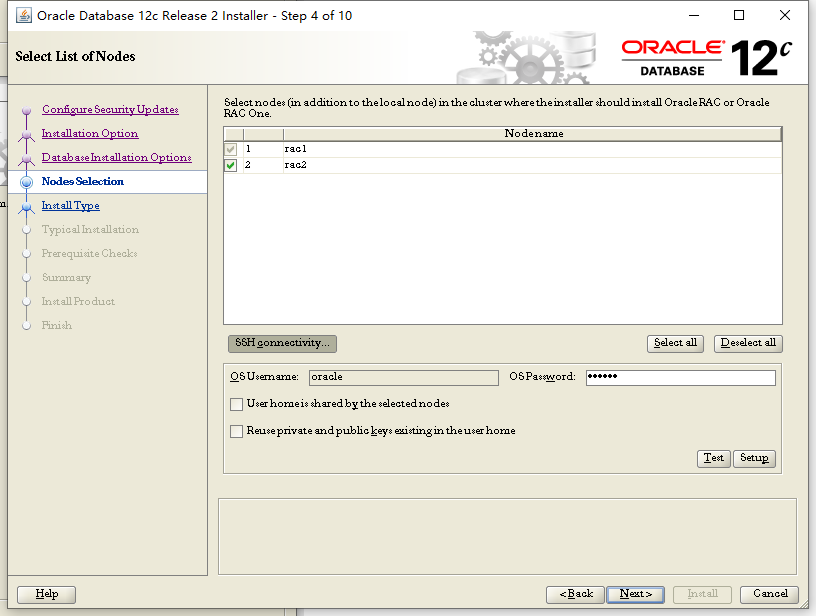

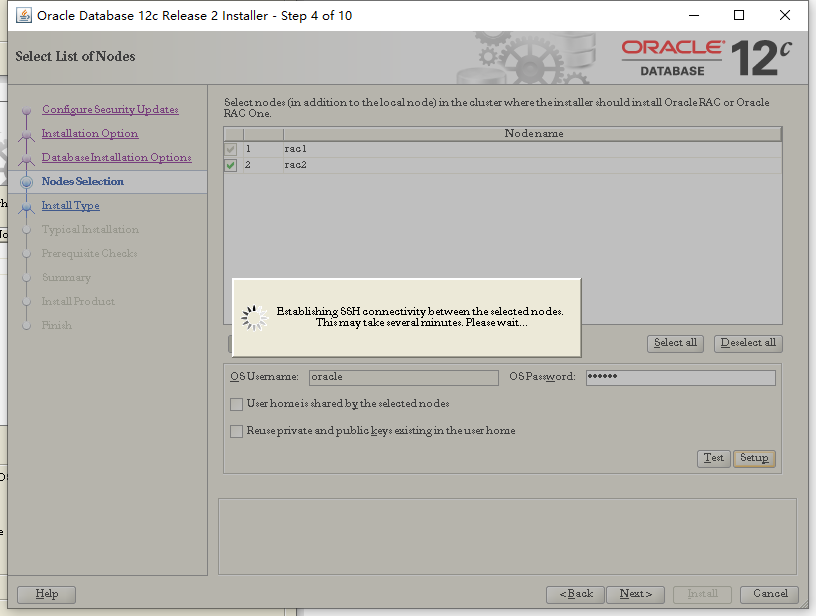

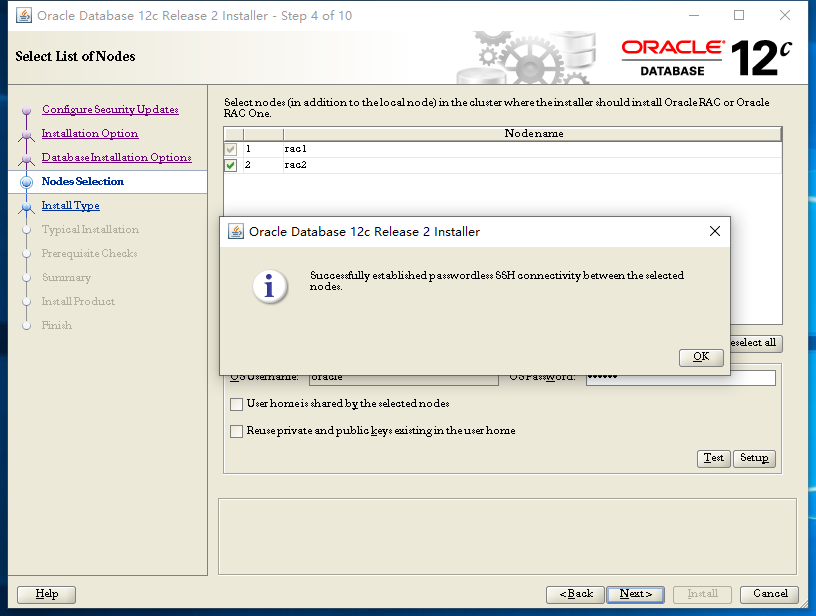

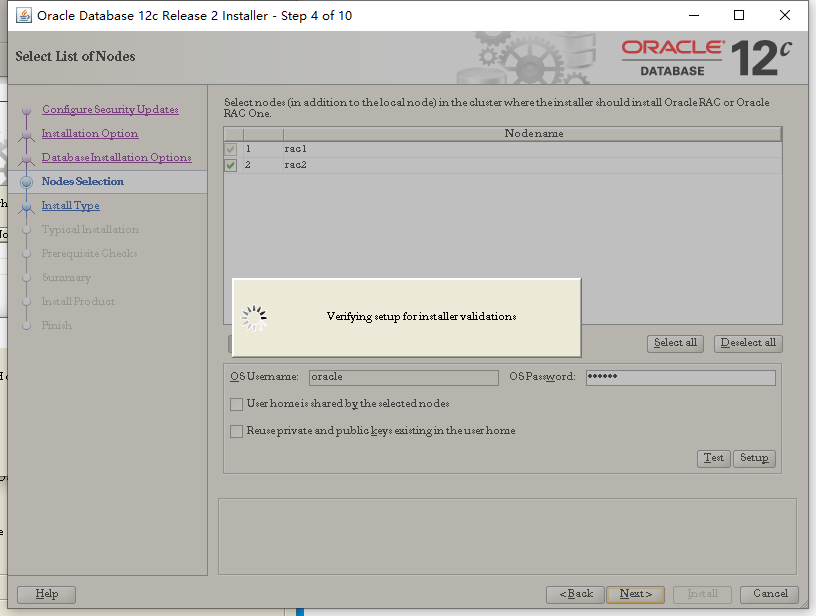

Click SSH connectivity, enter the oracle user's password in the OS Password field, then click Setup

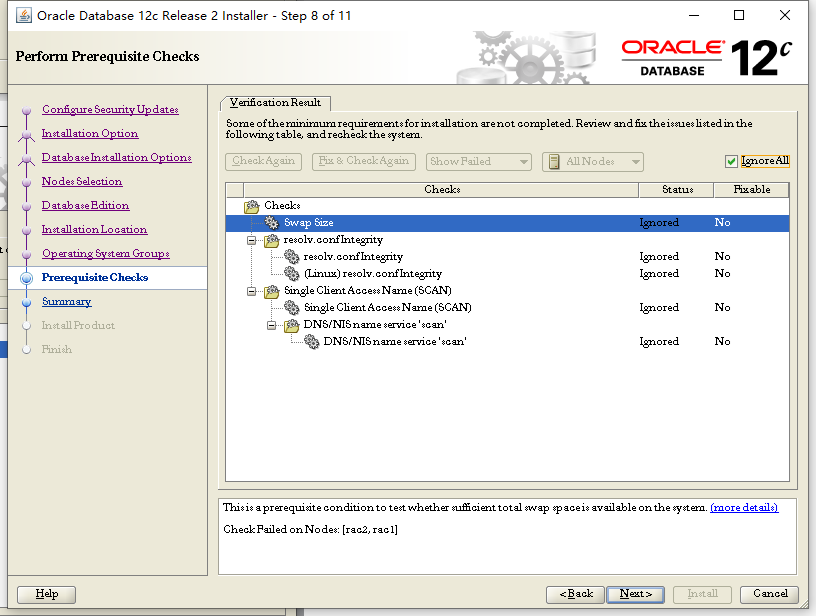

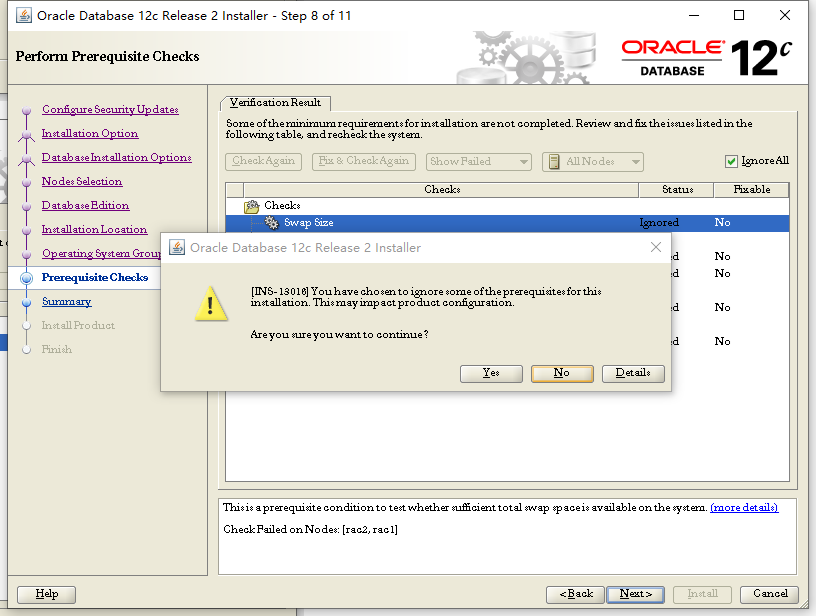

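Apart from the swap space warning, all the other errors are caused by the absence of a DNS server. The cluster resolves the SCAN name through the hosts file, so they can all be ignored: check Ignore All, then click Next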

Yes
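Before ignoring the DNS errors, it may be worth confirming that the hosts file on each node really maps the SCAN name. A minimal sketch, assuming the SCAN entry is named `scan` as in the hosts file shown earlier; the helper name `hosts_lookup` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the IP address that a hosts-format file
# (first argument) maps to a host name (second argument).
hosts_lookup() {
    awk -v name="$2" '
        { sub(/#.*/, "") }    # drop comments before matching
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}

# Prints the SCAN address if /etc/hosts has an entry for it, nothing otherwise.
if [ -r /etc/hosts ]; then
    hosts_lookup /etc/hosts scan
fi
```

On the nodes in this walkthrough the expected address is 172.16.103.8; an empty result means the entry is missing and the installer errors should not be ignored.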

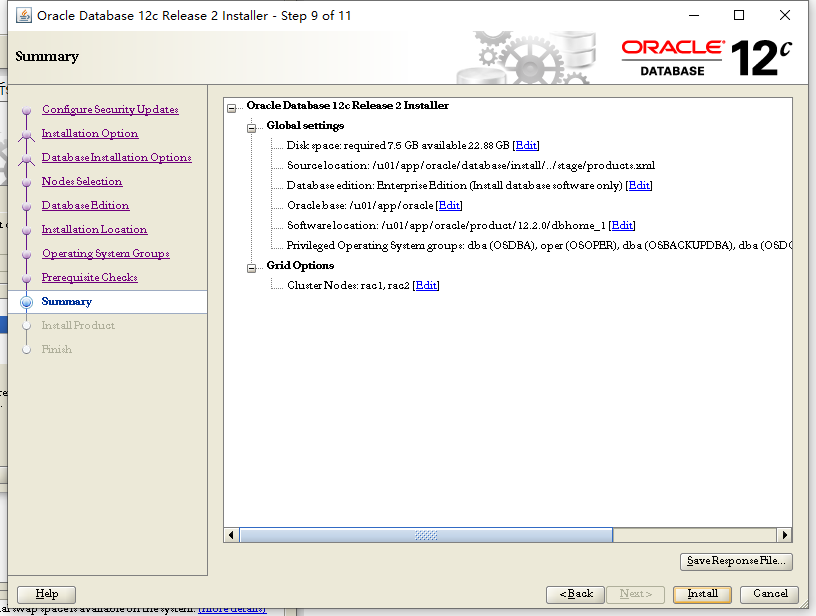

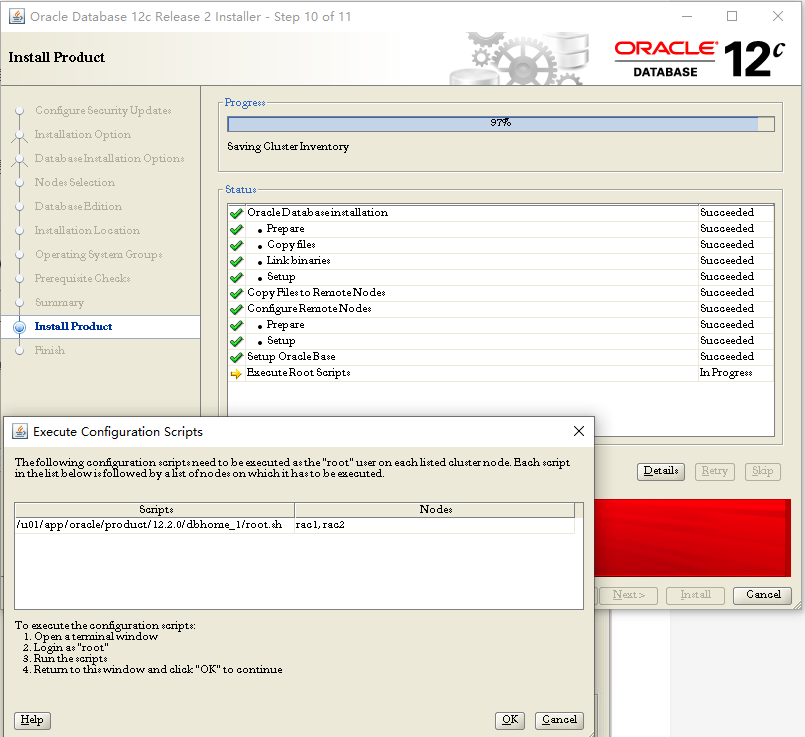

Next, run the scripts

Log in as root and run

Node 1

[root@rac1 /]# cd /u01/app/oracle/product/12.2.0/dbhome_1/

[root@rac1 dbhome_1]# ./root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/12.2.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Node 2

[root@rac2 grid]# cd /u01/app/oracle/product/12.2.0/dbhome_1/

[root@rac2 dbhome_1]# ./root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/12.2.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

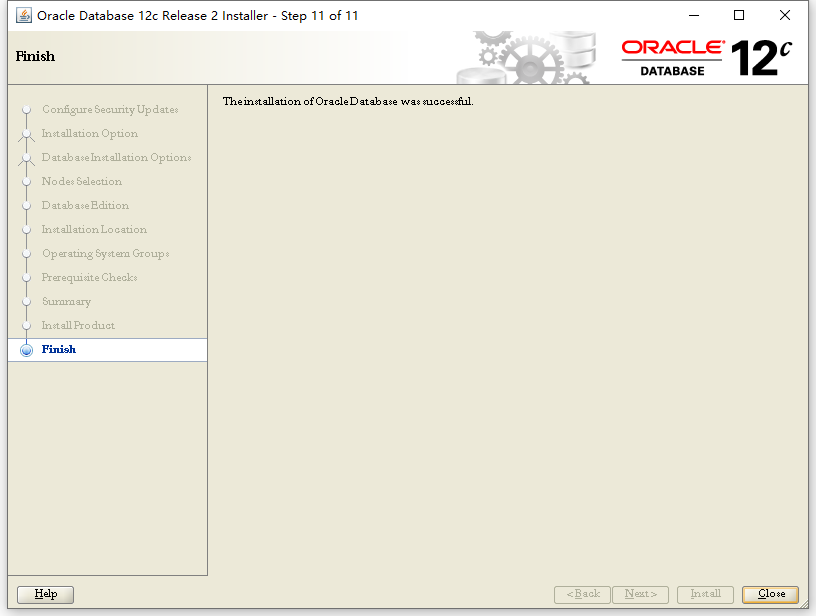

When the scripts have finished, click OK

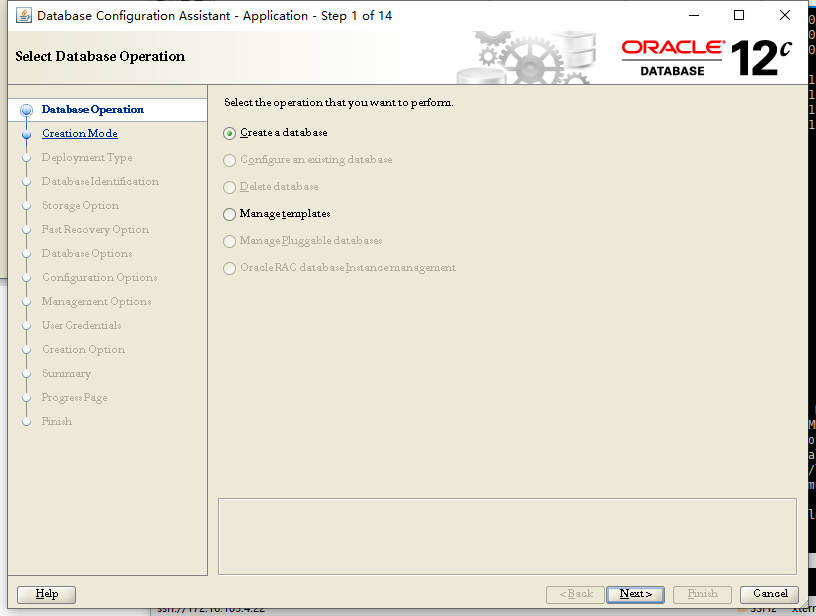

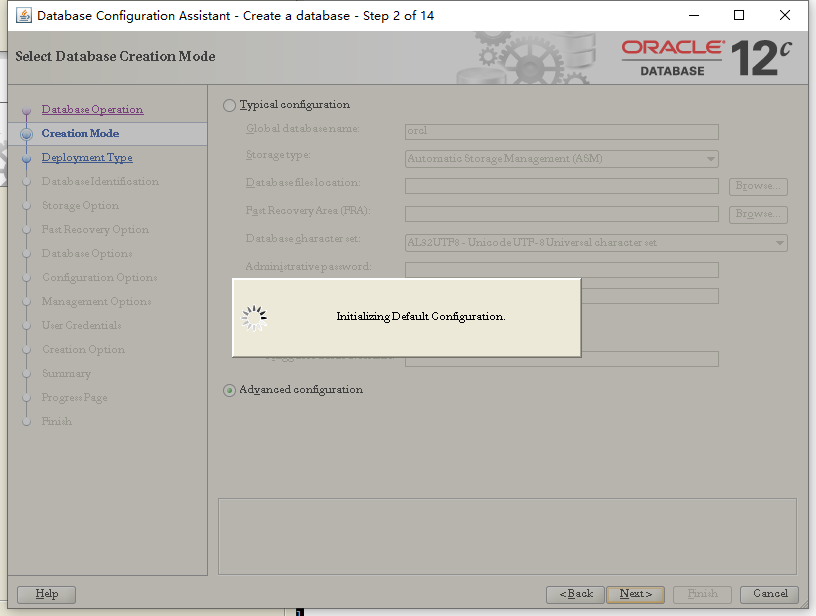

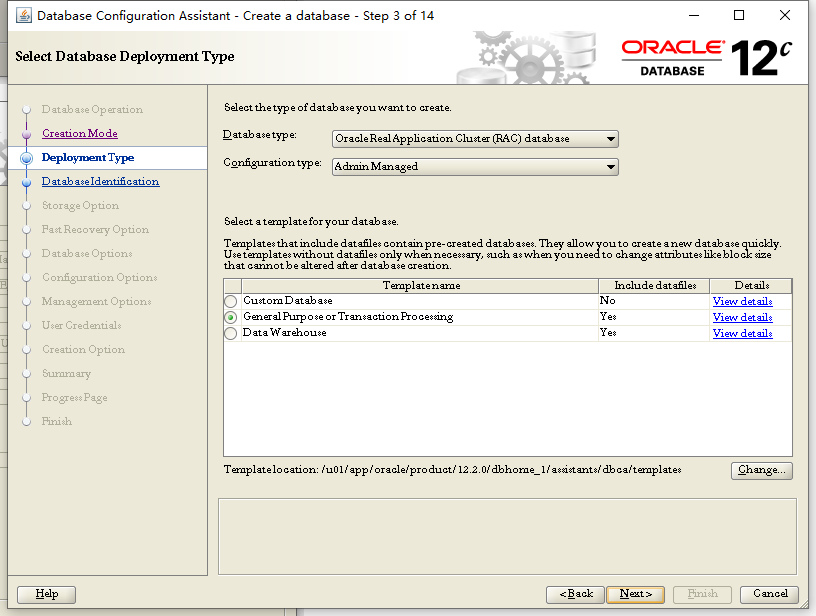

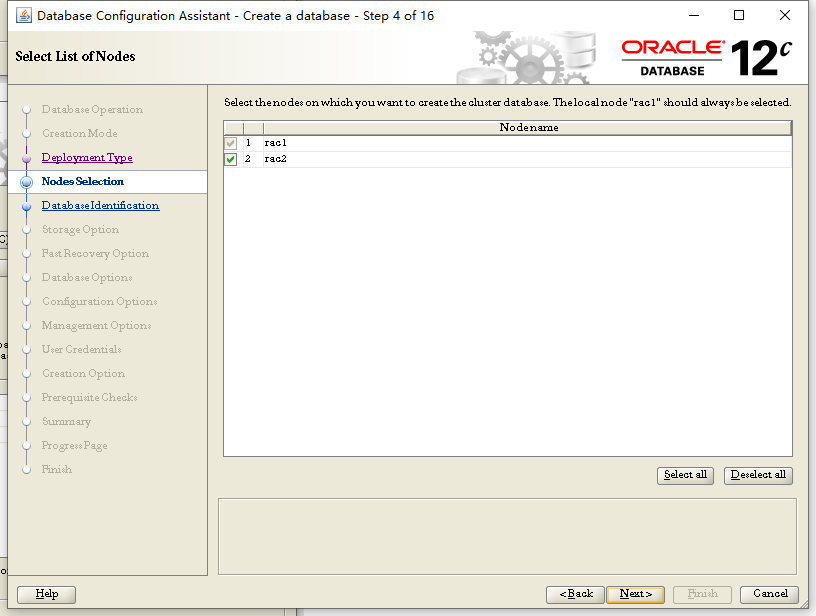

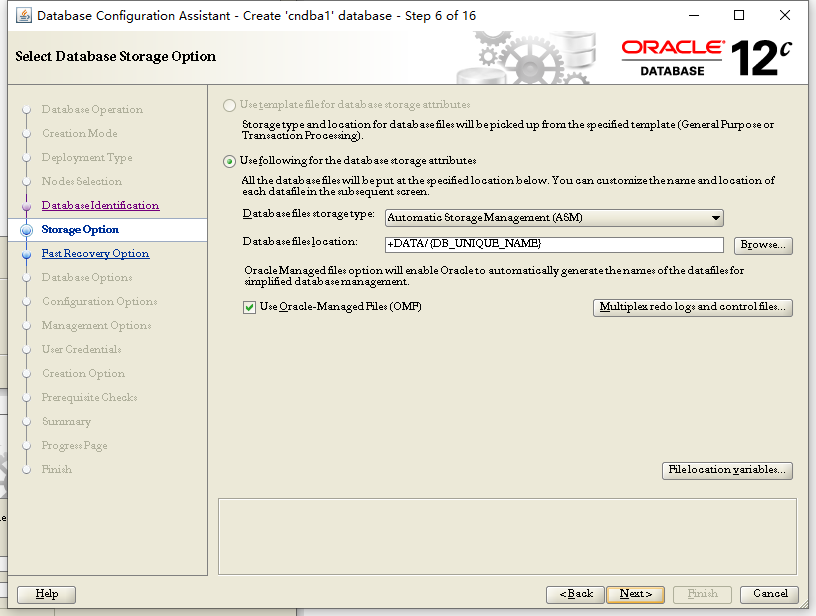

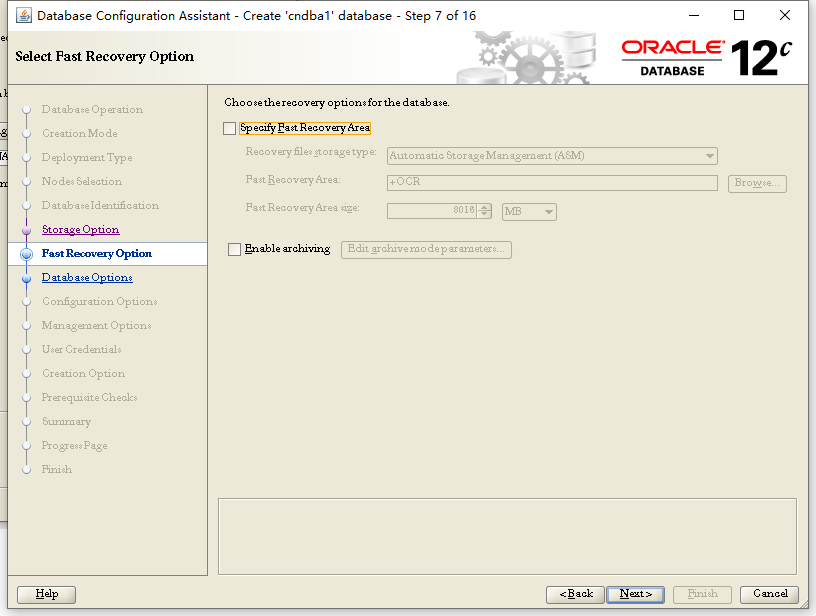

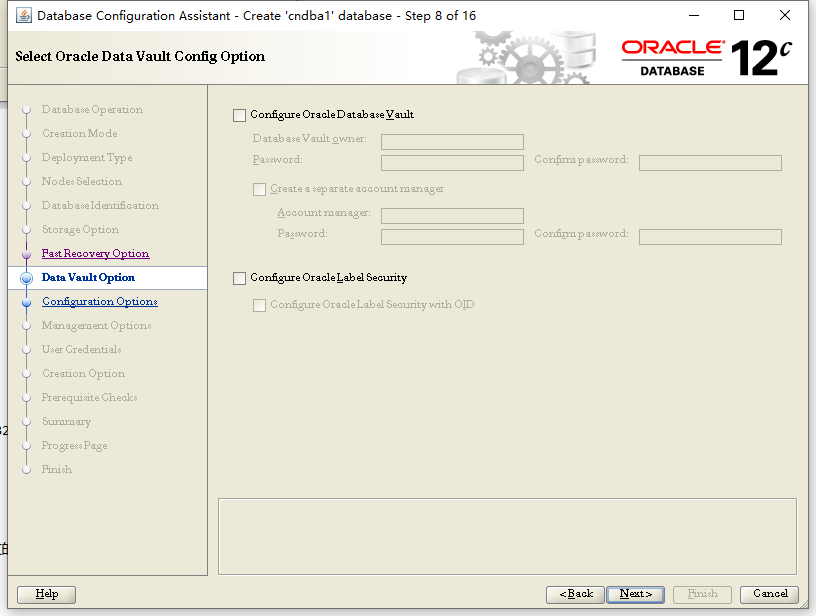

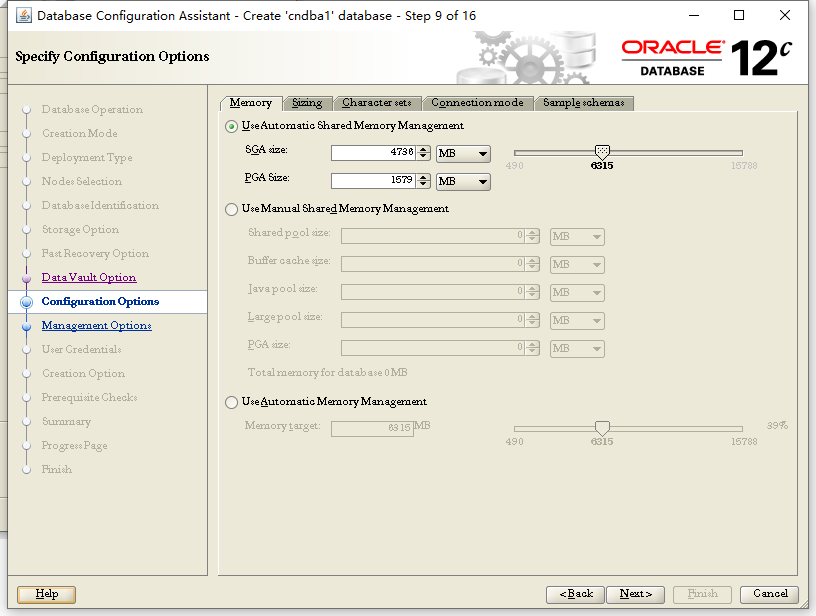

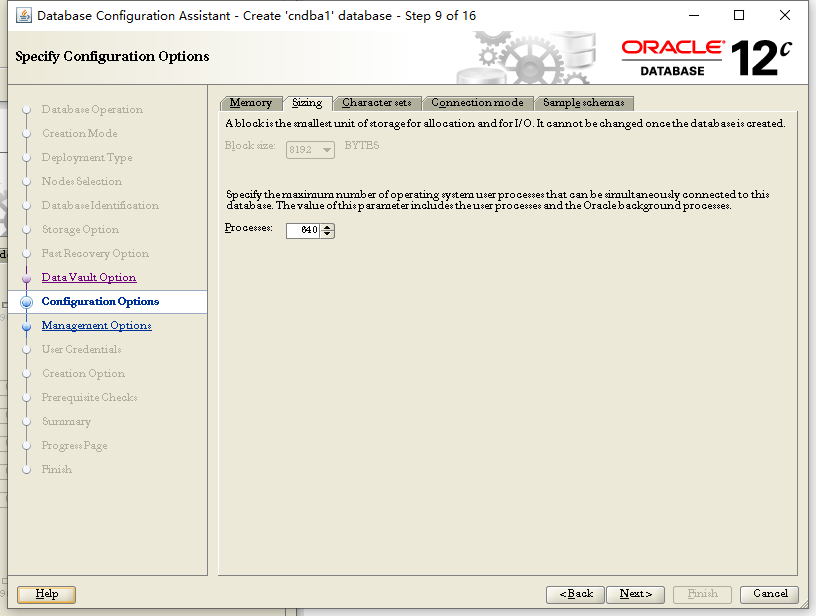

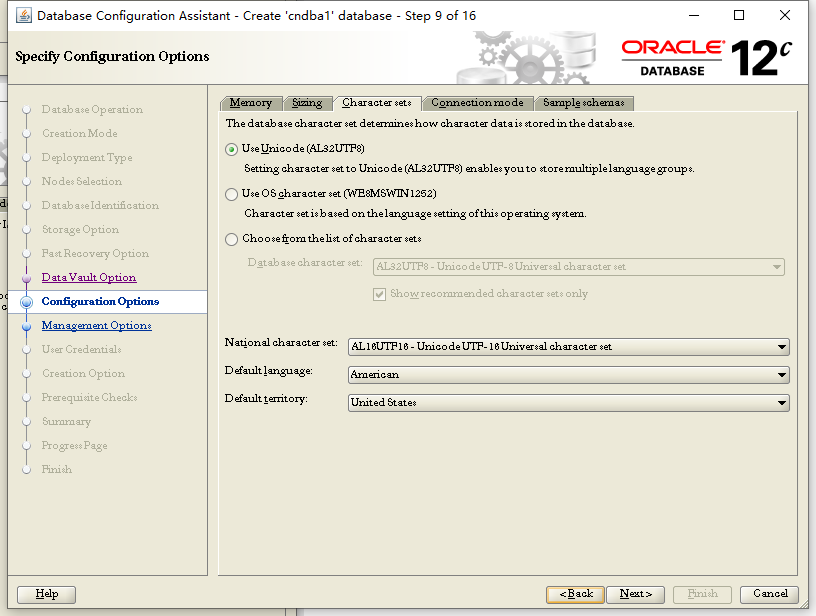

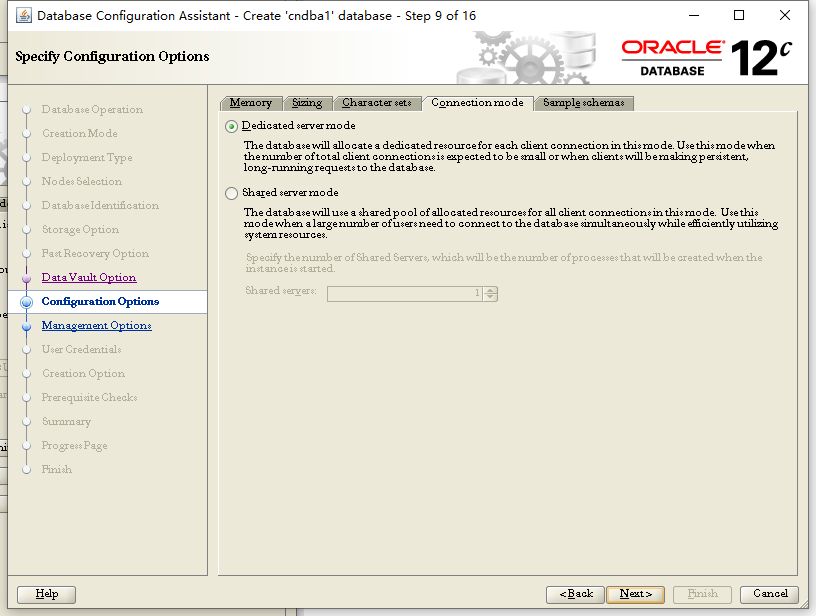

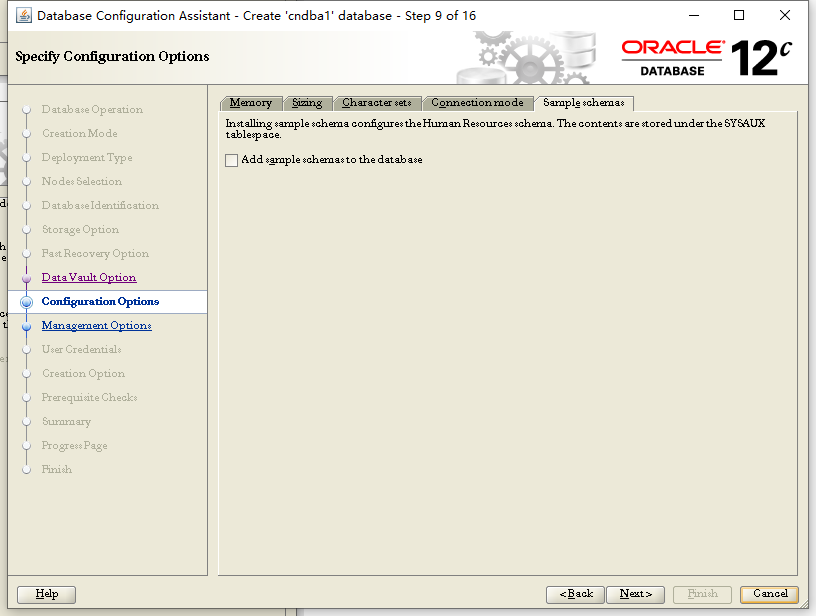

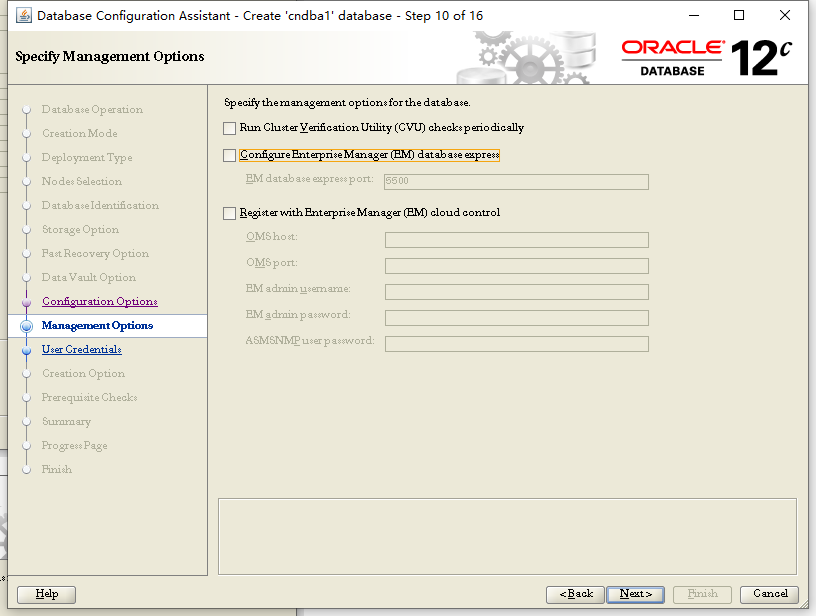

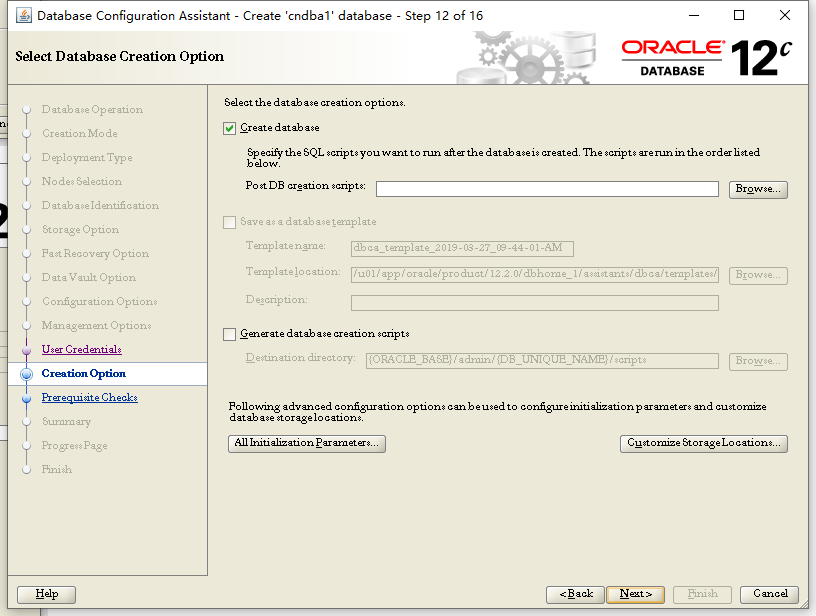

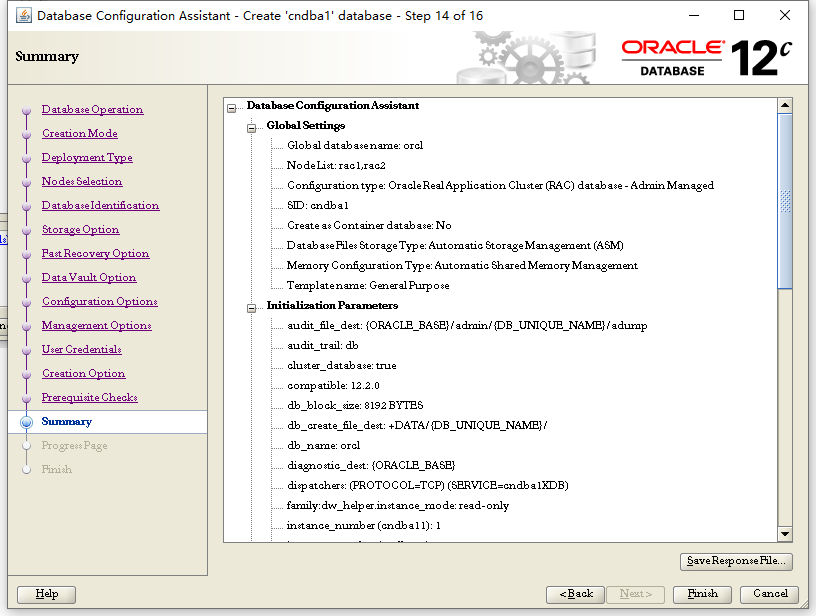

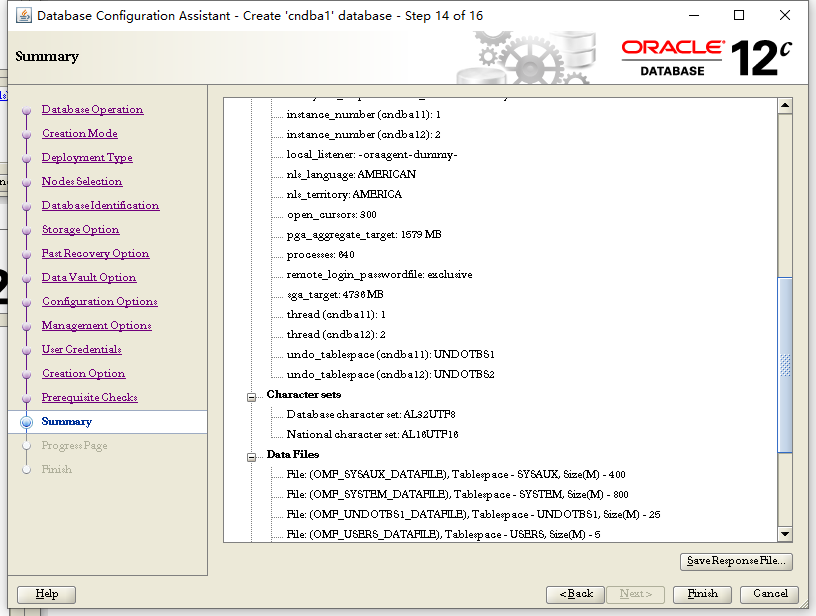

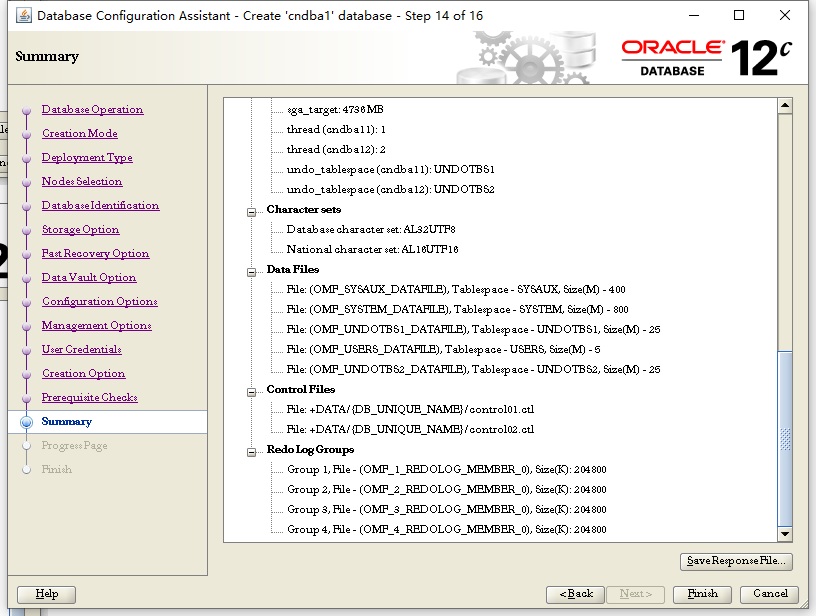

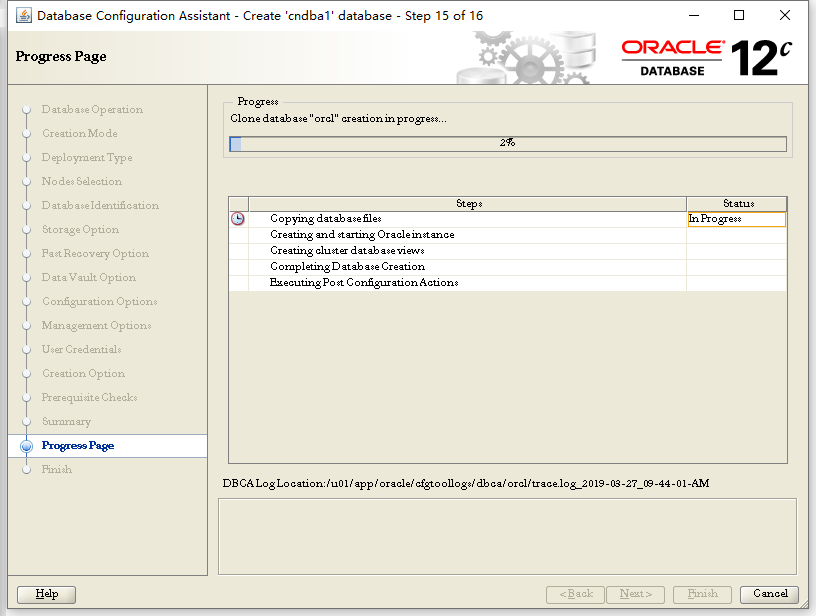

Next, create the database. Run dbca as the oracle user on either node

[oracle@rac1 database]$ dbca

Leave all sub-options at their defaults

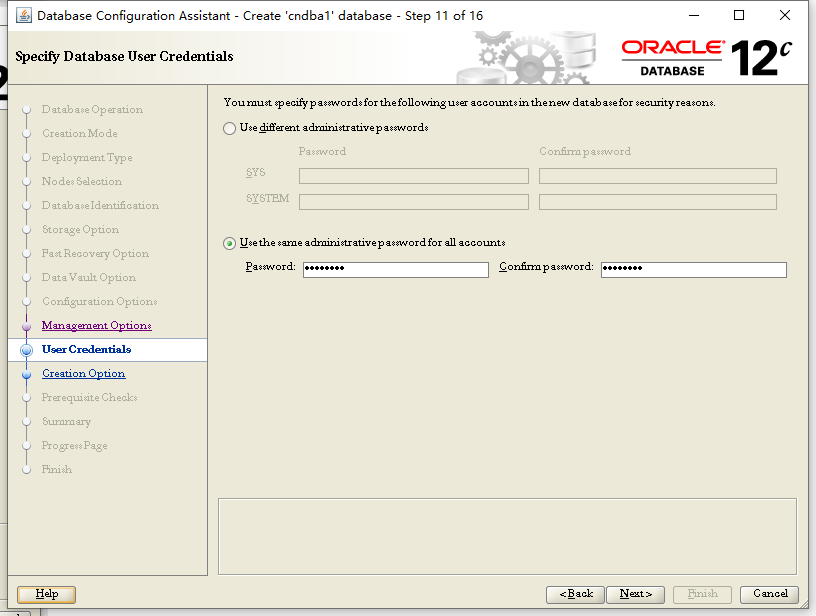

Set the password to Admin123

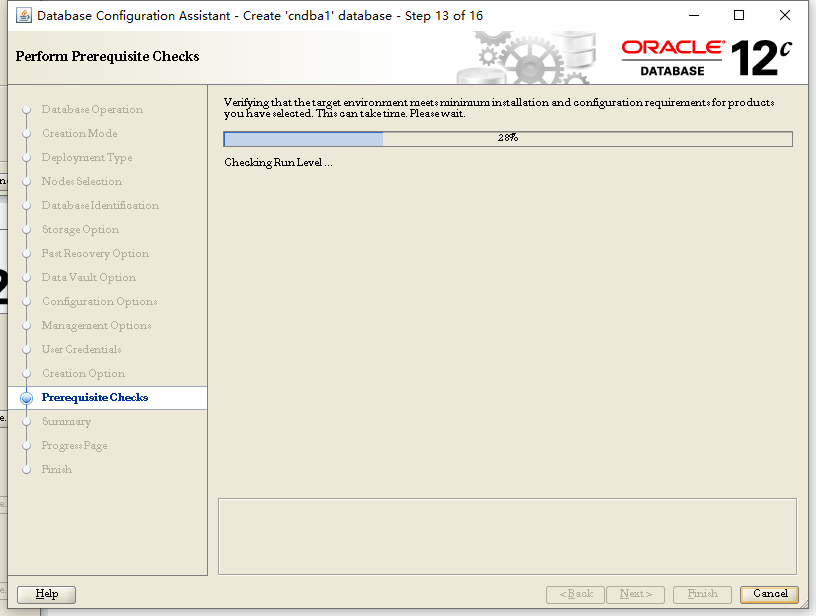

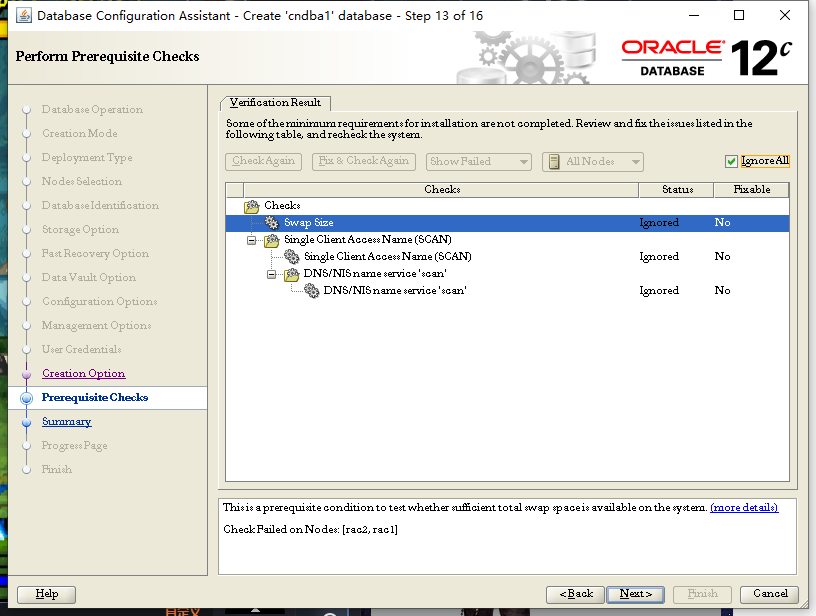

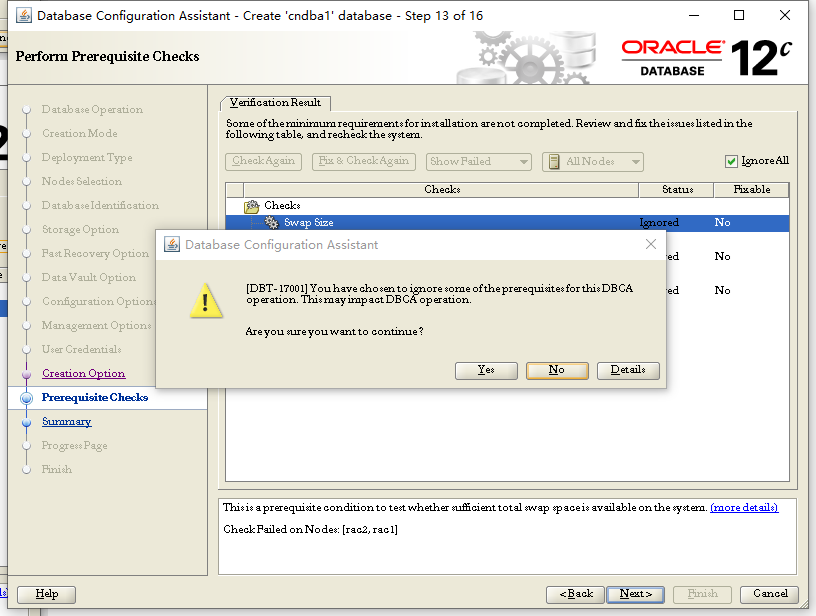

These are again the error that DNS cannot resolve the SCAN name and the swap space warning. Check Ignore All to ignore them, then click Next

Yes

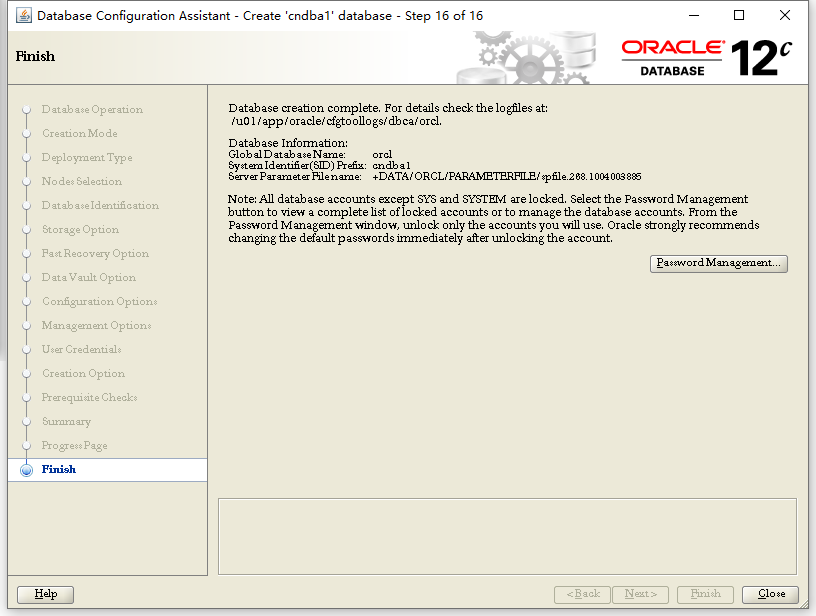

Here you can click Password Management to manage users and passwords, or to redefine the passwords for sys and system.

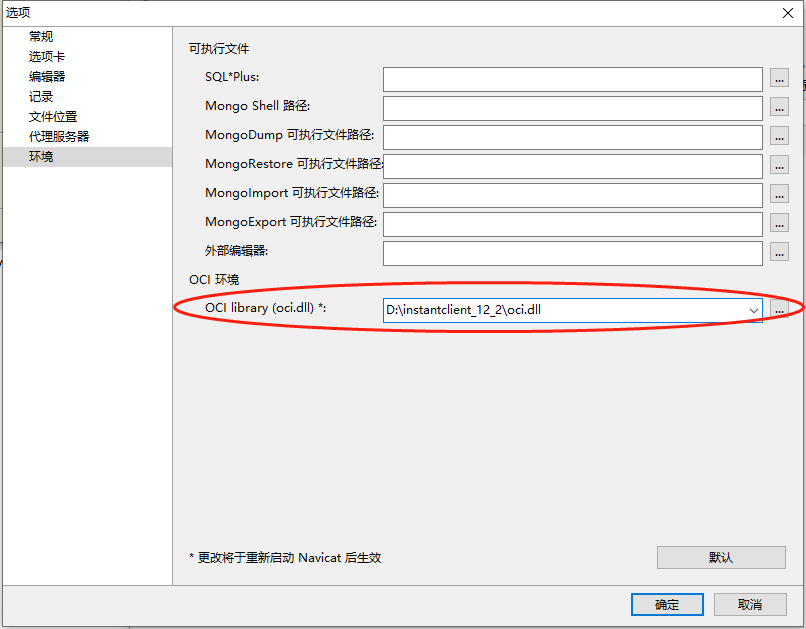

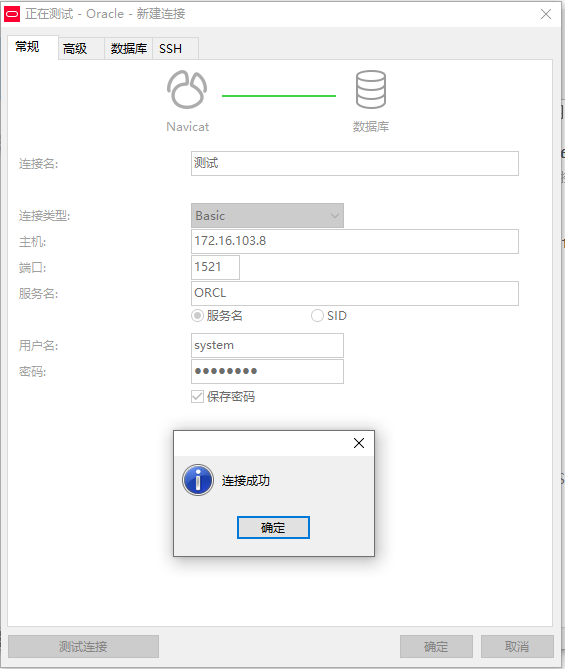

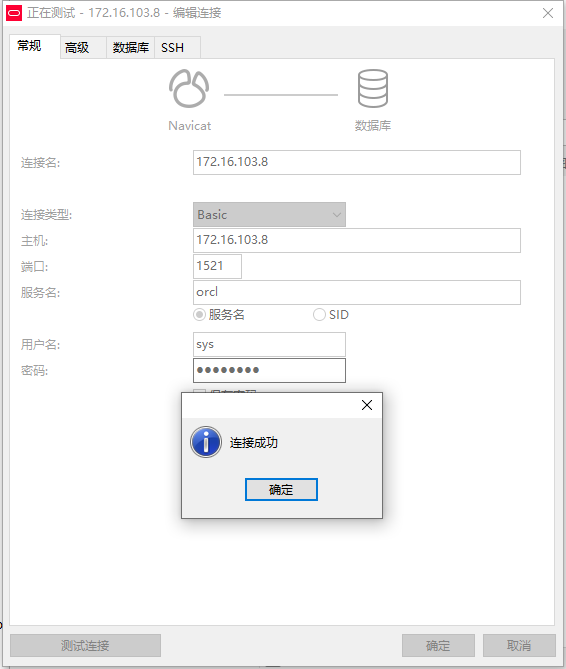

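Next, connect to the Oracle database with Navicat

Download the latest version of Navicat, then configure it

You need to download the 12.2 Windows client package from the official Oracle website and install it, or simply extract the oci.dll file from the package. Then point this Navicat setting at Oracle's oci.dll file.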

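The connection name is arbitrary. For the host, enter the cluster's SCAN IP address. The service name is orcl, the user name is system, and the password is Admin123, as set earlier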
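The same connection parameters can also be tried from any machine that has sqlplus installed, using an Easy Connect string. A minimal sketch, assuming the SCAN listener uses the default port 1521 (the port is not stated in this walkthrough); the helper name `ezconnect` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build an Easy Connect string of the form
# user@//host:port/service_name.
ezconnect() {
    printf '%s@//%s:%s/%s\n' "$1" "$2" "$3" "$4"
}

# 172.16.103.8 is the SCAN IP from the hosts file; 1521 is an assumed port.
target=$(ezconnect system 172.16.103.8 1521 orcl)
echo "$target"

# To connect, pass the string to sqlplus, which then prompts for the password:
#   sqlplus "$target"
```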

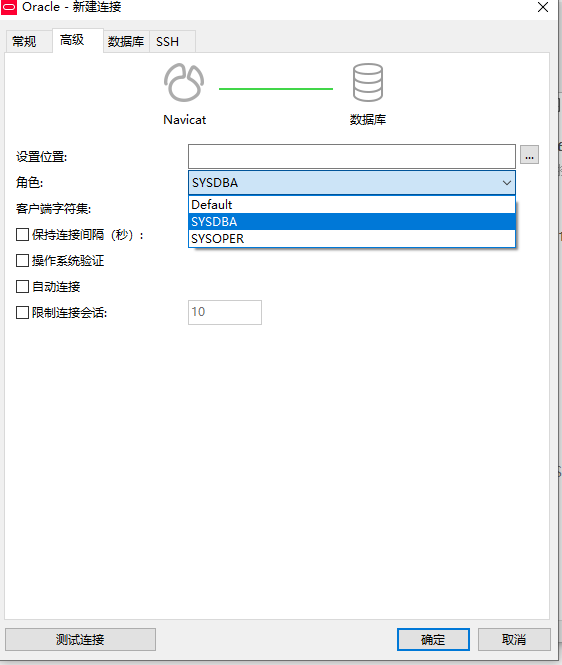

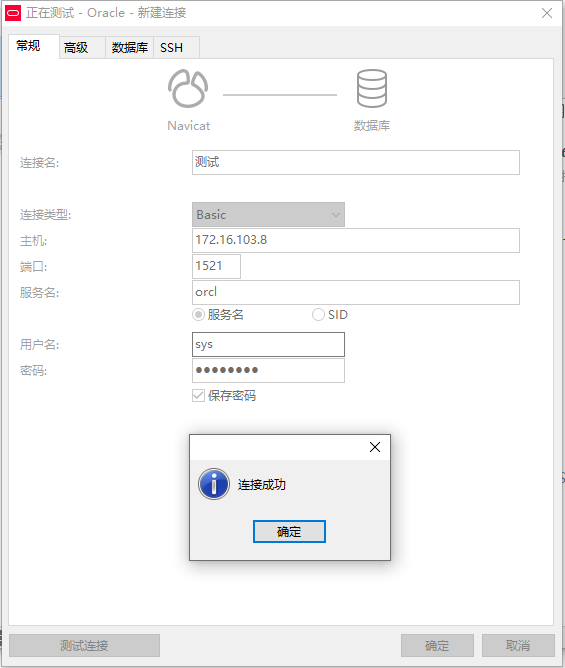

To log in as the sys user, change the setting shown below

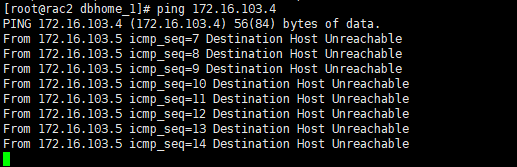

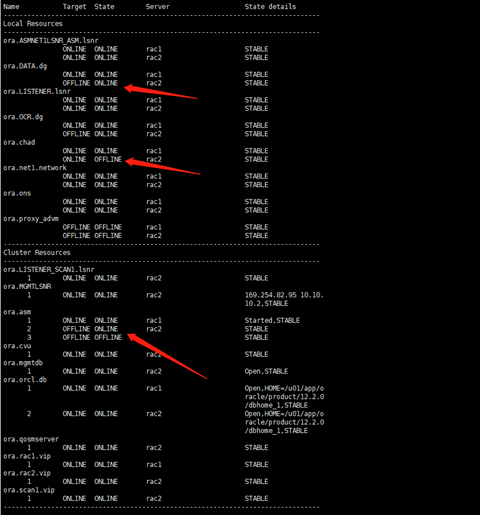

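Test whether a single point of failure affects the database by shutting down one node (node 1 here).

From node 2, ping node 1's public IP: it no longer responds

Connect to the database again with Navicat to test

The database is still usable.

Now check the cluster status on node 2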

[grid@rac2 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

ONLINE ONLINE rac2 STABLE

ora.DATA.dg

ONLINE ONLINE rac2 STABLE

ora.LISTENER.lsnr

ONLINE ONLINE rac2 STABLE

ora.OCR.dg

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE OFFLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac2 169.254.82.95 10.10.10.2,STABLE

ora.asm

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac2 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac2 Open,STABLE

ora.orcl.db

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE rac2 Open,HOME=/u01/app/oracle/product/12.2.0/dbhome_1,STABLE

ora.qosmserver

1 ONLINE ONLINE rac2 STABLE

ora.rac1.vip

1 ONLINE INTERMEDIATE rac2 FAILED OVER,STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2 STABLE

--------------------------------------------------------------------------------

In ora.orcl.db, the instance on node 1 is now OFFLINE. At this point, power node 1 back on

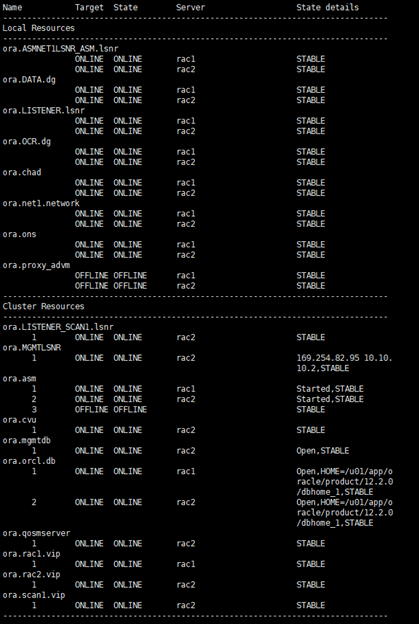

Check the cluster status on node 2 again

[grid@rac2 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.DATA.dg

OFFLINE OFFLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.LISTENER.lsnr

ONLINE OFFLINE rac1 STARTING

ONLINE ONLINE rac2 STABLE

ora.OCR.dg

OFFLINE OFFLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE OFFLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE OFFLINE rac1 STARTING

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac2 169.254.82.95 10.10.10.2,STABLE

ora.asm

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac2 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac2 Open,STABLE

ora.orcl.db

1 ONLINE OFFLINE Instance Shutdown,STABLE

2 ONLINE ONLINE rac2 Open,HOME=/u01/app/oracle/product/12.2.0/dbhome_1,STABLE

ora.qosmserver

1 ONLINE ONLINE rac2 STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2 STABLE

--------------------------------------------------------------------------------

Some of node 1's resources are now in the STARTING state. Wait a little longer, because startup is slow

[grid@rac2 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.DATA.dg

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.OCR.dg

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE OFFLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac2 169.254.82.95 10.10.10.2,STABLE

ora.asm

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac2 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac2 Open,STABLE

ora.orcl.db

1 ONLINE ONLINE rac1 Open,HOME=/u01/app/oracle/product/12.2.0/dbhome_1,STABLE

2 ONLINE ONLINE rac2 Open,HOME=/u01/app/oracle/product/12.2.0/dbhome_1,STABLE

ora.qosmserver

1 ONLINE ONLINE rac2 STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2 STABLE

--------------------------------------------------------------------------------

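Everything has started again, but one item did not recover, for reasons unknown: under Local Resources, ora.chad shows ONLINE OFFLINE rac2 STABLE, so its current state on rac2 is OFFLINE. The database can still be queried normally

Checking the cluster status the next day

Several anomalies were found. After running ./crsctl stop cluster -all and ./crsctl start cluster -all as root, everything returned to normal. (These commands stop and then start the cluster on all nodes.)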
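Spotting resources like ora.chad in a long status listing is easier with a small filter. A minimal sketch, assuming the tabular layout of `crsctl stat res -t` shown in the listings here (a resource name line starting with `ora.`, followed by lines of Target, State, Server and details, with an optional leading instance number); the helper name `offline_resources` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `crsctl stat res -t` output on stdin and print
# every entry whose Target is ONLINE but whose State is something else.
offline_resources() {
    awk '
        /^ora\./ { name = $1; next }    # remember the current resource name
        {
            i = ($1 ~ /^[0-9]+$/) ? 2 : 1    # skip the instance number, if any
            if ($i == "ONLINE" && $(i + 1) != "" && $(i + 1) != "ONLINE") {
                line = $0
                sub(/^[ \t]+/, "", line)
                print name ": " line
            }
        }
    '
}

# Run only where crsctl is available (for example as grid on a cluster node).
if command -v crsctl >/dev/null 2>&1; then
    crsctl stat res -t | offline_resources
fi
```

Entries whose Target is OFFLINE, such as ora.proxy_advm and the third ora.asm instance, are skipped on purpose, since they are not expected to run. An entry in the INTERMEDIATE state, such as a failed-over VIP, is reported.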

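About errors:

Error caused by insufficient physical memory

An error is raised if a node has less than 6 GB of physical memory

After the installation completed, running the root.sh script on node 1 reported the following error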

Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-170325AM010920.log for details.

2017/03/25 01:10:23 CLSRSC-184: Configuration of ASM failed

2017/03/25 01:10:27 CLSRSC-258: Failed to configure and start ASM

Died at /u01/app/12.2.0/grid/crs/install/crsinstall.pm line 2091.

The log file shows:

ORA-00845: MEMORY_TARGET not supported on this system

Searching for this error led to the following fix: increase the size of /dev/shm. The rule is that shm must be larger than MEMORY_TARGET.

mount -o remount,size=8G /dev/shm

vi /etc/fstab

tmpfs /dev/shm tmpfs defaults,size=8G 0 0

After saving and exiting, run df -h to check the size of shm and confirm that the change took effect.
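The comparison against MEMORY_TARGET can also be scripted. A minimal sketch, assuming GNU df (as shipped with Oracle Linux 7); the helper name `shm_large_enough` is hypothetical, and the 4096 MB value is only a placeholder for the MEMORY_TARGET of your own instance.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the /dev/shm size in MB (first argument)
# is strictly larger than MEMORY_TARGET in MB (second argument).
shm_large_enough() {
    [ "$1" -gt "$2" ]
}

# Size of /dev/shm in MB; empty if df cannot report it.
shm_mb=$(df -BM --output=size /dev/shm 2>/dev/null |
    awk 'NR == 2 { sub(/M$/, "", $1); print $1 }')

# Placeholder value: substitute the MEMORY_TARGET of your instance.
memory_target_mb=4096

if [ -n "$shm_mb" ] && shm_large_enough "$shm_mb" "$memory_target_mb"; then
    echo "/dev/shm is ${shm_mb} MB, larger than MEMORY_TARGET (${memory_target_mb} MB)"
else
    echo "/dev/shm is too small or could not be measured"
fi
```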

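If the first installation fails and you reuse the same shared disks for the next attempt, the last step of the grid installation fails as well. The log reports that the disk already contains related files and cannot be written to. To resolve this error, wipe the header of the shared disk with the following command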

dd if=/dev/zero of=/dev/sda bs=512 count=1

Common commands:

Create a database (run as the oracle user)

dbca

Connect to the database with sqlplus (grid user)

sqlplus / as sysdba

Check the listener status (grid user)

lsnrctl status

Check the cluster status (grid user)

crsctl stat res -t

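To stop or start the cluster services, log in as root and change into the bin directory under the grid installation directory

./crsctl stop cluster -all ----- stop the cluster services on all nodes

./crsctl stop cluster ------- stop the cluster services on the local node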