Original article:

https://blog.csdn.net/happyday_d/article/details/85267561

--------------------------------------------------------------------------------------------------------

Learning rate adjustment in PyTorch: lr_scheduler and ReduceLROnPlateau

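- torch.optim.lr_scheduler: provides several schedulers that adjust the learning rate according to the number of epochs trained.

- torch.optim.lr_scheduler.ReduceLROnPlateau: lowers the learning rate dynamically based on a metric measured during training.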

lr_scheduler adjustment method 1: based on epochs

CLASS torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda, last_epoch=-1)

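Sets the learning rate of each parameter group to its initial lr multiplied by the value returned by the given function. When last_epoch=-1, the optimizer's current lr is taken as the initial lr.

Parameters:

optimizer: the wrapped optimizer.

lr_lambda (function or list): a function that takes the epoch index and returns a multiplicative factor for the learning rate, or a list of such functions, one per parameter group.

last_epoch: the index of the last epoch; the default of -1 starts the schedule from the beginning.

Example: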

    >>> from torch.optim.lr_scheduler import LambdaLR
    >>> # Assuming optimizer has two groups.
    >>> lambda1 = lambda epoch: epoch // 30
    >>> lambda2 = lambda epoch: 0.95 ** epoch
    >>> scheduler = LambdaLR(optimizer, lr_lambda=[lambda1, lambda2])
    >>> for epoch in range(100):
    >>>     train(...)
    >>>     validate(...)
    >>>     scheduler.step()  # since PyTorch 1.1.0, call this after the optimizer update
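To make the multiplicative rule concrete, here is a minimal pure-Python sketch of the value LambdaLR assigns at a given epoch. The helper name `lambda_lr` and the base learning rate of 0.1 are assumptions made for illustration; they are not part of PyTorch.

```python
def lambda_lr(base_lr, lr_lambda, epoch):
    """Learning rate under the LambdaLR rule: base_lr * lr_lambda(epoch)."""
    return base_lr * lr_lambda(epoch)


# The two functions from the example above.
lambda1 = lambda epoch: epoch // 30
lambda2 = lambda epoch: 0.95 ** epoch

base_lr = 0.1  # assumed initial learning rate, for illustration only

for epoch in (0, 29, 30, 60):
    print(epoch, lambda_lr(base_lr, lambda1, epoch), lambda_lr(base_lr, lambda2, epoch))
```

Because the function's return value is a factor rather than a learning rate, `lambda1` gives a factor of 0 (and therefore a learning rate of 0) for the first 30 epochs. That is worth keeping in mind before reusing it unchanged.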

CLASS torch.optim.lr_scheduler.StepLR(optimizer, step_size, gamma=0.1, last_epoch=-1)

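Every step_size epochs, the learning rate of each parameter group is multiplied by gamma, so after k such steps it equals the initial learning rate times gamma^k.

Example: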

    >>> from torch.optim.lr_scheduler import StepLR
    >>> # Assuming optimizer uses lr = 0.05 for all groups
    >>> # lr = 0.05     if epoch < 30
    >>> # lr = 0.005    if 30 <= epoch < 60
    >>> # lr = 0.0005   if 60 <= epoch < 90
    >>> # ...
    >>> scheduler = StepLR(optimizer, step_size=30, gamma=0.1)
    >>> for epoch in range(100):
    >>>     train(...)
    >>>     validate(...)
    >>>     scheduler.step()  # since PyTorch 1.1.0, call this after the optimizer update
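The table in the comments above follows from a closed form, sketched here in plain Python. The helper name `step_lr` is hypothetical and only mirrors the rule described for StepLR.

```python
def step_lr(base_lr, step_size, gamma, epoch):
    """Closed form of the StepLR rule: one factor of gamma per completed step."""
    return base_lr * gamma ** (epoch // step_size)


# Same settings as the example: lr = 0.05, step_size = 30, gamma = 0.1.
for epoch in (0, 29, 30, 59, 60, 90):
    print(epoch, step_lr(0.05, 30, 0.1, epoch))
```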

CLASS torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones, gamma=0.1, last_epoch=-1)

Each time the epoch count reaches one of the milestones, the current learning rate is multiplied by gamma.

Example:

    >>> from torch.optim.lr_scheduler import MultiStepLR
    >>> # Assuming optimizer uses lr = 0.05 for all groups
    >>> # lr = 0.05     if epoch < 30
    >>> # lr = 0.005    if 30 <= epoch < 80
    >>> # lr = 0.0005   if epoch >= 80
    >>> scheduler = MultiStepLR(optimizer, milestones=[30,80], gamma=0.1)
    >>> for epoch in range(100):
    >>>     train(...)
    >>>     validate(...)
    >>>     scheduler.step()  # since PyTorch 1.1.0, call this after the optimizer update
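The same values can be reproduced by counting how many milestones have been reached. This is a small pure-Python sketch; `multistep_lr` is a hypothetical helper name, not a PyTorch function.

```python
from bisect import bisect_right


def multistep_lr(base_lr, milestones, gamma, epoch):
    """Closed form of the MultiStepLR rule: one factor of gamma per milestone reached."""
    reached = bisect_right(sorted(milestones), epoch)
    return base_lr * gamma ** reached


# Same settings as the example: lr = 0.05, milestones = [30, 80], gamma = 0.1.
for epoch in (0, 29, 30, 79, 80, 99):
    print(epoch, multistep_lr(0.05, [30, 80], 0.1, epoch))
```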

CLASS torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma, last_epoch=-1)

After every epoch the learning rate is multiplied by gamma, so at epoch t it equals the initial learning rate times gamma^t.
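A minimal usage sketch for ExponentialLR, in the same spirit as the examples for the other schedulers. The tiny linear model, the dummy input, the initial learning rate of 0.1 and gamma=0.9 are all assumptions chosen for illustration.

```python
import torch
from torch.optim.lr_scheduler import ExponentialLR

model = torch.nn.Linear(4, 1)                            # placeholder model (assumption)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scheduler = ExponentialLR(optimizer, gamma=0.9)

lrs = []
for epoch in range(4):
    lrs.append(scheduler.get_last_lr()[0])               # lr used during this epoch
    optimizer.zero_grad()
    model(torch.ones(2, 4)).sum().backward()             # stand-in for a real training step
    optimizer.step()
    scheduler.step()                                     # multiply the lr by gamma

print(lrs)
```

The recorded values should track 0.1 * 0.9 ** epoch up to floating-point rounding.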

CLASS torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0, last_epoch=-1)

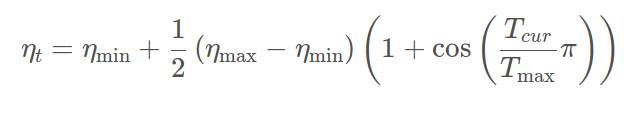

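Lowers the learning rate along a cosine curve. The method comes from SGDR, and the learning rate follows the formula:

$$\eta_t = \eta_{min} + \frac{1}{2}\left(\eta_{max} - \eta_{min}\right)\left(1 + \cos\left(\frac{T_{cur}}{T_{max}}\pi\right)\right)$$

where:

$\eta_{max}$ is the initial learning rate;

$T_{cur}$ is the current epoch count, and $T_{max}$ is the T_max argument;

eta_min is $\eta_{min}$ in the formula and is usually set to 0.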
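The cosine schedule can be evaluated directly from its definition. The sketch below assumes 0 <= t_cur <= t_max and uses a hypothetical helper name, `cosine_annealing_lr`; it evaluates the formula only and is not PyTorch's implementation.

```python
import math


def cosine_annealing_lr(eta_max, eta_min, t_cur, t_max):
    """eta_min + 0.5 * (eta_max - eta_min) * (1 + cos(pi * t_cur / t_max))."""
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * t_cur / t_max))


# Assumed settings for illustration: initial lr 0.1, eta_min 0, T_max 100.
for t_cur in (0, 25, 50, 75, 100):
    print(t_cur, cosine_annealing_lr(0.1, 0.0, t_cur, 100))
```

The learning rate starts at the initial value, passes through the midpoint of the two bounds at T_max / 2, and reaches eta_min at T_max.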

lr_scheduler adjustment method 2: based on a monitored metric

CLASS torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='min', factor=0.1, patience=10, verbose=False, threshold=0.0001, threshold_mode='rel', cooldown=0, min_lr=0, eps=1e-08)

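Reduces the learning rate when the monitored metric stops improving. factor is the multiplier applied at each reduction (new_lr = lr * factor), and patience is the number of consecutive epochs without improvement that are tolerated before the learning rate is reduced.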
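Unlike the epoch-based schedulers, ReduceLROnPlateau needs the monitored value passed to step(). The sketch below feeds it a made-up sequence of validation losses; the model, the data, the loss values, factor=0.5 and patience=2 are all assumptions for illustration.

```python
import torch
from torch.optim.lr_scheduler import ReduceLROnPlateau

model = torch.nn.Linear(4, 1)                            # placeholder model (assumption)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scheduler = ReduceLROnPlateau(optimizer, mode="min", factor=0.5, patience=2)

x, y = torch.randn(8, 4), torch.randn(8, 1)              # dummy data (assumption)
val_losses = [1.0, 0.8, 0.8, 0.8, 0.8, 0.8]              # made-up validation losses

lrs = []
for val_loss in val_losses:
    optimizer.zero_grad()
    torch.nn.functional.mse_loss(model(x), y).backward() # stand-in for train(...)
    optimizer.step()
    scheduler.step(val_loss)                             # pass the monitored metric
    lrs.append(optimizer.param_groups[0]["lr"])

print(lrs)
```

With patience=2, the first two epochs without improvement are tolerated, and the learning rate is halved on the third.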