
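Parameters

Parameters are the weights W attached to the neurons. They are represented as variables and start from random initial values.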

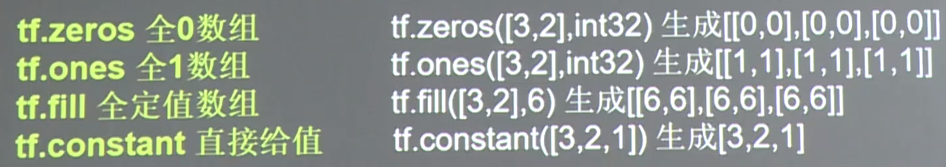

Ways to generate variables with random values:
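As a rough illustration of random initialization, the sketch below uses NumPy instead of TensorFlow (an assumption, so that it runs without a TensorFlow 1.x install). It draws weights from a normal distribution with standard deviation 1, similar in spirit to the `tf.random_normal([2,3],stddev=1,seed=1)` call used in the code later in these notes. `init_weights` is a hypothetical helper name, and the numbers it produces will not match TensorFlow's.

```python
import numpy as np

def init_weights(shape, stddev=1.0, seed=1):
    """Hypothetical helper: normally distributed initial weights."""
    rng = np.random.RandomState(seed)
    return rng.normal(loc=0.0, scale=stddev, size=shape)

w1 = init_weights((2, 3))  # weights from 2 inputs to 3 hidden units
w2 = init_weights((3, 1))  # weights from 3 hidden units to 1 output
print(w1.shape, w2.shape)  # (2, 3) (3, 1)
```

Fixing the seed makes the initial values reproducible from run to run, which is convenient when comparing experiments.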

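Implementing a neural network

- Prepare the dataset and extract features to feed into the neural network (NN) as input.

- Build the NN structure from input to output: first build the computation graph, then execute it in a session. (NN forward propagation → compute the output)

- Feed a large amount of feature data to the NN and iteratively optimize its parameters. (NN backpropagation → optimize the parameters and train the model)

- Use the trained model for prediction and classification.

Steps 1 to 3 are training; step 4 is testing.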

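Forward propagation

Build a model that performs inference (using a fully connected network as the example).

Example: a batch of parts is produced. The volume x1 and the weight x2 of each part are the features fed into the NN, and passing them through the NN gives the output:

First, consider the structure below.

Layers are counted starting from the first computational layer. The input is not a computational layer, so a is the first layer.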
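To make the layer counting concrete, here is a minimal NumPy sketch of the same two-layer forward pass. NumPy and the seed are assumptions chosen for illustration, so the weight values differ from what TensorFlow would generate; only the shapes and the order of operations are the point.

```python
import numpy as np

rng = np.random.RandomState(1)
x = np.array([[0.7, 0.5]])    # input: 1 sample, 2 features (volume, weight)
w1 = rng.normal(size=(2, 3))  # weights from the input to layer a (3 units)
w2 = rng.normal(size=(3, 1))  # weights from layer a to the output y

a = x @ w1                    # first computational layer, shape (1, 3)
y = a @ w2                    # second computational layer, shape (1, 1)
print(a.shape, y.shape)       # (1, 3) (1, 1)
```

The input x has no weights of its own, which is why counting starts at a.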

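The code implementation (TensorFlow 1.x API):

    # coding: utf-8
    # Use UTF-8 encoding if the source contains non-ASCII characters
    # A simple two-layer neural network
    import tensorflow as tf

    # Define the input and the parameters
    x = tf.constant([[0.7, 0.5]])  # one row, two columns
    w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
    w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

    # Define the forward pass
    a = tf.matmul(x, w1)  # matrix multiplication
    y = tf.matmul(a, w2)

    # Run the computation in a session
    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        print("y in tf3_3.py is:", sess.run(y))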


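The version below uses a placeholder so that several samples can be fed in at once.

    # coding: utf-8
    # Use UTF-8 encoding if the source contains non-ASCII characters
    # A simple two-layer neural network
    import tensorflow as tf

    # Define the input and the parameters
    # The input is defined with a placeholder; None allows any number of samples
    x = tf.placeholder(tf.float32, shape=(None, 2))
    w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
    w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

    # Define the forward pass
    a = tf.matmul(x, w1)
    y = tf.matmul(a, w2)

    # Run the computation in a session
    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        print("y in tf3_3.py is:\n", sess.run(y, feed_dict={x: [[0.7, 0.5], [0.2, 0.4], [4.0, 2.0]]}))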
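The effect of feeding several rows at once can be sketched in NumPy (an assumption made so the example runs without TensorFlow; `forward` is a hypothetical helper and the weights are not the ones TensorFlow would generate). Each row of the batch is one sample, and the output has one row per sample.

```python
import numpy as np

rng = np.random.RandomState(1)
w1 = rng.normal(size=(2, 3))
w2 = rng.normal(size=(3, 1))

def forward(x):
    """Forward pass for a batch of shape (n, 2); returns shape (n, 1)."""
    return (x @ w1) @ w2

batch = np.array([[0.7, 0.5], [0.2, 0.4], [4.0, 2.0]])
y_batch = forward(batch)
print(y_batch.shape)  # (3, 1)

# Rows are processed independently: feeding only the first sample
# gives the same value as the first row of the batched result.
y_single = forward(batch[:1])
print(np.allclose(y_single, y_batch[:1]))  # True
```

This is the role of `None` in the placeholder shape `(None, 2)`: the number of rows is left open, while the number of features per row stays fixed at 2.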

Backpropagation
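The complete example in these notes trains with a mean squared error loss and plain gradient descent. Written out, with symbols introduced here only for illustration (n is the number of samples in a batch, y_i the network output, \tilde{y}_i the label that the code calls `y_`, \eta the learning rate, and w any entry of w1 or w2):

```latex
\mathrm{loss} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \tilde{y}_i \right)^2,
\qquad
w \leftarrow w - \eta \, \frac{\partial\, \mathrm{loss}}{\partial w}
```

Backpropagation is the procedure that computes the partial derivatives in the update rule by applying the chain rule from the output back toward the input.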

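Complete example

Build a complete neural network that takes volume and weight as input. A part is labeled qualified (1) when volume + weight < 1, and unqualified (0) otherwise.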

    # coding: utf-8
    # Import modules
    import numpy as np
    import tensorflow as tf

    batch_size = 8
    seed = 23455

    # Create a random number generator from the seed
    rng = np.random.RandomState(seed)
    # A 32x2 matrix of random numbers: 32 samples of (volume, weight), used as the input dataset
    X = rng.rand(32, 2)
    # For each row, the label is 1 if volume + weight < 1, otherwise 0 (the ground truth)
    Y = [[int((x0 + x1) < 1)] for (x0, x1) in X]

    # Define the network input, parameters and output, and the forward pass
    x = tf.placeholder(tf.float32, shape=(None, 2))
    y_ = tf.placeholder(tf.float32, shape=(None, 1))
    # Initialize the parameters (weights)
    w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
    w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))
    a = tf.matmul(x, w1)
    y = tf.matmul(a, w2)

    # Define the loss function and the backpropagation method
    loss = tf.reduce_mean(tf.square(y - y_))  # mean squared error
    train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss)  # gradient descent, learning rate 0.001
    # train_step = tf.train.MomentumOptimizer(0.001, 0.9).minimize(loss)
    # train_step = tf.train.AdamOptimizer(0.001, 0.9).minimize(loss)

    # Create a session and train for `steps` iterations
    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        print("w1:\n", sess.run(w1))
        print("w2:\n", sess.run(w2))

        # Start training
        steps = 10000
        for i in range(steps):
            start = (i * batch_size) % 32
            end = start + batch_size
            sess.run(train_step, feed_dict={x: X[start:end], y_: Y[start:end]})
            if i % 500 == 0:
                total_loss = sess.run(loss, feed_dict={x: X, y_: Y})
                print("After %d training steps,loss on all data is %g" % (i, total_loss))

        # Print the trained parameter values
        print("\n")
        print("w1:\n", sess.run(w1))
        print("w2:\n", sess.run(w2))

Output:

    w1:
    [[-0.8113182 1.4845988 0.06532937]
     [-2.4427042 0.0992484 0.5912243 ]]
    w2:
    [[-0.8113182 ]
     [ 1.4845988 ]
     [ 0.06532937]]
    After 0 training steps,loss on all data is 5.13118
    After 500 training steps,loss on all data is 0.429111
    After 1000 training steps,loss on all data is 0.409789
    After 1500 training steps,loss on all data is 0.399923
    After 2000 training steps,loss on all data is 0.394146
    After 2500 training steps,loss on all data is 0.390597
    After 3000 training steps,loss on all data is 0.388336
    After 3500 training steps,loss on all data is 0.386855
    After 4000 training steps,loss on all data is 0.385863
    After 4500 training steps,loss on all data is 0.385186
    After 5000 training steps,loss on all data is 0.384719
    After 5500 training steps,loss on all data is 0.384391
    After 6000 training steps,loss on all data is 0.38416
    After 6500 training steps,loss on all data is 0.383995
    After 7000 training steps,loss on all data is 0.383877
    After 7500 training steps,loss on all data is 0.383791
    After 8000 training steps,loss on all data is 0.383729
    After 8500 training steps,loss on all data is 0.383684
    After 9000 training steps,loss on all data is 0.383652
    After 9500 training steps,loss on all data is 0.383628

    w1:
    [[-0.6916735 0.81592685 0.0962934 ]
     [-2.3433847 -0.1074271 0.5854707 ]]
    w2:
    [[-0.08710451]
     [ 0.81644475]
     [-0.05229824]]
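The training loop above picks each mini-batch with `start = (i*batch_size) % 32`. The short sketch below prints the first few index windows that this expression produces; `num_samples` and `windows` are names introduced here for illustration.

```python
batch_size = 8
num_samples = 32

windows = []
for i in range(6):
    start = (i * batch_size) % num_samples  # wraps back to 0 after the last batch
    end = start + batch_size
    windows.append((start, end))

print(windows)
# [(0, 8), (8, 16), (16, 24), (24, 32), (0, 8), (8, 16)]
```

Because 32 is a multiple of 8, the windows tile the dataset exactly and every fourth step starts a new pass over the data.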

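The standard recipe for building a neural network

Prepare, forward pass, backward pass, iterate.

- Prepare: import modules, define constants, generate the dataset.

- Forward propagation: define the input, the parameters (weights) and the output: x = , y_ = , w1 = , w2 = , a = , y =

- Backpropagation: define the loss function and the backpropagation method: loss = , train_step =

- Create a session and iterate for steps rounds.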
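The four steps above can also be sketched in plain NumPy, with the gradients written by hand in place of `tf.train.GradientDescentOptimizer`. This is an illustrative sketch under stated assumptions, not the code from these notes: NumPy stands in for TensorFlow, the weight seed and the reduced step count of 3000 are arbitrary choices, and `forward` and `mse` are hypothetical helpers. The loss values will therefore differ from the TensorFlow output shown earlier.

```python
import numpy as np

# 1. Prepare: constants and dataset (label 1 if volume + weight < 1, else 0)
batch_size, steps, lr = 8, 3000, 0.001
rng = np.random.RandomState(23455)
X = rng.rand(32, 2)
Y = np.array([[int(x0 + x1 < 1)] for x0, x1 in X], dtype=float)

# 2. Forward pass: parameters and output
init = np.random.RandomState(1)  # assumed seed, not TensorFlow's generator
w1 = init.normal(size=(2, 3))
w2 = init.normal(size=(3, 1))

def forward(x):
    """Return the hidden layer a and the output y for a batch x."""
    a = x @ w1
    return a, a @ w2

def mse(x, y_true):
    """Mean squared error of the network on (x, y_true)."""
    return float(np.mean((forward(x)[1] - y_true) ** 2))

initial_loss = mse(X, Y)

# 3 and 4. Backward pass and iteration
for i in range(steps):
    start = (i * batch_size) % 32
    xb, yb = X[start:start + batch_size], Y[start:start + batch_size]
    a, y = forward(xb)
    grad_y = 2.0 * (y - yb) / len(xb)  # d(loss)/dy for the mean squared error
    grad_w2 = a.T @ grad_y             # chain rule through y = a @ w2
    grad_w1 = xb.T @ (grad_y @ w2.T)   # chain rule through a = x @ w1
    w1 -= lr * grad_w1                 # gradient descent update
    w2 -= lr * grad_w2

final_loss = mse(X, Y)
print(initial_loss, final_loss)
```

Both gradients are computed before either weight matrix is updated, so each step uses a consistent set of parameters.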