Jan. 14 - Jan. 25th 2019 two weeks paper reading

- paper reading list--- Image Denoising

- 1 A multiscale Image Denoising Algorithm Based on dilated residual Convolution Network [link](https://arxiv.org/pdf/1812.09131.pdf).

- 2 Dilated Residual Networks(ResNet)[link](https://www.cs.princeton.edu/~funk/drn.pdf).

- 3 Understanding Convolution for semantic segmentation[link](http://sunw.csail.mit.edu/abstract/understanding.pdf).

- 4 learning Deep CNN Denoiser Prior for Image restoration(IRCNN)[link](http://openaccess.thecvf.com/content_cvpr_2017/papers/Zhang_Learning_Deep_CNN_CVPR_2017_paper.pdf).

- How to solve this type of problem?

- 5 Image Super-Resolution Using Deep Convolution Networks[link](https://arxiv.org/pdf/1501.00092.pdf).

- 6. Accurate Image Super- Resolution using Very Deep Convolution Networks(VDSR) [link](https://arxiv.org/pdf/1511.04587.pdf).

- 7 Centralized Sparse representation for Image restoration [link](http://www4.comp.polyu.edu.hk/~cslzhang/paper/conf/iccv11/ICCV_CSR_Final.pdf).

- 7.1 Introduction

- 7.2 Centralized sparse representation modeling

- 7.2.1 The sparse coding noise in image restoration

- 7.2.2 Centralized sparse representation

- Now the problem turn to be how to find a reasonable estimate of the unknown vector $\alpha_x$

- 7.3 Algorithm of CSR

paper reading list— Image Denoising

1 A multiscale Image Denoising Algorithm Based on dilated residual Convolution Network link.

- Dilated filter

- Multiscale convolution group

2 Dilated Residual Networks(ResNet)link.

which is the combination of Dilated networks and Residual networks.

2.1 Dilated convolution.

there is a very illustrated explanation about dilated convolution.

link.

2.2 Residual Network

3 Understanding Convolution for semantic segmentationlink.

- dense upsampling convolution(DUC)

- bilinear upsampling(interpolation)

- hybrid dilated convolution(HDC)

4 learning Deep CNN Denoiser Prior for Image restoration(IRCNN)link.

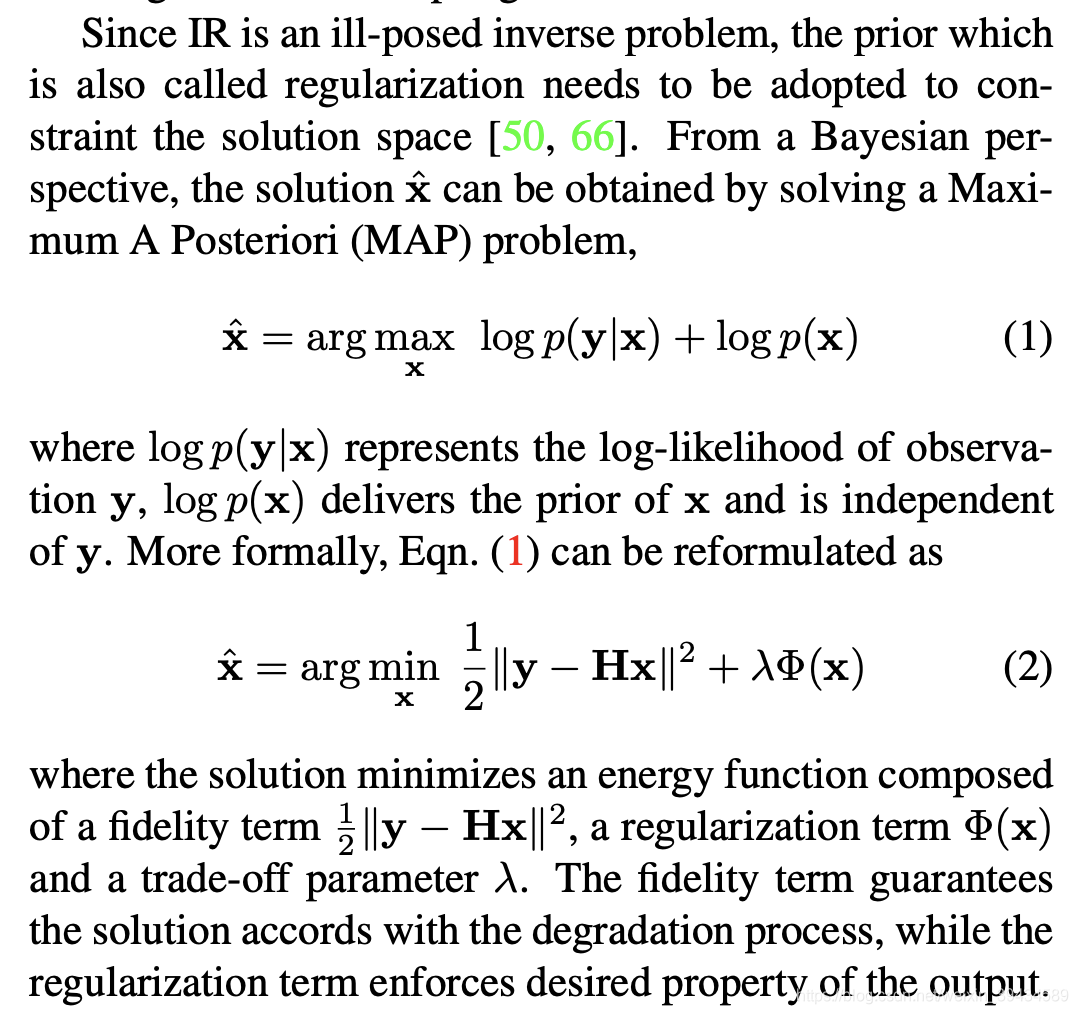

Image restoration(IR)

The object of IR:

y = Hx + v

the purpose of image restoration is to recover the latent clean image x from its

degraded observation y.

How to solve this type of problem?

- model-based optimization method

NCSR, BM3D, WNNM and so on - discriminative learning method

MLP, SRCNN, DCNN and so on

There are so many different types method of these two classes.

maybe next time I can give a literature review of these papers.

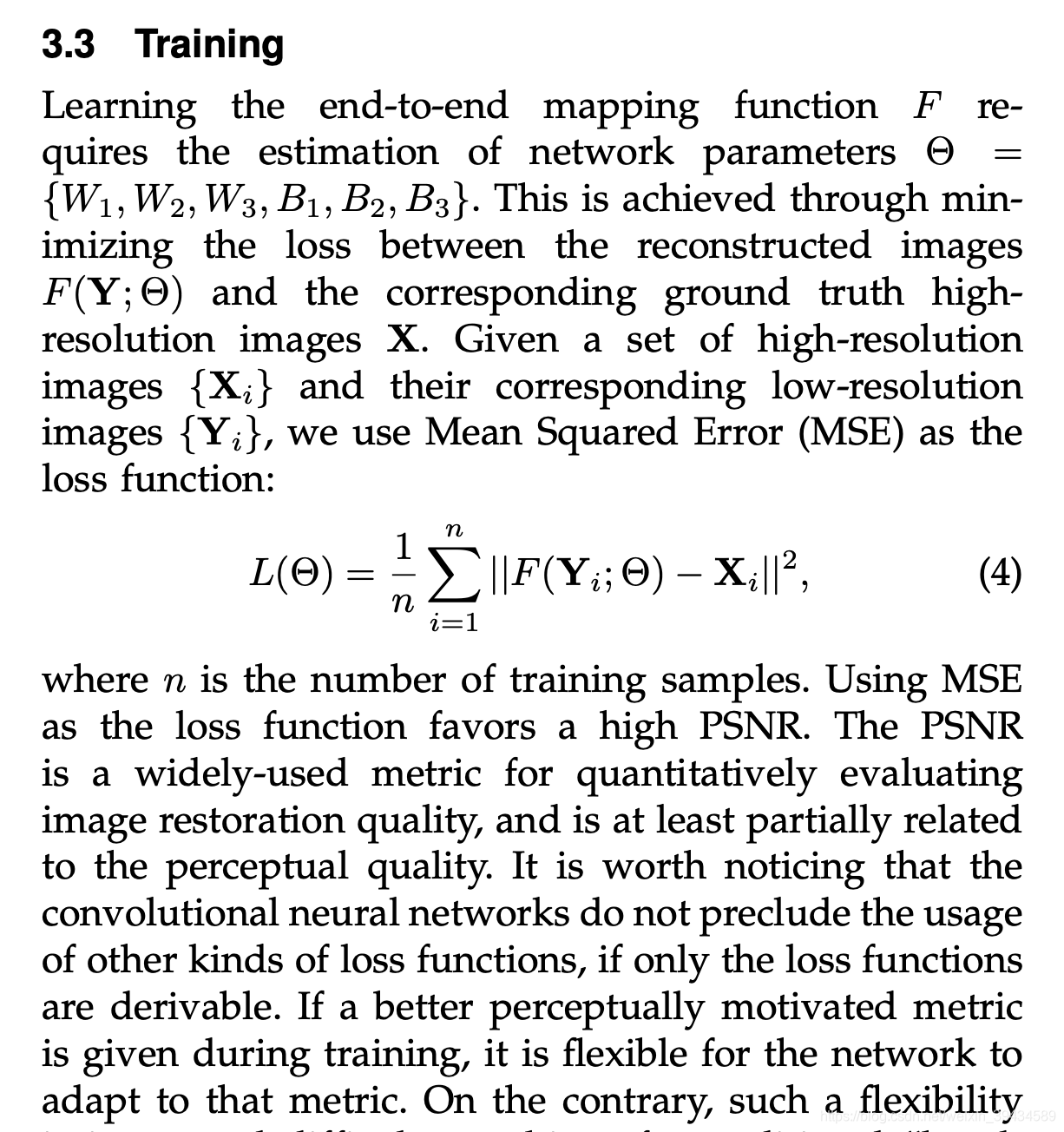

5 Image Super-Resolution Using Deep Convolution Networkslink.

the low-resolution inputs is first upscaled to the desired size using bicubic interpolation before inputing to SRCNN network.

- Patch Extraction and Representation

X: Ground truth high-resolution image

Y: Bicubic upsampling version of low-resolution image

- Nonlinear Mapping

- To Reconstruct the image

: * 1 * 1 * C

- rely on the context of small image regions

- the training converge too slowly

- network only works for a single scale

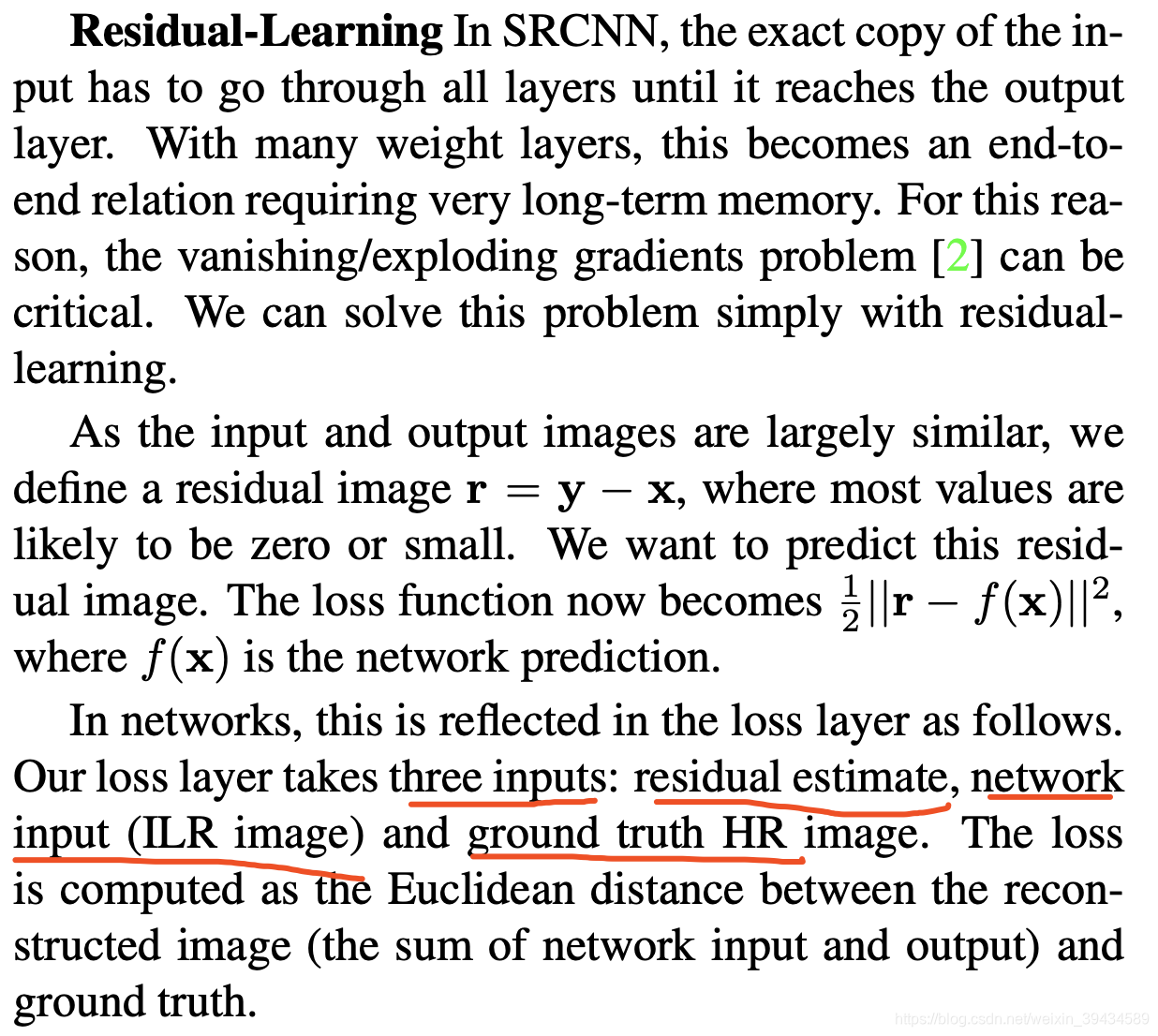

6. Accurate Image Super- Resolution using Very Deep Convolution Networks(VDSR) link.

HR high- resolution

LR low-resolution

- context: very deep network using large receptive field and take a large image context into account.

- using interpolated low-resolution image as input, and predict the image details.

- we pad zeros before convolutions to keep the size of our feature maps the same.

- convergence: using residual-learning CNN to speed up the training

- scale factor: we propose a single-model SR approach. Scales are typically user-specified and can be arbitrary including fractions.

7 Centralized Sparse representation for Image restoration link.

7.1 Introduction

y = Hx + v

H: degradation matrix

y: observed image

v: additive noise vector

x: original image

x can be represented as a linear combination of a few atoms from a dictionary

x

=

; s.t.

x-

: small constant balacing the sparsity and the approximation error.

counts the number of non-zero coefficients in

To do the image restoration

y = Hx + v

To reconstruct x from y

Since x

,

y

H

Then:

- = ; s.t. y - H

- Reconstruct x:

-

=

But because y is noise corrupted, blurred or incomplete, may deviate much from

In this paper

We introduce the concept of sparse coding noise(SCN) to facilitate the discussion of problem.

Given the dictionary

-

=

We proposed centralized sparse representation model to effectively reduce the SCN and then enhance the sparsity based IR performance.

7.2 Centralized sparse representation modeling

7.2.1 The sparse coding noise in image restoration

X

: original image

(matrix extracting patch

from X at location i)

Given dictionary

each patch can be sparsely represented by the set of sparse code

-

In the application of IR, x is not available to code, and we only have the degraded observation image y: y = Hx + v

= { } -

the image then can be reconstructed as:

-

from the context we mentioned before, we know that will deviate from

-

So that SCN : And will determine the IR quality of

-

We perform the experiment to investigate the statistics of SCN , And the observation motivate us to model SCN with a laplacian prior.

Laplacian distribution

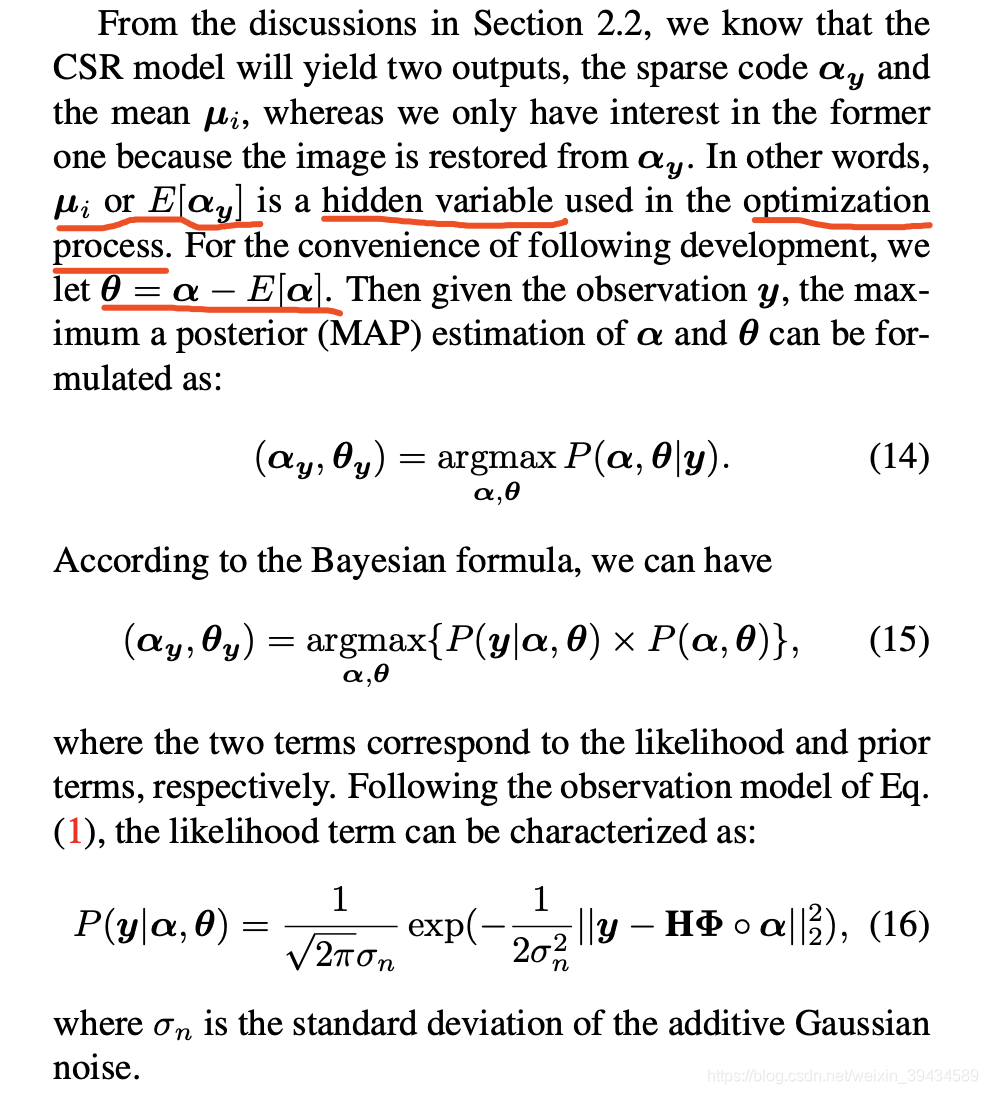

7.2.2 Centralized sparse representation

From the context we have mentioned before, we know that if we want to improve the performance of the model, we need to suppress the SCN :

But in practice,

is always unknown, so we can give a good estimate of

, donated as

, so that

can be an estimate of SCN.

- A new sparse coding model can be:

-

=

{

+ r

-

}

r is constant

norm, p can be 1 OR 2 measure the distance between and - Compared with the model before, this model enforce to be more close to .

Now the problem turn to be how to find a reasonable estimate of the unknown vector

Normally, the estimate of a variable can be the average of several samples or the expectation.

In this part, we use expectation to estimate the

.

= E[

], and in practice, we can approach E[

] by E[

], by assuming the SCN is nearly zero.

- Then the model we have showed before can be:

-

- = { + r - E[ ] }

- We call this model centralized sparse representation (CSR)

- For the sparse code on each image patch i, E[ ] can be nearly computed if we have enough samples of . Then E[ ] can be computed as the weighted average of those sparse code vectors associated with the nonlocal similar patches to patch i. Donated for each patch i via block matching and then average the sparse codes within each cluster.

- Denoted by , the sparse code of the searched similar patch j to patch i.

- Then E[ ] = =

-

is the weight

; are the estimate of patch i and patch j. W is the normalization factor and h is predetermined scalar. - = { + r - }

Then we can apply iterative minimization approach to the CSR model.

The steps are as follows:

- initialize as 0, eg: Then compute , and then, using , we can compute via .

- Based on

, we can find the similar patches with each local patch i, then we can update

by

, and the updated result, donated by

. Then it can be used in next round. Such a procedure is iterated until convergence. In the

iteration, the sparse coding is performed by.

= { + r - }

During iteration, the accuracy of sparse code is gradually improved.

7.3 Algorithm of CSR

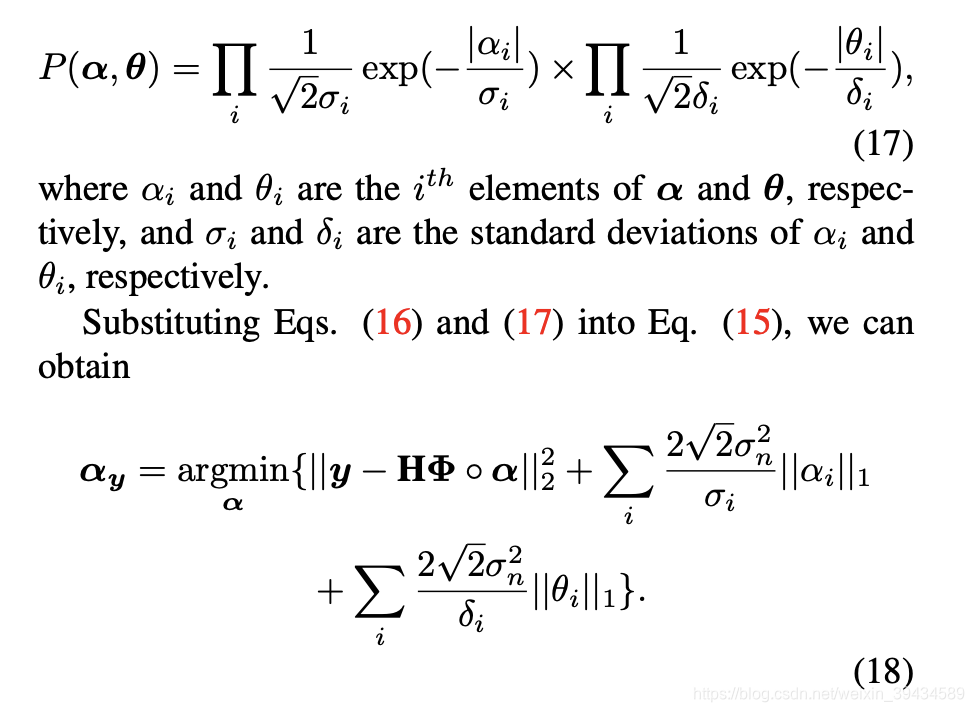

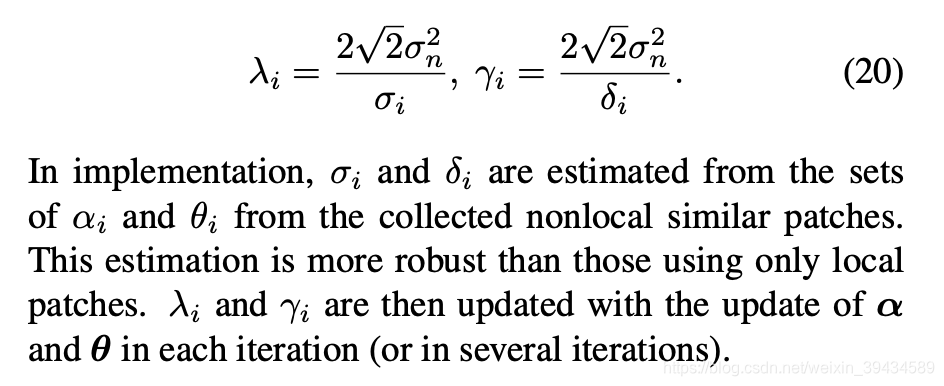

7.3.1 The determination of parameters and r.

It can be empirically found that

and

are nearly uncorrelated.

And before we have found that SCN can be well characterized by the laplacian distribution.

Meanwhile, it is also well accepted in literature that the sparse coefficients

can be characterized by i.i.d Laplacian distribution.

=

{

+ r

-

}

It is normally for us to set

equal to 1

And then the model can be converted to:

=

{

+ r

-

}

=

{

+ r

}

Compared this model with eq(18)

we can conclude that:

This is the end of this model.