HBase startup failure

org.apache.hadoop.hbase.ClockOutOfSyncException: org.apache.hadoop.hbase.ClockOutOfSyncException: Server hadoop74,60020,1372320861420 has been rejected; Reported time is too far out of sync with master. Time difference of 143732ms > max allowed of 30000ms

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:525)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:95)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:79)

at org.apache.hadoop.hbase.regionserver.HRegionServer.reportForDuty(HRegionServer.java:2093)

at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:744)

at java.lang.Thread.run(Thread.java:722)

Caused by: org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.hbase.ClockOutOfSyncException: Server hadoop74,60020,1372320861420 has been rejected; Reported time is too far out of sync with master. Time difference of 143732ms > max allowed of 30000ms

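Cause: the RegionServer's clock is out of sync with the Master's (here by 143732 ms, more than the default limit of 30000 ms).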

Solution 1:

Raise hbase.master.maxclockskew in hbase-site.xml (the value is in milliseconds):

<property>

<name>hbase.master.maxclockskew</name>

<value>200000</value>

<description>Time difference of regionserver from master</description>

</property>

Solution 2:

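Synchronize the system clocks on all nodes. This fixes the root cause, whereas Solution 1 only raises the tolerance.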
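To see whether a node would be rejected, the comparison in the log message can be reproduced with a small shell function. This is a minimal sketch, not HBase code: clock_skew_check is a hypothetical name, and it assumes you have already collected both clocks as epoch milliseconds (for example with GNU date +%s%3N on each node).

```bash
# Hypothetical helper, not part of HBase: applies the test described in the
# log message, |RegionServer time - Master time| > max allowed skew.
# Arguments: Master time (ms), RegionServer time (ms), max allowed skew (ms).
clock_skew_check() {
  local master_ms=$1 rs_ms=$2 max_ms=$3 diff
  diff=$((rs_ms - master_ms))
  # Absolute value: the RegionServer may be ahead of or behind the Master.
  if [ "$diff" -lt 0 ]; then
    diff=$((-diff))
  fi
  if [ "$diff" -gt "$max_ms" ]; then
    echo "rejected: ${diff}ms > ${max_ms}ms"
  else
    echo "ok: ${diff}ms <= ${max_ms}ms"
  fi
}
```

With the values from the log, clock_skew_check 0 143732 30000 reports a rejection, while the same 143732 ms difference passes against the 200000 ms limit from Solution 1.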

HBase concurrency problem: too many open files

2012-06-01 16:05:22,776 INFO org.apache.hadoop.hdfs.DFSClient: Exception in createBlockOutputStream java.net.SocketException: Too many open files

2012-06-01 16:05:22,776 INFO org.apache.hadoop.hdfs.DFSClient: Abandoning block blk_3790131629645188816_18192

2012-06-01 16:13:01,966 WARN org.apache.hadoop.hdfs.DFSClient: DFS Read: java.io.IOException: Could not obtain block: blk_-299035636445663861_7843 file=/hbase/SendReport/83908b7af3d5e3529e61b870a16f02dc/data/17703aa901934b39bd3b2e2d18c671b4.9a84770c805c78d2ff19ceff6fecb972

at org.apache.hadoop.hdfs.DFSClient$DFSInputStream.chooseDataNode(DFSClient.java:1812)

at org.apache.hadoop.hdfs.DFSClient$DFSInputStream.blockSeekTo(DFSClient.java:1638)

at org.apache.hadoop.hdfs.DFSClient$DFSInputStream.read(DFSClient.java:1767)

at org.apache.hadoop.hdfs.DFSClient$DFSInputStream.read(DFSClient.java:1695)

at java.io.DataInputStream.readBoolean(DataInputStream.java:242)

at org.apache.hadoop.hbase.io.Reference.readFields(Reference.java:116)

at org.apache.hadoop.hbase.io.Reference.read(Reference.java:149)

at org.apache.hadoop.hbase.regionserver.StoreFile.<init>(StoreFile.java:216)

at org.apache.hadoop.hbase.regionserver.Store.loadStoreFiles(Store.java:282)

at org.apache.hadoop.hbase.regionserver.Store.<init>(Store.java:221)

at org.apache.hadoop.hbase.regionserver.HRegion.instantiateHStore(HRegion.java:2510)

at org.apache.hadoop.hbase.regionserver.HRegion.initialize(HRegion.java:449)

at org.apache.hadoop.hbase.regionserver.HRegion.openHRegion(HRegion.java:3228)

at org.apache.hadoop.hbase.regionserver.HRegion.openHRegion(HRegion.java:3176)

at org.apache.hadoop.hbase.regionserver.handler.OpenRegionHandler.openRegion(OpenRegionHandler.java:331)

at org.apache.hadoop.hbase.regionserver.handler.OpenRegionHandler.process(OpenRegionHandler.java:107)

at org.apache.hadoop.hbase.executor.EventHandler.run(EventHandler.java:169)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

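Cause and fix: the default maximum number of open files on Linux is usually 1024. Running ulimit -n 65535 raises it immediately, but only for the current shell and the processes started from it, and the change is lost after a reboot.

To make the change permanent, use one of these three methods: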

1. Add the line ulimit -SHn 65535 to /etc/rc.local

2. Add the line ulimit -SHn 65535 to /etc/profile

3. Append the following two lines to the end of /etc/security/limits.conf:

* soft nofile 65535

* hard nofile 65535
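After editing limits.conf, the following sketch checks what the file actually sets and what the current shell gets. The function names are hypothetical, and the parser only looks at entries for the * domain.

```bash
# Hypothetical helper: print the soft and hard "nofile" values that a
# limits.conf-style file sets for the "*" domain. Comment lines start with
# "#", so their first field is never "*" and they are skipped.
nofile_limits() {
  awk '$1 == "*" && $3 == "nofile" { v[$2] = $4 }
       END { printf "soft=%s hard=%s\n", v["soft"], v["hard"] }' "$1"
}

# Open-file limit of the current shell and of the processes it starts.
current_nofile() {
  ulimit -n
}
```

Usage: nofile_limits /etc/security/limits.conf. Because limits.conf is applied by PAM at login, restart the Hadoop/HBase daemons from a fresh login session and compare current_nofile with the configured value.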

If the Hadoop version differs from the one HBase ships with, the Phoenix asynchronous index MapReduce job fails:

2017-07-13 21:18:41,438 INFO [main] mapreduce.Job: Job job_1498447686527_0002 running in uber mode : false

2017-07-13 21:18:41,439 INFO [main] mapreduce.Job: map 0% reduce 0%

2017-07-13 21:18:55,554 INFO [main] mapreduce.Job: map 100% reduce 0%

2017-07-13 21:19:05,642 INFO [main] mapreduce.Job: map 100% reduce 100%

2017-07-13 21:19:06,660 INFO [main] mapreduce.Job: Job job_1498447686527_0002 completed successfully

2017-07-13 21:19:06,739 ERROR [main] index.IndexTool: An exception occurred while performing the indexing job: IllegalArgumentException: No enum constant org.apache.hadoop.mapreduce.JobCounter.MB_MILLIS_REDUCES at:

java.lang.IllegalArgumentException: No enum constant org.apache.hadoop.mapreduce.JobCounter.MB_MILLIS_REDUCES

at java.lang.Enum.valueOf(Enum.java:236)

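Run ./hbase classpath to see which jars HBase puts on its classpath. I was using HBase 0.98, which by default bundles Hadoop 2.2.0 jars, while my cluster runs Hadoop 2.6.4; the JobCounter class loaded from the bundled 2.2.0 jars does not define MB_MILLIS_REDUCES, hence the error. The fix is to move the hadoop* jars out of hbase/lib, then delete the output path (or specify a new one) and rerun the MR job. When it finishes, refresh the index and the index table becomes usable.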
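To spot mixed Hadoop versions before rerunning the job, the classpath printed by ./hbase classpath can be scanned for hadoop-*.jar version numbers. This is a rough sketch: the function name is hypothetical, and it only recognizes file names of the form hadoop-<module>-X.Y.Z.jar.

```bash
# Hypothetical helper: given a colon-separated classpath (e.g. the output of
# ./hbase classpath), print each distinct Hadoop version found in
# hadoop-<module>-X.Y.Z.jar file names. More than one line means mixed jars.
hadoop_versions_in_classpath() {
  printf '%s\n' "$1" |
    tr ':' '\n' |
    grep -oE 'hadoop-[a-z-]+-[0-9]+\.[0-9]+\.[0-9]+\.jar$' |
    sed -E 's/.*-([0-9]+\.[0-9]+\.[0-9]+)\.jar$/\1/' |
    sort -u
}
```

Usage: hadoop_versions_in_classpath "$(./hbase classpath)". Seeing both 2.2.0 and 2.6.4 points to the conflict described above.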

Phoenix count query fails because the lease expired

First, to fix the lease expiration, increase the lease timeout:

Scroll all the way down and raise the timeout to 3 minutes (180000 ms).

Second, replace the argument of count(...) with 1, e.g. select count(1) from ehrmain;

NameNode fails to start after restarting Hadoop

Partial log:

This node has namespaceId '1770688123 and clusterId 'CID-57ffa982-419d-4c6a-81a1-d59761366cd1' but the requesting node expected '1974358097' and 'CID-57ffa982-419d-4c6a-81a1-d59761366cd1'

2017-04-25 19:20:01,508 WARN org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer: Edit log tailer interrupted

java.lang.InterruptedException: sleep interrupted

------- What I did

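Stop all processes and change the IPC client settings in core-site.xml so that clients keep retrying longer (up to 100 retries, 10000 ms apart, i.e. roughly 1000 s):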

<property>

<name>ipc.client.connect.max.retries</name>

<value>100</value>

</property>

<property>

<name>ipc.client.connect.retry.interval</name>

<value>10000</value>

</property>

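Back up the NameNode's current directory and format the NameNode. Replace the standby NameNode's current directory with the freshly formatted NameNode's current directory. Edit the VERSION file in the JournalNode's current directory so that its namespaceID and clusterID match the values in the NameNode's VERSION file. Then restart Hadoop.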

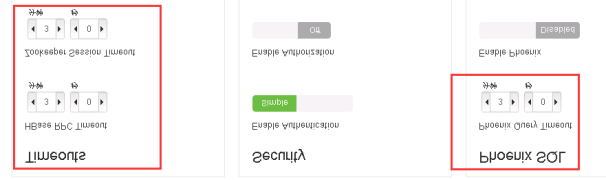
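Before restarting, the namespaceID and clusterID values can be compared between the NameNode's VERSION file and a JournalNode's. This is a sketch with hypothetical function names; the actual VERSION paths depend on dfs.namenode.name.dir and dfs.journalnode.edits.dir in your configuration.

```bash
# Hypothetical helpers: compare namespaceID and clusterID between two Hadoop
# VERSION files (plain key=value lines).
version_field() {
  # Print the value of key $2 from VERSION file $1.
  sed -n "s/^$2=//p" "$1"
}

compare_version_ids() {
  local nn=$1 jn=$2 key a b status=0
  for key in namespaceID clusterID; do
    a=$(version_field "$nn" "$key")
    b=$(version_field "$jn" "$key")
    if [ "$a" != "$b" ]; then
      echo "$key differs: namenode=$a journal=$b"
      status=1
    fi
  done
  return "$status"
}
```

Usage: compare_version_ids <namenode current dir>/VERSION <journal current dir>/VERSION. With the values from the log above it reports namespaceID differs: namenode=1974358097 journal=1770688123 and returns 1; no output and status 0 means the IDs match.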

SQuirreL cannot connect

After restarting HBase, data could not be queried, the SQuirreL client could not connect, and scanning in hbase shell failed with: ERROR: org.apache.hadoop.hbase.exceptions.RegionOpeningException: Region ADVICE,,1484647165178.b827fafe905eda29996dfb56bd9fd61f. is opening on hadoop79,60020,1497005577348

Region EHRMAIN,,1489599097179.61688929e93c5143efbe19b68f995c76. is not online on hadoop79,60020,1497002696781

Phoenix error: is opening on hadoop78,60020

Running hbase hbck reports a large number of errors.

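Solution: stop the HBase cluster and add the following to hbase-site.xml on every RegionServer. It raises the number of threads each RegionServer uses to open regions, so that many regions can come online in parallel after a restart: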

<property>

<name>hbase.regionserver.executor.openregion.threads</name>

<value>100</value>

</property>

Restart the HBase cluster and run hbase hbck again:

Summary:

Table CONSULTATIONRECORD is okay.

Number of regions: 6

Deployed on: hadoop76,60020,1497006965624 hadoop77,60020,1497006965687 hadoop78,60020,1497006965700 hadoop79,60020,1497006965677

Table PT_LABREPORT is okay.

All tables are okay.

Note: hbase hbck -fix can repair HBase inconsistencies.
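To check hbck results quickly across many tables, the per-table lines can be counted. This sketch assumes healthy tables are reported as "Table NAME is okay." as in the output above, and treats any other "Table NAME is ..." line as a problem; the function name is hypothetical.

```bash
# Hypothetical helper: summarize the per-table lines of hbck output read from
# stdin. Indented detail lines ("Number of regions", "Deployed on") are ignored.
hbck_table_summary() {
  awk '/^Table .* is okay\.$/ { ok++; next }
       /^Table .* is /        { bad++; print "problem: " $0 }
       END { printf "okay=%d problems=%d\n", ok, bad }'
}
```

Usage: hbase hbck | hbck_table_summary. A non-zero problems count is the cue to investigate further or try hbase hbck -fix.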

DataNode fails to start

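When the Hadoop cluster is started with start-all.sh, the DataNode on the slaves never comes up, and this error appears: … could only be replicated to 0 nodes, instead of 1 … One possible cause is duplicate node identifiers. There may be other causes, so try the following fixes in order.

Solutions:

1) Delete the data files under the dfs.data.dir and dfs.tmp.dir directories on all nodes (by default tmp/dfs/data and tmp/dfs/tmp), reformat with hadoop namenode -format, and then start the cluster.

2) If the problem is port access, make sure all ports in use are open, such as hdfs://machine1:9000/, 50030, and 50070:

# iptables -I INPUT -p tcp --dport 9000 -j ACCEPT

If you still get hdfs.DFSClient: Exception in createBlockOutputStream java.net.ConnectException: Connection refused, the ports on the DataNode are probably unreachable. On the DataNode, allow traffic from machine1:

# iptables -I INPUT -s machine1 -p tcp -j ACCEPT

3) A firewall may also be blocking communication between cluster nodes. Try stopping it: /etc/init.d/iptables stop

4) Finally, the disk may be full; check with df -al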
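For step 4, a short filter can flag nearly full filesystems instead of reading df output by eye. This is a sketch with a hypothetical function name; it assumes the POSIX df -P column layout and mount points without spaces.

```bash
# Hypothetical helper: read `df -P` output on stdin and print the mount points
# whose usage is at or above the given percentage (header line skipped).
full_filesystems() {
  awk -v t="$1" 'NR > 1 {
      use = $5; sub(/%/, "", use)        # "97%" -> "97"
      if (use + 0 >= t + 0) print $6 " " $5
    }'
}
```

Usage: df -P | full_filesystems 90 lists every mount point that is at least 90% full.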

Startup failure caused by permission problems

ERROR namenode.NameNode: java.io.IOException: Cannot create directory /export/home/dfs/name/current

ERROR namenode.NameNode: java.io.IOException: Cannot remove current directory: /usr/local/hadoop/hdfsconf/name/current

Cause: the permissions on /usr/hadoop/tmp were never set. Fix them with:

chown -R hadoop:hadoop /usr/hadoop/tmp

sudo chmod -R a+w /usr/local/hadoop
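Before starting the NameNode again, the ownership and writability of a storage directory can be verified. This is a sketch with a hypothetical function name; the directory and user (hadoop above) are whatever your installation uses.

```bash
# Hypothetical helper: check that a Hadoop storage directory exists, is owned
# by the expected user, and is writable by the current user.
check_hadoop_dir() {
  local dir=$1 want_owner=$2 owner
  if [ ! -d "$dir" ]; then
    echo "missing: $dir"; return 1
  fi
  # The third column of `ls -ld` is the owning user.
  owner=$(ls -ld "$dir" | awk '{ print $3 }')
  if [ "$owner" != "$want_owner" ]; then
    echo "wrong owner: $dir is owned by $owner, expected $want_owner"; return 1
  fi
  if [ ! -w "$dir" ]; then
    echo "not writable: $dir"; return 1
  fi
  echo "ok: $dir"
}
```

Usage, run as the user that starts Hadoop: check_hadoop_dir /usr/hadoop/tmp hadoop.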

Error when formatting HDFS

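This error usually means that the name and data directories defined in the configuration have not been created or have insufficient permissions, or that they were previously used by another Hadoop version. Solution: if the two directories already exist, delete and recreate them; if not, create them. Make sure they are created by the current user, then format again.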
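The delete-and-recreate step can be scripted so that it runs as the current user. This is a sketch with a hypothetical function name; it permanently deletes everything already in the given directories, so pass only the name and data directories from your configuration.

```bash
# Hypothetical helper: delete and recreate each given directory as the
# current user, e.g. before re-running `hadoop namenode -format`.
recreate_dirs() {
  local dir
  for dir in "$@"; do
    # Refuse empty paths and "/" so a bad variable cannot wipe the filesystem.
    if [ -z "$dir" ] || [ "$dir" = "/" ]; then
      echo "refusing to recreate '$dir'" >&2; return 1
    fi
    rm -rf -- "$dir" && mkdir -p -- "$dir" || return 1
  done
}
```

Usage: recreate_dirs /path/to/name /path/to/data, then run the format command again as the same user.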

Accessing HBase from MapReduce

java.lang.ClassNotFoundException: org.apache.hadoop.hbase.protobuf.generated.ClientProtos$Result

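Solution: the HBase protobuf classes are missing from the classpath (the generated classes such as ClientProtos are packaged in hbase-protocol-*.jar).

Remove the HBase jars from the project and re-import all hbase*.jar files.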

---------------------------------------

java.lang.NoClassDefFoundError: org/cloudera/htrace/Trace

Add the htrace jar (htrace-core, which provides org.cloudera.htrace.Trace) to the classpath.
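For either error, the jar that provides a missing class can be located by listing jar contents. This is a sketch with hypothetical function names; it assumes the unzip tool is installed and that the candidate jars live under one directory such as hbase/lib.

```bash
# Hypothetical helpers: find which jar provides a class named in a
# ClassNotFoundException or NoClassDefFoundError.

# Convert a class name (dotted or slashed) to its entry path inside a jar:
# org.cloudera.htrace.Trace -> org/cloudera/htrace/Trace.class
class_to_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Print every jar under directory $1 that contains class $2.
# unzip -Z1 lists only the entry names of an archive.
find_class_jar() {
  local dir=$1 entry jar
  entry=$(class_to_entry "$2")
  find "$dir" -name '*.jar' | while IFS= read -r jar; do
    if unzip -Z1 "$jar" 2>/dev/null | grep -qxF "$entry"; then
      echo "$jar"
    fi
  done
}
```

Usage: find_class_jar hbase/lib org.cloudera.htrace.Trace, or quote names containing $, such as 'org.apache.hadoop.hbase.protobuf.generated.ClientProtos$Result'.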

Phoenix errors

WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

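Checking the hadoop/lib directory showed that its files were owned by user and group 500 (500:500). From inside that directory, change the ownership: chown -R root:root *

Reboot the system and start all components; ./sqlline.py 192.168.204.111 then starts successfully.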

-------------------------------

If it fails with the following error and takes a long time to start: Can't get master address from ZooKeeper; znode data == null

-- the HBase Master is down.

Phoenix startup error: Error: org.apache.hadoop.hbase.DoNotRetryIOException: Class org.apache.phoenix.coprocessor.MetaDataRegionObserver cannot be loaded Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks

HBase must be restarted, including the HMaster.

Phoenix error: Error: ERROR 1012 (42M03): Table undefined. tableName=ZLH_PERSON (state=42M03,code=1012)

Check whether the table has a schema (the TABLE_SCHEM column); if so, qualify the table name with it:

0: jdbc:phoenix:hadoop74,hadoop75,hadoop76:21> select * from test.zlh_person;

+------+-------+------+
| ID | NAME | AGE |
+------+-------+------+
| 100 | 小红 | 18 |
+------+-------+------+

hbase-0.98.21/bin/stop-hbase.sh

stopping hbasecat: /tmp/hbase-hadoop-master.pid: No such file or directory

Cause: by default the pid files are stored in /tmp, and files under /tmp are easily lost (they are usually deleted on reboot). Solution: change the pid file location in hbase-env.sh:

# The directory where pid files are stored. /tmp by default.

export HBASE_PID_DIR=/var/hadoop/pids
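After moving HBASE_PID_DIR, a quick way to confirm that a pid file still points at a live process is kill -0. This is a sketch with a hypothetical function name; kill -0 can also fail for a process owned by another user, so run it as the user that started HBase.

```bash
# Hypothetical helper: report whether the process recorded in a pid file is
# still running. Returns 0 if running, 1 if stale, 2 if the file is missing.
pid_file_status() {
  local file=$1 pid
  if [ ! -f "$file" ]; then
    echo "no pid file: $file"; return 2
  fi
  pid=$(cat "$file")
  # kill -0 sends no signal; it only tests whether the process can be signalled.
  if kill -0 "$pid" 2>/dev/null; then
    echo "running: $pid"
  else
    echo "stale: $pid"; return 1
  fi
}
```

Usage: pid_file_status /var/hadoop/pids/hbase-hadoop-master.pid (the file name follows the hbase-<user>-master.pid pattern seen in the stop-hbase.sh error above).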

The same problem in Hadoop:

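no namenode to stop: Hadoop cannot find its pid files when stopping. Fix this by changing the pid directory settings in hadoop-env.sh and yarn-daemon.sh on every node of the cluster (HADOOP_PID_DIR and YARN_PID_DIR respectively).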

2016-09-09 15:32:59,380 WARN [main-SendThread(hadoop74:2181)] zookeeper.ClientCnxn: Session 0x0 for server hadoop74/172.18.3.74:2181, unexpected error, closing socket connection and attempting reconnect

java.io.IOException: Connection reset by peer

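Clients cannot connect to ZooKeeper. The ZooKeeper log shows the error too many connections from host - max is 10: HBase opens too many connections to ZooKeeper, and by default ZooKeeper allows each client IP only 10 connections, while each RegionServer currently hosts 300 regions. Stop ZooKeeper, set maxClientCnxns=300 in zoo.cfg, and restart ZooKeeper.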
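To see which hosts are close to the maxClientCnxns limit, established connections to port 2181 can be counted per client IP on the ZooKeeper host. This is a sketch with a hypothetical function name; it assumes ZooKeeper listens on the default client port 2181, as in the log above, and parses the ss -tn output format.

```bash
# Hypothetical helper: read `ss -tn` output on stdin and count established
# connections to local port 2181 per client IP, busiest first.
zk_conns_per_ip() {
  awk 'NR > 1 && $1 == "ESTAB" && $4 ~ /:2181$/ {
         peer = $5; sub(/:[0-9]+$/, "", peer)   # strip the client port
         n[peer]++
       }
       END { for (ip in n) print n[ip], ip }' | sort -rn
}
```

Usage: ss -tn | zk_conns_per_ip. Any count near maxClientCnxns identifies the client that will be refused next.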

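When deploying a cluster with Ambari, SSH host registration failed. The log showed: Ambari agent machine hostname (localhost.localdomain) does not match expected ambari server hostname (hadoop-node-101). Aborting registration.

The /etc/hosts file turned out to be malformed: the hostnames were not separated by spaces, and both localhost and the hostname had been placed at the end. Correcting the hosts file fixed the problem.

Correct format: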

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.18.*.* hadoop-node-*

..............
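A quick check that a host's own name resolves in /etc/hosts to a real address rather than to loopback can be run on each node. This is a sketch with a hypothetical function name; it reads only the hosts file, not DNS.

```bash
# Hypothetical helper: look up a hostname in an /etc/hosts-style file and
# report whether it is missing, mapped to loopback, or mapped to a real IP.
check_host_entry() {
  local name=$1 file=${2:-/etc/hosts} ip
  ip=$(awk -v h="$name" '
         { sub(/#.*/, "") }                 # drop comments
         { for (i = 2; i <= NF; i++) if ($i == h) { print $1; exit } }
       ' "$file")
  if [ -z "$ip" ]; then
    echo "missing: $name"; return 1
  fi
  case $ip in
    127.*|::1) echo "loopback: $name -> $ip"; return 1 ;;
  esac
  echo "ok: $name -> $ip"
}
```

Usage: check_host_entry "$(hostname -f)" on each node; a node such as hadoop-node-101 should map to its 172.18.*.* address.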

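Ambari's Phoenix version is too old to support pagination

1. Drop the Phoenix tables.

2. In hbase shell, drop the four Phoenix system tables ("SYSTEM.CATALOG", "SYSTEM.FUNCTION", "SYSTEM.SEQUENCE", "SYSTEM.STATS").

3. Rename the new Phoenix jars to the corresponding names below and use them to replace Ambari's Phoenix jars: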

phoenix-4.4.0.2.4.2.0-258-client.jar,phoenix-core-4.4.0.2.4.2.0-258-tests.jar,phoenix-server-client-4.4.0.2.4.2.0-258.jar,phoenix-4.4.0.2.4.2.0-258-thin-client.jar,phoenix-server-4.4.0.2.4.2.0-258.jar,phoenix-core-4.4.0.2.4.2.0-258.jar,phoenix-server-4.4.0.2.4.2.0-258-tests.jar

4. In SQuirreL, add the new jars and recreate the driver (phoenix-4.4.0.2.4.2.0-258-client.jar, phoenix-server-4.4.0.2.4.2.0-258.jar, phoenix-core-4.4.0.2.4.2.0-258.jar).

5. Stop HBase and, under Advanced hbase-site, set RegionServer WAL Codec to org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec.

6. Restart HBase.
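Step 2 of the procedure above can be scripted by generating the hbase shell commands and piping them in. This is a sketch with a hypothetical function name; HBase requires a table to be disabled before it can be dropped, and dropping these tables deletes all Phoenix metadata, so review the printed commands first.

```bash
# Hypothetical helper: print hbase shell commands that disable and then drop
# the four Phoenix system tables.
phoenix_system_drop_cmds() {
  local t
  for t in SYSTEM.CATALOG SYSTEM.FUNCTION SYSTEM.SEQUENCE SYSTEM.STATS; do
    printf "disable '%s'\ndrop '%s'\n" "$t" "$t"
  done
}
```

Usage: run phoenix_system_drop_cmds alone to inspect the eight commands, then phoenix_system_drop_cmds | hbase shell to execute them.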

java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.addDeprecations([Lorg/apache/hadoop/conf/Configuration$DeprecationDelta;)V

Cause: hadoop-mapreduce-client-core-2.2.0.jar is missing from the classpath.

HBase crash: does not exist or is not under Construction blk_1075156057_1415896

Fix: set -XX:CMSInitiatingOccupancyFraction=60 so that CMS starts collecting the old generation earlier, reducing the time spent in CMS collections.
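One way to apply this is through the RegionServer JVM options in hbase-env.sh. This sketch assumes the RegionServers use the CMS collector (Java 7/8 era HotSpot). The extra -XX:+UseCMSInitiatingOccupancyOnly flag is not in the original note; it keeps the JVM from replacing the 60% threshold with its own heuristics after the first CMS cycle.

```bash
# Sketch for conf/hbase-env.sh; assumes the RegionServers run the CMS collector.
# CMSInitiatingOccupancyFraction=60: start a CMS cycle once the old generation is 60% full.
# UseCMSInitiatingOccupancyOnly: always use that threshold, not the JVM's own estimate.
export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:+UseConcMarkSweepGC \
 -XX:CMSInitiatingOccupancyFraction=60 -XX:+UseCMSInitiatingOccupancyOnly"
```

Restart the RegionServers afterwards so the new options take effect.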