lane detection.py

import os

import sys

import cv2

import numpy as np

from PyQt5.QtWidgets import QApplication

import GUI

# pre-define function

def grayscale(img):

return cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

def canny(img, low_threshold, high_threshold):

return cv2.Canny(img, low_threshold, high_threshold)

def gaussian_blur(img, kernel_size):

return cv2.GaussianBlur(img, (kernel_size, kernel_size), 0)

# crop the region of interest

def ROI(img, vertices):

mask = np.zeros_like(img)

if len(img.shape) > 2:

channel_count = img.shape[2]

ignore_mask_color = (255,) * channel_count

else:

ignore_mask_color = 255

cv2.fillPoly(mask, vertices, ignore_mask_color)

masked_img = cv2.bitwise_and(img, mask)

return masked_img

def draw_lines(img, lines, color=[255,0,0], thickness=2):

for line in lines:

for x1, y1, x2, y2 in line:

cv2.line(img, (x1, y1), (x2, y2), color, thickness)

def hough_lines(img, rho, theta, threshold, min_line_len, max_line_gap):

lines = cv2.HoughLinesP(img, rho, theta, threshold, np.array([]),

minLineLength=min_line_len, maxLineGap=max_line_gap)

return lines

# angle filter

def bypass_angle_filter(lines, low_thres, hi_thres):

filtered_lines = []

for line in lines:

for x1, y1, x2, y2 in line:

angle = abs(np.arctan((y2-y1)/(x2-x1+0.0001))*180/np.pi)

if low_thres < angle < hi_thres:

filtered_lines.append([[x1, y1, x2, y2]])

return filtered_lines

def yellow_enhance(img_rgb):

img_hsv = cv2.cvtColor(img_rgb, cv2.COLOR_BGR2HSV)

lower_yellow = np.array([40, 100, 20])

upper_yellow = np.array([100, 255, 255])

mask = cv2.inRange(img_hsv, lower_yellow, upper_yellow)

gray = grayscale(img_rgb)

return cv2.addWeighted(mask, 1., gray, 1., 0.)# merge the right / left line

def average_lines(img, lines, y_min, y_max):

# m: 斜率 b: 截距 norm: 长度

hough_pts = {'m_left': [], 'b_left': [], 'norm_left': [],

'm_right': [], 'b_right': [], 'norm_right': []}

if lines:

for line in lines:

for x1, y1, x2, y2 in line:

X, Y = [x1, x2], [y1, y2]

m, b = np.polyfit(X, Y, 1)

norm = ((x2-x1)**2 + (y2-y1)**2)**0.5

if m < 0: # right lane

hough_pts['m_right'].append(m)

hough_pts['b_right'].append(b)

hough_pts['norm_right'].append(norm)

if m > 0: # left lane

hough_pts['m_left'].append(m)

hough_pts['b_left'].append(b)

hough_pts['norm_left'].append(norm)

if len(hough_pts['norm_left']):

b_avg_left = np.mean(np.array(hough_pts['b_left']))

m_avg_left = np.mean(np.array(hough_pts['m_left']))

xmin_left = int((y_min-b_avg_left)/m_avg_left)

xmax_left = int((y_max-b_avg_left)/m_avg_left)

left_lane = [[xmin_left, y_min, xmax_left, y_max]]

else:

left_lane = [[0, 0, 0, 0]]

if len(hough_pts['norm_right']):

b_avg_right = np.mean(np.array(hough_pts['b_right']))

m_avg_right = np.mean(np.array(hough_pts['m_right']))

xmin_right = int((y_min - b_avg_right)/m_avg_right)

xmax_right = int((y_max-b_avg_right)/m_avg_right)

right_lane = [[xmin_right, y_min, xmax_right, y_max]]

else:

right_lane = [[0, 0, 0, 0]]

return [left_lane, right_lane]

# major process

def pipeline(img, vertices, low_thres, hi_thres):

gray = yellow_enhance(img)

gray_blur = gaussian_blur(gray,3)

gray_dilate = cv2.dilate(gray_blur, (3,3), iterations=10)

edges = canny(gray_dilate, 50, 180)

h, w = img.shape[:2]

masked = ROI(edges, vertices)

h_lines = hough_lines(masked,

rho=1,

theta=np.pi/180,

threshold=26,

min_line_len=5,

max_line_gap=50

)

# Angle High Pass filter

h_lines = bypass_angle_filter(h_lines, low_thres, hi_thres)

# averaging lines

avg_lines = average_lines(img, h_lines, int(h * 0.65), h)

avg_img = np.zeros(img.shape, dtype=np.uint8)

draw_lines(avg_img, avg_lines, color=[255, 0, 0], thickness=10)

img_all_lines = cv2.addWeighted(avg_img, 1., img, 1., 0.)

return img_all_lines# process the frame / video

def process_img(img):

h, w = img.shape[:2]

vertices = np.array([[(200, h-80), (490, h*0.65),

(820, h*0.65), (w-150, w-80),

(100, h-80)]], dtype=np.int32)

low_thres, hi_thres = [30, 80]

return pipeline(img, vertices, low_thres, hi_thres)

def process_video(dir):

cap = cv2.VideoCapture(dir)

ret, frame = cap.read()

h, w = frame.shape[:2]

fourcc = cv2.VideoWriter_fourcc(*'XVID')

output = cv2.VideoWriter('./test_videos/output.avi', fourcc, 30.0, (w//2, h//2))

while cap.isOpened():

ret, frame = cap.read()

if ret:

processed_img = process_img(frame)

frame = cv2.resize(frame, (w//2, h//2), cv2.INTER_LINEAR)

processed_img = cv2.resize(processed_img, (w//2, h//2), cv2.INTER_LINEAR)

cv2.imshow('frame', frame)

cv2.imshow('result', processed_img)

output.write(processed_img)

if cv2.waitKey(10) == 27:

break

else:

break

cap.release()

output.release()

cv2.destroyAllWindows()# main function

if __name__ == '__main__':

isProcessed = True

if not isProcessed:

process_video('./test_videos/challenge.mp4') # show and save the video

else:

app = QApplication(sys.argv)

v_dir = './test_videos/challenge.mp4'

v_dir2 = './test_videos/output.avi'

gui = GUI.GUI(v_dir, v_dir2)

gui.show()

sys.exit(app.exec_())

GUI.py

from PyQt5.QtCore import *

from PyQt5.QtWidgets import *

from PyQt5.QtGui import *

import cv2

import sys

class GUI(QWidget):

def __init__(self, video_dir, video_dir2):

super().__init__()

self.timer = QTimer(self)

self.timer.timeout.connect(self.show_pic) # 计时结束调用 show_pic 函数

self.timer.start(30)

self.cap = cv2.VideoCapture(video_dir)

self.cap2 = cv2.VideoCapture(video_dir2)

self.label = QLabel()

self.label2 = QLabel()

h_box = QHBoxLayout(self)

h_box.addWidget(self.label)

h_box.addWidget(self.label2)

self.setLayout(h_box)

self.move(300, 300)

self.setWindowTitle('Lane Detection')

def show_pic(self):

ret, frame = self.cap.read()

ret2, frame2 = self.cap2.read()

if ret and ret2:

show = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

h, w = show.shape[:2]

show = cv2.resize(show, (w//2, h//2), cv2.INTER_LINEAR)

show_image = QImage(show.data, show.shape[1], show.shape[0], QImage.Format_RGB888)

self.label.setPixmap(QPixmap.fromImage(show_image))

show2 = cv2.cvtColor(frame2, cv2.COLOR_BGR2RGB)

show2 = cv2.resize(show2, (w//2, h//2), cv2.INTER_LINEAR)

show_image2 = QImage(show2.data, show2.shape[1], show2.shape[0], QImage.Format_RGB888)

self.label2.setPixmap(QPixmap.fromImage(show_image2))

self.timer.start(30)

# # test part

# if __name__=='__main__':

# app = QApplication(sys.argv)

# v_dir = './test_videos/challenge.mp4'

# v_dir2 = './test_videos/white.mp4'

# gui = GUI(v_dir, v_dir2)

# gui.show()

# sys.exit(app.exec_())

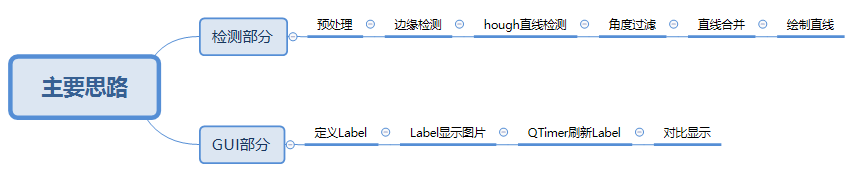

最终效果

process image:

GUI:

总结:

测试视频中表现还可以,然而鲁棒性并不是很好,很多预设参数,一旦图像质量、尺寸有变化,效果就会比较差;

GUI部分做的很随意,其实有很多地方可以改进,比如加几个button,connect一个文件对话框可以选取视频进行处理或对比,以及可以设置用cv2.VideoCapture打开摄像头录制视频,边录制边对每一帧处理再显示在Qt的label上;

总的来说就是重新熟悉一下opencv 和 pyqt 中的一些函数,练手还可以,作为小项目的话还有很多地方可以扩充和改进。

p.s. OpenCV C++版本 车道检测 https://blog.csdn.net/francislucien2017/article/details/83443639