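Today I want to integrate Kafka into a Spring Boot project and get it running locally, because I will need Kafka for an upcoming project. I won't explain the frameworks and middleware themselves here; mainly I want to share my code and the problems I ran into: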

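1. First, set up a local Kafka service. Download it from the official site at http://kafka.apache.org/downloads . The version I chose is:

Then unzip it to a local path. My path is:

Then go into the config directory, find the server.properties file, and modify: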
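One thing to watch when editing server.properties on Windows: as far as I know, Kafka reads this file with java.util.Properties, which treats a backslash as an escape character. The startup log later in this post shows log.dirs = D:\kafka_2.12-2.1.1\tmp\kafka-logs, so a path like that has to be written with doubled backslashes (or forward slashes) in the file. The following is a minimal sketch of that behavior, not Kafka's own code; the class name LogDirsEscaping and the helper parseLogDirs are made up for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class LogDirsEscaping {

    // Hypothetical helper: parse one line of a .properties file and return log.dirs.
    public static String parseLogDirs(String fileLine) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(fileLine));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty("log.dirs");
    }

    public static void main(String[] args) {
        // File content: log.dirs=D:\kafka_2.12-2.1.1\tmp\kafka-logs
        String single = "log.dirs=D:\\kafka_2.12-2.1.1\\tmp\\kafka-logs";
        // File content: log.dirs=D:\\kafka_2.12-2.1.1\\tmp\\kafka-logs
        String doubled = "log.dirs=D:\\\\kafka_2.12-2.1.1\\\\tmp\\\\kafka-logs";
        // File content: log.dirs=D:/kafka_2.12-2.1.1/tmp/kafka-logs
        String forward = "log.dirs=D:/kafka_2.12-2.1.1/tmp/kafka-logs";

        // With single backslashes, "\t" is read as a tab and the other
        // backslashes are silently dropped, so the path is mangled.
        // The tab is made visible here so the damage is easy to see.
        System.out.println("single : " + parseLogDirs(single).replace("\t", "<TAB>"));
        System.out.println("doubled: " + parseLogDirs(doubled));
        System.out.println("forward: " + parseLogDirs(forward));
    }
}
```

Only the doubled-backslash and forward-slash variants come back as a usable Windows path.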

Starting the Kafka service depends on ZooKeeper (ZK). You can find material on setting up ZK online, so I won't cover it here.

2. Start the local ZooKeeper.
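Before starting Kafka it can help to confirm that ZooKeeper is really listening. The sketch below simply tries to open a TCP connection; it assumes the address localhost:2181, which is the zookeeper.connect value that appears in the startup log later in this post. The class name ZkPortCheck and the 2-second timeout are arbitrary choices for illustration, and an open port only shows that something is listening, not that it is a healthy ZooKeeper.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class ZkPortCheck {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    public static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host all count as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed address: the default ZooKeeper client port on this machine.
        boolean up = isReachable("localhost", 2181, 2000);
        System.out.println(up
                ? "Something is listening on localhost:2181"
                : "Nothing is listening on localhost:2181; start ZooKeeper first");
    }
}
```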

3. Then go to the Kafka root directory, hold Shift and right-click, choose "Open command window here", and enter the command:

.\bin\windows\kafka-server-start.bat .\config\server.properties

Startup failed with the following error:

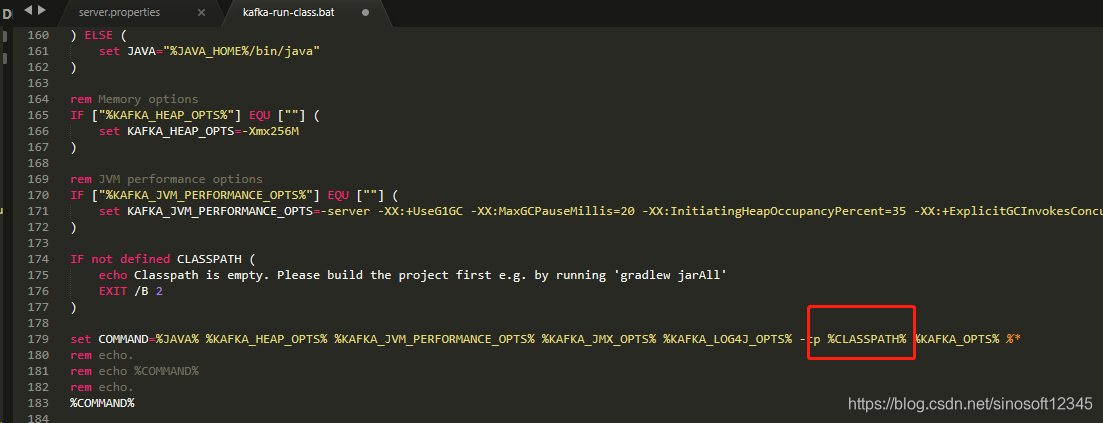

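To fix this, we locate the file:

In it, find:

Change it to the following configuration, adding double quotes around the content marked in red:

Then I started Kafka again, but it still reported an error: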

[2019-02-25 16:25:21,951] ERROR Error while deleting the clean shutdown file in dir D:\kafka_2.12-2.1.1\tmp\kafka-logs (kafka.server.LogDirFailureChannel)

java.io.IOException: Map failed

at sun.nio.ch.FileChannelImpl.map(FileChannelImpl.java:939)

at kafka.log.AbstractIndex.<init>(AbstractIndex.scala:126)

at kafka.log.TimeIndex.<init>(TimeIndex.scala:54)

at kafka.log.LogSegment$.open(LogSegment.scala:635)

at kafka.log.Log.$anonfun$loadSegmentFiles$3(Log.scala:465)

at scala.collection.TraversableLike$WithFilter.$anonfun$foreach$1(TraversableLike.scala:788)

at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:32)

at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:29)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:194)

at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:787)

at kafka.log.Log.loadSegmentFiles(Log.scala:452)

at kafka.log.Log.$anonfun$loadSegments$1(Log.scala:563)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:12)

at kafka.log.Log.retryOnOffsetOverflow(Log.scala:2007)

at kafka.log.Log.loadSegments(Log.scala:557)

at kafka.log.Log.<init>(Log.scala:290)

at kafka.log.Log$.apply(Log.scala:2141)

at kafka.log.LogManager.loadLog(LogManager.scala:275)

at kafka.log.LogManager.$anonfun$loadLogs$12(LogManager.scala:345)

at kafka.utils.CoreUtils$$anon$1.run(CoreUtils.scala:63)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.OutOfMemoryError: Map failed

at sun.nio.ch.FileChannelImpl.map0(Native Method)

at sun.nio.ch.FileChannelImpl.map(FileChannelImpl.java:936)

... 24 more

[2019-02-25 16:25:21,957] INFO Logs loading complete in 8885 ms. (kafka.log.LogManager)

[2019-02-25 16:25:21,979] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2019-02-25 16:25:21,981] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2019-02-25 16:25:22,285] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.Acceptor)

[2019-02-25 16:25:22,325] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)

[2019-02-25 16:25:22,359] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 16:25:22,362] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 16:25:22,363] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 16:25:22,390] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2019-02-25 16:25:22,400] INFO [ReplicaManager broker=0] Stopping serving replicas in dir D:\kafka_2.12-2.1.1\tmp\kafka-logs (kafka.server.ReplicaManager)

[2019-02-25 16:25:22,408] INFO [ReplicaFetcherManager on broker 0] Removed fetcher for partitions Set() (kafka.server.ReplicaFetcherManager)

[2019-02-25 16:25:22,408] INFO [ReplicaAlterLogDirsManager on broker 0] Removed fetcher for partitions Set() (kafka.server.ReplicaAlterLogDirsManager)

[2019-02-25 16:25:22,442] INFO [ReplicaManager broker=0] Broker 0 stopped fetcher for partitions and stopped moving logs for partitions because they are in the failed log directory D:\kafka_2.12-2.1.1\tmp\kafka-logs. (kafka.server.ReplicaManager)

[2019-02-25 16:25:22,447] INFO Stopping serving logs in dir D:\kafka_2.12-2.1.1\tmp\kafka-logs (kafka.log.LogManager)

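[2019-02-25 16:25:22,452] ERROR Shutdown broker because all log dirs in D:\kafka_2.12-2.1.1\tmp\kafka-logs have failed (kafka.log.LogManager)

The cause turned out to be that my local Java was not a 64-bit build, which is why this error was reported. I reinstalled a 64-bit JDK 8 and ran the same command again, .\bin\windows\kafka-server-start.bat .\config\server.properties, and this time Kafka started normally.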
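The stack trace above fails in FileChannelImpl.map while Kafka opens an index file, so a plausible reading is that the 32-bit JVM ran out of address space for memory-mapped files. A quick way to see which kind of JVM is on the PATH is to print two system properties. This is only a sketch: sun.arch.data.model is specific to HotSpot-style JVMs and may be absent elsewhere, so the code falls back to os.arch (the successful run later in this post reports os.arch=amd64). The class name JvmBitness is made up for illustration.

```java
public class JvmBitness {

    // Decide from the two property values whether the JVM looks like a 64-bit build.
    // dataModel may be null on JVMs that do not define sun.arch.data.model.
    public static boolean is64Bit(String dataModel, String osArch) {
        if (dataModel != null) {
            return dataModel.equals("64");
        }
        // Fallback heuristic: amd64, x86_64 and aarch64 all contain "64"; x86 does not.
        return osArch != null && osArch.contains("64");
    }

    public static void main(String[] args) {
        String dataModel = System.getProperty("sun.arch.data.model");
        String osArch = System.getProperty("os.arch");
        System.out.println("java.version        = " + System.getProperty("java.version"));
        System.out.println("sun.arch.data.model = " + dataModel);
        System.out.println("os.arch             = " + osArch);
        System.out.println(is64Bit(dataModel, osArch)
                ? "This looks like a 64-bit JVM"
                : "This looks like a 32-bit JVM; Kafka may fail with 'Map failed'");
    }
}
```

Running java -version from the same command window gives similar information, since 64-bit HotSpot builds normally include "64-Bit" in the VM name.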

D:\kafka_2.12-2.1.1>.\bin\windows\kafka-server-start.bat .\config\server.properties

[2019-02-25 18:50:19,056] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2019-02-25 18:50:19,706] INFO starting (kafka.server.KafkaServer)

[2019-02-25 18:50:19,708] INFO Connecting to zookeeper on localhost:2181 (kafka.server.KafkaServer)

[2019-02-25 18:50:19,737] INFO [ZooKeeperClient] Initializing a new session to localhost:2181. (kafka.zookeeper.ZooKeeperClient)

[2019-02-25 18:50:19,751] INFO Client environment:zookeeper.version=3.4.13-2d71af4dbe22557fda74f9a9b4309b15a7487f03, built on 06/29/2018 00:39 GMT (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,752] INFO Client environment:host.name=DESKTOP-U2O7KPS (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,752] INFO Client environment:java.version=1.8.0_121 (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,752] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,752] INFO Client environment:java.home=D:\Program Files\Java\jdk1.8.0_121\jre (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,752] INFO Client environment:java.class.path=.;D:\Program Files\Java\jdk1.8.0_121\lib\dt.jar;D:\Program Files\Java\jdk1.8.0_121\lib\tools.jar;;D:\kafka_2.12-2.1.1\libs\activation-1.1.1.jar;D:\kafka_2.12-2.1.1\libs\aopalliance-repackaged-2.5.0-b42.jar;D:\kafka_2.12-2.1.1\libs\argparse4j-0.7.0.jar;D:\kafka_2.12-2.1.1\libs\audience-annotations-0.5.0.jar;D:\kafka_2.12-2.1.1\libs\commons-lang3-3.8.1.jar;D:\kafka_2.12-2.1.1\libs\connect-api-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\connect-basic-auth-extension-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\connect-file-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\connect-json-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\connect-runtime-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\connect-transforms-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\guava-20.0.jar;D:\kafka_2.12-2.1.1\libs\hk2-api-2.5.0-b42.jar;D:\kafka_2.12-2.1.1\libs\hk2-locator-2.5.0-b42.jar;D:\kafka_2.12-2.1.1\libs\hk2-utils-2.5.0-b42.jar;D:\kafka_2.12-2.1.1\libs\jackson-annotations-2.9.8.jar;D:\kafka_2.12-2.1.1\libs\jackson-core-2.9.8.jar;D:\kafka_2.12-2.1.1\libs\jackson-databind-2.9.8.jar;D:\kafka_2.12-2.1.1\libs\jackson-jaxrs-base-2.9.8.jar;D:\kafka_2.12-2.1.1\libs\jackson-jaxrs-json-provider-2.9.8.jar;D:\kafka_2.12-2.1.1\libs\jackson-module-jaxb-annotations-2.9.8.jar;D:\kafka_2.12-2.1.1\libs\javassist-3.22.0-CR2.jar;D:\kafka_2.12-2.1.1\libs\javax.annotation-api-1.2.jar;D:\kafka_2.12-2.1.1\libs\javax.inject-1.jar;D:\kafka_2.12-2.1.1\libs\javax.inject-2.5.0-b42.jar;D:\kafka_2.12-2.1.1\libs\javax.servlet-api-3.1.0.jar;D:\kafka_2.12-2.1.1\libs\javax.ws.rs-api-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\javax.ws.rs-api-2.1.jar;D:\kafka_2.12-2.1.1\libs\jaxb-api-2.3.0.jar;D:\kafka_2.12-2.1.1\libs\jersey-client-2.27.jar;D:\kafka_2.12-2.1.1\libs\jersey-common-2.27.jar;D:\kafka_2.12-2.1.1\libs\jersey-container-servlet-2.27.jar;D:\kafka_2.12-2.1.1\libs\jersey-container-servlet-core-2.27.jar;D:\kafka_2.12-2.1.1\libs\jersey-hk2-2.27.jar;D:\kafka_2.12-2.1.1\libs\jersey-media-jaxb-2.27.jar;D:\kafka_2.12-2.1.1\libs\jersey-server-2.27.jar;D:\kafka_2.12-2.1.1\libs\jetty-client-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-continuation-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-http-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-io-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-security-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-server-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-servlet-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-servlets-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jetty-util-9.4.12.v20180830.jar;D:\kafka_2.12-2.1.1\libs\jopt-simple-5.0.4.jar;D:\kafka_2.12-2.1.1\libs\kafka-clients-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka-log4j-appender-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka-streams-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka-streams-examples-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka-streams-scala_2.12-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka-streams-test-utils-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka-tools-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-javadoc.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-javadoc.jar.asc;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-scaladoc.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-scaladoc.jar.asc;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-sources.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-sources.jar.asc;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-test-sources.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-test-sources.jar.asc;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-test.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1-test.jar.asc;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1.jar;D:\kafka_2.12-2.1.1\libs\kafka_2.12-2.1.1.jar.asc;D:\kafka_2.12-2.1.1\libs\log4j-1.2.17.jar;D:\kafka_2.12-2.1.1\libs\lz4-java-1.5.0.jar;D:\kafka_2.12-2.1.1\libs\maven-artifact-3.6.0.jar;D:\kafka_2.12-2.1.1\libs\metrics-core-2.2.0.jar;D:\kafka_2.12-2.1.1\libs\osgi-resource-locator-1.0.1.jar;D:\kafka_2.12-2.1.1\libs\plexus-utils-3.1.0.jar;D:\kafka_2.12-2.1.1\libs\reflections-0.9.11.jar;D:\kafka_2.12-2.1.1\libs\rocksdbjni-5.14.2.jar;D:\kafka_2.12-2.1.1\libs\scala-library-2.12.7.jar;D:\kafka_2.12-2.1.1\libs\scala-logging_2.12-3.9.0.jar;D:\kafka_2.12-2.1.1\libs\scala-reflect-2.12.7.jar;D:\kafka_2.12-2.1.1\libs\slf4j-api-1.7.25.jar;D:\kafka_2.12-2.1.1\libs\slf4j-log4j12-1.7.25.jar;D:\kafka_2.12-2.1.1\libs\snappy-java-1.1.7.2.jar;D:\kafka_2.12-2.1.1\libs\validation-api-1.1.0.Final.jar;D:\kafka_2.12-2.1.1\libs\zkclient-0.11.jar;D:\kafka_2.12-2.1.1\libs\zookeeper-3.4.13.jar;D:\kafka_2.12-2.1.1\libs\zstd-jni-1.3.7-1.jar (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,753] INFO Client environment:java.library.path=D:\Program Files\Java\jdk1.8.0_121\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;D:\Program Files\Java\jdk1.8.0_121\bin;D:\Program Files\Java\jdk1.8.0_121\jre\bin;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;D:\Program Files\IDM Computer Solutions\UltraEdit;D:\apache-maven-3.5.3\bin;D:\Program Files\nodejs\;D:\Program Files\erl10.1\bin;D:\Program Files\TortoiseSVN\bin;D:\Program Files\Python\Python36;C:\ProgramData\Oracle\Java\javapath;C:\Users\wangzhizhong\AppData\Local\Microsoft\WindowsApps;C:\Users\wangzhizhong\AppData\Roaming\npm;D:\Program Files\nodejs\node_global;D:\Program Files\Fiddler;D:\Program Files\Microsoft VS Code Insiders\bin;. (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,753] INFO Client environment:java.io.tmpdir=C:\Users\WANGZH~1\AppData\Local\Temp\ (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,755] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,761] INFO Client environment:os.name=Windows 10 (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,763] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,764] INFO Client environment:os.version=10.0 (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,766] INFO Client environment:user.name=wangzhizhong (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,768] INFO Client environment:user.home=C:\Users\wangzhizhong (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,773] INFO Client environment:user.dir=D:\kafka_2.12-2.1.1 (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,777] INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=6000 watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@703580bf (org.apache.zookeeper.ZooKeeper)

[2019-02-25 18:50:19,808] INFO [ZooKeeperClient] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)

[2019-02-25 18:50:19,814] INFO Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)

[2019-02-25 18:50:19,816] INFO Socket connection established to localhost/127.0.0.1:2181, initiating session (org.apache.zookeeper.ClientCnxn)

[2019-02-25 18:50:19,941] INFO Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x169243d59ab0000, negotiated timeout = 6000 (org.apache.zookeeper.ClientCnxn)

[2019-02-25 18:50:19,948] INFO [ZooKeeperClient] Connected. (kafka.zookeeper.ZooKeeperClient)

[2019-02-25 18:50:20,477] INFO Cluster ID = 9yPEX37HT1K4b05jFRn41A (kafka.server.KafkaServer)

[2019-02-25 18:50:20,551] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = null

advertised.port = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num = 11

alter.log.dirs.replication.quota.window.size.seconds = 1

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads = 10

broker.id = 0

broker.id.generation.enable = true

broker.rack = null

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms = 100

connections.max.idle.ms = 600000

controlled.shutdown.enable = true

controlled.shutdown.max.retries = 3

controlled.shutdown.retry.backoff.ms = 5000

controller.socket.timeout.ms = 30000

create.topic.policy.class.name = null

default.replication.factor = 1

delegation.token.expiry.check.interval.ms = 3600000

delegation.token.expiry.time.ms = 86400000

delegation.token.master.key = null

delegation.token.max.lifetime.ms = 604800000

delete.records.purgatory.purge.interval.requests = 1

delete.topic.enable = true

fetch.purgatory.purge.interval.requests = 1000

group.initial.rebalance.delay.ms = 0

group.max.session.timeout.ms = 300000

group.min.session.timeout.ms = 6000

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 2.1-IV2

kafka.metrics.polling.interval.secs = 10

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds = 300

leader.imbalance.per.broker.percentage = 10

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = null

log.cleaner.backoff.ms = 15000

log.cleaner.dedupe.buffer.size = 134217728

log.cleaner.delete.retention.ms = 86400000

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size = 524288

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms = 0

log.cleaner.threads = 1

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = D:\kafka_2.12-2.1.1\tmp\kafka-logs

log.flush.interval.messages = 9223372036854775807

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms = 60000

log.flush.scheduler.interval.ms = 9223372036854775807

log.flush.start.offset.checkpoint.interval.ms = 60000

log.index.interval.bytes = 4096

log.index.size.max.bytes = 10485760

log.message.downconversion.enable = true

log.message.format.version = 2.1-IV2

log.message.timestamp.difference.max.ms = 9223372036854775807

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -1

log.retention.check.interval.ms = 300000

log.retention.hours = 168

log.retention.minutes = null

log.retention.ms = null

log.roll.hours = 168

log.roll.jitter.hours = 0

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes = 1073741824

log.segment.delete.delay.ms = 60000

max.connections.per.ip = 2147483647

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots = 1000

message.max.bytes = 1000012

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

min.insync.replicas = 1

num.io.threads = 8

num.network.threads = 3

num.partitions = 1

num.recovery.threads.per.data.dir = 1

num.replica.alter.log.dirs.threads = null

num.replica.fetchers = 1

offset.metadata.max.bytes = 4096

offsets.commit.required.acks = -1

offsets.commit.timeout.ms = 5000

offsets.load.buffer.size = 5242880

offsets.retention.check.interval.ms = 600000

offsets.retention.minutes = 10080

offsets.topic.compression.codec = 0

offsets.topic.num.partitions = 50

offsets.topic.replication.factor = 1

offsets.topic.segment.bytes = 104857600

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations = 4096

password.encoder.key.length = 128

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

port = 9092

principal.builder.class = null

producer.purgatory.purge.interval.requests = 1000

queued.max.request.bytes = -1

queued.max.requests = 500

quota.consumer.default = 9223372036854775807

quota.producer.default = 9223372036854775807

quota.window.num = 11

quota.window.size.seconds = 1

replica.fetch.backoff.ms = 1000

replica.fetch.max.bytes = 1048576

replica.fetch.min.bytes = 1

replica.fetch.response.max.bytes = 10485760

replica.fetch.wait.max.ms = 500

replica.high.watermark.checkpoint.interval.ms = 5000

replica.lag.time.max.ms = 10000

replica.socket.receive.buffer.bytes = 65536

replica.socket.timeout.ms = 30000

replication.quota.window.num = 11

replication.quota.window.size.seconds = 1

request.timeout.ms = 30000

reserved.broker.max.id = 1000

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

socket.receive.buffer.bytes = 102400

socket.request.max.bytes = 104857600

socket.send.buffer.bytes = 102400

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms = 60000

transaction.max.timeout.ms = 900000

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 1

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

zookeeper.connect = localhost:2181

zookeeper.connection.timeout.ms = 6000

zookeeper.max.in.flight.requests = 10

zookeeper.session.timeout.ms = 6000

zookeeper.set.acl = false

zookeeper.sync.time.ms = 2000

(kafka.server.KafkaConfig)

[2019-02-25 18:50:20,598] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2019-02-25 18:50:20,598] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2019-02-25 18:50:20,602] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2019-02-25 18:50:20,691] INFO Loading logs. (kafka.log.LogManager)

[2019-02-25 18:50:20,789] INFO [Log partition=user-log-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:20,794] INFO [Log partition=user-log-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:20,844] INFO [ProducerStateManager partition=user-log-0] Writing producer snapshot at offset 1 (kafka.log.ProducerStateManager)

[2019-02-25 18:50:20,932] INFO [Log partition=user-log-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 1 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:20,943] INFO [ProducerStateManager partition=user-log-0] Loading producer state from snapshot file 'D:\kafka_2.12-2.1.1\tmp\kafka-logs\user-log-0\00000000000000000001.snapshot' (kafka.log.ProducerStateManager)

[2019-02-25 18:50:20,966] INFO [Log partition=user-log-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 1 in 220 ms (kafka.log.Log)

[2019-02-25 18:50:20,995] INFO [Log partition=__consumer_offsets-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:20,996] INFO [Log partition=__consumer_offsets-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,041] INFO [Log partition=__consumer_offsets-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,044] INFO [Log partition=__consumer_offsets-0, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 61 ms (kafka.log.Log)

[2019-02-25 18:50:21,056] INFO [Log partition=__consumer_offsets-1, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,057] INFO [Log partition=__consumer_offsets-1, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,095] INFO [Log partition=__consumer_offsets-1, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,099] INFO [Log partition=__consumer_offsets-1, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:21,115] INFO [Log partition=__consumer_offsets-10, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,116] INFO [Log partition=__consumer_offsets-10, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,163] INFO [Log partition=__consumer_offsets-10, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,168] INFO [Log partition=__consumer_offsets-10, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 62 ms (kafka.log.Log)

[2019-02-25 18:50:21,181] INFO [Log partition=__consumer_offsets-11, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,181] INFO [Log partition=__consumer_offsets-11, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,239] INFO [Log partition=__consumer_offsets-11, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,244] INFO [Log partition=__consumer_offsets-11, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 71 ms (kafka.log.Log)

[2019-02-25 18:50:21,255] INFO [Log partition=__consumer_offsets-12, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,255] INFO [Log partition=__consumer_offsets-12, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,296] INFO [Log partition=__consumer_offsets-12, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,299] INFO [Log partition=__consumer_offsets-12, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:21,311] INFO [Log partition=__consumer_offsets-13, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,312] INFO [Log partition=__consumer_offsets-13, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,351] INFO [Log partition=__consumer_offsets-13, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,355] INFO [Log partition=__consumer_offsets-13, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:21,366] INFO [Log partition=__consumer_offsets-14, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,368] INFO [Log partition=__consumer_offsets-14, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,406] INFO [Log partition=__consumer_offsets-14, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,410] INFO [Log partition=__consumer_offsets-14, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:21,424] INFO [Log partition=__consumer_offsets-15, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,424] INFO [Log partition=__consumer_offsets-15, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,482] INFO [Log partition=__consumer_offsets-15, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,486] INFO [Log partition=__consumer_offsets-15, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 71 ms (kafka.log.Log)

[2019-02-25 18:50:21,498] INFO [Log partition=__consumer_offsets-16, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,498] INFO [Log partition=__consumer_offsets-16, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,527] INFO [Log partition=__consumer_offsets-16, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,531] INFO [Log partition=__consumer_offsets-16, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 43 ms (kafka.log.Log)

[2019-02-25 18:50:21,544] INFO [Log partition=__consumer_offsets-17, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,544] INFO [Log partition=__consumer_offsets-17, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,641] INFO [Log partition=__consumer_offsets-17, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,653] INFO [Log partition=__consumer_offsets-17, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 118 ms (kafka.log.Log)

[2019-02-25 18:50:21,676] INFO [Log partition=__consumer_offsets-18, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,676] INFO [Log partition=__consumer_offsets-18, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,727] INFO [Log partition=__consumer_offsets-18, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,730] INFO [Log partition=__consumer_offsets-18, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 71 ms (kafka.log.Log)

[2019-02-25 18:50:21,742] INFO [Log partition=__consumer_offsets-19, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,743] INFO [Log partition=__consumer_offsets-19, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,772] INFO [Log partition=__consumer_offsets-19, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,775] INFO [Log partition=__consumer_offsets-19, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 41 ms (kafka.log.Log)

[2019-02-25 18:50:21,787] INFO [Log partition=__consumer_offsets-2, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,789] INFO [Log partition=__consumer_offsets-2, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,815] INFO [Log partition=__consumer_offsets-2, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,823] INFO [Log partition=__consumer_offsets-2, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 45 ms (kafka.log.Log)

[2019-02-25 18:50:21,835] INFO [Log partition=__consumer_offsets-20, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,835] INFO [Log partition=__consumer_offsets-20, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,871] INFO [Log partition=__consumer_offsets-20, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,875] INFO [Log partition=__consumer_offsets-20, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 51 ms (kafka.log.Log)

[2019-02-25 18:50:21,885] INFO [Log partition=__consumer_offsets-21, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,886] INFO [Log partition=__consumer_offsets-21, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,937] INFO [Log partition=__consumer_offsets-21, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,940] INFO [Log partition=__consumer_offsets-21, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 64 ms (kafka.log.Log)

[2019-02-25 18:50:21,951] INFO [Log partition=__consumer_offsets-22, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:21,952] INFO [Log partition=__consumer_offsets-22, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,982] INFO [Log partition=__consumer_offsets-22, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:21,988] INFO [Log partition=__consumer_offsets-22, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 45 ms (kafka.log.Log)

[2019-02-25 18:50:22,001] INFO [Log partition=__consumer_offsets-23, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,002] INFO [Log partition=__consumer_offsets-23, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,137] INFO [Log partition=__consumer_offsets-23, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,140] INFO [Log partition=__consumer_offsets-23, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 149 ms (kafka.log.Log)

[2019-02-25 18:50:22,155] INFO [Log partition=__consumer_offsets-24, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,155] INFO [Log partition=__consumer_offsets-24, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,214] INFO [Log partition=__consumer_offsets-24, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,219] INFO [Log partition=__consumer_offsets-24, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 76 ms (kafka.log.Log)

[2019-02-25 18:50:22,229] INFO [Log partition=__consumer_offsets-25, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,230] INFO [Log partition=__consumer_offsets-25, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,281] INFO [Log partition=__consumer_offsets-25, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,284] INFO [Log partition=__consumer_offsets-25, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 63 ms (kafka.log.Log)

[2019-02-25 18:50:22,295] INFO [Log partition=__consumer_offsets-26, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,295] INFO [Log partition=__consumer_offsets-26, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,325] INFO [Log partition=__consumer_offsets-26, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,328] INFO [Log partition=__consumer_offsets-26, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 40 ms (kafka.log.Log)

[2019-02-25 18:50:22,341] INFO [Log partition=__consumer_offsets-27, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,341] INFO [Log partition=__consumer_offsets-27, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,391] INFO [Log partition=__consumer_offsets-27, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,394] INFO [Log partition=__consumer_offsets-27, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 63 ms (kafka.log.Log)

[2019-02-25 18:50:22,404] INFO [Log partition=__consumer_offsets-28, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,405] INFO [Log partition=__consumer_offsets-28, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,436] INFO [Log partition=__consumer_offsets-28, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,439] INFO [Log partition=__consumer_offsets-28, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 42 ms (kafka.log.Log)

[2019-02-25 18:50:22,449] INFO [Log partition=__consumer_offsets-29, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,450] INFO [Log partition=__consumer_offsets-29, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,491] INFO [Log partition=__consumer_offsets-29, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,494] INFO [Log partition=__consumer_offsets-29, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:22,505] INFO [Log partition=__consumer_offsets-3, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,505] INFO [Log partition=__consumer_offsets-3, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,535] INFO [Log partition=__consumer_offsets-3, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,539] INFO [Log partition=__consumer_offsets-3, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 42 ms (kafka.log.Log)

[2019-02-25 18:50:22,554] INFO [Log partition=__consumer_offsets-30, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,554] INFO [Log partition=__consumer_offsets-30, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,590] INFO [Log partition=__consumer_offsets-30, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,593] INFO [Log partition=__consumer_offsets-30, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 51 ms (kafka.log.Log)

[2019-02-25 18:50:22,604] INFO [Log partition=__consumer_offsets-31, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,605] INFO [Log partition=__consumer_offsets-31, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,642] INFO [Log partition=__consumer_offsets-31, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,646] INFO [Log partition=__consumer_offsets-31, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 51 ms (kafka.log.Log)

[2019-02-25 18:50:22,657] INFO [Log partition=__consumer_offsets-32, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,657] INFO [Log partition=__consumer_offsets-32, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,690] INFO [Log partition=__consumer_offsets-32, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,693] INFO [Log partition=__consumer_offsets-32, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 45 ms (kafka.log.Log)

[2019-02-25 18:50:22,704] INFO [Log partition=__consumer_offsets-33, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,704] INFO [Log partition=__consumer_offsets-33, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,735] INFO [Log partition=__consumer_offsets-33, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,738] INFO [Log partition=__consumer_offsets-33, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 42 ms (kafka.log.Log)

[2019-02-25 18:50:22,749] INFO [Log partition=__consumer_offsets-34, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,750] INFO [Log partition=__consumer_offsets-34, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,789] INFO [Log partition=__consumer_offsets-34, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,792] INFO [Log partition=__consumer_offsets-34, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 51 ms (kafka.log.Log)

[2019-02-25 18:50:22,803] INFO [Log partition=__consumer_offsets-35, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,804] INFO [Log partition=__consumer_offsets-35, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,844] INFO [Log partition=__consumer_offsets-35, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,847] INFO [Log partition=__consumer_offsets-35, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:22,861] INFO [Log partition=__consumer_offsets-36, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,861] INFO [Log partition=__consumer_offsets-36, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,900] INFO [Log partition=__consumer_offsets-36, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,905] INFO [Log partition=__consumer_offsets-36, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 54 ms (kafka.log.Log)

[2019-02-25 18:50:22,921] INFO [Log partition=__consumer_offsets-37, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,921] INFO [Log partition=__consumer_offsets-37, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,967] INFO [Log partition=__consumer_offsets-37, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:22,970] INFO [Log partition=__consumer_offsets-37, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 61 ms (kafka.log.Log)

[2019-02-25 18:50:22,978] INFO [Log partition=__consumer_offsets-38, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:22,979] INFO [Log partition=__consumer_offsets-38, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,021] INFO [Log partition=__consumer_offsets-38, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,024] INFO [Log partition=__consumer_offsets-38, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 53 ms (kafka.log.Log)

[2019-02-25 18:50:23,035] INFO [Log partition=__consumer_offsets-39, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,035] INFO [Log partition=__consumer_offsets-39, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,077] INFO [Log partition=__consumer_offsets-39, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,080] INFO [Log partition=__consumer_offsets-39, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 54 ms (kafka.log.Log)

[2019-02-25 18:50:23,091] INFO [Log partition=__consumer_offsets-4, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,091] INFO [Log partition=__consumer_offsets-4, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,122] INFO [Log partition=__consumer_offsets-4, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,125] INFO [Log partition=__consumer_offsets-4, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 41 ms (kafka.log.Log)

[2019-02-25 18:50:23,136] INFO [Log partition=__consumer_offsets-40, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,136] INFO [Log partition=__consumer_offsets-40, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,177] INFO [Log partition=__consumer_offsets-40, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,180] INFO [Log partition=__consumer_offsets-40, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 52 ms (kafka.log.Log)

[2019-02-25 18:50:23,190] INFO [Log partition=__consumer_offsets-41, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,192] INFO [Log partition=__consumer_offsets-41, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,231] INFO [Log partition=__consumer_offsets-41, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,235] INFO [Log partition=__consumer_offsets-41, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 51 ms (kafka.log.Log)

[2019-02-25 18:50:23,243] INFO [Log partition=__consumer_offsets-42, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,243] INFO [Log partition=__consumer_offsets-42, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,287] INFO [Log partition=__consumer_offsets-42, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,290] INFO [Log partition=__consumer_offsets-42, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 54 ms (kafka.log.Log)

[2019-02-25 18:50:23,301] INFO [Log partition=__consumer_offsets-43, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,301] INFO [Log partition=__consumer_offsets-43, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,377] INFO [Log partition=__consumer_offsets-43, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,382] INFO [Log partition=__consumer_offsets-43, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 89 ms (kafka.log.Log)

[2019-02-25 18:50:23,392] INFO [Log partition=__consumer_offsets-44, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,393] INFO [Log partition=__consumer_offsets-44, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,497] INFO [Log partition=__consumer_offsets-44, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,500] INFO [Log partition=__consumer_offsets-44, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 117 ms (kafka.log.Log)

[2019-02-25 18:50:23,509] INFO [Log partition=__consumer_offsets-45, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,510] INFO [Log partition=__consumer_offsets-45, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,552] INFO [Log partition=__consumer_offsets-45, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,556] INFO [Log partition=__consumer_offsets-45, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 55 ms (kafka.log.Log)

[2019-02-25 18:50:23,567] INFO [Log partition=__consumer_offsets-46, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,567] INFO [Log partition=__consumer_offsets-46, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,597] INFO [Log partition=__consumer_offsets-46, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,602] INFO [Log partition=__consumer_offsets-46, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 44 ms (kafka.log.Log)

[2019-02-25 18:50:23,614] INFO [Log partition=__consumer_offsets-47, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,615] INFO [Log partition=__consumer_offsets-47, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,662] INFO [Log partition=__consumer_offsets-47, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,665] INFO [Log partition=__consumer_offsets-47, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 60 ms (kafka.log.Log)

[2019-02-25 18:50:23,674] WARN [Log partition=__consumer_offsets-48, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Found a corrupted index file corresponding to log file D:\kafka_2.12-2.1.1\tmp\kafka-logs\__consumer_offsets-48\00000000000000000000.log due to Corrupt time index found, time index file (D:\kafka_2.12-2.1.1\tmp\kafka-logs\__consumer_offsets-48\00000000000000000000.timeindex) has non-zero size but the last timestamp is 0 which is less than the first timestamp 1551087506465}, recovering segment and rebuilding index files... (kafka.log.Log)

[2019-02-25 18:50:23,674] INFO [Log partition=__consumer_offsets-48, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,704] INFO [ProducerStateManager partition=__consumer_offsets-48] Writing producer snapshot at offset 652 (kafka.log.ProducerStateManager)

[2019-02-25 18:50:23,707] INFO [Log partition=__consumer_offsets-48, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,709] INFO [Log partition=__consumer_offsets-48, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,734] INFO [ProducerStateManager partition=__consumer_offsets-48] Writing producer snapshot at offset 652 (kafka.log.ProducerStateManager)

[2019-02-25 18:50:23,776] INFO [Log partition=__consumer_offsets-48, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 652 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,778] INFO [ProducerStateManager partition=__consumer_offsets-48] Loading producer state from snapshot file 'D:\kafka_2.12-2.1.1\tmp\kafka-logs\__consumer_offsets-48\00000000000000000652.snapshot' (kafka.log.ProducerStateManager)

[2019-02-25 18:50:23,780] INFO [Log partition=__consumer_offsets-48, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 652 in 113 ms (kafka.log.Log)

[2019-02-25 18:50:23,792] INFO [Log partition=__consumer_offsets-49, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,792] INFO [Log partition=__consumer_offsets-49, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,841] INFO [Log partition=__consumer_offsets-49, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,844] INFO [Log partition=__consumer_offsets-49, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 61 ms (kafka.log.Log)

[2019-02-25 18:50:23,854] INFO [Log partition=__consumer_offsets-5, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,855] INFO [Log partition=__consumer_offsets-5, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,907] INFO [Log partition=__consumer_offsets-5, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,911] INFO [Log partition=__consumer_offsets-5, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 66 ms (kafka.log.Log)

[2019-02-25 18:50:23,924] INFO [Log partition=__consumer_offsets-6, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,924] INFO [Log partition=__consumer_offsets-6, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,962] INFO [Log partition=__consumer_offsets-6, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:23,967] INFO [Log partition=__consumer_offsets-6, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 54 ms (kafka.log.Log)

[2019-02-25 18:50:23,977] INFO [Log partition=__consumer_offsets-7, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:23,977] INFO [Log partition=__consumer_offsets-7, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:24,007] INFO [Log partition=__consumer_offsets-7, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:24,011] INFO [Log partition=__consumer_offsets-7, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 43 ms (kafka.log.Log)

[2019-02-25 18:50:24,022] INFO [Log partition=__consumer_offsets-8, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:24,023] INFO [Log partition=__consumer_offsets-8, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:24,073] INFO [Log partition=__consumer_offsets-8, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:24,076] INFO [Log partition=__consumer_offsets-8, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 63 ms (kafka.log.Log)

[2019-02-25 18:50:24,087] INFO [Log partition=__consumer_offsets-9, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Recovering unflushed segment 0 (kafka.log.Log)

[2019-02-25 18:50:24,087] INFO [Log partition=__consumer_offsets-9, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:24,208] INFO [Log partition=__consumer_offsets-9, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log)

[2019-02-25 18:50:24,212] INFO [Log partition=__consumer_offsets-9, dir=D:\kafka_2.12-2.1.1\tmp\kafka-logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 133 ms (kafka.log.Log)

[2019-02-25 18:50:24,218] INFO Logs loading complete in 3526 ms. (kafka.log.LogManager)

[2019-02-25 18:50:24,236] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2019-02-25 18:50:24,238] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2019-02-25 18:50:24,481] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.Acceptor)

[2019-02-25 18:50:24,516] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)

[2019-02-25 18:50:24,549] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 18:50:24,554] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 18:50:24,554] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 18:50:24,568] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2019-02-25 18:50:24,627] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2019-02-25 18:50:24,660] INFO Result of znode creation at /brokers/ids/0 is: OK (kafka.zk.KafkaZkClient)

[2019-02-25 18:50:24,663] INFO Registered broker 0 at path /brokers/ids/0 with addresses: ArrayBuffer(EndPoint(DESKTOP-U2O7KPS,9092,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)

[2019-02-25 18:50:24,765] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 18:50:24,765] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 18:50:24,765] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-02-25 18:50:24,788] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2019-02-25 18:50:24,790] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2019-02-25 18:50:24,803] INFO [GroupMetadataManager brokerId=0] Removed 0 expired offsets in 8 milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[2019-02-25 18:50:24,884] INFO [ProducerId Manager 0]: Acquired new producerId block (brokerId:0,blockStartProducerId:6000,blockEndProducerId:6999) by writing to Zk with path version 7 (kafka.coordinator.transaction.ProducerIdManager)

[2019-02-25 18:50:24,931] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2019-02-25 18:50:24,935] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2019-02-25 18:50:24,971] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2019-02-25 18:50:25,056] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2019-02-25 18:50:25,063] INFO [SocketServer brokerId=0] Started processors for 1 acceptors (kafka.network.SocketServer)

[2019-02-25 18:50:25,074] INFO Kafka version : 2.1.1 (org.apache.kafka.common.utils.AppInfoParser)

[2019-02-25 18:50:25,074] INFO Kafka commitId : 21234bee31165527 (org.apache.kafka.common.utils.AppInfoParser)

[2019-02-25 18:50:25,083] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

[2019-02-25 18:50:25,244] INFO [ReplicaFetcherManager on broker 0] Removed fetcher for partitions Set(__consumer_offsets-22, __consumer_offsets-30, __consumer_offsets-8, __consumer_offsets-21, __consumer_offsets-4, __consumer_offsets-27, __consumer_offsets-7, __consumer_offsets-9, __consumer_offsets-46, __consumer_offsets-25, __consumer_offsets-35, __consumer_offsets-41, __consumer_offsets-33, __consumer_offsets-23, __consumer_offsets-49, user-log-0, __consumer_offsets-47, __consumer_offsets-16, __consumer_offsets-28, __consumer_offsets-31, __consumer_offsets-36, __consumer_offsets-42, __consumer_offsets-3, __consumer_offsets-18, __consumer_offsets-37, __consumer_offsets-15, __consumer_offsets-24, __consumer_offsets-38, __consumer_offsets-17, __consumer_offsets-48, __consumer_offsets-19, __consumer_offsets-11, __consumer_offsets-13, __consumer_offsets-2, __consumer_offsets-43, __consumer_offsets-6, __consumer_offsets-14, __consumer_offsets-20, __consumer_offsets-0, __consumer_offsets-44, __consumer_offsets-39, __consumer_offsets-12, __consumer_offsets-45, __consumer_offsets-1, __consumer_offsets-5, __consumer_offsets-26, __consumer_offsets-29, __consumer_offsets-34, __consumer_offsets-10, __consumer_offsets-32, __consumer_offsets-40) (kafka.server.ReplicaFetcherManager)

[2019-02-25 18:50:25,442] INFO Replica loaded for partition __consumer_offsets-0 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,451] INFO [Partition __consumer_offsets-0 broker=0] __consumer_offsets-0 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,533] INFO Replica loaded for partition __consumer_offsets-29 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,534] INFO [Partition __consumer_offsets-29 broker=0] __consumer_offsets-29 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,623] INFO Replica loaded for partition __consumer_offsets-48 with initial high watermark 652 (kafka.cluster.Replica)

[2019-02-25 18:50:25,625] INFO [Partition __consumer_offsets-48 broker=0] __consumer_offsets-48 starts at Leader Epoch 0 from offset 652. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,632] INFO Replica loaded for partition __consumer_offsets-10 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,634] INFO [Partition __consumer_offsets-10 broker=0] __consumer_offsets-10 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,682] INFO Replica loaded for partition __consumer_offsets-45 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,683] INFO [Partition __consumer_offsets-45 broker=0] __consumer_offsets-45 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,726] INFO Replica loaded for partition __consumer_offsets-26 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,726] INFO [Partition __consumer_offsets-26 broker=0] __consumer_offsets-26 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,759] INFO Replica loaded for partition __consumer_offsets-7 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,759] INFO [Partition __consumer_offsets-7 broker=0] __consumer_offsets-7 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,792] INFO Replica loaded for partition __consumer_offsets-42 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,793] INFO [Partition __consumer_offsets-42 broker=0] __consumer_offsets-42 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,826] INFO Replica loaded for partition __consumer_offsets-4 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,827] INFO [Partition __consumer_offsets-4 broker=0] __consumer_offsets-4 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,858] INFO Replica loaded for partition __consumer_offsets-23 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,859] INFO [Partition __consumer_offsets-23 broker=0] __consumer_offsets-23 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,891] INFO Replica loaded for partition __consumer_offsets-1 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,892] INFO [Partition __consumer_offsets-1 broker=0] __consumer_offsets-1 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,927] INFO Replica loaded for partition __consumer_offsets-20 with initial high watermark 0 (kafka.cluster.Replica)

[2019-02-25 18:50:25,928] INFO [Partition __consumer_offsets-20 broker=0] __consumer_offsets-20 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-02-25 18:50:25,969] INFO Replica loaded for partition __consumer_offsets-39 with initial high watermark 0 (kafka.cluster.Replica)