Copyright notice: this is an original article by the blogger; please credit the source when reposting. https://blog.csdn.net/Yellow_python/article/details/86563960

Single-layer convolutional neural network

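import tensorflow as tf  # requires TensorFlow 1.x (tf.placeholder, tf.Session)

from tensorflow.examples.tutorials.mnist import input_data

"""Load the dataset: handwritten digits"""

mnist = input_data.read_data_sets('data/', one_hot=True)

"""Convolutional neural network"""

# Input layer

X = tf.placeholder('float', [None, 28, 28, 1])  # batch, height, width, channels

y = tf.placeholder('float', [None, 10])  # 10 classes (digits 0-9)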

# Convolution layer

W_conv = tf.Variable(tf.truncated_normal([5, 5, 1, 32], stddev=0.1))  # height, width, input channels, number of filters

b_conv = tf.Variable(tf.constant(.1, shape=[32]))  # one bias per filter

h_conv = tf.nn.conv2d(input=X, filter=W_conv, strides=[1, 1, 1, 1], padding='SAME') + b_conv  # window stride: 1 horizontally, 1 vertically

# ReLU activation

h_relu = tf.nn.relu(h_conv)

# Pooling layer

h_pool = tf.nn.max_pool(h_relu, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')  # window size: 2 x 2; window stride: 2 horizontally, 2 vertically

# Flatten layer

h_flat = tf.reshape(h_pool, [-1, 14 * 14 * 32])

# Fully connected layer 1

W_fc1 = tf.Variable(tf.truncated_normal([14 * 14 * 32, 256], stddev=.1))  # 256 neurons

b_fc1 = tf.Variable(tf.constant(.1, shape=[256]))

h_fc1 = tf.matmul(h_flat, W_fc1) + b_fc1

h_fc1 = tf.nn.relu(h_fc1)

# Fully connected layer 2

W_fc2 = tf.Variable(tf.truncated_normal([256, 10], stddev=.1))

b_fc2 = tf.Variable(tf.constant(.1, shape=[10]))

h_fc2 = tf.matmul(h_fc1, W_fc2) + b_fc2

# Softmax cross-entropy loss, averaged over the batch so that it is a scalar

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=h_fc2))

# Adam optimizer

optimizer = tf.train.AdamOptimizer().minimize(loss)

"""Accuracy"""

prediction = tf.equal(tf.argmax(h_fc2, 1), tf.argmax(y, 1))

accuracy = tf.reduce_mean(tf.cast(prediction, 'float'))

"""Run"""

sess = tf.InteractiveSession()

sess.run(tf.global_variables_initializer())

batch_size = 50

for i in range(501):

    batch = mnist.train.next_batch(batch_size)  # fetch the next mini-batch

    inputs = batch[0].reshape([batch_size, 28, 28, 1])

    labels = batch[1]

    optimizer.run(session=sess, feed_dict={X: inputs, y: labels})  # one training step

    if i % 10 == 0:

        train_accuracy = accuracy.eval(session=sess, feed_dict={X: inputs, y: labels})

        print('step %d, accuracy %g' % (i, train_accuracy))
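The flatten size `14 * 14 * 32` used above follows from how `padding='SAME'` behaves: the output height and width equal `ceil(input / stride)`, regardless of the kernel size. The sketch below traces the spatial size through the network in plain Python; the helper name `same_output_size` is made up for illustration and is not a TensorFlow function.

```python
import math


def same_output_size(size, stride):
    """Spatial output size of a conv or pool layer that uses padding='SAME'."""
    return math.ceil(size / stride)


side = 28                                  # MNIST images are 28 x 28
side = same_output_size(side, stride=1)    # 5x5 convolution, stride 1: size unchanged
side = same_output_size(side, stride=2)    # 2x2 max pooling, stride 2: size halved
flat_size = side * side * 32               # 32 filters in the convolution layer
print(side, flat_size)
```

Applying the stride-2 pooling step a second time gives 7, which is where the `7 * 7 * 64` in the two-layer example of section 4 comes from.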

Supplementary notes

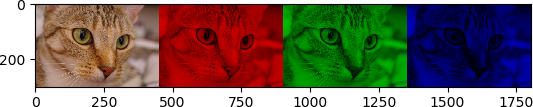

1. Image features

from skimage import data  # conda install scikit-image pillow

import numpy as np, matplotlib.pyplot as mp

cat = data.chelsea()

print(cat.shape)  # height, width, channels: (300, 451, 3)

# RGB channels

cat_R = cat.copy()  # red channel

cat_R[:, :, 1] = cat_R[:, :, 2] = 0  # set green and blue pixels to 0

cat_G = cat.copy()  # green channel

cat_G[:, :, 0] = cat_G[:, :, 2] = 0  # set red and blue pixels to 0

cat_B = cat.copy()  # blue channel

cat_B[:, :, 0] = cat_B[:, :, 1] = 0  # set red and green pixels to 0

# Visualization

plot_image = np.concatenate((cat, cat_R, cat_G, cat_B), axis=1)

mp.imshow(plot_image)

mp.show()

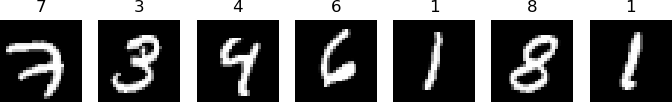
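What slice assignments such as `cat_R[:, :, 1] = cat_R[:, :, 2] = 0` do can also be seen on a tiny image without NumPy. The following is a minimal sketch using nested lists; the function name `keep_channel` and the pixel values are made up for illustration.

```python
def keep_channel(image, channel):
    """Return a copy of an H x W x 3 nested-list image with every other channel zeroed."""
    return [
        [[value if c == channel else 0 for c, value in enumerate(pixel)]
         for pixel in row]
        for row in image
    ]


# A 1 x 2 RGB image: one row, two pixels, three channel values per pixel
image = [[[200, 100, 50], [10, 20, 30]]]

red_only = keep_channel(image, 0)    # index 0 = red, 1 = green, 2 = blue
print(red_only)                      # [[[200, 0, 0], [10, 0, 0]]]
```

As with `cat.copy()` in the listing above, the original image is left untouched and only the copy is modified.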

2. The MNIST dataset

from tensorflow.examples.tutorials.mnist import input_data

import numpy as np, matplotlib.pyplot as mp

mnist = input_data.read_data_sets('data/', one_hot=True)

train_images = mnist.train.images # shape(55000, 784)

train_labels = mnist.train.labels # shape(55000, 10)

test_images = mnist.test.images # shape(10000, 784)

test_labels = mnist.test.labels  # shape(10000, 10)

# Visualization

for i in range(7):

    image = np.reshape(train_images[i, :], (28, 28))

    label = np.argmax(train_labels[i, :])

    mp.subplot(1, 7, i + 1)

    mp.axis('off')

    mp.imshow(image, cmap=mp.get_cmap('gray'))

    mp.title(label)

mp.show()
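The shapes above reflect two conventions: each image is stored as a flat vector of 784 = 28 x 28 pixels, and with `one_hot=True` each label is a length-10 vector with a single 1. A small plain-Python sketch of both ideas follows; the helper names `one_hot` and `argmax` are illustrative stand-ins, not the dataset loader's API.

```python
def one_hot(label, num_classes=10):
    """Encode an integer class label as a one-hot vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def argmax(vec):
    """Index of the largest entry, i.e. the decoded class label."""
    return max(range(len(vec)), key=vec.__getitem__)


encoded = one_hot(7)
decoded = argmax(encoded)
print(encoded, decoded)

# Reshape a flat 784-element vector into 28 rows of 28 pixels
flat = list(range(784))
rows = [flat[r * 28:(r + 1) * 28] for r in range(28)]
print(len(rows), len(rows[0]))
```

This is the same round trip that `np.argmax(train_labels[i, :])` and `np.reshape(train_images[i, :], (28, 28))` perform in the listing above.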

3. TensorFlow syntax

- All variables must be initialized before they can be used

import tensorflow as tf

"""Create TensorFlow variables"""

a = tf.Variable([[1], [2]])  # <tf.Variable 'Variable:0' shape=(2, 1) dtype=int32_ref>

b = tf.Variable([[1, 2, 3]])  # <tf.Variable 'Variable_1:0' shape=(1, 3) dtype=int32_ref>

c = tf.matmul(a, b)  # Tensor("MatMul:0", shape=(2, 3), dtype=int32)

print(a, b, c, sep='\n')

"""Initialize all global variables"""

with tf.Session() as sess:

    init = tf.global_variables_initializer()

    sess.run(init)

    print(sess.run(c))

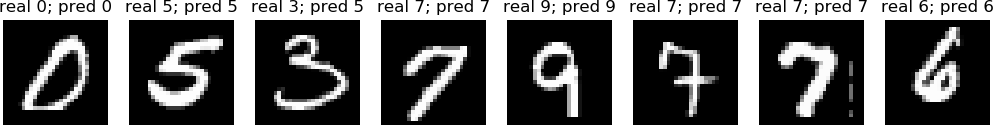
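Printing `c` before the session runs only shows a symbolic tensor of shape (2, 3); the numbers exist only once `sess.run(c)` evaluates the graph. The value it computes is an ordinary matrix product, which the plain-Python sketch below reproduces. The `matmul` helper here is a hand-written illustration, not TensorFlow's implementation.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists (rows of a times columns of b)."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


a = [[1], [2]]       # shape (2, 1)
b = [[1, 2, 3]]      # shape (1, 3)
c = matmul(a, b)     # shape (2, 3)
print(c)             # [[1, 2, 3], [2, 4, 6]]
```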

4. Model evaluation

- A complete CNN example: two convolution layers plus dropout

import tensorflow as tf, matplotlib.pyplot as mp

from tensorflow.examples.tutorials.mnist import input_data

"""Load the dataset: handwritten digits"""

mnist = input_data.read_data_sets('data/', one_hot=True)

"""Convolutional neural network"""

# Convolution, ReLU, pooling

def conv(X, channels, filters):

    W_conv = tf.Variable(tf.truncated_normal([5, 5, channels, filters], stddev=0.1))

    b_conv = tf.Variable(tf.constant(.1, shape=[filters]))

    h_conv = tf.nn.conv2d(input=X, filter=W_conv, strides=[1, 1, 1, 1], padding='SAME') + b_conv

    h_relu = tf.nn.relu(h_conv)

    return tf.nn.max_pool(h_relu, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

# Input layer

X = tf.placeholder('float', [None, 28, 28, 1])

y = tf.placeholder('float', [None, 10])

# Two convolution blocks

h_pool1 = conv(X, 1, 32)

h_pool2 = conv(h_pool1, 32, 64)

# Flatten layer

h_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])

# Fully connected layer 1

W_fc1 = tf.Variable(tf.truncated_normal([7 * 7 * 64, 512], stddev=.1))

b_fc1 = tf.Variable(tf.constant(.1, shape=[512]))

h_fc1 = tf.nn.relu(tf.matmul(h_flat, W_fc1) + b_fc1)

# Dropout to reduce overfitting

keep_prob = tf.placeholder('float')

h_drop = tf.nn.dropout(h_fc1, keep_prob)

# Fully connected layer 2

W_fc2 = tf.Variable(tf.truncated_normal([512, 10], stddev=.1))

b_fc2 = tf.Variable(tf.constant(.1, shape=[10]))

h_fc2 = tf.matmul(h_drop, W_fc2) + b_fc2

# Softmax cross-entropy loss (mean over the batch)

h_softmax = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=h_fc2))

# Adam optimizer

optimizer = tf.train.AdamOptimizer().minimize(h_softmax)

# Accuracy

prediction = tf.equal(tf.argmax(h_fc2, 1), tf.argmax(y, 1))

accuracy = tf.reduce_mean(tf.cast(prediction, 'float'))

"""Run and predict"""

sess = tf.InteractiveSession()

sess.run(tf.global_variables_initializer())

batch_size = 50

for i in range(101):

    batch = mnist.train.next_batch(batch_size)

    inputs = batch[0].reshape([batch_size, 28, 28, 1])

    labels = batch[1]

    feed_dict = {X: inputs, y: labels, keep_prob: .9}  # dropout active while training

    optimizer.run(feed_dict, sess)

    if i % 10 == 0:

        eval_dict = {X: inputs, y: labels, keep_prob: 1.}  # dropout disabled for evaluation

        loss = h_softmax.eval(eval_dict, sess)

        accu = accuracy.eval(eval_dict, sess)

        print('step %3d; loss %.2f; accuracy %g' % (i, loss, accu))

    if i == 100:

        realities = tf.argmax(y, 1).eval(eval_dict, sess)

        predictions = tf.argmax(h_fc2, 1).eval(eval_dict, sess)

        for j in range(8):  # separate index so the training counter i is not shadowed

            mp.subplot(1, 8, j + 1)

            mp.axis('off')

            mp.imshow(inputs[j].reshape(28, 28), cmap=mp.get_cmap('gray'))

            mp.title('real %d; pred %d' % (realities[j], predictions[j]))

        mp.show()

step 0; loss 6.68; accuracy 0.22

step 10; loss 2.36; accuracy 0.32

step 20; loss 1.52; accuracy 0.58

step 30; loss 0.93; accuracy 0.76

step 40; loss 0.54; accuracy 0.8

step 50; loss 0.43; accuracy 0.88

step 60; loss 0.20; accuracy 0.98

step 70; loss 0.47; accuracy 0.86

step 80; loss 0.39; accuracy 0.92

step 90; loss 0.28; accuracy 0.92

step 100; loss 0.21; accuracy 0.96
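To put the loss values in the log above into perspective, it helps to compute softmax cross-entropy by hand. For 10 classes, a model whose logits are all equal assigns probability 1/10 to every digit, so its loss is ln(10), roughly 2.30. The loss of 2.36 at step 10 is close to that value, which suggests (as a reading of the log, not a statement by the author) that the network is still near chance level at that point. The helper names below are illustrative and not TensorFlow's API.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(one_hot_label, logits):
    """Cross-entropy between a one-hot label and softmax(logits)."""
    probs = softmax(logits)
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs))


label = [0.0] * 10
label[3] = 1.0                                    # true class is digit 3

uniform_loss = cross_entropy(label, [0.0] * 10)   # all logits equal: pure guessing
confident_logits = [0.0] * 10
confident_logits[3] = 5.0                         # strong, correct preference for class 3
confident_loss = cross_entropy(label, confident_logits)

print(round(uniform_loss, 4))                     # 2.3026, i.e. ln(10)
print(confident_loss < uniform_loss)              # True
```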

5. Glossary

| English | Chinese |
|---|---|
| convolution | 卷积 |
| placeholder | 占位符 |
| constant | 常量 |
| stride | 步幅 |
| optimize | 优化 |
| truncate | 截短 |