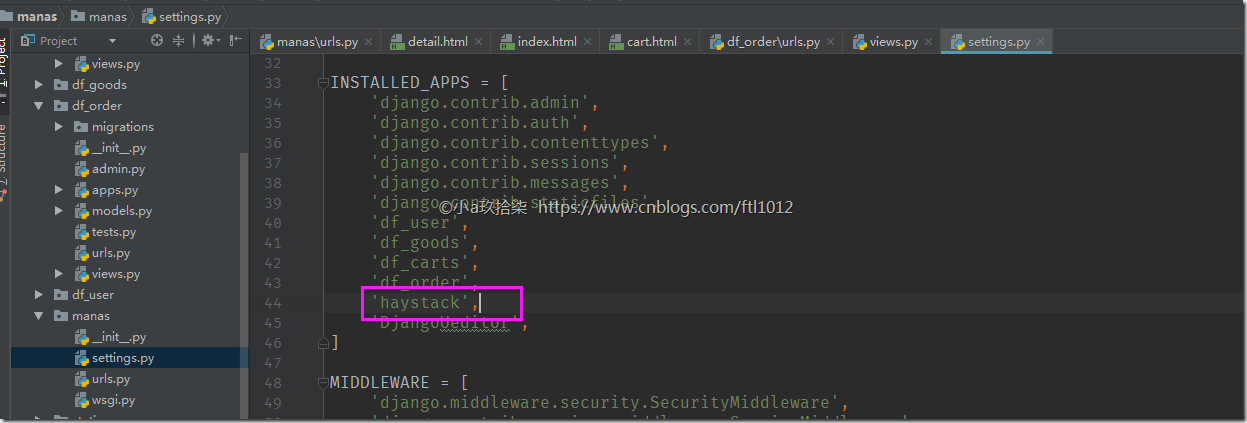

Install the following packages one at a time with pip.

haystack: a framework for full-text search

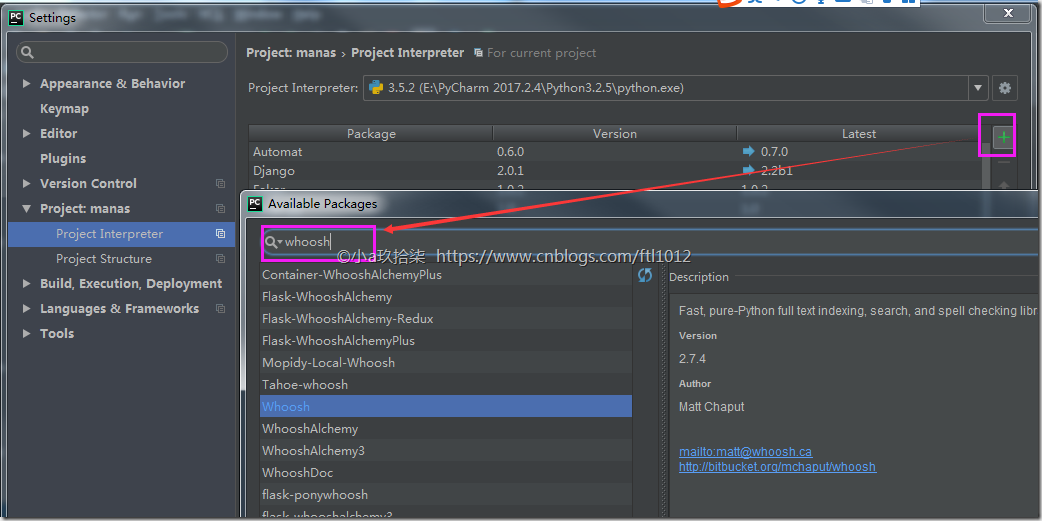

whoosh: a full-text search engine written in pure Python

jieba: a free Chinese word-segmentation package

    pip install django-haystack
    pip install whoosh
    pip install jieba
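
As a quick sanity check after installation, the sketch below reports which of the three packages cannot be found on the import path. The helper name `missing_packages` is made up for illustration, and the snippet assumes Python 3; `haystack` is the module name that the django-haystack package installs.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names that cannot be found on the import path."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # django-haystack is imported as "haystack"
    missing = missing_packages(["haystack", "whoosh", "jieba"])
    print("missing:", missing if missing else "none")
```

`find_spec` only locates a top-level module without importing it, so the check does not need a configured Django project.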

Create the folder whoosh_index

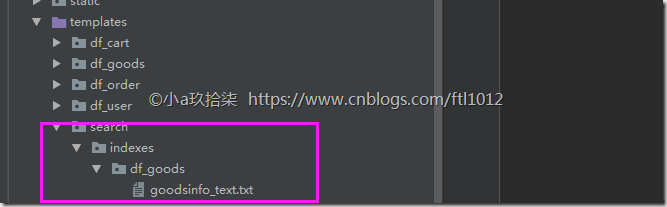

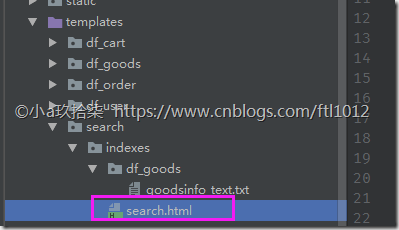

Create the folders: templates/search, templates/search/indexes, templates/search/indexes/df_goods
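
The folders named so far can also be created from a script. This is a minimal sketch, assuming that both whoosh_index and templates sit directly under the project root; the function name `create_search_dirs` and its parameters are hypothetical.

```python
from pathlib import Path


def create_search_dirs(project_root, app_label="df_goods"):
    """Create whoosh_index and the nested template folders; return the paths."""
    root = Path(project_root)
    dirs = [
        root / "whoosh_index",
        root / "templates" / "search" / "indexes" / app_label,
    ]
    for d in dirs:
        # parents=True also creates templates/, templates/search/ and
        # templates/search/indexes/ on the way down
        d.mkdir(parents=True, exist_ok=True)
    return dirs


if __name__ == "__main__":
    for path in create_search_dirs("."):
        print("created", path)
```

Because `exist_ok=True` is set, running the script a second time leaves existing folders untouched.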

In the app directory of the model to be indexed, create the following file (the file name is fixed):

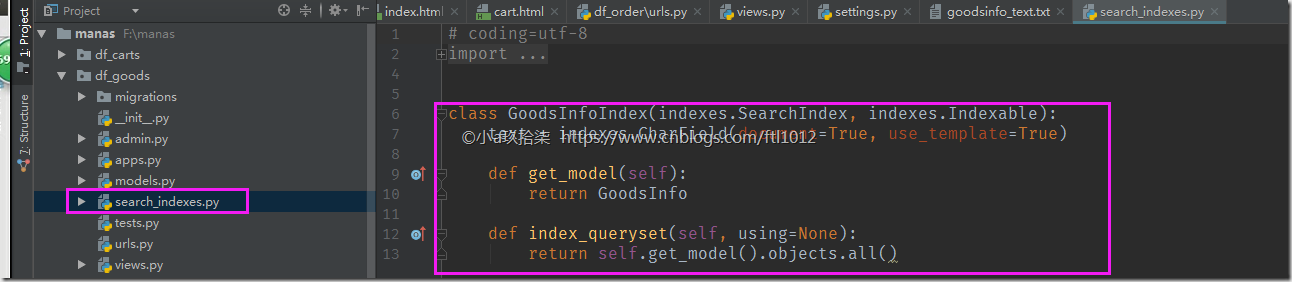

df_goods/search_indexes.py

    # coding=utf-8
    from haystack import indexes

    from .models import GoodsInfo


    class GoodsInfoIndex(indexes.SearchIndex, indexes.Indexable):
        # Main document field; its content is rendered from a template file
        text = indexes.CharField(document=True, use_template=True)

        def get_model(self):
            # Return the model class to index
            return GoodsInfo

        def index_queryset(self, using=None):
            # Index every GoodsInfo record
            return self.get_model().objects.all()

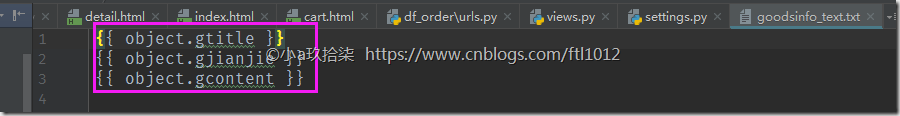

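Create the template file (fixed naming format: model class name in lowercase, followed by _text.txt): templates/search/indexes/df_goods/goodsinfo_text.txt

File contents: the object attributes to be indexed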

{{ object.gtitle }}

{{ object.gjianjie }}

{{ object.gcontent }}
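
The naming rule for this template can be sketched as a small function: the path combines the app label, the lower-cased model class name, and the name of the index field (`text` in `GoodsInfoIndex`). The helper `index_template_path` is hypothetical and only mirrors the convention described here; it is not a haystack API.

```python
def index_template_path(app_label, model_name, field_name="text"):
    """Build the template path expected for a use_template index field."""
    return "search/indexes/{}/{}_{}.txt".format(
        app_label, model_name.lower(), field_name)


print(index_template_path("df_goods", "GoodsInfo"))
# search/indexes/df_goods/goodsinfo_text.txt
```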

Create the search results page:

templates/search/search.html

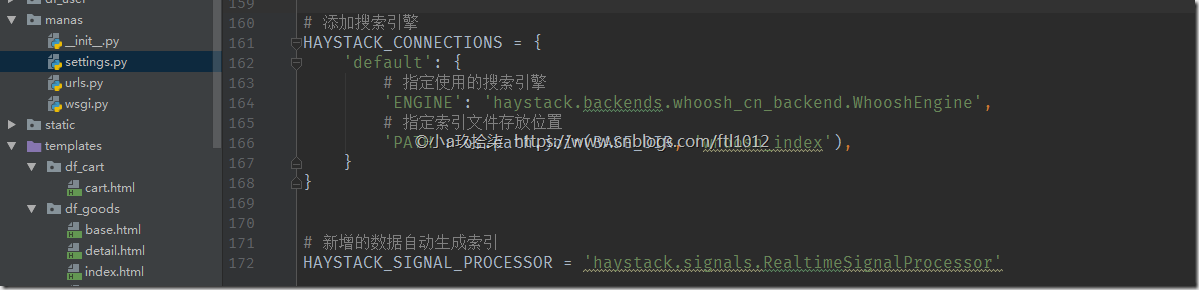

manas/settings.py

    # Add the search engine
    HAYSTACK_CONNECTIONS = {
        'default': {
            # Search engine backend to use
            'ENGINE': 'haystack.backends.whoosh_cn_backend.WhooshEngine',
            # Where the index files are stored
            'PATH': os.path.join(BASE_DIR, 'whoosh_index'),
        }
    }

    # Automatically build the index for newly added data
    HAYSTACK_SIGNAL_PROCESSOR = 'haystack.signals.RealtimeSignalProcessor'

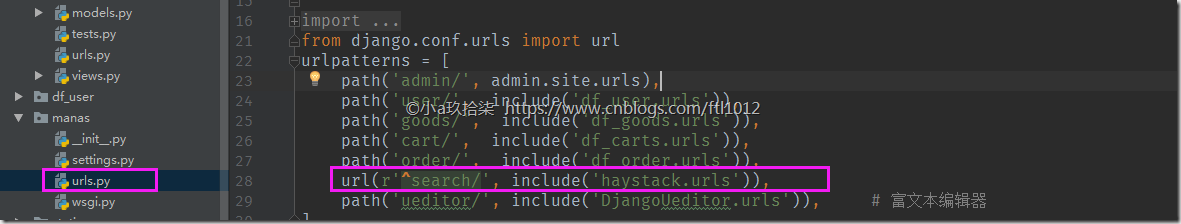

manas/urls.py

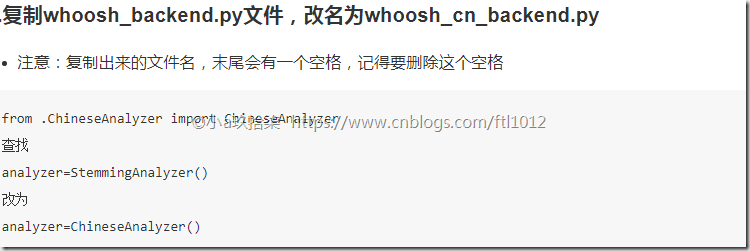

Create the file ChineseAnalyzer.py

- Save it in the backends folder of the haystack installation. On Linux the path looks like "/home/python/.virtualenvs/django_py2/lib/python2.7/site-packages/haystack/backends"

- On Windows the path looks like C:\Users\Administrator\AppData\Roaming\Python\Python35\site-packages\haystack\backends

    import jieba
    from whoosh.analysis import Tokenizer, Token


    class ChineseTokenizer(Tokenizer):
        def __call__(self, value, positions=False, chars=False,
                     keeporiginal=False, removestops=True,
                     start_pos=0, start_char=0, mode='', **kwargs):
            # A single Token object is reused and updated for each word
            t = Token(positions, chars, removestops=removestops, mode=mode,
                      **kwargs)
            # Full mode: emit every word jieba can find in the text
            seglist = jieba.cut(value, cut_all=True)
            for w in seglist:
                t.original = t.text = w
                t.boost = 1.0
                if positions:
                    t.pos = start_pos + value.find(w)
                if chars:
                    t.startchar = start_char + value.find(w)
                    t.endchar = start_char + value.find(w) + len(w)
                yield t


    def ChineseAnalyzer():
        # Analyzer factory to be used by the whoosh backend
        return ChineseTokenizer()
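
One caveat about the tokenizer above: `value.find(w)` always returns the first occurrence of `w`, so a word that appears several times is reported at the same offset every time. The sketch below shows one possible way to track offsets instead. It works on a plain list of words so that it runs without jieba, and the helper `token_offsets` is hypothetical rather than part of whoosh or jieba.

```python
def token_offsets(value, words):
    """Yield (word, start, end), scanning forward so repeats get distinct offsets."""
    cursor = 0
    for w in words:
        start = value.find(w, cursor)
        if start == -1:
            # Overlapping tokens (as produced by cut_all=True) may begin
            # before the cursor, so retry from the start of the text.
            start = value.find(w)
        if start == -1:
            continue  # the word does not occur in value at all
        yield w, start, start + len(w)
        cursor = start + 1


text = "foo bar foo"
print(list(token_offsets(text, ["foo", "bar", "foo"])))
# [('foo', 0, 3), ('bar', 4, 7), ('foo', 8, 11)]
```

The fallback search is a heuristic: with heavily overlapping tokens it can still pick an earlier occurrence, so treat it as a starting point rather than a complete fix.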

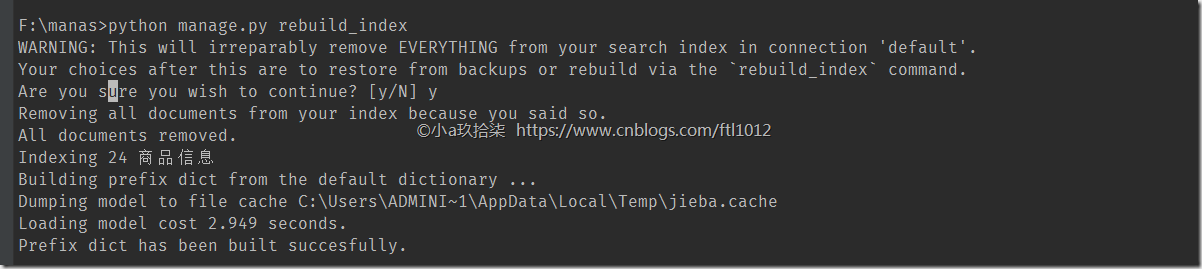

Build the index

    python manage.py rebuild_index

Add a search box to run searches

    <form method='get' action="/search/" target="_blank">
        <input type="text" name="q">
        <input type="submit" value="Search">
    </form>
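
To make clear what this form sends, the sketch below builds the URL that a GET form with a single `q` field would request. The function `search_url` is hypothetical; the field name `q` and the action `/search/` are taken from the form above.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def search_url(keyword, action="/search/"):
    """Return the URL a GET form with a single "q" field would request."""
    return action + "?" + urlencode({"q": keyword})


url = search_url("phone")
print(url)  # /search/?q=phone
print(parse_qs(urlsplit(url).query)["q"])  # ['phone']
```

Non-ASCII keywords such as Chinese text are percent-encoded as UTF-8 by `urlencode` and decode back to the original string on the server side.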
