Kafka New Consumer API基于Kafka自身的group coordination protocol(老版本基于Zookeeper),new Consumer具有以下优势

1、合并过去High Level和Low Level的API,提供一个同时支持group coordination和lower level access

2、使用纯Java重写API,运行时不再依赖Scala和Zookeeper

3、更安全:Kafka0.9提供的security extensions,只支持new consumer

4、支持fault-tolerant group of consumer processes,老版本强依赖于zookeeper来实现,由于其中的逻辑极其复杂,所以其他编程语言实现这个特性非常困难,目前kafka官方已经将此特性在C client上实现了

虽然new consumer重构API并且使用新的coordination protocol,但是概念并没有根本改变,所以熟悉old consumer的用户不会难以理解new consumer。然而,需要额外关心下group management 和threading model。

Getting Started

基本概念:kafka一个topic中包含多个partition,每个partition只会分配给Consumer Group中的一个consumer member (即consumer thread)。old consumer通过zookeeper实现group management,new consumer由kafka broker负责,具体实现方式是通过为每个group分配一个broker作为其group coordinator,group coordinator负责关系group的状态,主要负责当group中member增加或移除,或者topic metadata更新时调解partition assignment ,reassigning partitions的动作称为rebalancing the group。

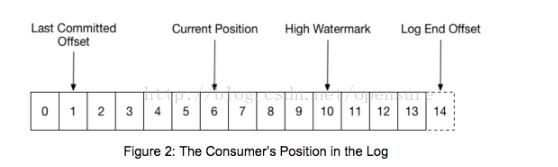

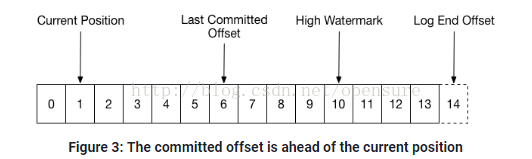

当一个Consumer Group初始化后,每个consumer member开始从partition顺序读取,consumer member会定期commit offset。如下图中,当前consumer member读取到offset 6处并且最后一个commit是在offset 1处。如果此时该consumer 挂了,group coordinator会分配一个新的consumer member从offset 1开始读取,我们可以发现,新接管的consumer member会再一次重复读取offset 1~offset 6的message。

另外,上图的High Watermark代表partition当前最后一个成功拷贝到所有replica的offset,在consumer视角中,最多只能读取到High Watermark所在的offset,即上图中的offset 10中,即使后面还是offset11~14,由于他们尚未完成全部replica,所以暂时无法读取,这种机制是为了防止consumer读取到unreplicated message,因为这些message之后可能被丢失(which could later be lost)

Configuration and Initialization

最简化配置

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("group.id", "consumer-tutorial");

props.put("key.deserializer", StringDeserializer.class.getName());

props.put("value.deserializer", StringDeserializer.class.getName());

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);bootstrap.servers指定broker的地址,不需要全部指定,内部会自动发现

Topic Subscription

consumer.subscribe(Arrays.asList(“foo”, “bar”));subscribe topic之,consumer coordinator会自动分配partition,也可以使用assign API手动分配,但不会混合使用自动和手动两种模式。

subscribe 不是incremental的,即当subscribe 了foo和bar后,后续希望再subscribe一个 topic3,必须全量指定,即(“foo”, “bar”, "topic3")

Basic Poll Loop

subscribing topic之后,需要启动event loop,获取partition assignment并fetching data。听起来挺复杂,实际只需要在loop中调用poll。

poll函数需要传入一个timeout参数,由于loop本身是无限循环的,所以就需要有中断event loop的方法,有两种:

1、较小的timeout, 通过使用标志位来控制

2、较长的timeout, 调用consumer.wakeup()来退出循环

使用了一个相对较小的timeout,来确保在关闭消费者时,不会有太多的延迟

ConsumerRecords<String, String> records = consumer.poll(1000);下面的代码中,我们更改了timeout为Long.MAX_VALUE,意味着消费者会无限制地阻塞,直到有下一条记录返回的时候.

这时如果使用标志位也是无法退出循环的,所以只能由触发关闭的线程调用consumer.wakeup来中断进行中的poll,

这个调用会导致抛出WakeupException. wakeup在其他线程中调用是安全的(消费者线程中就这个方法是线程安全的).

注意:如果当前没有活动的poll,这个异常会在下次调用是才会抛出.本例中我们捕获了这个异常防止它传播给上层调用.

ConsumerRecords<String, String> records = consumer.poll(Long.MAX_VALUE);kafka consumer polling timeout:http://stackoverflow.com/questions/41030854/kafka-consumer-polling-timeout

Putting it all Together

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.errors.WakeupException;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

public class ConsumerLoop implements Runnable {

private final KafkaConsumer<String, String> consumer;

private final List<String> topics;

private final int id;

public ConsumerLoop(int id,

String groupId,

List<String> topics) {

this.id = id;

this.topics = topics;

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("group.id", groupId);

props.put("key.deserializer", StringDeserializer.class.getName());

props.put("value.deserializer", StringDeserializer.class.getName());

this.consumer = new KafkaConsumer<>(props);

}

@Override

public void run() {

try {

consumer.subscribe(topics);

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Long.MAX_VALUE);

for (ConsumerRecord<String, String> record : records) {

Map<String, Object> data = new HashMap<>();

data.put("partition", record.partition());

data.put("offset", record.offset());

data.put("value", record.value());

System.out.println(this.id + ": " + data);

}

}

} catch (WakeupException e) {

// ignore for shutdown

} finally {

consumer.close();

}

}

public void shutdown() {

consumer.wakeup();

}

}public static void main(String[] args) {

int numConsumers = 3;

String groupId = "consumer-tutorial-group"

List<String> topics = Arrays.asList("consumer-tutorial");

ExecutorService executor = Executors.newFixedThreadPool(numConsumers);

final List<ConsumerLoop> consumers = new ArrayList<>();

for (int i = 0; i < numConsumers; i++) {

ConsumerLoop consumer = new ConsumerLoop(i, groupId, topics);

consumers.add(consumer);

executor.submit(consumer);

}

Runtime.getRuntime().addShutdownHook(new Thread() {

@Override

public void run() {

for (ConsumerLoop consumer : consumers) {

consumer.shutdown();

}

executor.shutdown();

try {

executor.awaitTermination(5000, TimeUnit.MILLISECONDS);

} catch (InterruptedException e) {

e.printStackTrace;

}

}

});

}Consumer Liveness

consumer member被分配到partition后,会得到该paritition的lock,持有lock的期间,不会有其他consumer member读取同一个partition,但是一旦出于某些原因导致consumer member crash,就需要一种机制释放lock,然后将partition分配给一个新的consumer member。

group coordination protocol通过使用心跳机制解决该问题,调用poll获取数据同时会发送heartbeat,所以如果一旦应用停止调用poll获取数据,通过session.timeout.ms参数控制coordinator多长时间后未收到heartbeat就认为该consumer member crash,默认30秒。

需要注意的一点,如果单次poll期间对message处理的时间超过了session.timeout.ms就会被判定为heartbeat timeout,但是不建议将该值设置的过长,因为这会导致coordinator花费更长的时间检测到consumer crash

Delivery Semantics

当Consumer Group创建后,每个consumer member从partition的哪个offset开始读取是由auto.offset.reset控制的。如果consumer member在commit前crash了,那么下一个接手的member会重复读取一部分message。

commit默认是自动的(enable.auto.commit ),consumer周期性的(auto.commit.interval.ms)commit offset,如果希望手动commit,首先得取消自动commit

props.put(“enable.auto.commit”, “false”);使用commit api的关键点在于如何结合poll loop,它决定了Delivery Semantics

1、at least once

当commit policy保证last commit offset一定在当前offset之前

我们可以发现,自动commit时,就是使用的at least once,因为只有当所有message返回给应用后才会调用commit。

API层面是在当前poll loop的message处理完后调用commit

try {

while (running) {

ConsumerRecords<String, String> records = consumer.poll(1000);

for (ConsumerRecord<String, String> record : records)

System.out.println(record.offset() + ": " + record.value());

try {

consumer.commitSync();

} catch (CommitFailedException e) {

// application specific failure handling

}

}

} finally {

consumer.close();

}2、at most once

当commit policy保证last commit offset一定在当前offset之后

使用at most once交付语义时,如果consumer尚未完成当前poll loop的所有message前crash了,则会丢失未处理的那部分数据,因为新接管的consumer不会感知到这部分message。

API层面是在当前poll loop的message开始处理前调用commit

try {

while (running) {

ConsumerRecords<String, String> records = consumer.poll(1000);

try {

consumer.commitSync();

for (ConsumerRecord<String, String> record : records)

System.out.println(record.offset() + ": " + record.value());

} catch (CommitFailedException e) {

// application specific failure handling

}

}

} finally {

consumer.close();

}通过使用commit API,我们可以完全控制处理重复数据的数量。在极端情况下,可以为每条message commit一次,但是会影响吞吐量。如下

try {

while (running) {

ConsumerRecords<String, String> records = consumer.poll(1000);

try {

for (ConsumerRecord<String, String> record : records) {

System.out.println(record.offset() + ": " + record.value());

consumer.commitSync(Collections.singletonMap(record.partition(), new OffsetAndMetadata(record.offset() + 1)));

}

} catch (CommitFailedException e) {

// application specific failure handling

}

}

} finally {

consumer.close();

}commitSync方法接受一个map类型的参数。commit API允许你添加一些额外的metadata,比如说commit的时间、consumer所在的host,或任意其他,例子中,没有添加额外参数。

Instead of committing on every message received, a more reasonably policy might be to commit offsets as you finish handling the messages from each partition. The ConsumerRecords collection provides access to the set of partitions contained in it and to the messages for each partition. The example below demonstrates this policy.

try {

while (running) {

ConsumerRecords<String, String> records = consumer.poll(Long.MAX_VALUE);

for (TopicPartition partition : records.partitions()) {

List<ConsumerRecord<String, String>> partitionRecords = records.records(partition);

for (ConsumerRecord<String, String> record : partitionRecords)

System.out.println(record.offset() + ": " + record.value());

long lastoffset = partitionRecords.get(partitionRecords.size() - 1).offset();

consumer.commitSync(Collections.singletonMap(partition, new OffsetAndMetadata(lastoffset + 1)));

}

}

} finally {

consumer.close();

}上面都是使用同步API进行commit,consumer 同样支持异步commit:commitAsync,使用异步commit可以带来更高的吞吐量,代价是增加了发现错误的延时

try {

while (running) {

ConsumerRecords<String, String> records = consumer.poll(1000);

for (ConsumerRecord<String, String> record : records)

System.out.println(record.offset() + ": " + record.value());

consumer.commitAsync(new OffsetCommitCallback() {

@Override

public void onComplete(Map<TopicPartition, OffsetAndMetadata> offsets,

Exception exception) {

if (exception != null) {

// application specific failure handling

}

}

});

}

} finally {

consumer.close();

}commitAsync提供了一个回调函数,consumer完成commit后(无论失败还是成功)会调用该函数,如果不需要它,可以call commitAsync with no arguments.

Consumer Group Inspection

当激活一个consumer group后,可以在命令行查看

# bin/kafka-consumer-groups.sh –new-consumer –describe –group consumer-tutorial-group –bootstrap-server localhost:9092返回类似如下

GROUP, TOPIC, PARTITION, CURRENT OFFSET, LOG END OFFSET, LAG, OWNER

consumer-tutorial-group, consumer-tutorial, 0, 6667, 6667, 0, consumer-1_/127.0.0.1

consumer-tutorial-group, consumer-tutorial, 1, 6667, 6667, 0, consumer-2_/127.0.0.1

consumer-tutorial-group, consumer-tutorial, 2, 6666, 6666, 0, consumer-3_/127.0.0.1管理员可以通过该功能检查consumer group是否跟上producers

Using Manual Assignment

new consumer同样支持old consumer low level API,即不使用consumer group。使用new consumer,你只需要分配你希望读取的partition,然后开始polling message。

List<TopicPartition> partitions = new ArrayList<>();

for (PartitionInfo partition : consumer.partitionsFor(topic))

partitions.add(new TopicPartition(topic, partition.partition()));

consumer.assign(partitions);与subscribe类似,assign必须传完整的partition list,一旦partition assigned,poll loop机制就跟使用consumer group时完全一样了。

需要注意的是,无论使用simple consumer 或a consumer group,都必须指定一个合理的不冲突的group.id,如果simple consumer尝试使用一个active consumer group正在使用的group.id进行commit,会被coordinator拒绝,并抛出CommitFailedException。然而,两个simple consumer使用同一个group.id不会报错。

Conclusion

new consumer带来了一些列的好处,如cleaner API, better security, and reduced dependencies。本文主要关注点在于poll semantics和使用commit API 来控制delivery semantics。