Dataset: https://download.csdn.net/download/fanzonghao/10940440

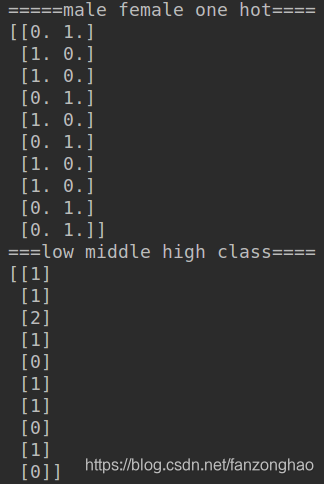

1. Encoding categorical and ordinal features (one-hot)

    import numpy as np
    from sklearn.preprocessing import LabelEncoder, OneHotEncoder


    def one_hot():
        # Toy data: column 0 is a categorical feature, column 1 an ordinal one
        X_train = np.array([['male', 'low'],
                            ['female', 'low'],
                            ['female', 'middle'],
                            ['male', 'low'],
                            ['female', 'high'],
                            ['male', 'low'],
                            ['female', 'low'],
                            ['female', 'high'],
                            ['male', 'low'],
                            ['male', 'high']])
        X_test = np.array([['male', 'low'],
                           ['male', 'low'],
                           ['female', 'middle'],
                           ['female', 'low'],
                           ['female', 'high']])

        # Fit the encoders on the training set
        label_enc1 = LabelEncoder()    # encodes male/female as integers
        one_hot_enc = OneHotEncoder()  # turns the integer codes into one-hot vectors
        # Encodes low/middle/high as integers. LabelEncoder sorts labels
        # alphabetically, so the codes are high=0, low=1, middle=2.
        label_enc2 = LabelEncoder()

        # reshape(-1, 1) turns the 1-D codes into a 2-D column vector
        tr_feat1_tmp = label_enc1.fit_transform(X_train[:, 0]).reshape(-1, 1)
        tr_feat1 = one_hot_enc.fit_transform(tr_feat1_tmp)
        tr_feat1 = tr_feat1.toarray()  # sparse matrix -> dense array
        print('=====male female one hot====')
        print(tr_feat1)

        tr_feat2 = label_enc2.fit_transform(X_train[:, 1]).reshape(-1, 1)
        print('===low middle high class====')
        print(tr_feat2)

        X_train_enc = np.hstack((tr_feat1, tr_feat2))
        print('=====train encode====')
        print(X_train_enc)

        # Encode the test set with the encoders fitted on the training set
        te_feat1_tmp = label_enc1.transform(X_test[:, 0]).reshape(-1, 1)
        te_feat1 = one_hot_enc.transform(te_feat1_tmp)
        te_feat1 = te_feat1.toarray()
        te_feat2 = label_enc2.transform(X_test[:, 1]).reshape(-1, 1)
        X_test_enc = np.hstack((te_feat1, te_feat2))
        print('====test encode====')
        print(X_test_enc)

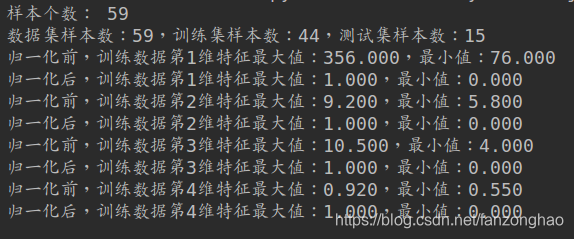
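One thing to watch in the example above: `LabelEncoder` assigns codes in alphabetical order, so `low`/`middle`/`high` do not come out in their natural order. As a possible alternative (not the approach used in this post), the sketch below uses `OrdinalEncoder` with an explicit category order and lets `ColumnTransformer` combine it with a one-hot encoding of the gender column. The smaller arrays and the transformer names `'gender'` and `'level'` are made up for illustration.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# Small stand-in for the toy data above (assumed, shortened for illustration)
X_train = np.array([['male', 'low'],
                    ['female', 'middle'],
                    ['female', 'high'],
                    ['male', 'low']])
X_test = np.array([['female', 'low'],
                   ['male', 'high']])

encoder = ColumnTransformer(
    transformers=[
        # Column 0: one-hot; categories are sorted, so female -> [1, 0], male -> [0, 1]
        ('gender', OneHotEncoder(), [0]),
        # Column 1: explicit order, so low=0, middle=1, high=2
        ('level', OrdinalEncoder(categories=[['low', 'middle', 'high']]), [1]),
    ],
    sparse_threshold=0,  # always return a dense array
)

# Fit on the training set only, then reuse the fitted encoder on the test set
X_train_enc = encoder.fit_transform(X_train)
X_test_enc = encoder.transform(X_test)
print(X_train_enc)
print(X_test_enc)
```

The last column now preserves the ordering low < middle < high, which matters for models that treat the encoded value as a magnitude.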

2. Feature normalization

    import numpy as np
    import matplotlib.pyplot as plt
    import pandas as pd
    import seaborn as sns
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import MinMaxScaler
    from mpl_toolkits.mplot3d import Axes3D
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.model_selection import cross_val_score


    def load_data():
        # Load the dataset
        fruits_df = pd.read_table('fruit_data_with_colors.txt')
        # print(fruits_df)
        print('Number of samples:', len(fruits_df))

        # Map each target label to its fruit name
        fruit_name_dict = dict(zip(fruits_df['fruit_label'], fruits_df['fruit_name']))

        # Split into training and test sets
        X = fruits_df[['mass', 'width', 'height', 'color_score']]
        y = fruits_df['fruit_label']
        X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=1/4, random_state=0)
        print('Total samples: {}, training samples: {}, test samples: {}'.format(
            len(X), len(X_train), len(X_test)))
        # print(X_train)
        return X_train, X_test, y_train, y_test

#特征归一化

def minmax_scaler(X_train,X_test):

scaler = MinMaxScaler()

X_train_scaled = scaler.fit_transform(X_train)

# print(X_train_scaled)

#此时scaled得到一个最小最大值,对于test直接transform就行

X_test_scaled = scaler.transform(X_test)

for i in range(4):

print('归一化前,训练数据第{}维特征最大值:{:.3f},最小值:{:.3f}'.format(i + 1,

X_train.iloc[:, i].max(),

X_train.iloc[:, i].min()))

print('归一化后,训练数据第{}维特征最大值:{:.3f},最小值:{:.3f}'.format(i + 1,

X_train_scaled[:, i].max(),

X_train_scaled[:, i].min()))

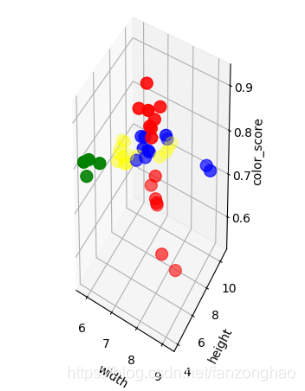

return X_train_scaled,X_test_scaleddef show_3D(X_train,X_train_scaled):

label_color_dict = {1: 'red', 2: 'green', 3: 'blue', 4: 'yellow'}

colors = list(map(lambda label: label_color_dict[label], y_train))

print(colors)

print(len(colors))

fig = plt.figure()

ax1 = fig.add_subplot(111, projection='3d', aspect='equal')

ax1.scatter(X_train['width'], X_train['height'], X_train['color_score'], c=colors, marker='o', s=100)

ax1.set_xlabel('width')

ax1.set_ylabel('height')

ax1.set_zlabel('color_score')

plt.show()

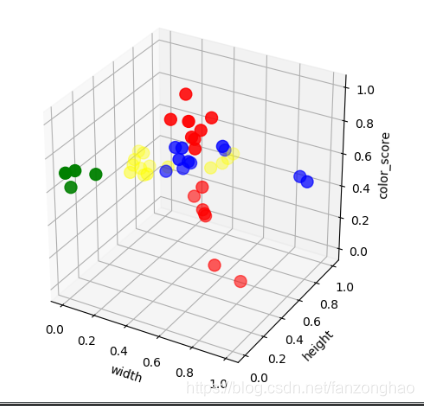

fig = plt.figure()

ax2 = fig.add_subplot(111, projection='3d', aspect='equal')

ax2.scatter(X_train_scaled[:, 1], X_train_scaled[:, 2], X_train_scaled[:, 3], c=colors, marker='o', s=100)

ax2.set_xlabel('width')

ax2.set_ylabel('height')

ax2.set_zlabel('color_score')

plt.show()
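To make the min-max step in this section concrete, here is a minimal sketch that reproduces `MinMaxScaler` by hand with the formula (x - min) / (max - min), where min and max come from the training set only. The numbers are invented for illustration and are not taken from the fruit dataset.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up values: two features on very different scales
X_train = np.array([[100.0, 6.0],
                    [200.0, 8.0],
                    [150.0, 7.0]])
X_test = np.array([[250.0, 5.0]])

scaler = MinMaxScaler().fit(X_train)

# Same computation by hand, using the training min and max per column
col_min = X_train.min(axis=0)
col_max = X_train.max(axis=0)
manual_train = (X_train - col_min) / (col_max - col_min)
manual_test = (X_test - col_min) / (col_max - col_min)

print(scaler.transform(X_train))
print(scaler.transform(X_test))
```

Because the test row lies outside the training range, its scaled values fall outside [0, 1]. That is expected when the scaler is fitted on the training set alone.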

3. Cross-validation

    # Cross-validation
    # The test set is passed in explicitly so the final score can be computed here
    def cross_val(X_train_scaled, y_train, X_test_scaled, y_test):
        k_range = [2, 4, 5, 10]
        cv_scores = []
        for k in k_range:
            knn = KNeighborsClassifier(n_neighbors=k)
            scores = cross_val_score(knn, X_train_scaled, y_train, cv=3)
            cv_score = np.mean(scores)
            print('k={}, validation accuracy={:.3f}'.format(k, cv_score))
            cv_scores.append(cv_score)

        # Pick the k with the highest mean validation accuracy
        print('np.argmax(cv_scores)=', np.argmax(cv_scores))
        best_k = k_range[np.argmax(cv_scores)]
        best_knn = KNeighborsClassifier(n_neighbors=best_k)
        best_knn.fit(X_train_scaled, y_train)
        print('Test accuracy:', best_knn.score(X_test_scaled, y_test))

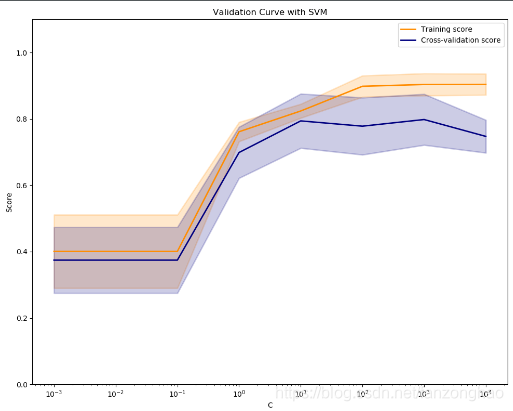
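The code above scales the whole training set once and then cross-validates, so each validation fold has already influenced the scaler's min and max. A common variation, sketched below, wraps the scaler and the classifier in a pipeline so that the scaler is refitted inside every fold. The iris dataset is used here only as a stand-in for the fruit data, which is an assumption of this example.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Stand-in data (assumption): iris instead of fruit_data_with_colors.txt
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=1/4, random_state=0)

k_range = [2, 4, 5, 10]
cv_scores = []
for k in k_range:
    # The scaler is fitted on the training part of each fold only
    model = make_pipeline(MinMaxScaler(), KNeighborsClassifier(n_neighbors=k))
    cv_scores.append(cross_val_score(model, X_train, y_train, cv=3).mean())

# Refit the best configuration on the full training set, then score once on the test set
best_k = k_range[int(np.argmax(cv_scores))]
best_model = make_pipeline(MinMaxScaler(), KNeighborsClassifier(n_neighbors=best_k))
best_model.fit(X_train, y_train)
test_accuracy = best_model.score(X_test, y_test)
print('best k:', best_k, 'test accuracy:', test_accuracy)
```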

4. Using validation_curve to see how a hyperparameter affects training and validation scores

    # Use validation_curve to see how a hyperparameter affects training and validation scores
    # The test set is passed in explicitly so the final score can be computed here
    def show_effect(X_train_scaled, y_train, X_test_scaled, y_test):
        from sklearn.model_selection import validation_curve
        from sklearn.svm import SVC

        c_range = [1e-3, 1e-2, 0.1, 1, 10, 100, 1000, 10000]
        train_scores, test_scores = validation_curve(SVC(kernel='linear'), X_train_scaled, y_train,
                                                     param_name='C', param_range=c_range,
                                                     cv=5, scoring='accuracy')
        print(train_scores)
        print(train_scores.shape)  # (number of C values, number of folds)

        # Mean and standard deviation across folds for each value of C
        train_scores_mean = np.mean(train_scores, axis=1)
        # print(train_scores_mean)
        train_scores_std = np.std(train_scores, axis=1)
        test_scores_mean = np.mean(test_scores, axis=1)
        test_scores_std = np.std(test_scores, axis=1)

        plt.figure(figsize=(10, 8))
        plt.title('Validation Curve with SVM')
        plt.xlabel('C')
        plt.ylabel('Score')
        plt.ylim(0.0, 1.1)
        lw = 2
        plt.semilogx(c_range, train_scores_mean, label="Training score",
                     color="darkorange", lw=lw)
        plt.fill_between(c_range, train_scores_mean - train_scores_std,
                         train_scores_mean + train_scores_std, alpha=0.2,
                         color="darkorange", lw=lw)
        plt.semilogx(c_range, test_scores_mean, label="Cross-validation score",
                     color="navy", lw=lw)
        plt.fill_between(c_range, test_scores_mean - test_scores_std,
                         test_scores_mean + test_scores_std, alpha=0.2,
                         color="navy", lw=lw)
        plt.legend(loc="best")
        plt.show()

        # According to the plot, C=100 is the best setting for this SVM
        svm_model = SVC(kernel='linear', C=100)
        svm_model.fit(X_train_scaled, y_train)
        print(svm_model.score(X_test_scaled, y_test))

Reading the curve: the scores vary widely at first, then the model stabilizes, and finally it overfits. C=100 is the best setting.
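Instead of reading the best C off the plot, the choice can also be made from the arrays that `validation_curve` returns. The sketch below picks the C with the highest mean cross-validation score. It uses the iris dataset and a shorter C range as stand-ins, both of which are assumptions of this example and not the setup from the post.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split, validation_curve
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC

# Stand-in data (assumption): iris instead of the fruit dataset
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=1/4, random_state=0)
X_train_scaled = MinMaxScaler().fit_transform(X_train)

c_range = [1e-3, 1e-2, 0.1, 1, 10, 100]
train_scores, test_scores = validation_curve(
    SVC(kernel='linear'), X_train_scaled, y_train,
    param_name='C', param_range=c_range, cv=5, scoring='accuracy')

# Both arrays have shape (number of C values, number of folds)
test_scores_mean = test_scores.mean(axis=1)

# np.argmax returns the first maximum, so ties go to the smallest C
best_c = c_range[int(np.argmax(test_scores_mean))]
print('mean CV accuracy per C:', dict(zip(c_range, test_scores_mean.round(3))))
print('best C:', best_c)
```

Preferring the smallest C among ties favors the more strongly regularized model, which is one reasonable tie-breaking rule among several.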

5. Grid search, saving and loading the model

    # The test set is passed in explicitly so the final score can be computed here
    def grid_search(X_train_scaled, y_train, X_test_scaled, y_test):
        from sklearn.model_selection import GridSearchCV
        from sklearn.tree import DecisionTreeClassifier

        parameters = {'max_depth': [3, 5, 7, 9], 'min_samples_leaf': [1, 2, 3, 4]}
        clf = GridSearchCV(DecisionTreeClassifier(), parameters, cv=3, scoring='accuracy')
        print(clf)
        clf.fit(X_train_scaled, y_train)
        print('Best parameters:', clf.best_params_)
        print('Best validation score:', clf.best_score_)

        # Retrieve the best model, refitted on the full training set
        best_model = clf.best_estimator_
        print('Test accuracy:', best_model.score(X_test_scaled, y_test))
        return best_model


    def model_save(best_model):
        # Use pickle
        import pickle

        model_path1 = './trained_model1.pkl'
        # Save the model to disk
        with open(model_path1, 'wb') as f:
            pickle.dump(best_model, f)

    def load_model(X_test_scaled, y_test):
        import pickle

        # Load the saved model
        model_path1 = './trained_model1.pkl'
        with open(model_path1, 'rb') as f:
            model = pickle.load(f)

        # Predict the first test sample
        print('Predicted:', model.predict([X_test_scaled[0, :]]))
        print('Actual:', y_test.values[0])
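The loaded model expects inputs that have already been scaled, so the fitted scaler has to be available wherever predictions are made. One way to handle this, sketched below, is to pickle the scaler and the classifier together as a single pipeline. This is a suggestion, not what the post does. The iris dataset, the fixed `max_depth=3`, and the in-memory `pickle.dumps`/`pickle.loads` round trip are all choices made for this example.

```python
import pickle

import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.tree import DecisionTreeClassifier

# Stand-in data (assumption): iris instead of the fruit dataset
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=1/4, random_state=0)

# Scaler and classifier travel together, so raw features can be passed in later
model = make_pipeline(MinMaxScaler(), DecisionTreeClassifier(max_depth=3, random_state=0))
model.fit(X_train, y_train)

# Round trip through bytes; writing to a file with pickle.dump works the same way
payload = pickle.dumps(model)
restored = pickle.loads(payload)

original_pred = model.predict(X_test)
restored_pred = restored.predict(X_test)
print('Predicted:', restored_pred[:1], 'Actual:', y_test[:1])
```

Only unpickle files from a trusted source, since loading a pickle can execute arbitrary code.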