Copyright notice: this is an original article by the author and may not be reproduced without the author's permission. https://blog.csdn.net/zhangmingbao2016/article/details/81903932

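1. After configuring Kylin and running kylin.sh start, the Kylin pid file is created, but the web UI cannot be reached and the browser reports that the page was not found.

Cause: Kylin's default port 7070 is already in use (check with netstat -nap|grep 7070). Either change Kylin's default port or kill the process that occupies port 7070, then restart Kylin.

2. Building a Cube in Kylin fails with a wrong-path error. The error message is: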
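As a quick cross-check alongside netstat, the following minimal sketch tests whether anything is listening on a given port. The function name port_in_use and the choice of 127.0.0.1 as host are assumptions made for illustration; it only tells you that the port is taken, not which process holds it.

```python
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if a TCP connection to host:port succeeds,
    i.e. some process is already listening there."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(1.0)
        # connect_ex returns 0 on success and an errno value otherwise
        return s.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 7070 is Kylin's default web port, as mentioned above
    print("port 7070 in use:", port_in_use(7070))
```

If this reports the port as taken before Kylin has been started, another process owns it, and netstat -nap can then be used to find its pid.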

java.io.IOException: BulkLoad encountered an unrecoverable problem

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.bulkLoadPhase(LoadIncrementalHFiles.java:534)

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.doBulkLoad(LoadIncrementalHFiles.java:465)

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.doBulkLoad(LoadIncrementalHFiles.java:343)

at org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles.run(LoadIncrementalHFiles.java:1069)

at org.apache.kylin.engine.mr.MRUtil.runMRJob(MRUtil.java:97)

at org.apache.kylin.storage.hbase.steps.BulkLoadJob.run(BulkLoadJob.java:78)

at org.apache.kylin.engine.mr.MRUtil.runMRJob(MRUtil.java:97)

at org.apache.kylin.engine.mr.common.HadoopShellExecutable.doWork(HadoopShellExecutable.java:63)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:162)

at org.apache.kylin.job.execution.DefaultChainedExecutable.doWork(DefaultChainedExecutable.java:67)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:162)

at org.apache.kylin.job.impl.threadpool.DefaultScheduler$JobRunner.run(DefaultScheduler.java:300)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.hbase.client.RetriesExhaustedException: Failed after attempts=35, exceptions:

Tue Aug 21 10:22:52 GMT+08:00 2018, RpcRetryingCaller{globalStartTime=1534818172934, pause=100, retries=35}, java.io.IOException: java.io.IOException: Wrong FS: hdfs://soapahdfs/kylin/kylin_metadata/kylin-11b487dd-6c0c-4b55-b5b6-008a8a5b9976/data_cube1/hfile/F1/f8bd23206df0414b8bd61315ed891707, expected: hdfs://soapahdfs:8020

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2239)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:112)

at org.apache.hadoop.hbase.ipc.RpcExecutor.consumerLoop(RpcExecutor.java:133)

at org.apache.hadoop.hbase.ipc.RpcExecutor$1.run(RpcExecutor.java:108)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.IllegalArgumentException: Wrong FS: hdfs://soapahdfs/kylin/kylin_metadata/kylin-11b487dd-6c0c-4b55-b5b6-008a8a5b9976/data_cube1/hfile/F1/f8bd23206df0414b8bd61315ed891707, expected: hdfs://soapahdfs:8020

at org.apache.hadoop.fs.FileSystem.checkPath(FileSystem.java:643)

at org.apache.hadoop.hdfs.DistributedFileSystem.getPathName(DistributedFileSystem.java:184)

at org.apache.hadoop.hdfs.DistributedFileSystem.access$000(DistributedFileSystem.java:101)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:1068)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:1064)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1064)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:397)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1398)

at org.apache.hadoop.hbase.regionserver.HRegionFileSystem.commitStoreFile(HRegionFileSystem.java:387)

at org.apache.hadoop.hbase.regionserver.HRegionFileSystem.bulkLoadStoreFile(HRegionFileSystem.java:466)

at org.apache.hadoop.hbase.regionserver.HStore.bulkLoadHFile(HStore.java:780)

at org.apache.hadoop.hbase.regionserver.HRegion.bulkLoadHFiles(HRegion.java:5404)

at org.apache.hadoop.hbase.regionserver.RSRpcServices.bulkLoadHFile(RSRpcServices.java:1970)

at org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ClientService$2.callBlockingMethod(ClientProtos.java:33650)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2188)

... 4 more

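Cause: the file system path configured in Kylin does not match the one configured in HBase. Put plainly, the kylin.storage.hbase.cluster-fs property in kylin.properties must agree with the hbase.rootdir property in HBase's configuration file hbase-site.xml; otherwise the data path is rejected as wrong. In the trace above, the HFile path starts with hdfs://soapahdfs, while HBase expects hdfs://soapahdfs:8020.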
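To see why the two values in the error are treated as different file systems, here is a simplified sketch that compares only the scheme and the host:port part of two URIs. The helper name same_filesystem and the /hbase suffix in the example rootdir are assumptions for illustration; Hadoop's own check is more involved, so treat this as a sanity check on your configuration values rather than a reproduction of it.

```python
from urllib.parse import urlparse


def same_filesystem(cluster_fs, hbase_rootdir):
    """Compare scheme and authority (host[:port]) of two URIs.
    Simplified: no default-port or nameservice resolution."""
    a = urlparse(cluster_fs)
    b = urlparse(hbase_rootdir)
    return (a.scheme, a.netloc) == (b.scheme, b.netloc)


if __name__ == "__main__":
    # Values modelled on the error message; "/hbase" is a hypothetical path
    rootdir = "hdfs://soapahdfs:8020/hbase"
    print(same_filesystem("hdfs://soapahdfs", rootdir))       # port missing
    print(same_filesystem("hdfs://soapahdfs:8020", rootdir))  # matches
```

The first call mirrors the failing setup (no port on the Kylin side), the second the corrected one.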

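3. A Segment Overlap error occurs while building a Cube, and the build fails.

Cause: the Cube's metadata was corrupted during a build. There are several possible triggers: the Hadoop cluster was restarted, the data volume was too large, the Hive table changed while the Cube was being built, or the Hive table's schema was changed or the table was recreated after an earlier build. Once this happens, rebuilding keeps failing with the same error. What follows is a temporary workaround, in the spirit of the universal fix for software bugs: restart and reinstall.

Solution: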

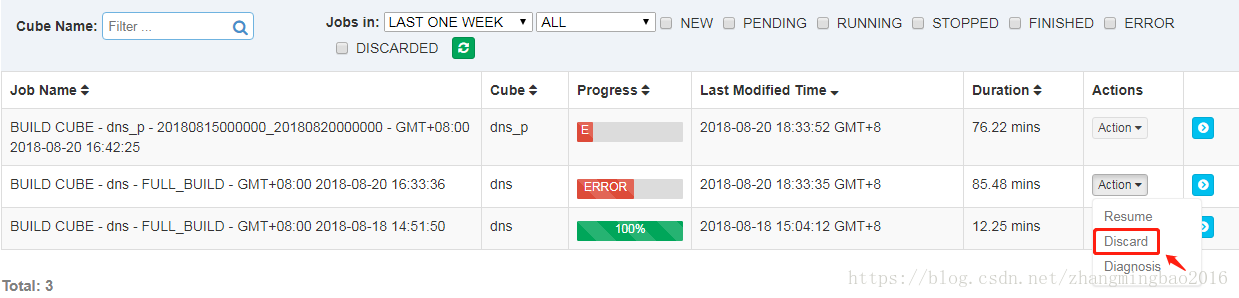

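Select the affected project, then click Monitor to open the job monitoring page.

Click Action on the failed build job and choose Discard, then go back to the Model page and build the Cube again.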
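The Discard step can in principle also be scripted. The sketch below only builds the HTTP request; it assumes that the installed Kylin version exposes the discard operation as PUT /kylin/api/jobs/{jobId}/cancel with HTTP basic authentication, which should be checked against the REST API documentation for your version. The base URL, job id and credentials shown are placeholders.

```python
import base64
import urllib.request


def build_discard_request(base_url, job_id, user, password):
    """Build (but do not send) a request that discards a Kylin job.
    The endpoint path is an assumption; verify it for your Kylin version."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url}/kylin/api/jobs/{job_id}/cancel",
        method="PUT",
        headers={"Authorization": f"Basic {token}"},
    )


if __name__ == "__main__":
    # Placeholder values; substitute your own host, job id and account
    req = build_discard_request("http://localhost:7070", "JOB_ID", "USER", "PASSWORD")
    print(req.get_method(), req.full_url)
```

Passing the request to urllib.request.urlopen would send it; the Cube still has to be rebuilt afterwards, as described above.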

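4. After creating a Hive table and loading data into it from a file, the Cube builds successfully, but all of the data ends up in a single column and the other columns are empty. What now?

Cause: no field delimiter was specified when the Hive table was created.

Solution: to avoid the hassle of dropping and recreating the Hive table, change the delimiter in place with an alter statement: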

alter table table_name set SERDEPROPERTIES('field.delim'='\t');

Replace the table name (table_name) and the delimiter ('\t') with your own, then rebuild the Cube.
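The symptom is easy to reproduce outside Hive. When no delimiter is declared, Hive's text format falls back to the Ctrl-A character (\x01) as field separator, so a tab-separated line is never split. The sketch below is a simplified model of that behaviour, not Hive's actual parsing code; split_row and the sample row are made up for illustration.

```python
def split_row(line, delim, ncols):
    """Split one text row into ncols fields; missing fields become None,
    roughly how a text table yields NULL for absent columns."""
    fields = line.rstrip("\n").split(delim)[:ncols]
    return fields + [None] * (ncols - len(fields))


if __name__ == "__main__":
    row = "1\talice\t30"  # hypothetical tab-separated input line
    # Default delimiter \x01: the whole line lands in the first column
    print(split_row(row, "\x01", 3))
    # After setting field.delim to a tab: three separate columns
    print(split_row(row, "\t", 3))
```

With the wrong delimiter the first field holds the entire line and the rest are empty, which matches what shows up in the Cube.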