Copyright notice: to contact us, follow the WeChat official account 摸鱼科技资讯. https://blog.csdn.net/qq_36949176/article/details/84595407

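Functional description

* Technical approach: Scrapy

* Goal: fetch the names and trading information of all stocks listed on the Shanghai and Shenzhen stock exchanges

* Output: saved to a file

The data source sites are chosen the same way as in the earlier post: https://blog.csdn.net/qq_36949176/article/details/84487150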

Writing the example:

Steps

Step 1: Create the project and the Spider template

cmd commands

*\>scrapy startproject BaiduStocks

*\>cd BaiduStocks

*\>scrapy genspider stocks baidu.com

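* Then edit the spiders/stocks.py file

Step 2: Write the Spider

* Configure the stocks.py file

* Change how returned pages are handled

* Change how crawl requests for newly discovered URLs are handled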
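Both handlers in this step lean on regular expressions: one picks stock codes such as sh600000 out of link hrefs, the other strips the dt/dd tags from the quote fields. The sketch below tries those patterns on hand-written strings, so it runs without Scrapy or network access. The sample hrefs and HTML fragments and the helper names `extract_stock_code`, `parse_key` and `parse_value` are made up for illustration; they are not taken from the real pages.

```python
import re

STOCK_CODE = re.compile(r"[s][hz]\d{6}")


def extract_stock_code(href):
    """Return the first stock code found in href, or None if there is none."""
    match = STOCK_CODE.search(href)
    return match.group(0) if match else None


def parse_key(dt_html):
    """Return the text of a <dt> element: drop the leading '>' and trailing '</dt>'."""
    return re.findall(r">.*</dt>", dt_html)[0][1:-5]


def parse_value(dd_html):
    """Return the numeric text of a <dd> element, or '--' when it holds no number."""
    found = re.findall(r"\d+\.?.*</dd>", dd_html)
    return found[0][0:-5] if found else "--"


# Hypothetical sample inputs, shaped like the data the spider sees.
hrefs = [
    "http://quote.eastmoney.com/sh600000.html",
    "http://quote.eastmoney.com/sz000001.html",
    "http://quote.eastmoney.com/about.html",
]
codes = [extract_stock_code(h) for h in hrefs]

# Only hrefs that contain a stock code turn into follow-up requests.
urls = ["https://gupiao.baidu.com/stock/" + c + ".html" for c in codes if c]

key = parse_key("<dt>Open</dt>")
value = parse_value("<dd>10.52</dd>")
missing = parse_value("<dd>--</dd>")
```

Trying the patterns on fixed strings like this makes it easier to see what a regex will do before it is buried inside a crawl callback.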

    # -*- coding: utf-8 -*-
    import re

    import scrapy


    class StocksSpider(scrapy.Spider):
        name = 'stocks'
        start_urls = ['http://quote.eastmoney.com/stocklist.html']

        def parse(self, response):
            # Follow every link whose href contains a stock code such as sh600000.
            for href in response.css('a::attr(href)').extract():
                try:
                    stock = re.findall(r"[s][hz]\d{6}", href)[0]
                    url = 'https://gupiao.baidu.com/stock/' + stock + '.html'
                    yield scrapy.Request(url, callback=self.parse_stock)
                except IndexError:
                    # No stock code in this href.
                    continue

        def parse_stock(self, response):
            infoDict = {}
            stockInfo = response.css('.stock-bets')
            name = stockInfo.css('.bets-name').extract()[0]
            keyList = stockInfo.css('dt').extract()
            valueList = stockInfo.css('dd').extract()
            # Each <dt> holds a field name; the <dd> at the same index holds its value.
            for i in range(len(keyList)):
                key = re.findall(r'>.*</dt>', keyList[i])[0][1:-5]
                try:
                    val = re.findall(r'\d+\.?.*</dd>', valueList[i])[0][0:-5]
                except IndexError:
                    # Missing or non-numeric value.
                    val = '--'
                infoDict[key] = val

            # The key '股票名称' means "stock name".
            infoDict.update(
                {'股票名称': re.findall(r'\s.*\(', name)[0].split()[0] +
                 re.findall(r'\>.*\<', name)[0][1:-1]})
            yield infoDict

Step 3: Write the Item Pipelines

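* Configure the pipelines.py file

* Define a class that processes each scraped item (Scraped Item)

* Configure the ITEM_PIPELINES option

Configuring and defining the class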

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


    class BaidustocksPipeline(object):
        def process_item(self, item, spider):
            return item


    class BaidustocksInfoPipeline(object):
        def open_spider(self, spider):
            # Called once when the spider starts.
            self.f = open('BaiduStockInfo.txt', 'w')

        def close_spider(self, spider):
            # Called once when the spider finishes.
            self.f.close()

        def process_item(self, item, spider):
            # Write each item as one line of text; skip items that cannot be written.
            try:
                line = str(dict(item)) + '\n'
                self.f.write(line)
            except Exception:
                pass
            return item

We wrote a new class named BaidustocksInfoPipeline. Next, the settings file has to be updated so that Scrapy actually runs the new class: find ITEM_PIPELINES in settings.py, uncomment it, and change its entry to the class we just wrote.

    ITEM_PIPELINES = {
        'BaiduStocks.pipelines.BaidustocksInfoPipeline': 300,
    }
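Once a pipeline is enabled, Scrapy calls `open_spider` once when the crawl starts, `process_item` once for every yielded item, and `close_spider` once at the end. The sketch below drives that lifecycle by hand so the file-writing logic can be checked without running a crawl. The class `FileWriterPipeline`, its `path` argument, the `run_pipeline` helper and the sample items are all illustrative assumptions; unlike the pipeline in this post, the sketch opens the file as UTF-8 explicitly (Python 3).

```python
import os
import tempfile


class FileWriterPipeline(object):
    """Hypothetical stand-in for BaidustocksInfoPipeline with a configurable path."""

    def __init__(self, path):
        self.path = path
        self.f = None

    def open_spider(self, spider):
        # Assumption: write UTF-8 so Chinese field names behave the same on every platform.
        self.f = open(self.path, 'w', encoding='utf-8')

    def close_spider(self, spider):
        self.f.close()

    def process_item(self, item, spider):
        self.f.write(str(dict(item)) + '\n')
        return item


def run_pipeline(pipeline, items, spider=None):
    """Call the pipeline hooks in the order Scrapy uses during a crawl."""
    pipeline.open_spider(spider)
    try:
        return [pipeline.process_item(item, spider) for item in items]
    finally:
        pipeline.close_spider(spider)


# Made-up items shaped like the dicts yielded by parse_stock.
sample_items = [
    {'股票名称': 'Example A', '今开': '10.52'},
    {'股票名称': 'Example B', '今开': '--'},
]
out_path = os.path.join(tempfile.mkdtemp(), 'BaiduStockInfo.txt')
processed = run_pipeline(FileWriterPipeline(out_path), sample_items)

with open(out_path, encoding='utf-8') as f:
    written_lines = f.read().splitlines()
```

Because the hooks are ordinary methods, a quick check like this can catch file-handling mistakes before a full crawl is started.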

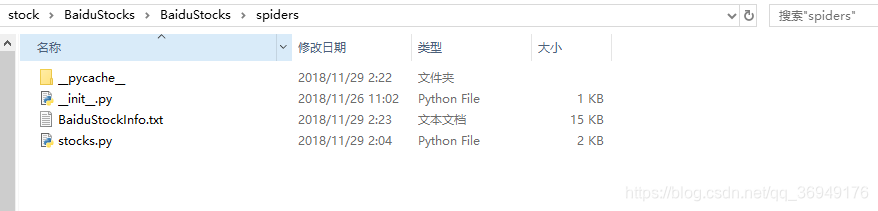

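Then open cmd in the directory containing stocks.py and run the crawl command to start the program:

    scrapy crawl stocks

After a successful run, a txt file is generated with the scraped data stored in it, as shown in the figure below.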
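Because the pipeline writes each item as `str(dict(item))`, every line of the output file is a Python dict literal rather than JSON. One way to load the records back for later analysis is `ast.literal_eval`. This is a sketch only: the helper name `load_stock_records` is hypothetical, the file is assumed to be UTF-8, and the demo writes two made-up lines to a temporary file instead of reading real crawl output.

```python
import ast
import os
import tempfile


def load_stock_records(path):
    """Parse a file with one Python dict literal per line into a list of dicts."""
    records = []
    with open(path, encoding='utf-8') as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            # literal_eval only accepts literals, so it does not execute arbitrary code.
            records.append(ast.literal_eval(line))
    return records


# Made-up demo data in the same one-dict-per-line layout the pipeline produces.
demo_path = os.path.join(tempfile.mkdtemp(), 'BaiduStockInfo.txt')
with open(demo_path, 'w', encoding='utf-8') as f:
    f.write(str({'股票名称': 'Example A', '今开': '10.52'}) + '\n')
    f.write(str({'股票名称': 'Example B', '今开': '--'}) + '\n')

records = load_stock_records(demo_path)
names = [r['股票名称'] for r in records]
```

If the data is meant for other tools, writing JSON in the pipeline instead of `str(dict(item))` would be a reasonable alternative design.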