1.网络实现源代码

class AlexNet(nn.Module):

def __init__(self, num_classes=1000):

super(AlexNet, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(64, 192, kernel_size=5, padding=2),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2),

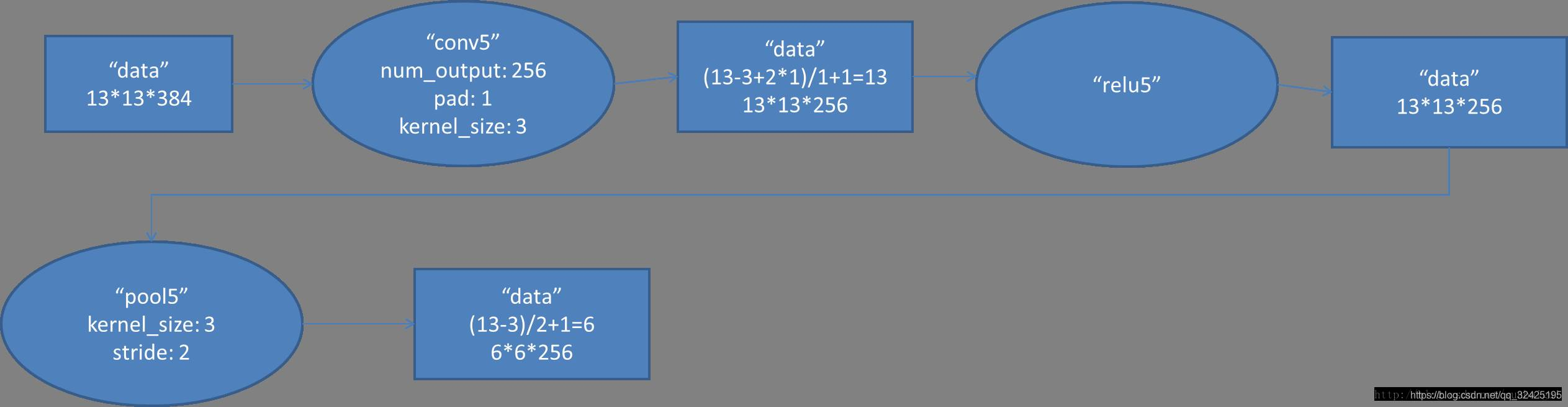

nn.Conv2d(192, 384, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(384, 256, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(256, 256, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2),

)

self.classifier = nn.Sequential(

nn.Dropout(),

nn.Linear(256 * 6 * 6, 4096),

nn.ReLU(inplace=True),

nn.Dropout(),

nn.Linear(4096, 4096),

nn.ReLU(inplace=True),

nn.Linear(4096, num_classes),

)

def forward(self, x):

x = self.features(x)

x = x.view(x.size(0), 256 * 6 * 6)

x = self.classifier(x)

return x

# -*- coding=UTF-8 -*-

import sys

import os

import random

import cv2

import math

import time

import numpy as np

import tensorflow as tf

import linecache

import string

import skimage

import imageio

# 输入数据

import input_data

mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

# 定义网络超参数

learning_rate = 0.001

training_iters = 200000

batch_size = 64

display_step = 20

# 定义网络参数

n_input = 784 # 输入的维度

n_classes = 10 # 标签的维度

dropout = 0.8 # Dropout 的概率

# 占位符输入

x = tf.placeholder(tf.types.float32, [None, n_input])

y = tf.placeholder(tf.types.float32, [None, n_classes])

keep_prob = tf.placeholder(tf.types.float32)

# 卷积操作

def conv2d(name, l_input, w, b):

return tf.nn.relu(tf.nn.bias_add( \

tf.nn.conv2d(l_input, w, strides=[1, 1, 1, 1], padding='SAME'),b) \

, name=name)

# 最大下采样操作

def max_pool(name, l_input, k):

return tf.nn.max_pool(l_input, ksize=[1, k, k, 1], \

strides=[1, k, k, 1], padding='SAME', name=name)

# 归一化操作

def norm(name, l_input, lsize=4):

return tf.nn.lrn(l_input, lsize, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name=name)

# 定义整个网络

def alex_net(_X, _weights, _biases, _dropout):

_X = tf.reshape(_X, shape=[-1, 28, 28, 1]) # 向量转为矩阵

# 卷积层

conv1 = conv2d('conv1', _X, _weights['wc1'], _biases['bc1'])

# 下采样层

pool1 = max_pool('pool1', conv1, k=2)

# 归一化层

norm1 = norm('norm1', pool1, lsize=4)

# Dropout

norm1 = tf.nn.dropout(norm1, _dropout)

# 卷积

conv2 = conv2d('conv2', norm1, _weights['wc2'], _biases['bc2'])

# 下采样

pool2 = max_pool('pool2', conv2, k=2)

# 归一化

norm2 = norm('norm2', pool2, lsize=4)

# Dropout

norm2 = tf.nn.dropout(norm2, _dropout)

# 卷积

conv3 = conv2d('conv3', norm2, _weights['wc3'], _biases['bc3'])

# 下采样

pool3 = max_pool('pool3', conv3, k=2)

# 归一化

norm3 = norm('norm3', pool3, lsize=4)

# Dropout

norm3 = tf.nn.dropout(norm3, _dropout)

# 全连接层,先把特征图转为向量

dense1 = tf.reshape(norm3, [-1, _weights['wd1'].get_shape().as_list()[0]])

dense1 = tf.nn.relu(tf.matmul(dense1, _weights['wd1']) + _biases['bd1'], name='fc1')

# 全连接层

dense2 = tf.nn.relu(tf.matmul(dense1, _weights['wd2']) + _biases['bd2'], name='fc2')

# Relu activation

# 网络输出层

out = tf.matmul(dense2, _weights['out']) + _biases['out']

return out

# 存储所有的网络参数

weights = {

'wc1': tf.Variable(tf.random_normal([3, 3, 1, 64])),

'wc2': tf.Variable(tf.random_normal([3, 3, 64, 128])),

'wc3': tf.Variable(tf.random_normal([3, 3, 128, 256])),

'wd1': tf.Variable(tf.random_normal([4*4*256, 1024])),

'wd2': tf.Variable(tf.random_normal([1024, 1024])),

'out': tf.Variable(tf.random_normal([1024, 10]))

}

biases = {

'bc1': tf.Variable(tf.random_normal([64])),

'bc2': tf.Variable(tf.random_normal([128])),

'bc3': tf.Variable(tf.random_normal([256])),

'bd1': tf.Variable(tf.random_normal([1024])),

'bd2': tf.Variable(tf.random_normal([1024])),

'out': tf.Variable(tf.random_normal([n_classes]))

}

# 构建模型

pred = alex_net(x, weights, biases, keep_prob)

# 定义损失函数和学习步骤

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(pred, y))

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

# 测试网络

correct_pred = tf.equal(tf.argmax(pred,1), tf.argmax(y,1))

accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

# 初始化所有的共享变量

init = tf.initialize_all_variables()

# 开启一个训练

with tf.Session() as sess:

sess.run(init)

step = 1

# Keep training until reach max iterations

while step * batch_size < training_iters:

batch_xs, batch_ys = mnist.train.next_batch(batch_size)

# 获取批数据

sess.run(optimizer, feed_dict={x: batch_xs, y: batch_ys, keep_prob: dropout})

if step % display_step == 0:

# 计算精度

acc = sess.run(accuracy, feed_dict={x: batch_xs, y: batch_ys, keep_prob: 1.})

# 计算损失值

loss = sess.run(cost, feed_dict={x: batch_xs, y: batch_ys, keep_prob: 1.})

print "Iter " + str(step*batch_size) + ", Minibatch Loss= " + "{:.6f}".format(loss) + ", Training Accuracy= " + "{:.5f}".format(acc)

step += 1

print "Optimization Finished!"

# 计算测试精度

print "Testing Accuracy:", sess.run(accuracy, feed_dict={x: mnist.test.images[:256], y: mnist.test.labels[:256], keep_prob: 1.}) 网络架构:

好的技术文档:https://yq.aliyun.com/articles/602853

https://blog.csdn.net/u012679707/article/details/80793916

https://www.sohu.com/a/134347664_642762:较为详细的介绍了各层网络和其他的网络

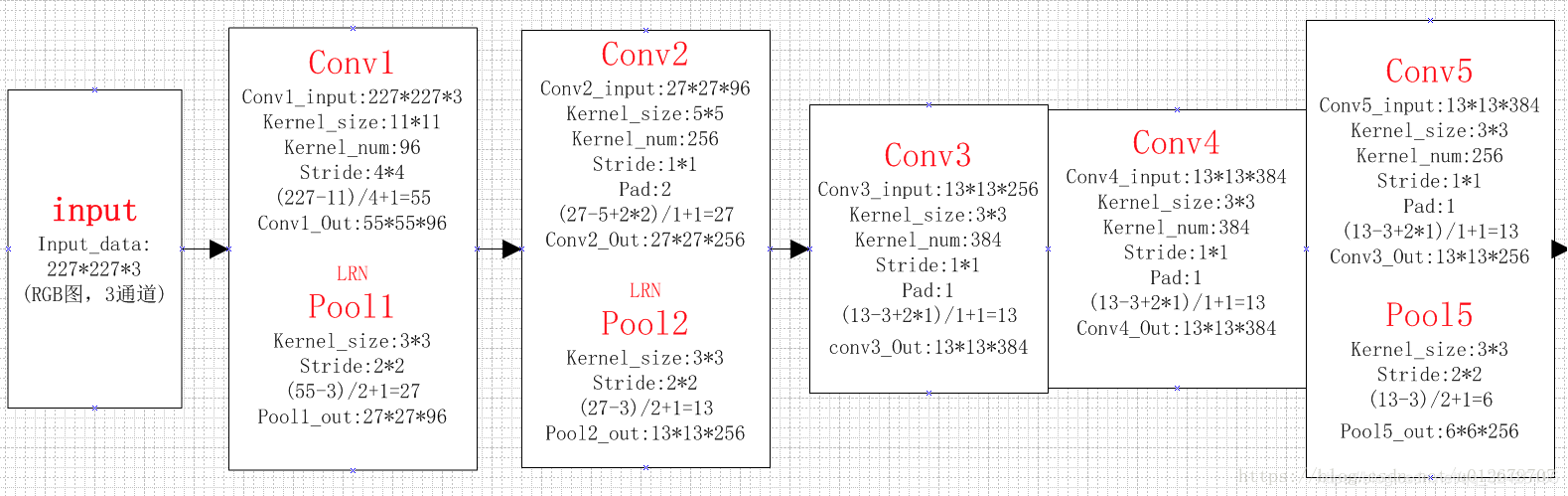

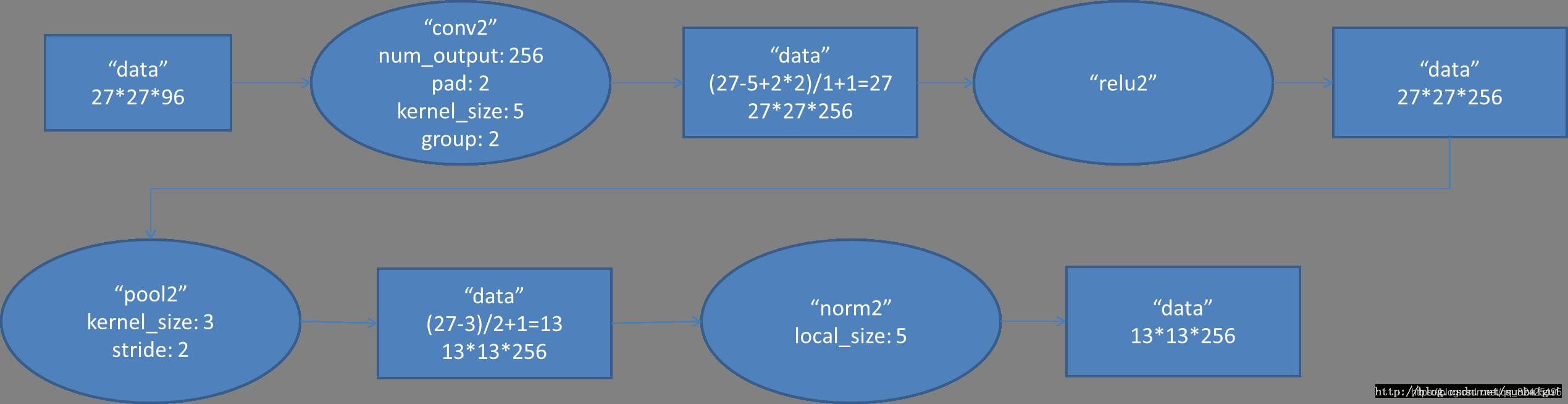

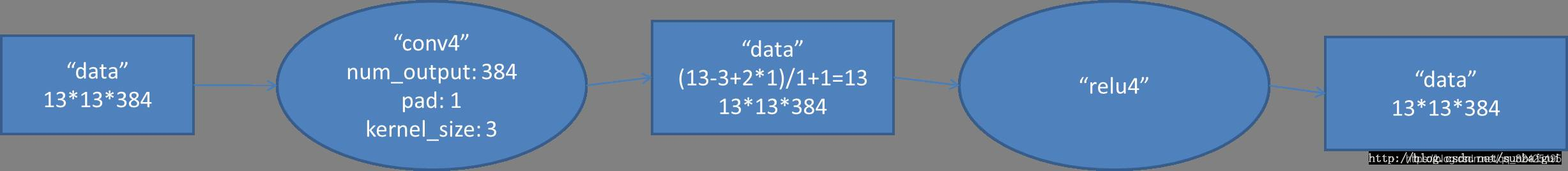

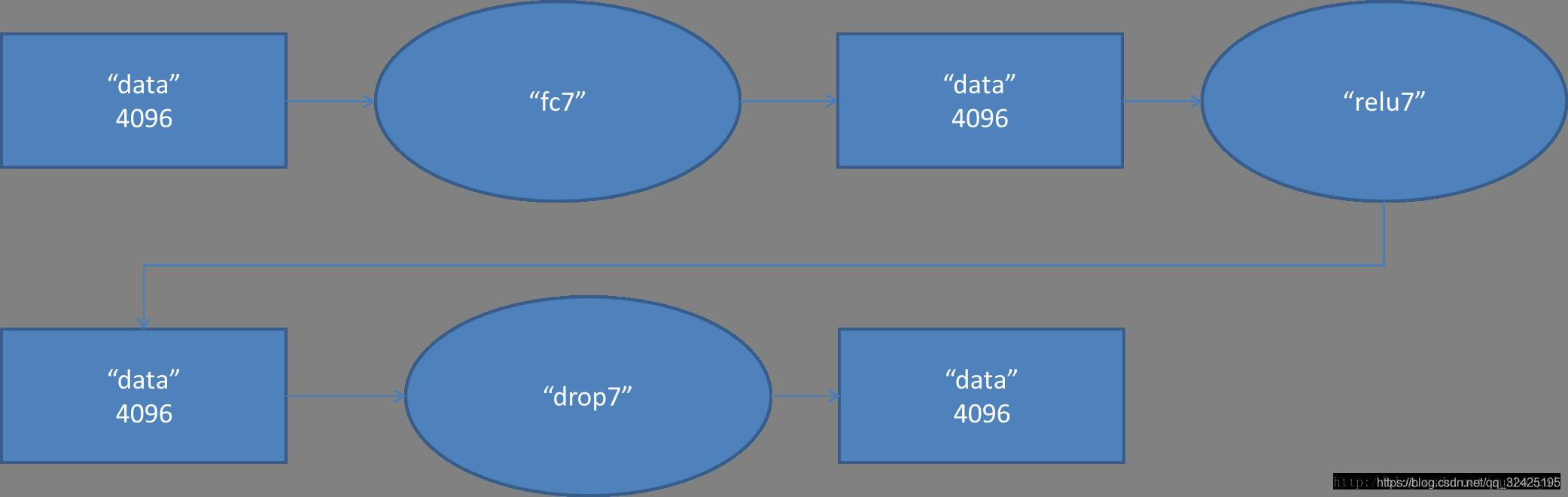

共8个层,各个层分开解析(参考https://www.sohu.com/a/134347664_642762):

(1)con - relu - pooling - LRN

(2)conv - relu - pool - LRN

(3)conv - relu

(4)conv-relu

(5)conv - relu - pool

(6)fc - relu - dropout

(7) fc - relu - dropout

(8)fc - softmax

减少过拟合的方法:

1.数据增强,随机地从256*256的原始图像中截取224*224大小的区域(以及水平翻转的镜像),相当于增加了2*(256-224)^2=2048倍的数据量。如果没有数据增强,仅靠原始的数据量,参数众多的CNN会陷入过拟合中,使用了数据增强后可以大大减轻过拟合,提升泛化能力。进行预测时,则是取图片的四个角加中间共5个位置,并进行左右翻转,一共获得10张图片,对他们进行预测并对10次结果求均值。同时,AlexNet论文中提到了会对图像的RGB数据进行PCA处理,并对主成分做一个标准差为0.1的高斯扰动,增加一些噪声,这个Trick可以让错误率再下降1%。

2.dropout

将不同的模型的预测结果结合起来时减少错误的有效方式之一

原文中的关键点:

1.神经网络模型效果:更多的数据、更好的网络、更好的防止过拟合

2.要训练大的数据量,就需要模型有这个能力

3.参数更新策略、参数初始化策略、学习率的改变

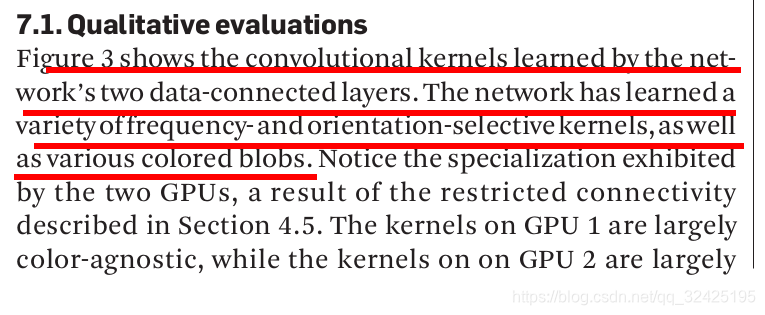

4、将卷积层的参数可视化,观察各个卷积层学到了什么

5.无监督学习的预训练可能改善网络的效果