Preface

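In the half year since I left school, I've found I rarely have time to sit down, study, and write blog posts. To keep my enthusiasm for learning alive, I want to set aside some time for a series of posts on what I've understood from reading the source code of Android frameworks.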

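What I hope for: that we can learn this framework well together. Before reading these posts, it's best to get familiar with the framework's basic use cases and usage first. If you have questions, leave me a comment and let's discuss.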

Of course, no blog post, however good, beats reading the source code yourself!

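This time I bring you the series 《胖虎谈ImageLoader框架》 ("Panghu on the ImageLoader Framework"). Analyzing the source code of excellent frameworks helps us improve faster. Let's keep at it together!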

Source package download: http://download.csdn.net/detail/u011133213/9210765

Please respect everyone's work. If you repost this, credit the source.

Source: CSDN, 胖虎 (Panghu), http://blog.csdn.net/ljphhj

Main Text

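Following the previous three posts, 《胖虎谈ImageLoader框架(一)》, 《胖虎谈ImageLoader框架(二)》 and 《胖虎谈ImageLoader框架(三)》 (Parts 1 to 3), here is 《胖虎谈ImageLoader框架(四)》 (Part 4). The first three parts spent a lot of space on how ImageLoader operates, although much of it still needs readers to explore on their own. This post is the last in the ImageLoader series. It covers ImageLoader's memory cache and SD card cache mechanisms.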

(PS: Before reading this post, I hope you have already read and understood 《胖虎谈ImageLoader框架(一)》, 《胖虎谈ImageLoader框架(二)》 and 《胖虎谈ImageLoader框架(三)》.)

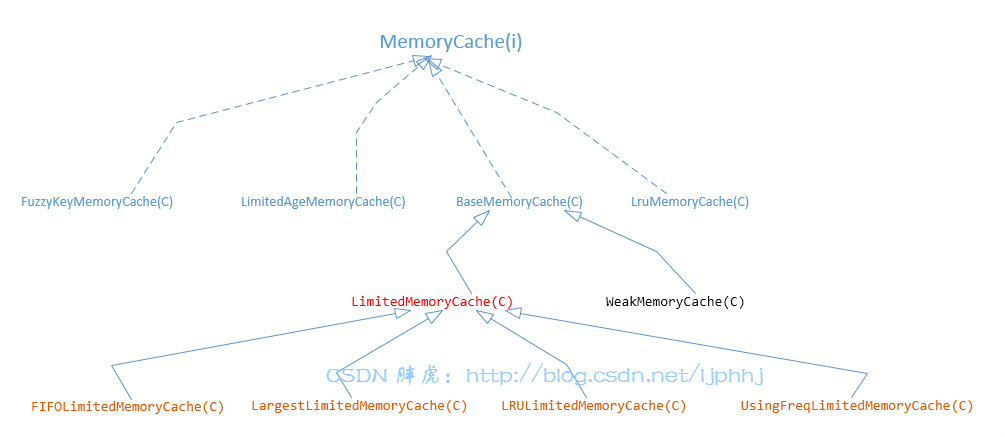

Memory Cache Mechanism

(Package: com.nostra13.universalimageloader.cache.memory.*)

The four classes FuzzyKeyMemoryCache, LimitedAgeMemoryCache, LruMemoryCache and BaseMemoryCache all implement the MemoryCache interface, which declares the following methods:

public interface MemoryCache {

/**

* Puts value into cache by key

*

* @return <b>true</b> - if value was put into cache successfully, <b>false</b> - if value was <b>not</b> put into

* cache

*/

boolean put(String key, Bitmap value);

/** Returns value by key. If there is no value for key then null will be returned. */

Bitmap get(String key);

/** Removes item by key */

Bitmap remove(String key);

/** Returns all keys of cache */

Collection<String> keys();

/** Remove all items from cache */

void clear();

}

Now let's look at how they differ:

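FuzzyKeyMemoryCache: a decorator that wraps another MemoryCache. When a Bitmap is put, it first looks for an existing key that the supplied Comparator considers equal to the new key. That key need not be an identical string. It removes that entry and only then inserts the new Bitmap. Only the first matching key is removed, and get() still does an exact key lookup on the wrapped cache.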
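To see what "fuzzy" buys you, here is a minimal sketch of the same put logic. It is not UIL code. String values stand in for Bitmap. The key format "uri_WIDTHxHEIGHT" and the comparator IGNORE_SIZE_SUFFIX are my own hypothetical choices, used only to show two differently sized variants of one image replacing each other.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified stand-in for FuzzyKeyMemoryCache: String values replace android.graphics.Bitmap.
class FuzzyKeyCacheSketch {
    private final Map<String, String> cache = new LinkedHashMap<>();
    private final Comparator<String> keyComparator;

    FuzzyKeyCacheSketch(Comparator<String> keyComparator) {
        this.keyComparator = keyComparator;
    }

    synchronized boolean put(String key, String value) {
        // Find the first existing key the comparator treats as equal, and remove it before inserting.
        String keyToRemove = null;
        for (String cacheKey : cache.keySet()) {
            if (keyComparator.compare(key, cacheKey) == 0) {
                keyToRemove = cacheKey;
                break;
            }
        }
        if (keyToRemove != null) {
            cache.remove(keyToRemove);
        }
        cache.put(key, value);
        return true;
    }

    // Lookup is exact, as in the original get(): the fuzziness only applies on put.
    synchronized String get(String key) {
        return cache.get(key);
    }

    synchronized List<String> keys() {
        return new ArrayList<>(cache.keySet());
    }

    // Hypothetical comparator, assuming keys look like "uri_WIDTHxHEIGHT":
    // two keys count as equal when everything before the last '_' matches.
    static final Comparator<String> IGNORE_SIZE_SUFFIX =
            (a, b) -> uriPart(a).compareTo(uriPart(b));

    private static String uriPart(String key) {
        int i = key.lastIndexOf('_');
        return i < 0 ? key : key.substring(0, i);
    }
}
```

After putting "http://example.com/a.png_100x100" and then "http://example.com/a.png_400x400", only the second key remains, so the cache never holds two sizes of the same image at once.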

public class FuzzyKeyMemoryCache implements MemoryCache {

private final MemoryCache cache;

private final Comparator<String> keyComparator;

public FuzzyKeyMemoryCache(MemoryCache cache, Comparator<String> keyComparator) {

this.cache = cache;

this.keyComparator = keyComparator;

}

@Override

public boolean put(String key, Bitmap value) {

// Search equal key and remove this entry

synchronized (cache) {

String keyToRemove = null;

for (String cacheKey : cache.keys()) {

if (keyComparator.compare(key, cacheKey) == 0) {

keyToRemove = cacheKey;

break;

}

}

if (keyToRemove != null) {

cache.remove(keyToRemove);

}

}

return cache.put(key, value);

}

@Override

public Bitmap get(String key) {

return cache.get(key);

}

@Override

public Bitmap remove(String key) {

return cache.remove(key);

}

@Override

public void clear() {

cache.clear();

}

@Override

public Collection<String> keys() {

return cache.keys();

}

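}

LimitedAgeMemoryCache: also a decorator around another MemoryCache. It manages entries much like cookies. You set a maximum age, and once an entry is older than that it is removed. The check happens lazily, only when get(key) is called, not on a timer. The constructor takes maxAge in seconds and converts it to milliseconds.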
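The lazy expiry check can be sketched in isolation as follows. This is a simplified stand-in, not the library class. It stores String values, and it assumes an injectable clock (a LongSupplier) in place of System.currentTimeMillis() so that expiry can be tested without sleeping.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Simplified stand-in for LimitedAgeMemoryCache: String values, injectable clock (an assumption for testability).
class LimitedAgeCacheSketch {
    private final Map<String, String> cache = new HashMap<>();
    private final Map<String, Long> loadingDates = new HashMap<>();
    private final long maxAgeMillis;
    private final LongSupplier clock;

    LimitedAgeCacheSketch(long maxAgeSeconds, LongSupplier clock) {
        this.maxAgeMillis = maxAgeSeconds * 1000; // seconds -> milliseconds, as in the original
        this.clock = clock;
    }

    synchronized void put(String key, String value) {
        cache.put(key, value);
        loadingDates.put(key, clock.getAsLong()); // remember when the entry was stored
    }

    synchronized String get(String key) {
        Long loadingDate = loadingDates.get(key);
        // Expiry is only checked here, when the entry is read.
        if (loadingDate != null && clock.getAsLong() - loadingDate > maxAgeMillis) {
            cache.remove(key);
            loadingDates.remove(key);
        }
        return cache.get(key);
    }
}
```

With maxAge = 60 seconds, an entry read exactly 60 000 ms after it was stored is still returned. One millisecond later it is dropped, because the comparison is a strict ">".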

public class LimitedAgeMemoryCache implements MemoryCache {

private final MemoryCache cache;

private final long maxAge;

private final Map<String, Long> loadingDates = Collections.synchronizedMap(new HashMap<String, Long>());

/**

* @param cache Wrapped memory cache

* @param maxAge Max object age <b>(in seconds)</b>. If object age will exceed this value then it'll be removed from

* cache on next treatment (and therefore be reloaded).

*/

public LimitedAgeMemoryCache(MemoryCache cache, long maxAge) {

this.cache = cache;

this.maxAge = maxAge * 1000; // to milliseconds

}

@Override

public boolean put(String key, Bitmap value) {

boolean putSuccesfully = cache.put(key, value);

// If the value was put successfully, record when this key was added in the loadingDates map

if (putSuccesfully) {

loadingDates.put(key, System.currentTimeMillis());

}

return putSuccesfully;

}

@Override

public Bitmap get(String key) {

Long loadingDate = loadingDates.get(key);

// Check whether the entry has expired; if so, remove it

if (loadingDate != null && System.currentTimeMillis() - loadingDate > maxAge) {

cache.remove(key);

loadingDates.remove(key);

}

return cache.get(key);

}

@Override

public Bitmap remove(String key) {

loadingDates.remove(key);

return cache.remove(key);

}

@Override

public Collection<String> keys() {

return cache.keys();

}

@Override

public void clear() {

cache.clear();

loadingDates.clear();

}

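}

LruMemoryCache: manages the memory cache with an LRU (least recently used) policy and holds strong references to the Bitmaps. When a new Bitmap is put, it is added first. Then, if the total size exceeds maxSize, trimToSize() removes the least recently used Bitmaps one by one until the total fits again.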

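To follow this, you need to understand a common way to implement an LRU cache in Java: LinkedHashMap, a hash map whose entries are also chained in a doubly linked list.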
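Here is a small demonstration of the LinkedHashMap behavior that LruMemoryCache relies on, using only the JDK. It shows that with accessOrder = true a get() moves the entry to the end of the iteration order. It also shows that evicting means taking the first entry from the iterator. For simplicity it counts entries rather than bytes; the real trimToSize() compares summed Bitmap sizes against maxSize.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class AccessOrderDemo {
    // Puts a, b, c, reads a, and returns the iteration order (least recently used first).
    static List<String> orderAfterGet() {
        // accessOrder = true: every get()/put() moves the touched entry to the END of the order.
        LinkedHashMap<String, Integer> map = new LinkedHashMap<>(16, 0.75f, true);
        map.put("a", 1);
        map.put("b", 2);
        map.put("c", 3);
        map.get("a"); // "a" is now the most recently used entry
        return new ArrayList<>(map.keySet());
    }

    // Evicts from the head until at most maxEntries remain, the same way
    // LruMemoryCache.trimToSize() takes map.entrySet().iterator().next().
    static List<String> evictTo(LinkedHashMap<String, Integer> map, int maxEntries) {
        List<String> evicted = new ArrayList<>();
        while (map.size() > maxEntries) {
            Iterator<Map.Entry<String, Integer>> it = map.entrySet().iterator();
            Map.Entry<String, Integer> eldest = it.next();
            evicted.add(eldest.getKey());
            it.remove();
        }
        return evicted;
    }
}
```

orderAfterGet() yields [b, c, a]: the entry just read sits at the tail, and "b" at the head is the first eviction candidate.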

public class LruMemoryCache implements MemoryCache {

private final LinkedHashMap<String, Bitmap> map;

private final int maxSize;

/** Size of this cache in bytes */

private int size;

/** @param maxSize Maximum sum of the sizes of the Bitmaps in this cache */

public LruMemoryCache(int maxSize) {

if (maxSize <= 0) {

throw new IllegalArgumentException("maxSize <= 0");

}

this.maxSize = maxSize;

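// The third argument (accessOrder) is true, so the LinkedHashMap keeps entries in access order: every get or put moves that entry to the end (tail) of the iteration order, and the least recently used entry sits at the head. trimToSize() evicts from the head via map.entrySet().iterator().next().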

this.map = new LinkedHashMap<String, Bitmap>(0, 0.75f, true);

}

/**

* Returns the Bitmap for {@code key} if it exists in the cache. If a Bitmap was returned, it is moved to the head

* of the queue. This returns null if a Bitmap is not cached.

*/

@Override

public final Bitmap get(String key) {

if (key == null) {

throw new NullPointerException("key == null");

}

synchronized (this) {

return map.get(key);

}

}

/** Caches {@code Bitmap} for {@code key}. The Bitmap is moved to the head of the queue. */

@Override

public final boolean put(String key, Bitmap value) {

if (key == null || value == null) {

throw new NullPointerException("key == null || value == null");

}

synchronized (this) {

size += sizeOf(key, value);

Bitmap previous = map.put(key, value);

if (previous != null) {

size -= sizeOf(key, previous);

}

}

trimToSize(maxSize);

return true;

}

/**

* Remove the eldest entries until the total of remaining entries is at or below the requested size.

*

* @param maxSize the maximum size of the cache before returning. May be -1 to evict even 0-sized elements.

*/

private void trimToSize(int maxSize) {

while (true) {

String key;

Bitmap value;

synchronized (this) {

if (size < 0 || (map.isEmpty() && size != 0)) {

throw new IllegalStateException(getClass().getName() + ".sizeOf() is reporting inconsistent results!");

}

if (size <= maxSize || map.isEmpty()) {

break;

}

Map.Entry<String, Bitmap> toEvict = map.entrySet().iterator().next();

if (toEvict == null) {

break;

}

key = toEvict.getKey();

value = toEvict.getValue();

map.remove(key);

size -= sizeOf(key, value);

}

}

}

/** Removes the entry for {@code key} if it exists. */

@Override

public final Bitmap remove(String key) {

if (key == null) {

throw new NullPointerException("key == null");

}

synchronized (this) {

Bitmap previous = map.remove(key);

if (previous != null) {

size -= sizeOf(key, previous);

}

return previous;

}

}

@Override

public Collection<String> keys() {

synchronized (this) {

return new HashSet<String>(map.keySet());

}

}

@Override

public void clear() {

trimToSize(-1); // -1 will evict 0-sized elements

}

/**

* Returns the size {@code Bitmap} in bytes.

* <p/>

* An entry's size must not change while it is in the cache.

*/

private int sizeOf(String key, Bitmap value) {

return value.getRowBytes() * value.getHeight();

}

@Override

public synchronized final String toString() {

return String.format("LruCache[maxSize=%d]", maxSize);

}

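}

BaseMemoryCache: keeps an internal Map whose values are non-strong references (Reference<Bitmap>). The subclass decides, through createReference(), which kind of Reference is created. This differs from LruMemoryCache above, which holds strong references. FuzzyKeyMemoryCache and LimitedAgeMemoryCache simply delegate to whatever cache they wrap.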
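The template-method design (the base class stores references, and the subclass chooses the reference type) can be sketched like this. It is a simplified illustration, not the library code, and it uses generic values instead of Bitmap. WeakCacheSketch mirrors WeakMemoryCache. SoftCacheSketch is a hypothetical soft-reference variant added for comparison; I am not claiming it corresponds to a UIL class.

```java
import java.lang.ref.Reference;
import java.lang.ref.SoftReference;
import java.lang.ref.WeakReference;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Simplified stand-in for BaseMemoryCache: generic values instead of Bitmap.
abstract class ReferenceCacheSketch<V> {
    private final Map<String, Reference<V>> refs = Collections.synchronizedMap(new HashMap<>());

    void put(String key, V value) {
        refs.put(key, createReference(value));
    }

    V get(String key) {
        Reference<V> ref = refs.get(key);
        // null if the key was never stored OR the GC has already cleared the referent
        return ref == null ? null : ref.get();
    }

    // The subclass decides how strongly the value is held.
    protected abstract Reference<V> createReference(V value);
}

// Like WeakMemoryCache: cleared as soon as only this reference remains.
class WeakCacheSketch<V> extends ReferenceCacheSketch<V> {
    @Override
    protected Reference<V> createReference(V value) {
        return new WeakReference<>(value);
    }
}

// Hypothetical soft variant: cleared only under memory pressure.
class SoftCacheSketch<V> extends ReferenceCacheSketch<V> {
    @Override
    protected Reference<V> createReference(V value) {
        return new SoftReference<>(value);
    }
}
```

As long as the caller still holds a strong reference to the value, get() returns it. Once that reference is gone, a later get() may return null, and that is why this kind of cache cannot be the only copy of an image.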

public abstract class BaseMemoryCache implements MemoryCache {

/** Stores not strong references to objects */

private final Map<String, Reference<Bitmap>> softMap = Collections.synchronizedMap(new HashMap<String, Reference<Bitmap>>());

@Override

public Bitmap get(String key) {

Bitmap result = null;

Reference<Bitmap> reference = softMap.get(key);

if (reference != null) {

result = reference.get();

}

return result;

}

@Override

public boolean put(String key, Bitmap value) {

softMap.put(key, createReference(value));

return true;

}

@Override

public Bitmap remove(String key) {

Reference<Bitmap> bmpRef = softMap.remove(key);

return bmpRef == null ? null : bmpRef.get();

}

@Override

public Collection<String> keys() {

synchronized (softMap) {

return new HashSet<String>(softMap.keySet());

}

}

@Override

public void clear() {

softMap.clear();

}

/** Creates {@linkplain Reference not strong} reference of value */

protected abstract Reference<Bitmap> createReference(Bitmap value);

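}

A quick aside on strong references (plain object references; Java has no StrongReference class), soft references (SoftReference), weak references (WeakReference) and phantom references (PhantomReference):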

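Strong reference: an ordinary object reference. The GC never reclaims an object that is reachable through a strong reference. Even when memory runs out, it would rather throw an OutOfMemoryError.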

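Soft reference: the GC reclaims an object reachable only through soft references only when memory is running low. All such references are guaranteed to be cleared before an OutOfMemoryError is thrown.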

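Weak reference: whether or not memory is sufficient, the GC reclaims an object as soon as it finds that the object is reachable only through weak references.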

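Phantom reference: an object reachable only through phantom references is treated as if it had no references at all and may be reclaimed at any time. PhantomReference.get() always returns null.

WeakMemoryCache: stores Bitmaps as weak references. As soon as the GC finds that a Bitmap is reachable only through this cache's weak reference, the Bitmap is reclaimed.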

public class WeakMemoryCache extends BaseMemoryCache {

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

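}

LimitedMemoryCache: a memory cache with a capped total size. It is the parent class of several of ImageLoader's memory caches: FIFOLimitedMemoryCache, LargestLimitedMemoryCache, LRULimitedMemoryCache and UsingFreqLimitedMemoryCache.

It keeps strong references in a hardCache list whose total size is capped at sizeLimit. Every Bitmap is also stored in BaseMemoryCache's non-strong reference map, so a Bitmap evicted from hardCache can still be returned until the GC collects it. A Bitmap whose size is not smaller than sizeLimit never enters hardCache, and put() returns false, although the Bitmap is still stored in the reference map.

Each subclass supplies its own eviction policy through removeNext():

FIFO: when the cache is full, evict the Bitmap that was added earliest (first in, first out).

Largest: when the cache is full, evict the Bitmap that takes up the most space.

LRU: when the cache is full, evict the Bitmap that has gone unused the longest.

UsingFreq: when the cache is full, evict the Bitmap that has been used the fewest times, that is, the one whose get() was called least often.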
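The eviction loop in put() plus a pluggable removeNext() is a classic template method. Below is a minimal sketch of just the hard-cache bookkeeping with a FIFO policy on top. These are my simplifications: byte[] stands in for Bitmap, a value's size is its array length, and the weak/soft reference map is left out. It is not a drop-in replacement for the library class.

```java
import java.util.LinkedList;
import java.util.List;

// Simplified stand-in for LimitedMemoryCache's hard-cache bookkeeping.
// Assumptions: values are byte[] and a value's size is its length; the weak/soft map is omitted.
abstract class SizeLimitedCacheSketch {
    private final int sizeLimit;
    private int cacheSize;
    private final List<byte[]> hardCache = new LinkedList<>(); // strong references

    SizeLimitedCacheSketch(int sizeLimit) {
        this.sizeLimit = sizeLimit;
    }

    synchronized boolean put(byte[] value) {
        int valueSize = value.length;
        if (valueSize >= sizeLimit) {
            return false; // too large for the hard cache (the original still keeps it in the reference map)
        }
        // Evict until the new value fits; the subclass decides which value goes first.
        while (cacheSize + valueSize > sizeLimit) {
            byte[] removed = removeNext();
            if (hardCache.remove(removed)) {
                cacheSize -= removed.length;
            }
        }
        hardCache.add(value);
        cacheSize += valueSize;
        onAdded(value);
        return true;
    }

    synchronized int size() {
        return cacheSize;
    }

    synchronized boolean contains(byte[] value) {
        return hardCache.contains(value);
    }

    protected abstract void onAdded(byte[] value);

    protected abstract byte[] removeNext();
}

// FIFO policy: evict in insertion order, like FIFOLimitedMemoryCache's queue.remove(0).
class FifoCacheSketch extends SizeLimitedCacheSketch {
    private final LinkedList<byte[]> queue = new LinkedList<>();

    FifoCacheSketch(int sizeLimit) {
        super(sizeLimit);
    }

    @Override
    protected void onAdded(byte[] value) {
        queue.add(value);
    }

    @Override
    protected byte[] removeNext() {
        return queue.removeFirst();
    }
}
```

With a limit of 10 and three 4-byte values, the third put() evicts the first value. The cache then holds 8 bytes.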

public abstract class LimitedMemoryCache extends BaseMemoryCache {

private static final int MAX_NORMAL_CACHE_SIZE_IN_MB = 16;

private static final int MAX_NORMAL_CACHE_SIZE = MAX_NORMAL_CACHE_SIZE_IN_MB * 1024 * 1024;

private final int sizeLimit;

private final AtomicInteger cacheSize;

/**

* Contains strong references to stored objects. Each next object is added last. If hard cache size will exceed

* limit then first object is deleted (but it continue exist at {@link #softMap} and can be collected by GC at any

* time)

*/

private final List<Bitmap> hardCache = Collections.synchronizedList(new LinkedList<Bitmap>());

/** @param sizeLimit Maximum size for cache (in bytes) */

public LimitedMemoryCache(int sizeLimit) {

this.sizeLimit = sizeLimit;

cacheSize = new AtomicInteger();

if (sizeLimit > MAX_NORMAL_CACHE_SIZE) {

L.w("You set too large memory cache size (more than %1$d Mb)", MAX_NORMAL_CACHE_SIZE_IN_MB);

}

}

@Override

public boolean put(String key, Bitmap value) {

boolean putSuccessfully = false;

// Try to add value to hard cache

int valueSize = getSize(value);

int sizeLimit = getSizeLimit();

int curCacheSize = cacheSize.get();

if (valueSize < sizeLimit) {

while (curCacheSize + valueSize > sizeLimit) {

Bitmap removedValue = removeNext();

if (hardCache.remove(removedValue)) {

curCacheSize = cacheSize.addAndGet(-getSize(removedValue));

}

}

hardCache.add(value);

cacheSize.addAndGet(valueSize);

putSuccessfully = true;

}

// Add value to soft cache

super.put(key, value);

return putSuccessfully;

}

@Override

public Bitmap remove(String key) {

Bitmap value = super.get(key);

if (value != null) {

if (hardCache.remove(value)) {

cacheSize.addAndGet(-getSize(value));

}

}

return super.remove(key);

}

@Override

public void clear() {

hardCache.clear();

cacheSize.set(0);

super.clear();

}

protected int getSizeLimit() {

return sizeLimit;

}

protected abstract int getSize(Bitmap value);

protected abstract Bitmap removeNext();

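}

FIFOLimitedMemoryCache: manages the cache first in, first out. Internally it keeps a LinkedList. Each put appends to the end of the list. When the total size of the Bitmaps exceeds the limit, Bitmaps are removed from the head of the LinkedList until the new Bitmap fits.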

public class FIFOLimitedMemoryCache extends LimitedMemoryCache {

private final List<Bitmap> queue = Collections.synchronizedList(new LinkedList<Bitmap>());

public FIFOLimitedMemoryCache(int sizeLimit) {

super(sizeLimit);

}

@Override

public boolean put(String key, Bitmap value) {

if (super.put(key, value)) {

queue.add(value);

return true;

} else {

return false;

}

}

@Override

public Bitmap remove(String key) {

Bitmap value = super.get(key);

if (value != null) {

queue.remove(value);

}

return super.remove(key);

}

@Override

public void clear() {

queue.clear();

super.clear();

}

@Override

protected int getSize(Bitmap value) {

return value.getRowBytes() * value.getHeight();

}

@Override

protected Bitmap removeNext() {

return queue.remove(0);

}

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

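}

LargestLimitedMemoryCache: internally keeps a HashMap<Bitmap, Integer> that maps each Bitmap to the space it occupies. When the cache exceeds its limit, it evicts the largest Bitmap first, repeating until the new Bitmap fits. Finding the largest entry requires a full scan of the map, so each eviction costs O(n).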
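The core of this class is its removeNext(): a linear scan for the biggest entry, done while holding the synchronized map's lock. Here is a standalone sketch of that scan. It assumes byte[] in place of Bitmap, with the array length standing in for getRowBytes() * getHeight().

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Sketch of LargestLimitedMemoryCache.removeNext(); byte[] stands in for Bitmap.
class LargestFirstSketch {
    // byte[] uses identity-based equals/hashCode, so each array is its own key.
    private final Map<byte[], Integer> valueSizes = Collections.synchronizedMap(new HashMap<>());

    void track(byte[] value) {
        valueSizes.put(value, value.length); // length stands in for getRowBytes() * getHeight()
    }

    // Full scan for the largest value: O(n) per eviction.
    byte[] removeNext() {
        byte[] largest = null;
        int maxSize = -1;
        synchronized (valueSizes) { // iterating a synchronizedMap requires holding its lock
            for (Map.Entry<byte[], Integer> e : valueSizes.entrySet()) {
                if (e.getValue() > maxSize) {
                    maxSize = e.getValue();
                    largest = e.getKey();
                }
            }
        }
        if (largest != null) {
            valueSizes.remove(largest);
        }
        return largest;
    }
}
```

This policy frees space in as few evictions as possible. The trade-off is that one large, frequently viewed image is always the first to go.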

public class LargestLimitedMemoryCache extends LimitedMemoryCache {

/**

* Contains strong references to stored objects (keys) and sizes of the objects. If hard cache

* size will exceed limit then object with the largest size is deleted (but it continue exist at

* {@link #softMap} and can be collected by GC at any time)

*/

private final Map<Bitmap, Integer> valueSizes = Collections.synchronizedMap(new HashMap<Bitmap, Integer>());

public LargestLimitedMemoryCache(int sizeLimit) {

super(sizeLimit);

}

@Override

public boolean put(String key, Bitmap value) {

if (super.put(key, value)) {

valueSizes.put(value, getSize(value));

return true;

} else {

return false;

}

}

@Override

public Bitmap remove(String key) {

Bitmap value = super.get(key);

if (value != null) {

valueSizes.remove(value);

}

return super.remove(key);

}

@Override

public void clear() {

valueSizes.clear();

super.clear();

}

@Override

protected int getSize(Bitmap value) {

return value.getRowBytes() * value.getHeight();

}

@Override

protected Bitmap removeNext() {

Integer maxSize = null;

Bitmap largestValue = null;

Set<Entry<Bitmap, Integer>> entries = valueSizes.entrySet();

synchronized (valueSizes) {

for (Entry<Bitmap, Integer> entry : entries) {

if (largestValue == null) {

largestValue = entry.getKey();

maxSize = entry.getValue();

} else {

Integer size = entry.getValue();

if (size > maxSize) {

maxSize = size;

largestValue = entry.getKey();

}

}

}

}

valueSizes.remove(largestValue);

return largestValue;

}

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

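}

LRULimitedMemoryCache: evicts Bitmaps with the LRU (least recently used) policy. Internally it again relies on a LinkedHashMap rather than a plain LinkedList. If you follow the get and put code in the LinkedHashMap source the author traced, you will find a method named makeTail. It moves the entry touched by each get or put to the tail of the linked list. The entry at the head is therefore always the one that has gone unused the longest, so removeNext() can evict it by taking the first element from the iterator. That is also why get() here calls lruCache.get(key) purely for its side effect of updating the order.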

(Note: to get this behavior, you must pass true as the third LinkedHashMap constructor argument, accessOrder.)

[@param accessOrder: {@code true} if the ordering should be done based on the last access (from least-recently accessed to most-recently accessed), and {@code false} if the ordering should be the order in which the entries were inserted.]

public class LRULimitedMemoryCache extends LimitedMemoryCache {

private static final int INITIAL_CAPACITY = 10;

private static final float LOAD_FACTOR = 1.1f;

/** Cache providing Least-Recently-Used logic */

private final Map<String, Bitmap> lruCache = Collections.synchronizedMap(new LinkedHashMap<String, Bitmap>(INITIAL_CAPACITY, LOAD_FACTOR, true));

/** @param maxSize Maximum sum of the sizes of the Bitmaps in this cache */

public LRULimitedMemoryCache(int maxSize) {

super(maxSize);

}

@Override

public boolean put(String key, Bitmap value) {

if (super.put(key, value)) {

lruCache.put(key, value);

return true;

} else {

return false;

}

}

@Override

public Bitmap get(String key) {

lruCache.get(key); // call "get" for LRU logic

return super.get(key);

}

@Override

public Bitmap remove(String key) {

lruCache.remove(key);

return super.remove(key);

}

@Override

public void clear() {

lruCache.clear();

super.clear();

}

@Override

protected int getSize(Bitmap value) {

return value.getRowBytes() * value.getHeight();

}

@Override

protected Bitmap removeNext() {

Bitmap mostLongUsedValue = null;

synchronized (lruCache) {

Iterator<Entry<String, Bitmap>> it = lruCache.entrySet().iterator();

if (it.hasNext()) {

Entry<String, Bitmap> entry = it.next();

mostLongUsedValue = entry.getValue();

it.remove();

}

}

return mostLongUsedValue;

}

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

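}

UsingFreqLimitedMemoryCache: internally keeps a HashMap<Bitmap, Integer> that records how many times each Bitmap has been used; each successful get() increments the count. When the cache exceeds its limit, it evicts the least-used Bitmaps first.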
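The counting and least-used selection can be sketched as below. It is not the library code; plain Objects stand in for Bitmaps. One deliberate difference: the original increments with a separate get() followed by put(), whereas this sketch uses computeIfPresent. On a Collections.synchronizedMap (Java 8+) computeIfPresent runs under the map's lock, so two concurrent reads cannot lose an increment.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Sketch of UsingFreqLimitedMemoryCache's bookkeeping; Object stands in for Bitmap.
class LeastUsedSketch {
    private final Map<Object, Integer> usingCounts = Collections.synchronizedMap(new HashMap<>());

    void put(Object value) {
        usingCounts.put(value, 0); // a newly cached value starts with zero uses
    }

    // Called on each successful get(); a no-op for values that are not tracked.
    void recordGet(Object value) {
        usingCounts.computeIfPresent(value, (k, count) -> count + 1);
    }

    // Full scan for the smallest count, like the original removeNext().
    Object removeNext() {
        Object leastUsed = null;
        int minCount = Integer.MAX_VALUE;
        synchronized (usingCounts) {
            for (Map.Entry<Object, Integer> e : usingCounts.entrySet()) {
                if (e.getValue() < minCount) {
                    minCount = e.getValue();
                    leastUsed = e.getKey();
                }
            }
        }
        if (leastUsed != null) {
            usingCounts.remove(leastUsed);
        }
        return leastUsed;
    }
}
```

A side effect of this policy worth knowing: a freshly added image starts at zero uses, so it is a prime eviction candidate until it has been read a few times.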

public class UsingFreqLimitedMemoryCache extends LimitedMemoryCache {

/**

* Contains strong references to stored objects (keys) and last object usage date (in milliseconds). If hard cache

* size will exceed limit then object with the least frequently usage is deleted (but it continue exist at

* {@link #softMap} and can be collected by GC at any time)

*/

private final Map<Bitmap, Integer> usingCounts = Collections.synchronizedMap(new HashMap<Bitmap, Integer>());

public UsingFreqLimitedMemoryCache(int sizeLimit) {

super(sizeLimit);

}

@Override

public boolean put(String key, Bitmap value) {

if (super.put(key, value)) {

usingCounts.put(value, 0);

return true;

} else {

return false;

}

}

@Override

public Bitmap get(String key) {

Bitmap value = super.get(key);

// Increment usage count for value if value is contained in hardCache

if (value != null) {

Integer usageCount = usingCounts.get(value);

if (usageCount != null) {

usingCounts.put(value, usageCount + 1);

}

}

return value;

}

@Override

public Bitmap remove(String key) {

Bitmap value = super.get(key);

if (value != null) {

usingCounts.remove(value);

}

return super.remove(key);

}

@Override

public void clear() {

usingCounts.clear();

super.clear();

}

@Override

protected int getSize(Bitmap value) {

return value.getRowBytes() * value.getHeight();

}

@Override

protected Bitmap removeNext() {

Integer minUsageCount = null;

Bitmap leastUsedValue = null;

Set<Entry<Bitmap, Integer>> entries = usingCounts.entrySet();

synchronized (usingCounts) {

for (Entry<Bitmap, Integer> entry : entries) {

if (leastUsedValue == null) {

leastUsedValue = entry.getKey();

minUsageCount = entry.getValue();

} else {

Integer lastValueUsage = entry.getValue();

if (lastValueUsage < minUsageCount) {

minUsageCount = lastValueUsage;

leastUsedValue = entry.getKey();

}

}

}

}

usingCounts.remove(leastUsedValue);

return leastUsedValue;

}

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

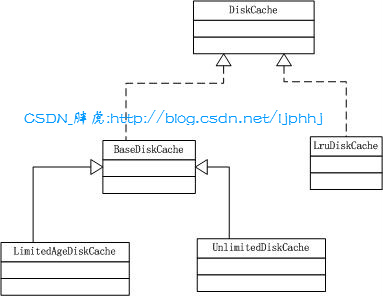

}SDCard缓存机制

(Package: com.nostra13.universalimageloader.cache.disc.*)

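Before covering the SD card cache, let's look at how ImageLoader generates file names. Cached image files on the SD card need unique names, so ImageLoader provides several file-name generation strategies.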

Interface: FileNameGenerator. Implementations: HashCodeFileNameGenerator, Md5FileNameGenerator

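As the class names suggest, HashCodeFileNameGenerator uses the hash code of the image URI as the cache file name. Md5FileNameGenerator instead computes the MD5 digest of the image URI and uses that as the file name. A 32-bit hash code gives a shorter name, but two different URIs can collide. An MD5 digest makes collisions far less likely.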
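Here is a sketch of the two naming ideas using only the JDK (String.hashCode() and java.security.MessageDigest). The class names are hypothetical, and I am not claiming this reproduces UIL's exact output format. In particular, the MD5 variant here prints the digest as lowercase hex, which may differ from how the library encodes it.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Illustrative file-name generators; not UIL's exact output format.
interface NameGenerator {
    String generate(String imageUri);
}

class HashCodeNameSketch implements NameGenerator {
    // Short, but distinct URIs can collide (32-bit hash), and the name may start with '-'.
    public String generate(String imageUri) {
        return String.valueOf(imageUri.hashCode());
    }
}

class Md5NameSketch implements NameGenerator {
    // 128-bit digest rendered as 32 lowercase hex characters.
    public String generate(String imageUri) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                    .digest(imageUri.getBytes(StandardCharsets.UTF_8));
            StringBuilder sb = new StringBuilder(32);
            for (byte b : digest) {
                sb.append(String.format("%02x", b & 0xff));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }
}
```

For example, "hello" maps to 5d41402abc4b2a76b9719d911017c592 with the MD5 variant and to its hash code with the other. Both results consist only of characters that are safe in file names.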

DiskCache: an interface that defines the basic SD card cache operations.

public interface DiskCache {

/**

* Returns root directory of disk cache

*

* @return Root directory of disk cache

*/

File getDirectory();

/**

* Returns file of cached image

*

* @param imageUri Original image URI

* @return File of cached image or <b>null</b> if image wasn't cached

*/

File get(String imageUri);

/**

* Saves image stream in disk cache.

* Incoming image stream shouldn't be closed in this method.

*

* @param imageUri Original image URI

* @param imageStream Input stream of image (shouldn't be closed in this method)

* @param listener Listener for saving progress, can be ignored if you don't use

* {@linkplain com.nostra13.universalimageloader.core.listener.ImageLoadingProgressListener

* progress listener} in ImageLoader calls

* @return <b>true</b> - if image was saved successfully; <b>false</b> - if image wasn't saved in disk cache.

* @throws java.io.IOException

*/

boolean save(String imageUri, InputStream imageStream, IoUtils.CopyListener listener) throws IOException;

/**

* Saves image bitmap in disk cache.

*

* @param imageUri Original image URI

* @param bitmap Image bitmap

* @return <b>true</b> - if bitmap was saved successfully; <b>false</b> - if bitmap wasn't saved in disk cache.

* @throws IOException

*/

boolean save(String imageUri, Bitmap bitmap) throws IOException;

/**

* Removes image file associated with incoming URI

*

* @param imageUri Image URI

* @return <b>true</b> - if image file is deleted successfully; <b>false</b> - if image file doesn't exist for

* incoming URI or image file can't be deleted.

*/

boolean remove(String imageUri);

/** Closes disk cache, releases resources. */

void close();

/** Clears disk cache. */

void clear();

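}

BaseDiskCache: implements the basic file operations. Its fields include a cache directory and a reserve (fallback) cache directory. get(imageUri) calls getFile(imageUri), which uses the configured FileNameGenerator to build a File in the cache directory; that file may not exist yet. If the cache directory is missing and cannot be created, getFile() falls back to the reserve directory. The class mainly handles saving images, either by copying a stream or by compressing a Bitmap, and deciding where the local cache lives. The two methods to study are save() and getFile(). Both save() overloads first write to a temporary file with the .tmp suffix and rename it to the final name only after the write succeeds. If anything fails, they delete the temporary file.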
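The write-to-temp-then-rename pattern in save() is what keeps half-written images out of the cache. Below is a minimal standalone sketch of it. Two simplifications are mine: a plain read/write loop replaces IoUtils.copyStream (so there is no progress listener that could abort the copy), and the 32 KB buffer size mirrors DEFAULT_BUFFER_SIZE.

```java
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

class TmpThenRenameSketch {
    static boolean save(File imageFile, InputStream imageStream) throws IOException {
        File tmpFile = new File(imageFile.getAbsolutePath() + ".tmp");
        boolean loaded = false;
        try {
            // Write everything to the temporary file first; imageStream is NOT closed here (the caller owns it).
            try (OutputStream os = new BufferedOutputStream(new FileOutputStream(tmpFile), 32 * 1024)) {
                byte[] buffer = new byte[8 * 1024];
                int n;
                while ((n = imageStream.read(buffer)) != -1) {
                    os.write(buffer, 0, n);
                }
            }
            loaded = true;
        } finally {
            // Publish only a complete file: a failed copy or rename never leaves a partial file at the final path.
            if (loaded && !tmpFile.renameTo(imageFile)) {
                loaded = false;
            }
            if (!loaded) {
                tmpFile.delete();
            }
        }
        return loaded;
    }
}
```

Because readers only ever look up the final name, they see either nothing or a complete file. If the process dies mid-download, only a stray ".tmp" file is left behind.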

public abstract class BaseDiskCache implements DiskCache {

/** {@value} */

public static final int DEFAULT_BUFFER_SIZE = 32 * 1024; // 32 Kb

/** {@value} */

public static final Bitmap.CompressFormat DEFAULT_COMPRESS_FORMAT = Bitmap.CompressFormat.PNG;

/** {@value} */

public static final int DEFAULT_COMPRESS_QUALITY = 100;

private static final String ERROR_ARG_NULL = " argument must be not null";

private static final String TEMP_IMAGE_POSTFIX = ".tmp";

protected final File cacheDir;

protected final File reserveCacheDir;

protected final FileNameGenerator fileNameGenerator;

protected int bufferSize = DEFAULT_BUFFER_SIZE;

protected Bitmap.CompressFormat compressFormat = DEFAULT_COMPRESS_FORMAT;

protected int compressQuality = DEFAULT_COMPRESS_QUALITY;

/** @param cacheDir Directory for file caching */

public BaseDiskCache(File cacheDir) {

this(cacheDir, null);

}

/**

* @param cacheDir Directory for file caching

* @param reserveCacheDir null-ok; Reserve directory for file caching. It's used when the primary directory isn't available.

*/

public BaseDiskCache(File cacheDir, File reserveCacheDir) {

this(cacheDir, reserveCacheDir, DefaultConfigurationFactory.createFileNameGenerator());

}

/**

* @param cacheDir Directory for file caching

* @param reserveCacheDir null-ok; Reserve directory for file caching. It's used when the primary directory isn't available.

* @param fileNameGenerator {@linkplain com.nostra13.universalimageloader.cache.disc.naming.FileNameGenerator

* Name generator} for cached files

*/

public BaseDiskCache(File cacheDir, File reserveCacheDir, FileNameGenerator fileNameGenerator) {

if (cacheDir == null) {

throw new IllegalArgumentException("cacheDir" + ERROR_ARG_NULL);

}

if (fileNameGenerator == null) {

throw new IllegalArgumentException("fileNameGenerator" + ERROR_ARG_NULL);

}

this.cacheDir = cacheDir;

this.reserveCacheDir = reserveCacheDir;

this.fileNameGenerator = fileNameGenerator;

}

@Override

public File getDirectory() {

return cacheDir;

}

@Override

public File get(String imageUri) {

return getFile(imageUri);

}

@Override

public boolean save(String imageUri, InputStream imageStream, IoUtils.CopyListener listener) throws IOException {

File imageFile = getFile(imageUri);

File tmpFile = new File(imageFile.getAbsolutePath() + TEMP_IMAGE_POSTFIX);

boolean loaded = false;

try {

OutputStream os = new BufferedOutputStream(new FileOutputStream(tmpFile), bufferSize);

try {

loaded = IoUtils.copyStream(imageStream, os, listener, bufferSize);

} finally {

IoUtils.closeSilently(os);

}

} finally {

if (loaded && !tmpFile.renameTo(imageFile)) {

loaded = false;

}

if (!loaded) {

tmpFile.delete();

}

}

return loaded;

}

@Override

public boolean save(String imageUri, Bitmap bitmap) throws IOException {

File imageFile = getFile(imageUri);

File tmpFile = new File(imageFile.getAbsolutePath() + TEMP_IMAGE_POSTFIX);

OutputStream os = new BufferedOutputStream(new FileOutputStream(tmpFile), bufferSize);

boolean savedSuccessfully = false;

try {

savedSuccessfully = bitmap.compress(compressFormat, compressQuality, os);

} finally {

IoUtils.closeSilently(os);

if (savedSuccessfully && !tmpFile.renameTo(imageFile)) {

savedSuccessfully = false;

}

if (!savedSuccessfully) {

tmpFile.delete();

}

}

bitmap.recycle();

return savedSuccessfully;

}

@Override

public boolean remove(String imageUri) {

return getFile(imageUri).delete();

}

@Override

public void close() {

// Nothing to do

}

@Override

public void clear() {

File[] files = cacheDir.listFiles();

if (files != null) {

for (File f : files) {

f.delete();

}

}

}

/** Returns file object (not null) for incoming image URI. File object can reference to non-existing file. */

protected File getFile(String imageUri) {

String fileName = fileNameGenerator.generate(imageUri);

File dir = cacheDir;

if (!cacheDir.exists() && !cacheDir.mkdirs()) {

if (reserveCacheDir != null && (reserveCacheDir.exists() || reserveCacheDir.mkdirs())) {

dir = reserveCacheDir;

}

}

return new File(dir, fileName);

}

public void setBufferSize(int bufferSize) {

this.bufferSize = bufferSize;

}

public void setCompressFormat(Bitmap.CompressFormat compressFormat) {

this.compressFormat = compressFormat;

}

public void setCompressQuality(int compressQuality) {

this.compressQuality = compressQuality;

}

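}

LimitedAgeDiskCache: the same idea as LimitedAgeMemoryCache. The memory version stores each Bitmap's put time in a Map and checks for expiry in get(). This disk version uses the file's last-modified time (File.lastModified()) and caches it in a Map after the first check. save() also stamps the file with the current time via setLastModified(). If the file has expired, get() deletes it. get() still returns the File object, which then no longer exists, so a caller should check exists() on the result.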
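Stripped of the in-memory date map, the expiry rule reduces to a comparison against File.lastModified(). The sketch below shows that rule on its own. Two assumptions are mine: the current time is passed in as nowMillis so the check is testable, and unlike the original get() this helper returns null for an expired file instead of the (now deleted) File object.

```java
import java.io.File;

class FileAgeSketch {
    // Returns the file if it is still fresh; deletes it and returns null once it is older than maxAgeSeconds.
    // nowMillis is passed in (an assumption for testability) instead of calling System.currentTimeMillis().
    static File getIfFresh(File file, long maxAgeSeconds, long nowMillis) {
        if (!file.exists()) {
            return null;
        }
        long ageMillis = nowMillis - file.lastModified();
        if (ageMillis > maxAgeSeconds * 1000) {
            file.delete();
            return null;
        }
        return file;
    }
}
```

Relying on lastModified() means the age survives app restarts for free, since the file system stores it. It also means anything else that touches the file's timestamp resets its age.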

public class LimitedAgeDiskCache extends BaseDiskCache {

private final long maxFileAge;

private final Map<File, Long> loadingDates = Collections.synchronizedMap(new HashMap<File, Long>());

/**

* @param cacheDir Directory for file caching

* @param maxAge Max file age (in seconds). If file age will exceed this value then it'll be removed on next

* treatment (and therefore be reloaded).

*/

public LimitedAgeDiskCache(File cacheDir, long maxAge) {

this(cacheDir, null, DefaultConfigurationFactory.createFileNameGenerator(), maxAge);

}

/**

* @param cacheDir Directory for file caching

* @param maxAge Max file age (in seconds). If file age will exceed this value then it'll be removed on next

* treatment (and therefore be reloaded).

*/

public LimitedAgeDiskCache(File cacheDir, File reserveCacheDir, long maxAge) {

this(cacheDir, reserveCacheDir, DefaultConfigurationFactory.createFileNameGenerator(), maxAge);

}

/**

* @param cacheDir Directory for file caching

* @param reserveCacheDir null-ok; Reserve directory for file caching. It's used when the primary directory isn't available.

* @param fileNameGenerator Name generator for cached files

* @param maxAge Max file age (in seconds). If file age will exceed this value then it'll be removed on next

* treatment (and therefore be reloaded).

*/

public LimitedAgeDiskCache(File cacheDir, File reserveCacheDir, FileNameGenerator fileNameGenerator, long maxAge) {

super(cacheDir, reserveCacheDir, fileNameGenerator);

this.maxFileAge = maxAge * 1000; // to milliseconds

}

@Override

public File get(String imageUri) {

File file = super.get(imageUri);

if (file != null && file.exists()) {

boolean cached;

Long loadingDate = loadingDates.get(file);

if (loadingDate == null) {

cached = false;

loadingDate = file.lastModified();

} else {

cached = true;

}

if (System.currentTimeMillis() - loadingDate > maxFileAge) {

file.delete();

loadingDates.remove(file);

} else if (!cached) {

loadingDates.put(file, loadingDate);

}

}

return file;

}

@Override

public boolean save(String imageUri, InputStream imageStream, IoUtils.CopyListener listener) throws IOException {

boolean saved = super.save(imageUri, imageStream, listener);

rememberUsage(imageUri);

return saved;

}

@Override

public boolean save(String imageUri, Bitmap bitmap) throws IOException {

boolean saved = super.save(imageUri, bitmap);

rememberUsage(imageUri);

return saved;

}

@Override

public boolean remove(String imageUri) {

loadingDates.remove(getFile(imageUri));

return super.remove(imageUri);

}

@Override

public void clear() {

super.clear();

loadingDates.clear();

}

private void rememberUsage(String imageUri) {

File file = getFile(imageUri);

long currentTime = System.currentTimeMillis();

file.setLastModified(currentTime);

loadingDates.put(file, currentTime);

}

}

UnlimitedDiskCache: adds nothing on top of BaseDiskCache. It is an SD card cache with no limits at all.

public class UnlimitedDiskCache extends BaseDiskCache {

/** @param cacheDir Directory for file caching */

public UnlimitedDiskCache(File cacheDir) {

super(cacheDir);

}

/**

* @param cacheDir Directory for file caching

* @param reserveCacheDir null-ok; Reserve directory for file caching. It's used when the primary directory isn't available.

*/

public UnlimitedDiskCache(File cacheDir, File reserveCacheDir) {

super(cacheDir, reserveCacheDir);

}

/**

* @param cacheDir Directory for file caching

* @param reserveCacheDir null-ok; Reserve directory for file caching. It's used when the primary directory isn't available.

* @param fileNameGenerator {@linkplain com.nostra13.universalimageloader.cache.disc.naming.FileNameGenerator

* Name generator} for cached files

*/

public UnlimitedDiskCache(File cacheDir, File reserveCacheDir, FileNameGenerator fileNameGenerator) {

super(cacheDir, reserveCacheDir, fileNameGenerator);

}

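}

An extended SD card cache: DiskLruCache. LruDiskCache also uses it internally, which shows how central it is. Since it follows an LRU policy, it must know which file was used most recently and which has gone unused the longest. How does it track that? The class comment below gives the key: every cache operation is appended to a journal file, and READ lines record accesses for LRU. [This is the most complex of the SD card caches. I hope you will read its implementation yourselves; I won't analyze it here. If you're interested, once you've read it, let's discuss in the comments.]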
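To make the journal idea concrete, here is a heavily simplified replay sketch. It is a hypothetical helper of mine, not the class's real code. It assumes the five header lines have already been skipped, it ignores the value lengths on CLEAN lines, and it skips all validation and file cleanup. It only shows how replaying CLEAN/DIRTY/REMOVE/READ records into an access-ordered LinkedHashMap recovers the LRU order.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;

// Hypothetical sketch: rebuild LRU order from journal records (header already skipped).
class JournalReplaySketch {
    static List<String> lruOrder(List<String> records) {
        // accessOrder = true: get/put move a key to the end, so the first key is the eviction candidate.
        LinkedHashMap<String, Boolean> entries = new LinkedHashMap<>(16, 0.75f, true);
        for (String line : records) {
            String[] parts = line.split(" ");
            String state = parts[0];
            String key = parts[1];
            if (state.equals("REMOVE")) {
                entries.remove(key);
            } else if (state.equals("READ")) {
                entries.get(key); // touch: moves the key to the most-recently-used end (no-op if absent)
            } else if (state.equals("CLEAN")) {
                entries.put(key, Boolean.TRUE); // published and readable
            } else if (state.equals("DIRTY")) {
                entries.put(key, Boolean.FALSE); // being written; unusable until a CLEAN follows
            }
        }
        // A DIRTY line with no later CLEAN means an interrupted edit: drop that entry.
        entries.values().removeIf(clean -> !clean);
        return new ArrayList<>(entries.keySet());
    }
}
```

Replaying the eight record lines from the sample journal in the class comment gives [1ab96a171faeeee38496d8b330771a7a, 3400330d1dfc7f3f7f4b8d4d803dfcf6]. 335c… was removed, and the final READ made 3400… the most recently used, so 1ab9… would be evicted first.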

/**

* A cache that uses a bounded amount of space on a filesystem. Each cache

* entry has a string key and a fixed number of values. Each key must match

* the regex <strong>[a-z0-9_-]{1,64}</strong>. Values are byte sequences,

* accessible as streams or files. Each value must be between {@code 0} and

* {@code Integer.MAX_VALUE} bytes in length.

*

* <p>The cache stores its data in a directory on the filesystem. This

* directory must be exclusive to the cache; the cache may delete or overwrite

* files from its directory. It is an error for multiple processes to use the

* same cache directory at the same time.

*

* <p>This cache limits the number of bytes that it will store on the

* filesystem. When the number of stored bytes exceeds the limit, the cache will

* remove entries in the background until the limit is satisfied. The limit is

* not strict: the cache may temporarily exceed it while waiting for files to be

* deleted. The limit does not include filesystem overhead or the cache

* journal so space-sensitive applications should set a conservative limit.

*

* <p>Clients call {@link #edit} to create or update the values of an entry. An

* entry may have only one editor at one time; if a value is not available to be

* edited then {@link #edit} will return null.

* <ul>

* <li>When an entry is being <strong>created</strong> it is necessary to

* supply a full set of values; the empty value should be used as a

* placeholder if necessary.

* <li>When an entry is being <strong>edited</strong>, it is not necessary

* to supply data for every value; values default to their previous

* value.

* </ul>

* Every {@link #edit} call must be matched by a call to {@link Editor#commit}

* or {@link Editor#abort}. Committing is atomic: a read observes the full set

* of values as they were before or after the commit, but never a mix of values.

*

* <p>Clients call {@link #get} to read a snapshot of an entry. The read will

* observe the value at the time that {@link #get} was called. Updates and

* removals after the call do not impact ongoing reads.

*

* <p>This class is tolerant of some I/O errors. If files are missing from the

* filesystem, the corresponding entries will be dropped from the cache. If

* an error occurs while writing a cache value, the edit will fail silently.

* Callers should handle other problems by catching {@code IOException} and

* responding appropriately.

*/

final class DiskLruCache implements Closeable {

static final String JOURNAL_FILE = "journal";

static final String JOURNAL_FILE_TEMP = "journal.tmp";

static final String JOURNAL_FILE_BACKUP = "journal.bkp";

static final String MAGIC = "libcore.io.DiskLruCache";

static final String VERSION_1 = "1";

static final long ANY_SEQUENCE_NUMBER = -1;

static final Pattern LEGAL_KEY_PATTERN = Pattern.compile("[a-z0-9_-]{1,64}");

private static final String CLEAN = "CLEAN";

private static final String DIRTY = "DIRTY";

private static final String REMOVE = "REMOVE";

private static final String READ = "READ";

/*

* This cache uses a journal file named "journal". A typical journal file

* looks like this:

* libcore.io.DiskLruCache

* 1

* 100

* 2

*

* CLEAN 3400330d1dfc7f3f7f4b8d4d803dfcf6 832 21054

* DIRTY 335c4c6028171cfddfbaae1a9c313c52

* CLEAN 335c4c6028171cfddfbaae1a9c313c52 3934 2342

* REMOVE 335c4c6028171cfddfbaae1a9c313c52

* DIRTY 1ab96a171faeeee38496d8b330771a7a

* CLEAN 1ab96a171faeeee38496d8b330771a7a 1600 234

* READ 335c4c6028171cfddfbaae1a9c313c52

* READ 3400330d1dfc7f3f7f4b8d4d803dfcf6

*

* The first five lines of the journal form its header. They are the

* constant string "libcore.io.DiskLruCache", the disk cache's version,

* the application's version, the value count, and a blank line.

*

* Each of the subsequent lines in the file is a record of the state of a

* cache entry. Each line contains space-separated values: a state, a key,

* and optional state-specific values.

* o DIRTY lines track that an entry is actively being created or updated.

* Every successful DIRTY action should be followed by a CLEAN or REMOVE

* action. DIRTY lines without a matching CLEAN or REMOVE indicate that

* temporary files may need to be deleted.

* o CLEAN lines track a cache entry that has been successfully published

* and may be read. A publish line is followed by the lengths of each of

* its values.

* o READ lines track accesses for LRU.

* o REMOVE lines track entries that have been deleted.

*

* The journal file is appended to as cache operations occur. The journal may

* occasionally be compacted by dropping redundant lines. A temporary file named

* "journal.tmp" will be used during compaction; that file should be deleted if

* it exists when the cache is opened.

*/

private final File directory;

private final File journalFile;

private final File journalFileTmp;

private final File journalFileBackup;

private final int appVersion;

private long maxSize;

private int maxFileCount;

private final int valueCount;

private long size = 0;

private int fileCount = 0;

private Writer journalWriter;

private final LinkedHashMap<String, Entry> lruEntries =

new LinkedHashMap<String, Entry>(0, 0.75f, true);

private int redundantOpCount;

/**

* To differentiate between old and current snapshots, each entry is given

* a sequence number each time an edit is committed. A snapshot is stale if

* its sequence number is not equal to its entry's sequence number.

*/

private long nextSequenceNumber = 0;

/** This cache uses a single background thread to evict entries. */

final ThreadPoolExecutor executorService =

new ThreadPoolExecutor(0, 1, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());

private final Callable<Void> cleanupCallable = new Callable<Void>() {

public Void call() throws Exception {

synchronized (DiskLruCache.this) {

if (journalWriter == null) {

return null; // Closed.

}

trimToSize();

trimToFileCount();

if (journalRebuildRequired()) {

rebuildJournal();

redundantOpCount = 0;

}

}

return null;

}

};

private DiskLruCache(File directory, int appVersion, int valueCount, long maxSize, int maxFileCount) {

this.directory = directory;

this.appVersion = appVersion;

this.journalFile = new File(directory, JOURNAL_FILE);

this.journalFileTmp = new File(directory, JOURNAL_FILE_TEMP);

this.journalFileBackup = new File(directory, JOURNAL_FILE_BACKUP);

this.valueCount = valueCount;

this.maxSize = maxSize;

this.maxFileCount = maxFileCount;

}

/**

* Opens the cache in {@code directory}, creating a cache if none exists

* there.

*

* @param directory a writable directory

* @param valueCount the number of values per cache entry. Must be positive.

* @param maxSize the maximum number of bytes this cache should use to store its data

* @param maxFileCount the maximum file count this cache should store

* @throws IOException if reading or writing the cache directory fails

*/

public static DiskLruCache open(File directory, int appVersion, int valueCount, long maxSize, int maxFileCount)

throws IOException {

if (maxSize <= 0) {

throw new IllegalArgumentException("maxSize <= 0");

}

if (maxFileCount <= 0) {

throw new IllegalArgumentException("maxFileCount <= 0");

}

if (valueCount <= 0) {

throw new IllegalArgumentException("valueCount <= 0");

}

// If a bkp file exists, use it instead.

File backupFile = new File(directory, JOURNAL_FILE_BACKUP);

if (backupFile.exists()) {

File journalFile = new File(directory, JOURNAL_FILE);

// If journal file also exists just delete backup file.

if (journalFile.exists()) {

backupFile.delete();

} else {

renameTo(backupFile, journalFile, false);

}

}

// Prefer to pick up where we left off.

DiskLruCache cache = new DiskLruCache(directory, appVersion, valueCount, maxSize, maxFileCount);

if (cache.journalFile.exists()) {

try {

cache.readJournal();

cache.processJournal();

cache.journalWriter = new BufferedWriter(

new OutputStreamWriter(new FileOutputStream(cache.journalFile, true), Util.US_ASCII));

return cache;

} catch (IOException journalIsCorrupt) {

System.out

.println("DiskLruCache "

+ directory

+ " is corrupt: "

+ journalIsCorrupt.getMessage()

+ ", removing");

cache.delete();

}

}

// Create a new empty cache.

directory.mkdirs();

cache = new DiskLruCache(directory, appVersion, valueCount, maxSize, maxFileCount);

cache.rebuildJournal();

return cache;

}

private void readJournal() throws IOException {

StrictLineReader reader = new StrictLineReader(new FileInputStream(journalFile), Util.US_ASCII);

try {

String magic = reader.readLine();

String version = reader.readLine();

String appVersionString = reader.readLine();

String valueCountString = reader.readLine();

String blank = reader.readLine();

if (!MAGIC.equals(magic)

|| !VERSION_1.equals(version)

|| !Integer.toString(appVersion).equals(appVersionString)

|| !Integer.toString(valueCount).equals(valueCountString)

|| !"".equals(blank)) {

throw new IOException("unexpected journal header: [" + magic + ", " + version + ", "

+ valueCountString + ", " + blank + "]");

}

int lineCount = 0;

while (true) {

try {

readJournalLine(reader.readLine());

lineCount++;

} catch (EOFException endOfJournal) {

break;

}

}

redundantOpCount = lineCount - lruEntries.size();

} finally {

Util.closeQuietly(reader);

}

}

private void readJournalLine(String line) throws IOException {

int firstSpace = line.indexOf(' ');

if (firstSpace == -1) {

throw new IOException("unexpected journal line: " + line);

}

int keyBegin = firstSpace + 1;

int secondSpace = line.indexOf(' ', keyBegin);

final String key;

if (secondSpace == -1) {

key = line.substring(keyBegin);

if (firstSpace == REMOVE.length() && line.startsWith(REMOVE)) {

lruEntries.remove(key);

return;

}

} else {

key = line.substring(keyBegin, secondSpace);

}

Entry entry = lruEntries.get(key);

if (entry == null) {

entry = new Entry(key);

lruEntries.put(key, entry);

}

if (secondSpace != -1 && firstSpace == CLEAN.length() && line.startsWith(CLEAN)) {

String[] parts = line.substring(secondSpace + 1).split(" ");

entry.readable = true;

entry.currentEditor = null;

entry.setLengths(parts);

} else if (secondSpace == -1 && firstSpace == DIRTY.length() && line.startsWith(DIRTY)) {

entry.currentEditor = new Editor(entry);

} else if (secondSpace == -1 && firstSpace == READ.length() && line.startsWith(READ)) {

// This work was already done by calling lruEntries.get().

} else {

throw new IOException("unexpected journal line: " + line);

}

}

/**

* Computes the initial size and collects garbage as a part of opening the

* cache. Dirty entries are assumed to be inconsistent and will be deleted.

*/

private void processJournal() throws IOException {

deleteIfExists(journalFileTmp);

for (Iterator<Entry> i = lruEntries.values().iterator(); i.hasNext(); ) {

Entry entry = i.next();

if (entry.currentEditor == null) {

for (int t = 0; t < valueCount; t++) {

size += entry.lengths[t];

fileCount++;

}

} else {

entry.currentEditor = null;

for (int t = 0; t < valueCount; t++) {

deleteIfExists(entry.getCleanFile(t));

deleteIfExists(entry.getDirtyFile(t));

}

i.remove();

}

}

}

/**

* Creates a new journal that omits redundant information. This replaces the

* current journal if it exists.

*/

private synchronized void rebuildJournal() throws IOException {

if (journalWriter != null) {

journalWriter.close();

}

Writer writer = new BufferedWriter(

new OutputStreamWriter(new FileOutputStream(journalFileTmp), Util.US_ASCII));

try {

writer.write(MAGIC);

writer.write("\n");

writer.write(VERSION_1);

writer.write("\n");

writer.write(Integer.toString(appVersion));

writer.write("\n");

writer.write(Integer.toString(valueCount));

writer.write("\n");

writer.write("\n");

for (Entry entry : lruEntries.values()) {

if (entry.currentEditor != null) {

writer.write(DIRTY + ' ' + entry.key + '\n');

} else {

writer.write(CLEAN + ' ' + entry.key + entry.getLengths() + '\n');

}

}

} finally {

writer.close();

}

if (journalFile.exists()) {

renameTo(journalFile, journalFileBackup, true);

}

renameTo(journalFileTmp, journalFile, false);

journalFileBackup.delete();

journalWriter = new BufferedWriter(

new OutputStreamWriter(new FileOutputStream(journalFile, true), Util.US_ASCII));

}

private static void deleteIfExists(File file) throws IOException {

if (file.exists() && !file.delete()) {

throw new IOException();

}

}

private static void renameTo(File from, File to, boolean deleteDestination) throws IOException {

if (deleteDestination) {

deleteIfExists(to);

}

if (!from.renameTo(to)) {

throw new IOException();

}

}

/**

* Returns a snapshot of the entry named {@code key}, or null if it doesn't

* exist or is not currently readable. If a value is returned, it is moved to

* the head of the LRU queue.

*/

public synchronized Snapshot get(String key) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (entry == null) {

return null;

}

if (!entry.readable) {

return null;

}

// Open all streams eagerly to guarantee that we see a single published

// snapshot. If we opened streams lazily then the streams could come

// from different edits.

File[] files = new File[valueCount];

InputStream[] ins = new InputStream[valueCount];

try {

File file;

for (int i = 0; i < valueCount; i++) {

file = entry.getCleanFile(i);

files[i] = file;

ins[i] = new FileInputStream(file);

}

} catch (FileNotFoundException e) {

// A file must have been deleted manually!

for (int i = 0; i < valueCount; i++) {

if (ins[i] != null) {

Util.closeQuietly(ins[i]);

} else {

break;

}

}

return null;

}

redundantOpCount++;

journalWriter.append(READ + ' ' + key + '\n');

if (journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

return new Snapshot(key, entry.sequenceNumber, files, ins, entry.lengths);

}

/**

* Returns an editor for the entry named {@code key}, or null if another

* edit is in progress.

*/

public Editor edit(String key) throws IOException {

return edit(key, ANY_SEQUENCE_NUMBER);

}

private synchronized Editor edit(String key, long expectedSequenceNumber) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (expectedSequenceNumber != ANY_SEQUENCE_NUMBER && (entry == null

|| entry.sequenceNumber != expectedSequenceNumber)) {

return null; // Snapshot is stale.

}

if (entry == null) {

entry = new Entry(key);

lruEntries.put(key, entry);

} else if (entry.currentEditor != null) {

return null; // Another edit is in progress.

}

Editor editor = new Editor(entry);

entry.currentEditor = editor;

// Flush the journal before creating files to prevent file leaks.

journalWriter.write(DIRTY + ' ' + key + '\n');

journalWriter.flush();

return editor;

}

/** Returns the directory where this cache stores its data. */

public File getDirectory() {

return directory;

}

/**

* Returns the maximum number of bytes that this cache should use to store

* its data.

*/

public synchronized long getMaxSize() {

return maxSize;

}

/** Returns the maximum number of files that this cache should store */

public synchronized int getMaxFileCount() {

return maxFileCount;

}

/**

* Changes the maximum number of bytes the cache can store and queues a job

* to trim the existing store, if necessary.

*/

public synchronized void setMaxSize(long maxSize) {

this.maxSize = maxSize;

executorService.submit(cleanupCallable);

}

/**

* Returns the number of bytes currently being used to store the values in

* this cache. This may be greater than the max size if a background

* deletion is pending.

*/

public synchronized long size() {

return size;

}

/**

* Returns the number of files currently being used to store the values in

* this cache. This may be greater than the max file count if a background

* deletion is pending.

*/

public synchronized long fileCount() {

return fileCount;

}

private synchronized void completeEdit(Editor editor, boolean success) throws IOException {

Entry entry = editor.entry;

if (entry.currentEditor != editor) {

throw new IllegalStateException();

}

// If this edit is creating the entry for the first time, every index must have a value.

if (success && !entry.readable) {

for (int i = 0; i < valueCount; i++) {

if (!editor.written[i]) {

editor.abort();

throw new IllegalStateException("Newly created entry didn't create value for index " + i);

}

if (!entry.getDirtyFile(i).exists()) {

editor.abort();

return;

}

}

}

for (int i = 0; i < valueCount; i++) {

File dirty = entry.getDirtyFile(i);

if (success) {

if (dirty.exists()) {

File clean = entry.getCleanFile(i);

dirty.renameTo(clean);

long oldLength = entry.lengths[i];

long newLength = clean.length();

entry.lengths[i] = newLength;

size = size - oldLength + newLength;

fileCount++;

}

} else {

deleteIfExists(dirty);

}

}

redundantOpCount++;

entry.currentEditor = null;

if (entry.readable | success) {

entry.readable = true;

journalWriter.write(CLEAN + ' ' + entry.key + entry.getLengths() + '\n');

if (success) {

entry.sequenceNumber = nextSequenceNumber++;

}

} else {

lruEntries.remove(entry.key);

journalWriter.write(REMOVE + ' ' + entry.key + '\n');

}

journalWriter.flush();

if (size > maxSize || fileCount > maxFileCount || journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

}

/**

* We only rebuild the journal when it will halve the size of the journal

* and eliminate at least 2000 ops.

*/

private boolean journalRebuildRequired() {

final int redundantOpCompactThreshold = 2000;

return redundantOpCount >= redundantOpCompactThreshold //

&& redundantOpCount >= lruEntries.size();

}

/**

* Drops the entry for {@code key} if it exists and can be removed. Entries

* actively being edited cannot be removed.

*

* @return true if an entry was removed.

*/

public synchronized boolean remove(String key) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (entry == null || entry.currentEditor != null) {

return false;

}

for (int i = 0; i < valueCount; i++) {

File file = entry.getCleanFile(i);

if (file.exists() && !file.delete()) {

throw new IOException("failed to delete " + file);

}

size -= entry.lengths[i];

fileCount--;

entry.lengths[i] = 0;

}

redundantOpCount++;

journalWriter.append(REMOVE + ' ' + key + '\n');

lruEntries.remove(key);

if (journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

return true;

}

/** Returns true if this cache has been closed. */

public synchronized boolean isClosed() {

return journalWriter == null;

}

private void checkNotClosed() {

if (journalWriter == null) {

throw new IllegalStateException("cache is closed");

}

}

/** Force buffered operations to the filesystem. */

public synchronized void flush() throws IOException {

checkNotClosed();

trimToSize();

trimToFileCount();

journalWriter.flush();

}

/** Closes this cache. Stored values will remain on the filesystem. */

public synchronized void close() throws IOException {

if (journalWriter == null) {

return; // Already closed.

}

for (Entry entry : new ArrayList<Entry>(lruEntries.values())) {

if (entry.currentEditor != null) {

entry.currentEditor.abort();

}

}

trimToSize();

trimToFileCount();

journalWriter.close();

journalWriter = null;

}

private void trimToSize() throws IOException {

while (size > maxSize) {

Map.Entry<String, Entry> toEvict = lruEntries.entrySet().iterator().next();

remove(toEvict.getKey());

}

}

private void trimToFileCount() throws IOException {

while (fileCount > maxFileCount) {

Map.Entry<String, Entry> toEvict = lruEntries.entrySet().iterator().next();

remove(toEvict.getKey());

}

}

/**

* Closes the cache and deletes all of its stored values. This will delete

* all files in the cache directory including files that weren't created by

* the cache.

*/

public void delete() throws IOException {

close();

Util.deleteContents(directory);

}

private void validateKey(String key) {

Matcher matcher = LEGAL_KEY_PATTERN.matcher(key);

if (!matcher.matches()) {

throw new IllegalArgumentException("keys must match regex [a-z0-9_-]{1,64}: \"" + key + "\"");

}

}

private static String inputStreamToString(InputStream in) throws IOException {

return Util.readFully(new InputStreamReader(in, Util.UTF_8));

}

/** A snapshot of the values for an entry. */

public final class Snapshot implements Closeable {

private final String key;

private final long sequenceNumber;

private File[] files;

private final InputStream[] ins;

private final long[] lengths;

private Snapshot(String key, long sequenceNumber, File[] files, InputStream[] ins, long[] lengths) {

this.key = key;

this.sequenceNumber = sequenceNumber;

this.files = files;

this.ins = ins;

this.lengths = lengths;

}

/**

* Returns an editor for this snapshot's entry, or null if either the

* entry has changed since this snapshot was created or if another edit

* is in progress.

*/

public Editor edit() throws IOException {

return DiskLruCache.this.edit(key, sequenceNumber);

}

/** Returns file with the value for {@code index}. */

public File getFile(int index) {

return files[index];

}

/** Returns the unbuffered stream with the value for {@code index}. */

public InputStream getInputStream(int index) {

return ins[index];

}

/** Returns the string value for {@code index}. */

public String getString(int index) throws IOException {

return inputStreamToString(getInputStream(index));

}

/** Returns the byte length of the value for {@code index}. */

public long getLength(int index) {

return lengths[index];

}

public void close() {

for (InputStream in : ins) {

Util.closeQuietly(in);

}

}

}

private static final OutputStream NULL_OUTPUT_STREAM = new OutputStream() {

@Override

public void write(int b) throws IOException {

// Eat all writes silently. Nom nom.

}

};

/** Edits the values for an entry. */

public final class Editor {

private final Entry entry;

private final boolean[] written;

private boolean hasErrors;

private boolean committed;

private Editor(Entry entry) {

this.entry = entry;

this.written = (entry.readable) ? null : new boolean[valueCount];

}

/**

* Returns an unbuffered input stream to read the last committed value,

* or null if no value has been committed.

*/

public InputStream newInputStream(int index) throws IOException {

synchronized (DiskLruCache.this) {

if (entry.currentEditor != this) {

throw new IllegalStateException();

}

if (!entry.readable) {

return null;

}

try {

return new FileInputStream(entry.getCleanFile(index));

} catch (FileNotFoundException e) {

return null;

}

}

}

/**

* Returns the last committed value as a string, or null if no value

* has been committed.

*/

public String getString(int index) throws IOException {

InputStream in = newInputStream(index);

return in != null ? inputStreamToString(in) : null;

}

/**

* Returns a new unbuffered output stream to write the value at

* {@code index}. If the underlying output stream encounters errors

* when writing to the filesystem, this edit will be aborted when

* {@link #commit} is called. The returned output stream does not throw

* IOExceptions.

*/

public OutputStream newOutputStream(int index) throws IOException {

synchronized (DiskLruCache.this) {

if (entry.currentEditor != this) {

throw new IllegalStateException();

}

if (!entry.readable) {

written[index] = true;

}

File dirtyFile = entry.getDirtyFile(index);

FileOutputStream outputStream;

try {

outputStream = new FileOutputStream(dirtyFile);

} catch (FileNotFoundException e) {

// Attempt to recreate the cache directory.

directory.mkdirs();

try {

outputStream = new FileOutputStream(dirtyFile);

} catch (FileNotFoundException e2) {

// We are unable to recover. Silently eat the writes.

return NULL_OUTPUT_STREAM;

}

}

return new FaultHidingOutputStream(outputStream);

}

}

/** Sets the value at {@code index} to {@code value}. */

public void set(int index, String value) throws IOException {

Writer writer = null;

try {

writer = new OutputStreamWriter(newOutputStream(index), Util.UTF_8);

writer.write(value);

} finally {

Util.closeQuietly(writer);

}

}

/**

* Commits this edit so it is visible to readers. This releases the

* edit lock so another edit may be started on the same key.

*/

public void commit() throws IOException {

if (hasErrors) {

completeEdit(this, false);

remove(entry.key); // The previous entry is stale.

} else {

completeEdit(this, true);

}

committed = true;

}

/**

* Aborts this edit. This releases the edit lock so another edit may be

* started on the same key.

*/

public void abort() throws IOException {

completeEdit(this, false);

}

public void abortUnlessCommitted() {

if (!committed) {

try {

abort();

} catch (IOException ignored) {

}

}

}

private class FaultHidingOutputStream extends FilterOutputStream {

private FaultHidingOutputStream(OutputStream out) {

super(out);

}

@Override public void write(int oneByte) {

try {

out.write(oneByte);

} catch (IOException e) {

hasErrors = true;

}

}

@Override public void write(byte[] buffer, int offset, int length) {

try {

out.write(buffer, offset, length);

} catch (IOException e) {

hasErrors = true;

}

}

@Override public void close() {

try {

out.close();

} catch (IOException e) {

hasErrors = true;

}

}

@Override public void flush() {

try {

out.flush();

} catch (IOException e) {

hasErrors = true;

}

}

}

}

private final class Entry {

private final String key;

/** Lengths of this entry's files. */

private final long[] lengths;

/** True if this entry has ever been published. */

private boolean readable;

/** The ongoing edit or null if this entry is not being edited. */

private Editor currentEditor;

/** The sequence number of the most recently committed edit to this entry. */

private long sequenceNumber;

private Entry(String key) {

this.key = key;

this.lengths = new long[valueCount];

}

public String getLengths() throws IOException {

StringBuilder result = new StringBuilder();

for (long size : lengths) {

result.append(' ').append(size);

}

return result.toString();

}

/** Set lengths using decimal numbers like "10123". */

private void setLengths(String[] strings) throws IOException {

if (strings.length != valueCount) {

throw invalidLengths(strings);

}

try {

for (int i = 0; i < strings.length; i++) {

lengths[i] = Long.parseLong(strings[i]);

}

} catch (NumberFormatException e) {

throw invalidLengths(strings);

}

}

private IOException invalidLengths(String[] strings) throws IOException {

throw new IOException("unexpected journal line: " + java.util.Arrays.toString(strings));

}

public File getCleanFile(int i) {

return new File(directory, key + "." + i);

}

public File getDirtyFile(int i) {

return new File(directory, key + "." + i + ".tmp");

}

}

}

Summary
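Recap of the eviction path in the DiskLruCache listing above: completeEdit() updates `size` with `size - oldLength + newLength`, and trimToSize() keeps removing `lruEntries.entrySet().iterator().next()`. That works because `lruEntries` is a LinkedHashMap built with `accessOrder = true`, so iteration starts at the least recently used key. Below is a minimal in-memory sketch of just that bookkeeping, with byte lengths standing in for files. The class TinyLruIndex and its methods are hypothetical; also note that the listing trims on a background thread (cleanupCallable), whereas this sketch trims synchronously for simplicity.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: models only DiskLruCache's size accounting and LRU eviction.
final class TinyLruIndex {
    // accessOrder = true: get()/put() on an existing key moves it to the tail,
    // so iteration starts at the least recently used key (like lruEntries).
    private final LinkedHashMap<String, Long> lengths =
            new LinkedHashMap<String, Long>(0, 0.75f, true);
    private final long maxSize;
    private long size = 0;
    final List<String> evicted = new ArrayList<String>();

    TinyLruIndex(long maxSize) {
        this.maxSize = maxSize;
    }

    // Mirrors "size = size - oldLength + newLength" from completeEdit().
    void put(String key, long newLength) {
        Long old = lengths.put(key, newLength);
        long oldLength = (old == null) ? 0 : old;
        size = size - oldLength + newLength;
        trimToSize();
    }

    // Mirrors the LRU side effect of get(): a lookup alone reorders the key.
    boolean touch(String key) {
        return lengths.get(key) != null;
    }

    long size() {
        return size;
    }

    List<String> keysEldestFirst() {
        return new ArrayList<String>(lengths.keySet());
    }

    // Mirrors trimToSize(): evict the eldest entry until we are back under budget.
    private void trimToSize() {
        while (size > maxSize) {
            Iterator<Map.Entry<String, Long>> it = lengths.entrySet().iterator();
            Map.Entry<String, Long> eldest = it.next();
            size -= eldest.getValue();
            evicted.add(eldest.getKey());
            it.remove();
        }
    }
}
```

With a 100-byte budget, putting a (40), b (40), touching a and then putting c (40) evicts b, the least recently used key, and leaves a and c holding 80 bytes. trimToFileCount() in the listing runs the same loop against `fileCount` instead of `size`.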
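Recap of startup in the DiskLruCache listing above: readJournal(), readJournalLine() and processJournal() rebuild the in-memory LRU index by replaying the journal line by line, then throw away entries whose DIRTY line never got a matching CLEAN or REMOVE. The sketch below keeps only that control flow. The class JournalReplay and its methods are hypothetical; it stores a single "edit still open?" flag per key instead of an Entry, assumes the five header lines were already validated, and does not enforce the per-state field counts that readJournalLine() checks.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;

// Hypothetical sketch of readJournal()/readJournalLine()/processJournal().
final class JournalReplay {
    // key -> TRUE while a DIRTY line has not been followed by CLEAN or REMOVE.
    // accessOrder = true, like lruEntries, so any get()/put() moves the key to the tail.
    private final LinkedHashMap<String, Boolean> editOpen =
            new LinkedHashMap<String, Boolean>(0, 0.75f, true);
    private int redundantOpCount;

    // Replays the record lines that follow the journal header.
    static JournalReplay replay(List<String> lines) {
        JournalReplay r = new JournalReplay();
        for (String line : lines) {
            r.apply(line);
        }
        // Same formula as readJournal(): lines that do not describe a live entry are redundant.
        r.redundantOpCount = lines.size() - r.editOpen.size();
        // Like processJournal(): an edit that never finished is treated as garbage.
        r.editOpen.values().removeIf(Boolean::booleanValue);
        return r;
    }

    private void apply(String line) {
        String[] parts = line.split(" ");
        if (parts.length < 2) {
            throw new IllegalArgumentException("unexpected journal line: " + line);
        }
        String state = parts[0];
        String key = parts[1];
        if (state.equals("REMOVE")) {
            editOpen.remove(key);
            return;
        }
        // As in readJournalLine(), this lookup is what implements READ:
        // touching the key moves it to the most recently used end.
        if (editOpen.get(key) == null) {
            editOpen.put(key, Boolean.FALSE);
        }
        if (state.equals("DIRTY")) {
            editOpen.put(key, Boolean.TRUE);
        } else if (state.equals("CLEAN")) {
            editOpen.put(key, Boolean.FALSE);
        } else if (!state.equals("READ")) {
            throw new IllegalArgumentException("unexpected journal line: " + line);
        }
    }

    int redundantOpCount() {
        return redundantOpCount;
    }

    // Keys from least to most recently used.
    List<String> keysEldestFirst() {
        return new ArrayList<String>(editOpen.keySet());
    }

    // Same rule as journalRebuildRequired(): compact only when that drops at least
    // 2000 lines and at least halves the journal.
    static boolean rebuildRequired(int redundantOpCount, int liveEntries) {
        return redundantOpCount >= 2000 && redundantOpCount >= liveEntries;
    }
}
```

Replaying `CLEAN a`, `CLEAN b`, `CLEAN e`, `READ a`, `REMOVE e`, `DIRTY c`, `DIRTY d`, `CLEAN d` leaves b, a, d (eldest first): READ moved a behind b, REMOVE dropped e, and c is discarded because its edit never finished. Four of the eight lines count as redundant, far below the 2000-line compaction threshold.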
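Recap of journal compaction in the DiskLruCache listing above: rebuildJournal() never rewrites the live journal in place. It writes journal.tmp, renames the current journal to journal.bkp, renames journal.tmp to journal, and only then deletes the backup; open() repairs a crash in between by promoting journal.bkp when no journal exists, or deleting it when one does. A sketch of the same sequence with java.nio.file, assuming Files.move as a stand-in for the listing's renameTo() helper; JournalSwap is a hypothetical name.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical sketch of the journal swap in rebuildJournal() and the recovery in open().
final class JournalSwap {
    // Mirrors rebuildJournal(): write the new content to a temp file, then swap it in.
    static void rewrite(Path dir, String newContent) throws IOException {
        Path journal = dir.resolve("journal");
        Path tmp = dir.resolve("journal.tmp");
        Path backup = dir.resolve("journal.bkp");
        Files.write(tmp, newContent.getBytes(StandardCharsets.US_ASCII));
        if (Files.exists(journal)) {
            // Keep the old journal as a backup until the new one is in place.
            Files.move(journal, backup, StandardCopyOption.REPLACE_EXISTING);
        }
        Files.move(tmp, journal);
        Files.deleteIfExists(backup);
    }

    // Mirrors the start of open(): prefer journal, fall back to journal.bkp.
    static void recover(Path dir) throws IOException {
        Path journal = dir.resolve("journal");
        Path backup = dir.resolve("journal.bkp");
        if (Files.exists(backup)) {
            if (Files.exists(journal)) {
                Files.delete(backup);
            } else {
                Files.move(backup, journal);
            }
        }
    }
}
```

At every point in this sequence either journal or journal.bkp holds a complete journal, which is why open() only has to look at those two files.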
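Recap of key handling in the DiskLruCache listing above: validateKey() only accepts keys matching `[a-z0-9_-]{1,64}`, so an image URL cannot be used as a key directly; the caller has to map it to a legal name first. One possible mapping, shown here as a hypothetical helper (CacheKeys.keyForUrl), is the lowercase hex MD5 of the URL, which is always 32 characters drawn from `[0-9a-f]`.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.regex.Pattern;

// Hypothetical helpers for turning arbitrary URLs into legal DiskLruCache keys.
final class CacheKeys {
    // Same pattern as DiskLruCache.LEGAL_KEY_PATTERN.
    static final Pattern LEGAL_KEY_PATTERN = Pattern.compile("[a-z0-9_-]{1,64}");

    // URL -> 32 lowercase hex characters (MD5 digest), always a legal key.
    static String keyForUrl(String url) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                    .digest(url.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // Should not happen on a standard JDK or on Android.
            throw new IllegalStateException("MD5 not available", e);
        }
    }

    static boolean isLegal(String key) {
        return LEGAL_KEY_PATTERN.matcher(key).matches();
    }
}
```

A raw URL such as `http://example.com/images/photo.png` fails isLegal() because of `:`, `/` and `.`, while its MD5 key passes.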
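Recap of how an edit is published in the DiskLruCache listing above: Editor.newOutputStream() always writes into the entry's dirty file, completeEdit(editor, true) publishes the value by renaming the dirty file onto the clean one, and an abort just deletes the dirty file. Readers only ever open clean files, which is how the class comment's "before or after the commit, but never a mix" guarantee is met. A single-value sketch with java.nio.file; DirtyCleanEditor is hypothetical, and the file names it builds (for example `k1.0` and `k1.0.tmp`) are illustrative.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical single-value editor: write to a dirty file, publish by rename.
final class DirtyCleanEditor {
    private final Path clean;
    private final Path dirty;

    DirtyCleanEditor(Path dir, String key) {
        // Illustrative names for value index 0 of the given key.
        this.clean = dir.resolve(key + ".0");
        this.dirty = dir.resolve(key + ".0.tmp");
    }

    // Like Editor.newOutputStream(): writes never touch the clean file.
    void write(String value) throws IOException {
        Files.write(dirty, value.getBytes(StandardCharsets.UTF_8));
    }

    // Like completeEdit(editor, true): replace the clean file with the dirty one.
    long commit() throws IOException {
        Files.move(dirty, clean, StandardCopyOption.REPLACE_EXISTING);
        return Files.size(clean); // the new length that would go into the CLEAN line
    }

    // Like completeEdit(editor, false): discard the dirty file, keep the old value.
    void abort() throws IOException {
        Files.deleteIfExists(dirty);
    }

    String read() throws IOException {
        return new String(Files.readAllBytes(clean), StandardCharsets.UTF_8);
    }
}
```

After committing "hello", a second write followed by abort() leaves the clean file still reading "hello" and no dirty file behind.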
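Recap of error handling in the DiskLruCache listing above: FaultHidingOutputStream turns every IOException into a `hasErrors` flag instead of throwing, and Editor.commit() checks that flag. The pattern can be sketched on its own; FlagOnErrorStream and its always-failing stream are hypothetical test helpers, not part of the library.

```java
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;

// Hypothetical copy of the FaultHidingOutputStream pattern.
final class FlagOnErrorStream extends FilterOutputStream {
    boolean hasErrors;

    FlagOnErrorStream(OutputStream out) {
        super(out);
    }

    // Swallow the exception, but remember that the write failed.
    @Override public void write(int oneByte) {
        try {
            out.write(oneByte);
        } catch (IOException e) {
            hasErrors = true;
        }
    }

    @Override public void write(byte[] buffer, int offset, int length) {
        try {
            out.write(buffer, offset, length);
        } catch (IOException e) {
            hasErrors = true;
        }
    }

    @Override public void flush() {
        try {
            out.flush();
        } catch (IOException e) {
            hasErrors = true;
        }
    }

    @Override public void close() {
        try {
            out.close();
        } catch (IOException e) {
            hasErrors = true;
        }
    }

    // Stand-in for a full disk: every write fails.
    static OutputStream failing() {
        return new OutputStream() {
            @Override public void write(int b) throws IOException {
                throw new IOException("disk full");
            }
        };
    }
}
```

In the listing, a set flag makes commit() call completeEdit(this, false) and then remove(entry.key), so a partially written image is never published.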
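Recap of snapshot staleness in the DiskLruCache listing above: Snapshot.edit() calls edit(key, sequenceNumber), and edit() returns null when the entry's current sequence number differs from the snapshot's, because completeEdit() assigns `nextSequenceNumber++` on every successful commit. A stripped-down in-memory model of that check; SequenceModel is hypothetical and leaves out files, locking and the journal.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the sequence-number check behind Snapshot.edit().
final class SequenceModel {
    private final Map<String, Long> sequenceNumbers = new HashMap<String, Long>();
    private long nextSequenceNumber = 0;

    // Like a successful completeEdit(): every commit gets a fresh number.
    void commit(String key) {
        sequenceNumbers.put(key, nextSequenceNumber++);
    }

    // Like get(): the snapshot remembers the number current when it was taken.
    long snapshot(String key) {
        Long seq = sequenceNumbers.get(key);
        if (seq == null) {
            throw new IllegalArgumentException("no entry for " + key);
        }
        return seq;
    }

    // Like edit(key, expectedSequenceNumber): a stale snapshot may not start an edit.
    boolean canEditFrom(String key, long snapshotSequence) {
        Long current = sequenceNumbers.get(key);
        return current != null && current == snapshotSequence;
    }
}
```

A snapshot taken before a second commit to the same key can no longer start an edit, while a fresh snapshot can.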

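This wraps up the source-code analysis in the ImageLoader framework series. I feel the posts ran rather long, which may make it hard to hold readers' interest, but I know my writing is not yet good enough to explain everything in just a few words. I have deliberately left a few points open to encourage you to comment and discuss them, so that we can all improve together. In future source-code analyses of other frameworks I will work on my writing and aim to be concise and to the point.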

Also, if you run into any special requirements or problems while working with ImageLoader, you are welcome to leave me a comment.