#1: Douban review analysis:

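# 1). Fetch the first 2 pages of comments for every movie currently showing on Douban;

# 2). Clean the data;

# 3). Render each movie's comments as a word cloud and save it as a PNG image named: <movie name>.png;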

import threading

import requests

from bs4 import BeautifulSoup

# 1). Scrape the comments on a single page;

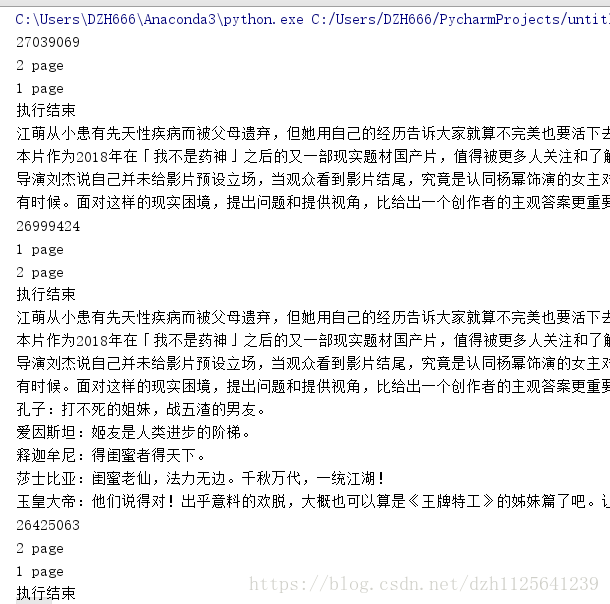

# Guards the shared `comments` string so concurrent threads do not lose updates
comments_lock = threading.Lock()

def getOnePageComment(id, pageNum):
    # 1). Derive the `start` offset from the page number (20 comments per page)
    # Page 1: https://movie.douban.com/subject/26425063/comments?start=0&limit=20&sort=new_score&status=P
    # Page 2: https://movie.douban.com/subject/26425063/comments?start=20&limit=20&sort=new_score&status=P
    # Page 3: https://movie.douban.com/subject/26425063/comments?start=40&limit=20&sort=new_score&status=P
    start = (pageNum - 1) * 20
    url = "https://movie.douban.com/subject/%s/comments?start=%s&limit=20&sort=new_score&status=P" % (id, start)

    # 2). Download the HTML of the comments page
    content = requests.get(url).text

    # 3). Parse the page with bs4
    soup = BeautifulSoup(content, 'lxml')
    # Inspecting the page shows that every comment sits in a <span> tag with class "short"
    commentsList = soup.find_all('span', class_='short')
    pageComments = ""
    # Walk through each span tag and append its comment text to pageComments
    for commentTag in commentsList:
        pageComments += commentTag.text
    # return pageComments
    print("%s page" % (pageNum))

    # `comments` is a module-level global shared by all threads
    global comments
    with comments_lock:
        comments += pageComments

# 2). Scrape the first 2 pages of comments for the newest movies;

url = 'https://movie.douban.com/cinema/nowplaying/xian/'

response = requests.get(url)

content = response.text

soup = BeautifulSoup(content, 'lxml')

now_playing_movie = soup.find_all('li', class_="list-item")

movie = []

for item in now_playing_movie:
    # Each <li class="list-item"> carries the movie's Douban id in its `id` attribute
    movie.append(item['id'])

comments = ''
threads = []

# Scrape the first 2 pages of comments; to fetch more pages, widen the range
for id in movie:
    print(id)
    for pageNum in range(1, 3):  # pages 1 and 2
        # getOnePageComment(id, pageNum)
        # Fetch each page of comments in its own thread
        t = threading.Thread(target=getOnePageComment, args=(id, pageNum))
        threads.append(t)
        t.start()

# Wait for all worker threads to finish before the main thread continues
for thread in threads:
    thread.join()

print("Done")
print(comments)
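
The script above gathers every movie's comments into one string, while goals 2) and 3) ask for cleaned text per movie. The following is a minimal sketch of one way to get there, not the author's method: it groups pages by movie id under a lock and then keeps only Chinese characters. The names `fake_fetch`, `collect_page`, `clean_comments` and `comments_by_movie` are hypothetical, `fake_fetch` stands in for the real network request, and treating "cleaning" as "drop everything except Chinese characters" is an assumption.

```python
import re
import threading
from collections import defaultdict

# movie id -> list of (page number, raw page text); shared by all threads
comments_by_movie = defaultdict(list)
lock = threading.Lock()  # guards comments_by_movie


def fake_fetch(movie_id, page_num):
    """Hypothetical stand-in for the real page download; returns raw comment text."""
    return "很好看!!! nice~ 推荐"


def collect_page(movie_id, page_num, fetch=fake_fetch):
    """Fetch one page and file it under its movie id."""
    text = fetch(movie_id, page_num)
    with lock:
        comments_by_movie[movie_id].append((page_num, text))


def clean_comments(raw):
    """Assumed cleaning rule: keep only characters in the range U+4E00..U+9FA5."""
    return "".join(re.findall(r"[\u4e00-\u9fa5]+", raw))


# Two placeholder movie ids, two pages each, one thread per page
threads = [
    threading.Thread(target=collect_page, args=(movie_id, page_num))
    for movie_id in ("111", "222")
    for page_num in (1, 2)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Join each movie's pages in page order, then clean the result
cleaned = {}
for movie_id, pages in comments_by_movie.items():
    raw = "".join(text for _, text in sorted(pages))
    cleaned[movie_id] = clean_comments(raw)

print(cleaned)
```

Each value in `cleaned` could then be split into words and drawn as a word cloud. A tokenizer such as jieba and the wordcloud package are common choices for that step, but neither appears in the source, so they are left out here.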

#2: Scrape the name and description of every Python course on imooc (慕课网), then analyze and display them as a word cloud;

- URL: https://www.imooc.com/search/course?words=python
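
As a starting point for this exercise, here is a rough sketch of pulling (course name, description) pairs out of HTML. It uses the standard-library `html.parser` so it runs without extra packages; the same idea applies with `BeautifulSoup`. The markup in `SAMPLE_HTML` and the class names `course-title` and `course-desc` are invented placeholders, not the real imooc page structure, so inspect the live page and adjust them before use.

```python
from html.parser import HTMLParser

# Hypothetical markup: these class names are placeholders for illustration only.
SAMPLE_HTML = """
<div class="course-item">
  <a class="course-title">Python Basics</a>
  <p class="course-desc">Learn variables, loops and functions.</p>
</div>
<div class="course-item">
  <a class="course-title">Python Web Scraping</a>
  <p class="course-desc">Fetch and parse pages with requests.</p>
</div>
"""


class CourseParser(HTMLParser):
    """Collect (title, description) pairs from elements with the assumed classes."""

    def __init__(self):
        super().__init__()
        self.courses = []    # finished (title, description) tuples
        self._field = None   # field the next text node belongs to, if any
        self._title = None   # title waiting for its description

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "course-title" in classes:
            self._field = "title"
        elif "course-desc" in classes:
            self._field = "desc"

    def handle_data(self, data):
        text = data.strip()
        if not text or self._field is None:
            return  # whitespace, or text outside the fields of interest
        if self._field == "title":
            self._title = text
        elif self._field == "desc" and self._title is not None:
            self.courses.append((self._title, text))
            self._title = None
        self._field = None


def parse_courses(html):
    """Return the list of (title, description) pairs found in html."""
    parser = CourseParser()
    parser.feed(html)
    return parser.courses


courses = parse_courses(SAMPLE_HTML)
for title, desc in courses:
    print(title, "-", desc)
```

Joining the collected names and descriptions into one string gives the text to feed into the word cloud step.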

#3: Use Python to scrape today's top 10 trending news items on Baidu