Part 1: Configuration

1. Download and extract:

http://archive.cloudera.com/cdh5/cdh/5/oozie-4.1.0-cdh5.14.2.tar.gz

tar -zxvf oozie-4.1.0-cdh5.14.2.tar.gz -C /opt/cdh5.14.2/

After extraction the directory looks like this:

[root@master oozie-4.1.0]# ll

total 1284320

drwxr-xr-x 2 1106 4001 4096 Mar 28 04:40 bin

drwxr-xr-x 4 1106 4001 4096 Mar 28 04:19 conf

drwxr-xr-x 6 1106 4001 4096 Mar 28 04:34 docs

drwxr-xr-x 2 1106 4001 4096 Mar 28 04:40 lib

drwxr-xr-x 2 1106 4001 12288 Mar 28 04:40 libtools

-rw-r--r-- 1 1106 4001 37664 Mar 28 04:42 LICENSE.txt

-rw-r--r-- 1 1106 4001 909 Mar 28 04:42 NOTICE.txt

drwxr-xr-x 2 1106 4001 4096 Mar 28 04:40 oozie-core

-rw-r--r-- 1 1106 4001 46171 Mar 28 04:34 oozie-examples.tar.gz

-rw-r--r-- 1 1106 4001 134482441 Mar 28 04:32 oozie-hadooplibs-4.1.0-cdh5.14.2.tar.gz

drwxr-xr-x 9 1106 4001 4096 Mar 28 04:39 oozie-server

-r--r--r-- 1 1106 4001 520547352 Mar 28 04:41 oozie-sharelib-4.1.0-cdh5.14.2.tar.gz

-r--r--r-- 1 1106 4001 546253529 Mar 28 04:42 oozie-sharelib-4.1.0-cdh5.14.2-yarn.tar.gz

-rw-r--r-- 1 1106 4001 113625526 Mar 28 04:38 oozie.war

-rw-r--r-- 1 1106 4001 83519 Mar 28 04:19 release-log.txt

drwxr-xr-x 21 1106 4001 4096 Mar 28 04:42 src

2. Stop the Hadoop processes.

3. Following the official documentation, download ExtJS 2.2.

4. Following the official documentation, configure Hadoop's core-site.xml:

NOTE: Configure the Hadoop cluster with proxyuser for the Oozie process.

The following two properties are required in Hadoop core-site.xml:

<!-- OOZIE -->

<property>

<name>hadoop.proxyuser.[OOZIE_SERVER_USER].hosts</name>

<value>[OOZIE_SERVER_HOSTNAME]</value>

</property>

<property>

<name>hadoop.proxyuser.[OOZIE_SERVER_USER].groups</name>

<value>[USER_GROUPS_THAT_ALLOW_IMPERSONATION]</value>

</property>

Replace the capital letter sections with specific values and then restart Hadoop.

The actual configuration is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Address of the NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns1</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<!-- Directory where Hadoop stores the files it generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/cdh5.14.2/hadoop-2.6.0/data/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>master.cdh.com:2181,slave1.cdh.com:2181,slave2.cdh.com:2181</value>

</property>

<!-- OOZIE -->

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>master.cdh.com</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>root</value><!-- Initially set to <value>*</value>, but the example kept failing with: User: root is not allowed to impersonate root -->

</property>

</configuration>
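The two hadoop.proxyuser.root.* properties can be read as a host check plus a group check. The sketch below is a simplified model of that rule for illustration only, not Hadoop's actual implementation; the class ProxyUserRuleSketch, its method names, and the assumption that user root belongs to a group named root are all hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical, simplified model of a proxyuser rule; this is not Hadoop code.
class ProxyUserRuleSketch {

    // Splits a comma-separated rule value such as "master.cdh.com" or "*".
    static Set<String> parse(String rule) {
        Set<String> items = new HashSet<String>();
        for (String item : rule.split(",")) {
            String trimmed = item.trim();
            if (!trimmed.isEmpty()) {
                items.add(trimmed);
            }
        }
        return items;
    }

    // True when the request host matches the hosts rule and the impersonated
    // user belongs to at least one group named in the groups rule.
    static boolean mayImpersonate(String hostsRule, String groupsRule,
                                  String requestHost, Set<String> targetUserGroups) {
        Set<String> hosts = parse(hostsRule);
        Set<String> groups = parse(groupsRule);
        boolean hostOk = hosts.contains("*") || hosts.contains(requestHost);
        boolean groupOk = groups.contains("*");
        for (String group : targetUserGroups) {
            groupOk = groupOk || groups.contains(group);
        }
        return hostOk && groupOk;
    }

    public static void main(String[] args) {
        // Assumption: the impersonated user root is a member of group root.
        Set<String> rootGroups = new HashSet<String>(Arrays.asList("root"));
        // Rule values taken from the core-site.xml above.
        System.out.println(mayImpersonate("master.cdh.com", "root", "master.cdh.com", rootGroups));
        System.out.println(mayImpersonate("master.cdh.com", "root", "slave1.cdh.com", rootGroups));
    }
}
```

Under this model a request from master.cdh.com passes both checks, while the same request from another host is rejected. Remember that Hadoop must be restarted after changing these properties, as the quoted documentation says.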

5. Extract oozie-hadooplibs-4.1.0-cdh5.14.2.tar.gz into the current directory:

tar -zxvf oozie-hadooplibs-4.1.0-cdh5.14.2.tar.gz

This adds the directory /opt/cdh5.14.2/oozie-4.1.0/oozie-4.1.0-cdh5.14.2/hadooplibs

6. Create a libext/ directory under the Oozie directory and copy the JARs from hadooplibs into it:

mkdir libext

cp -r oozie-4.1.0-cdh5.14.2/hadooplibs/hadooplib-2.6.0-cdh5.14.2.oozie-4.1.0-cdh5.14.2/* libext/

7. Copy the downloaded ext-2.2.zip into libext/:

cp /opt/software/ext-2.2.zip libext/

8. Create the WAR file and the related files. The official documentation says:

A "sharelib create -fs fs_default_name [-locallib sharelib]" command is available when running oozie-setup.sh for uploading new sharelib into hdfs where the first argument is the default fs name and the second argument is the Oozie sharelib to install, it can be a tarball or the expanded version of it. If the second argument is omitted, the Oozie sharelib tarball from the Oozie installation directory will be used. Upgrade command is deprecated, one should use create command to create new version of sharelib. Sharelib files are copied to new lib_ directory. At start, server picks the sharelib from latest time-stamp directory. While starting server also purge sharelib directory which is older than sharelib retention days (defined as oozie.service.ShareLibService.temp.sharelib.retention.days and 7 days is default).

"prepare-war [-d directory]" command is for creating war files for oozie with an optional alternative directory other than libext.

"db create|upgrade|postupgrade -run [-sqlfile <FILE>]" command is for create, upgrade or postupgrade oozie db with an optional sql file

Run the oozie-setup.sh script to configure Oozie with all the components added to the libext/ directory.

$ bin/oozie-setup.sh prepare-war [-d directory] [-secure]

sharelib create -fs <FS_URI> [-locallib <PATH>]

sharelib upgrade -fs <FS_URI> [-locallib <PATH>]

db create|upgrade|postupgrade -run [-sqlfile <FILE>]

The actual steps are as follows:

a. Create the WAR file:

bin/oozie-setup.sh prepare-war

Result:

New Oozie WAR file with added 'ExtJS library, JARs' at /opt/cdh5.14.2/oozie-4.1.0/oozie-server/webapps/oozie.war

INFO: Oozie is ready to be started

b. Start the Hadoop processes.

c. Run the second command from the official documentation (sharelib create).

First configure oozie-site.xml:

<?xml version="1.0"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration>

<!--

Refer to the oozie-default.xml file for the complete list of

Oozie configuration properties and their default values.

-->

<!-- Proxyuser Configuration -->

<!--

<property>

<name>oozie.service.ProxyUserService.proxyuser.#USER#.hosts</name>

<value>*</value>

<description>

List of hosts the '#USER#' user is allowed to perform 'doAs'

operations.

The '#USER#' must be replaced with the username of the user who is

allowed to perform 'doAs' operations.

The value can be the '*' wildcard or a list of hostnames.

For multiple users copy this property and replace the user name

in the property name.

</description>

</property>

<property>

<name>oozie.service.ProxyUserService.proxyuser.#USER#.groups</name>

<value>*</value>

<description>

List of groups the '#USER#' user is allowed to impersonate users

from to perform 'doAs' operations.

The '#USER#' must be replaced with the username of the user who is

allowed to perform 'doAs' operations.

The value can be the '*' wildcard or a list of groups.

For multiple users copy this property and replace the user name

in the property name.

</description>

</property>

-->

<!-- Default proxyuser configuration for Hue -->

<property>

<name>oozie.service.ProxyUserService.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>oozie.service.ProxyUserService.proxyuser.hue.groups</name>

<value>*</value>

</property>

<property>

<name>oozie.service.HadoopAccessorService.hadoop.configurations</name>

<value>*=/opt/cdh5.14.2/hadoop-2.6.0/etc/hadoop</value>

</property>

</configuration>

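Then create the sharelib:

bin/oozie-setup.sh sharelib create -fs hdfs://master.cdh.com:8020 -locallib oozie-sharelib-4.1.0-cdh5.14.2-yarn.tar.gz

On this HA cluster the command was run against the nameservice URI instead (hdfs://ns1, the fs.defaultFS value from core-site.xml):

[root@master oozie-4.1.0]# bin/oozie-setup.sh sharelib create -fs hdfs://ns1 -locallib oozie-sharelib-4.1.0-cdh5.14.2-yarn.tar.gz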

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cdh5.14.2/oozie-4.1.0/libtools/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cdh5.14.2/oozie-4.1.0/libtools/slf4j-simple-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cdh5.14.2/oozie-4.1.0/libext/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

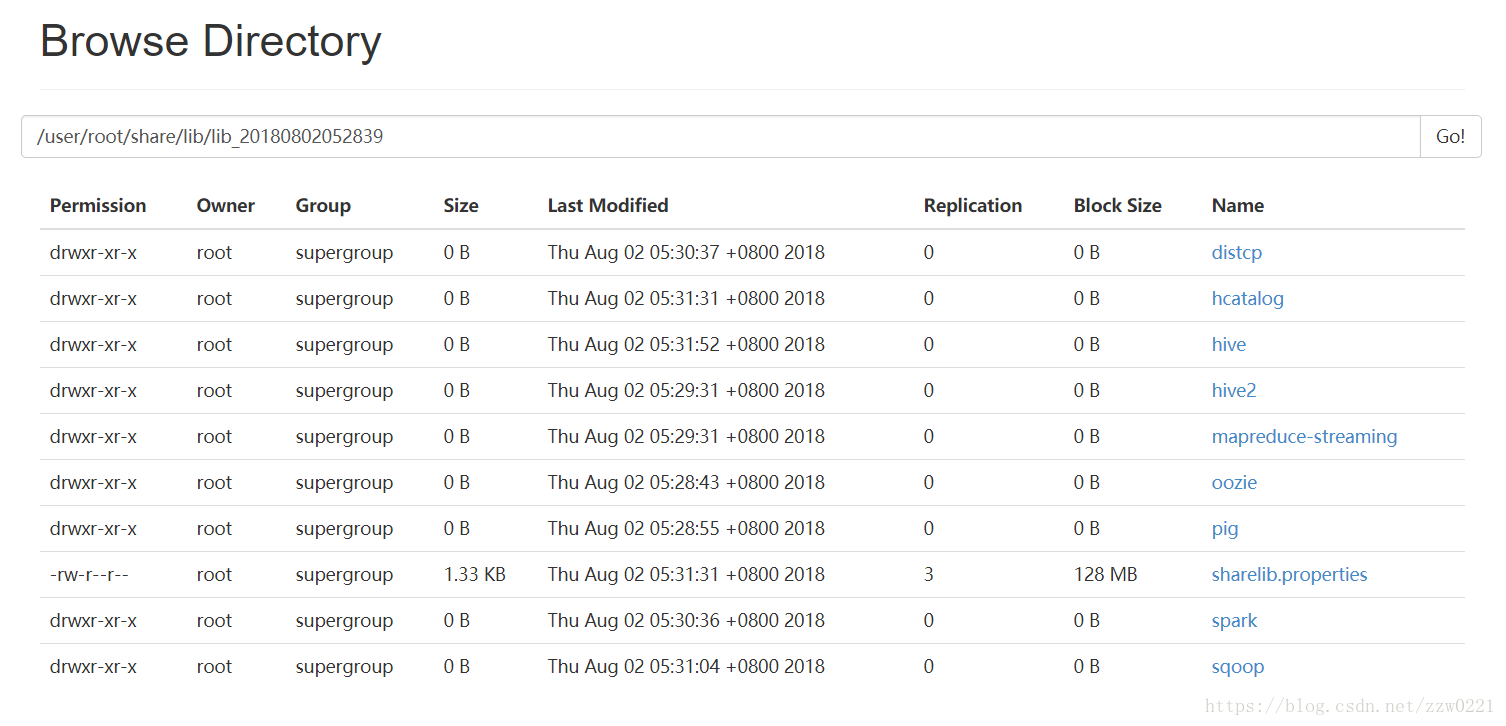

the destination path for sharelib is: /user/root/share/lib/lib_20180802052839结果:在hdfs生成了相应的文件:

d.创建数据库:

bin/ooziedb.sh create -sqlfile oozie.sql -run

Result:

[root@master oozie-4.1.0]# bin/ooziedb.sh create -sqlfile oozie.sql -run DB Connection

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

Validate DB Connection

DONE

Check DB schema does not exist

DONE

Check OOZIE_SYS table does not exist

DONE

Create SQL schema

DONE

Create OOZIE_SYS table

DONE

Oozie DB has been created for Oozie version '4.1.0-cdh5.14.2'

The SQL commands have been written to: oozie.sql

[root@master oozie-4.1.0]#

9. Start Oozie:

bin/oozied.sh start

Result:

[root@master oozie-4.1.0]# bin/oozied.sh start

Setting OOZIE_HOME: /opt/cdh5.14.2/oozie-4.1.0

Setting OOZIE_CONFIG: /opt/cdh5.14.2/oozie-4.1.0/conf

Sourcing: /opt/cdh5.14.2/oozie-4.1.0/conf/oozie-env.sh

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

Setting OOZIE_CONFIG_FILE: oozie-site.xml

Setting OOZIE_DATA: /opt/cdh5.14.2/oozie-4.1.0/data

Setting OOZIE_LOG: /opt/cdh5.14.2/oozie-4.1.0/logs

Setting OOZIE_LOG4J_FILE: oozie-log4j.properties

Setting OOZIE_LOG4J_RELOAD: 10

Setting OOZIE_HTTP_HOSTNAME: master.cdh.com

Setting OOZIE_HTTP_PORT: 11000

Setting OOZIE_ADMIN_PORT: 11001

Setting OOZIE_HTTPS_PORT: 11443

Setting OOZIE_BASE_URL: http://master.cdh.com:11000/oozie

Setting CATALINA_BASE: /opt/cdh5.14.2/oozie-4.1.0/oozie-server

Setting OOZIE_HTTPS_KEYSTORE_FILE: /root/.keystore

Setting OOZIE_HTTPS_KEYSTORE_PASS: password

Setting OOZIE_HTTPS_CIPHERS: TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384,TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256,TLS_ECDH_RSA_WITH_AES_256_CBC_SHA384,TLS_ECDH_RSA_WITH_AES_256_CBC_SHA,TLS_ECDH_RSA_WITH_AES_128_CBC_SHA256,TLS_ECDH_RSA_WITH_AES_128_CBC_SHA,TLS_ECDH_RSA_WITH_3DES_EDE_CBC_SHA,TLS_RSA_WITH_AES_256_CBC_SHA256,TLS_RSA_WITH_AES_256_CBC_SHA,TLS_RSA_WITH_AES_128_CBC_SHA256,TLS_RSA_WITH_AES_128_CBC_SHA,TLS_RSA_WITH_3DES_EDE_CBC_SHA,TLS_DHE_RSA_WITH_AES_256_CBC_SHA256,TLS_DHE_RSA_WITH_AES_256_CBC_SHA,TLS_DHE_RSA_WITH_AES_128_CBC_SHA256,TLS_DHE_RSA_WITH_AES_128_CBC_SHA,TLS_DHE_RSA_WITH_3DES_EDE_CBC_SHA

Setting OOZIE_INSTANCE_ID: master.cdh.com

Setting CATALINA_OUT: /opt/cdh5.14.2/oozie-4.1.0/logs/catalina.out

Setting CATALINA_PID: /opt/cdh5.14.2/oozie-4.1.0/oozie-server/temp/oozie.pid

Using CATALINA_OPTS: -Xmx1024m -Dderby.stream.error.file=/opt/cdh5.14.2/oozie-4.1.0/logs/derby.log

Adding to CATALINA_OPTS: -Doozie.home.dir=/opt/cdh5.14.2/oozie-4.1.0 -Doozie.config.dir=/opt/cdh5.14.2/oozie-4.1.0/conf -Doozie.log.dir=/opt/cdh5.14.2/oozie-4.1.0/logs -Doozie.data.dir=/opt/cdh5.14.2/oozie-4.1.0/data -Doozie.instance.id=master.cdh.com -Doozie.config.file=oozie-site.xml -Doozie.log4j.file=oozie-log4j.properties -Doozie.log4j.reload=10 -Doozie.http.hostname=master.cdh.com -Doozie.admin.port=11001 -Doozie.http.port=11000 -Doozie.https.port=11443 -Doozie.base.url=http://master.cdh.com:11000/oozie -Doozie.https.keystore.file=/root/.keystore -Doozie.https.keystore.pass=password -Doozie.https.ciphers=TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384,TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256,TLS_ECDH_RSA_WITH_AES_256_CBC_SHA384,TLS_ECDH_RSA_WITH_AES_256_CBC_SHA,TLS_ECDH_RSA_WITH_AES_128_CBC_SHA256,TLS_ECDH_RSA_WITH_AES_128_CBC_SHA,TLS_ECDH_RSA_WITH_3DES_EDE_CBC_SHA,TLS_RSA_WITH_AES_256_CBC_SHA256,TLS_RSA_WITH_AES_256_CBC_SHA,TLS_RSA_WITH_AES_128_CBC_SHA256,TLS_RSA_WITH_AES_128_CBC_SHA,TLS_RSA_WITH_3DES_EDE_CBC_SHA,TLS_DHE_RSA_WITH_AES_256_CBC_SHA256,TLS_DHE_RSA_WITH_AES_256_CBC_SHA,TLS_DHE_RSA_WITH_AES_128_CBC_SHA256,TLS_DHE_RSA_WITH_AES_128_CBC_SHA,TLS_DHE_RSA_WITH_3DES_EDE_CBC_SHA -Djava.library.path=

Using CATALINA_BASE: /opt/cdh5.14.2/oozie-4.1.0/oozie-server

Using CATALINA_HOME: /opt/cdh5.14.2/oozie-4.1.0/oozie-server

Using CATALINA_TMPDIR: /opt/cdh5.14.2/oozie-4.1.0/oozie-server/temp

Using JRE_HOME: /opt/java/jdk1.7.0_80

Using CLASSPATH: /opt/cdh5.14.2/oozie-4.1.0/oozie-server/bin/bootstrap.jar

Using CATALINA_PID: /opt/cdh5.14.2/oozie-4.1.0/oozie-server/temp/oozie.pid

Listing the running Java processes now shows an additional Bootstrap process:

[root@master oozie-4.1.0]# jps

91772 JobHistoryServer

90742 NameNode

91329 ResourceManager

90616 QuorumPeerMain

91193 DFSZKFailoverController

90841 DataNode

97592 Bootstrap

91027 JournalNode

91428 NodeManager

97606 Jps

或者用另外一种方式启动为前台进程:

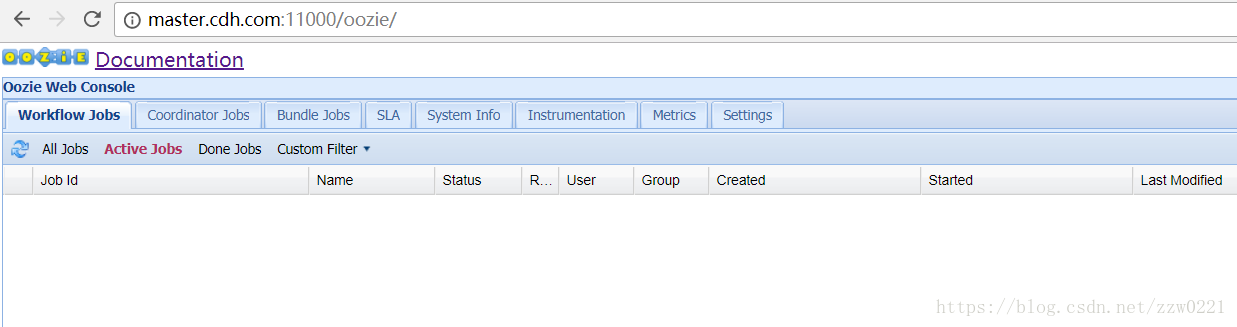

bin/oozied.sh run启动后访问地址:

Part 2: The official Oozie examples

2.1. Extract the official examples package:

tar -zxvf oozie-examples.tar.gz

Extraction creates an examples directory with the following contents:

[root@master examples]# ll

total 12

drwxr-xr-x 26 1106 4001 4096 Mar 28 04:19 apps

drwxr-xr-x 4 root root 4096 Aug 2 10:17 input-data

drwxr-xr-x 3 1106 4001 4096 Mar 28 04:19 src

2.2. Upload examples to HDFS:

[root@master hadoop-2.6.0]# bin/hdfs dfs -put /opt/cdh5.14.2/oozie-4.1.0/examples/ examples

2.3. Edit /opt/cdh5.14.2/oozie-4.1.0/examples/apps/map-reduce/job.properties:

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

nameNode=hdfs://ns1

jobTracker=master.cdh.com:8032

queueName=default

examplesRoot=examples

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/apps/map-reduce/workflow.xml

outputDir=map-reduce

2.4. Replace the job.properties on HDFS with the edited local copy, /opt/cdh5.14.2/oozie-4.1.0/examples/apps/map-reduce/job.properties.

2.5. Run:

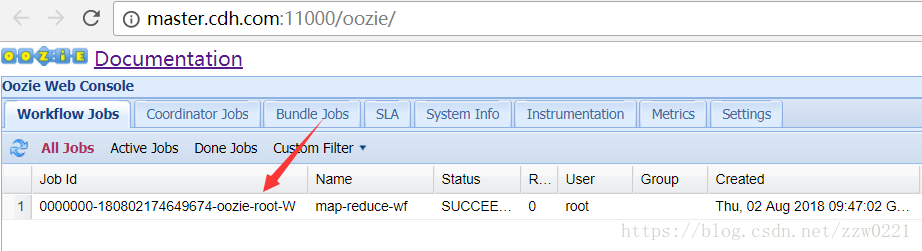

[root@master oozie-4.1.0]# bin/oozie job -oozie http://master.cdh.com:11000/oozie -config examples/apps/map-reduce/job.properties -run

job: 0000000-180802174649674-oozie-root-W

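2.6. Check the run in the Oozie web console: the job ID printed by the run command matches the job ID shown on the Oozie page.

Part 3: Write and run an Oozie workflow MapReduce action using the new API

3.1. In the Oozie root directory, create a directory named oozie-apps, copy the map-reduce directory from examples/apps into it, and rename the copy to mr-usercount-wf.

3.2. Edit job.properties: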

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

nameNode=hdfs://ns1

jobTracker=master.cdh.com:8032

queueName=default

oozieAppsRoot=user/root/oozie-apps

oozieDataRoot=user/root/oozie/datas

oozie.wf.application.path=${nameNode}/${oozieAppsRoot}/mr-usercount-wf/workflow.xml

inputDir=mr-usercount-wf/input

outputDir=mr-usercount-wf/output
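To see which HDFS paths these properties produce once the placeholders are filled in, a small stand-alone sketch can expand them by hand. The class JobPropertiesPreview is hypothetical and exists only for this illustration; Oozie performs its own parameter substitution and does not use anything like it.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for previewing paths; not part of Oozie.
class JobPropertiesPreview {

    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    // The values from the job.properties shown above.
    static Map<String, String> sampleProperties() {
        Map<String, String> props = new LinkedHashMap<String, String>();
        props.put("nameNode", "hdfs://ns1");
        props.put("oozieAppsRoot", "user/root/oozie-apps");
        props.put("oozieDataRoot", "user/root/oozie/datas");
        props.put("inputDir", "mr-usercount-wf/input");
        props.put("outputDir", "mr-usercount-wf/output");
        return props;
    }

    // Replaces each ${name} with its value; unknown names are left as-is.
    static String resolve(String template, Map<String, String> props) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuffer resolved = new StringBuffer();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = props.containsKey(name) ? props.get(name) : matcher.group(0);
            matcher.appendReplacement(resolved, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(resolved);
        return resolved.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = sampleProperties();
        // Workflow definition location.
        System.out.println(resolve("${nameNode}/${oozieAppsRoot}/mr-usercount-wf/workflow.xml", props));
        // Input and output directories as combined in workflow.xml.
        System.out.println(resolve("${nameNode}/${oozieDataRoot}/${inputDir}", props));
        System.out.println(resolve("${nameNode}/${oozieDataRoot}/${outputDir}", props));
    }
}
```

With these values the input directory expands to hdfs://ns1/user/root/oozie/datas/mr-usercount-wf/input, which is the directory the data file is uploaded to in step 3.6.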

3.3. Edit workflow.xml:

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<workflow-app xmlns="uri:oozie:workflow:0.5" name="mr-usercount-wf">

<start to="mr-node-usercount"/>

<action name="mr-node-usercount">

<map-reduce>

<job-tracker>${jobTracker}</job-tracker>

<name-node>${nameNode}</name-node>

<prepare>

<delete path="${nameNode}/${oozieDataRoot}/${outputDir}"/>

</prepare>

<configuration>

<property>

<name>mapreduce.job.queuename</name>

<value>${queueName}</value>

</property>

<property>

<name>mapred.mapper.new-api</name>

<value>true</value>

</property>

<property>

<name>mapred.reducer.new-api</name>

<value>true</value>

</property>

<!--map key value class-->

<property>

<name>mapreduce.job.map.class</name>

<value>com.zzw.mapreduce.userinfo.UserInfoMapper</value>

</property>

<property>

<name>mapreduce.map.output.key.class</name>

<value>org.apache.hadoop.io.Text</value>

</property>

<property>

<name>mapreduce.map.output.value.class</name>

<value>org.apache.hadoop.io.IntWritable</value>

</property>

<!--reduce key value class-->

<property>

<name>mapreduce.job.reduce.class</name>

<value>com.zzw.mapreduce.userinfo.UserInfoReducer</value>

</property>

<property>

<name>mapreduce.job.output.key.class</name>

<value>org.apache.hadoop.io.Text</value>

</property>

<property>

<name>mapreduce.job.output.value.class</name>

<value>org.apache.hadoop.io.IntWritable</value>

</property>

<property>

<name>mapreduce.input.fileinputformat.inputdir</name>

<value>${nameNode}/${oozieDataRoot}/${inputDir}</value>

</property>

<property>

<name>mapreduce.output.fileoutputformat.outputdir</name>

<value>${nameNode}/${oozieDataRoot}/${outputDir}</value>

</property>

</configuration>

</map-reduce>

<ok to="end"/>

<error to="fail"/>

</action>

<kill name="fail">

<message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>

</kill>

<end name="end"/>

</workflow-app>

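3.4. Put the previously built JAR, which counts newly registered users per month, into the workflow application's lib directory, oozie-apps/mr-usercount-wf/lib (Oozie adds the JARs in the lib/ directory next to workflow.xml to the job's classpath).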

3.5. Upload oozie-apps to HDFS.

3.6. Create the input directory on HDFS, as defined in job.properties, and upload the data file:

bin/hdfs dfs -mkdir -p oozie/datas/mr-usercount-wf/input

bin/hdfs dfs -put /opt/data/bbc/20180728/tb_user.csv /user/root/oozie/datas/mr-usercount-wf/input

3.7. Run:

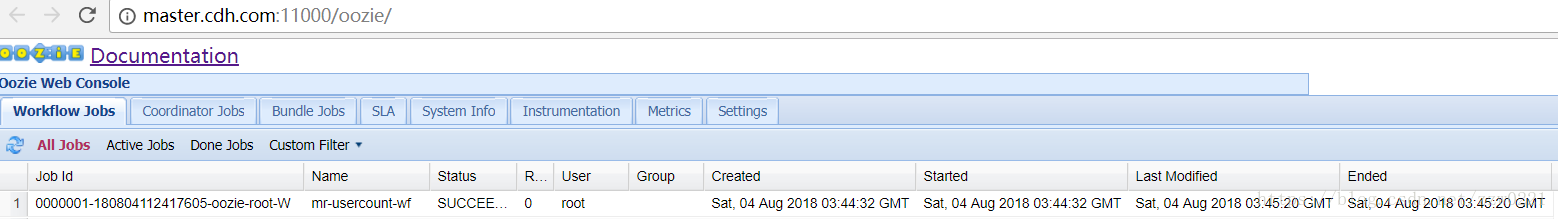

[root@master oozie-4.1.0]# bin/oozie job -oozie http://master.cdh.com:11000/oozie -config oozie-apps/mr-usercount-wf/job.properties -run

job: 0000001-180804112417605-oozie-root-W

3.8. Check the job in the Oozie web console.

3.9. View the MapReduce output:

[root@master hadoop-2.6.0]# bin/hdfs dfs -text /user/root/oozie/datas/mr-usercount-wf/output/part*

18/08/04 12:09:55 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2017-12 41

2018-02 14

2018-05 21

2017-10 17

2018-03 32

2018-06 10

2017-11 28

2018-01 18

2018-04 13

2018-07 3

3.10. The two Java source files:

UserInfoMapper.java

package com.zzw.mapreduce.userinfo;

import java.io.IOException;
import java.text.SimpleDateFormat;
import java.util.Date;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Emits (registration month as "yyyy-MM", 1) for every well-formed user record.
public class UserInfoMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Reused across records instead of allocating new Writables per call.
    private final Text mapOutputKey = new Text();
    private final IntWritable mapOutputValue = new IntWritable(1);

    @Override
    public void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        String[] splits = value.toString().split("\\t");

        // Only records with exactly 13 tab-separated fields are counted.
        if (splits.length == 13) {
            // Field 11 holds the creation time as epoch milliseconds.
            String createTime = splits[11];
            System.out.println("createTime=" + createTime);

            // Formats in the JVM's default time zone.
            SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM");
            String monthKey = sdf.format(new Date(Long.parseLong(createTime)));

            mapOutputKey.set(monthKey);
            context.write(mapOutputKey, mapOutputValue);
        }
    }
}
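The key-extraction logic of the mapper can be checked without a Hadoop cluster. The following is a minimal sketch using only the JDK; the class MonthKeySketch and the sample record are made up for illustration, and the time zone is passed in explicitly (an assumption of this sketch, since the mapper itself relies on the JVM default).

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

// Hypothetical stand-alone version of the mapper's key extraction.
class MonthKeySketch {

    // Returns the "yyyy-MM" key for a record, or null when the record
    // does not have exactly 13 tab-separated fields.
    static String monthKey(String line, TimeZone zone) {
        String[] fields = line.split("\\t");
        if (fields.length != 13) {
            return null;
        }
        SimpleDateFormat format = new SimpleDateFormat("yyyy-MM");
        format.setTimeZone(zone);
        // Field 11 is interpreted as epoch milliseconds, as in the mapper.
        return format.format(new Date(Long.parseLong(fields[11])));
    }

    public static void main(String[] args) {
        // Made-up record: 13 fields, with 2018-01-01T00:00:00Z in field 11.
        String record = "a\tb\tc\td\te\tf\tg\th\ti\tj\tk\t1514764800000\tm";
        System.out.println(monthKey(record, TimeZone.getTimeZone("UTC")));
        // A record with the wrong number of fields is skipped.
        System.out.println(monthKey("too\tshort", TimeZone.getTimeZone("UTC")));
    }
}
```

Timestamps close to a month boundary can land in a different month depending on the time zone, so a check like this is best run with the same zone as the cluster nodes.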

UserInfoReducer.java

package com.zzw.mapreduce.userinfo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Sums the per-record counts emitted by UserInfoMapper for each month key.
public class UserInfoReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    // Reused across keys instead of allocating a new Writable per call.
    private final IntWritable outputValue = new IntWritable();

    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }
        outputValue.set(sum);
        context.write(key, outputValue);
    }
}
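What the reducer computes can be reproduced locally by counting occurrences of each month key. This is only a sketch under simplifying assumptions: the class MonthlyCountSketch is hypothetical, the keys are made-up sample values, and a sorted map stands in for the shuffle and reduce phases.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local stand-in for the shuffle + reduce step.
class MonthlyCountSketch {

    // Counts how many times each key occurs; TreeMap keeps keys sorted.
    static Map<String, Integer> countByKey(List<String> keys) {
        Map<String, Integer> counts = new TreeMap<String, Integer>();
        for (String key : keys) {
            Integer current = counts.get(key);
            counts.put(key, current == null ? 1 : current + 1);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up mapper output keys, one per user record.
        List<String> keys = Arrays.asList("2018-01", "2017-12", "2018-01");
        for (Map.Entry<String, Integer> entry : countByKey(keys).entrySet()) {
            System.out.println(entry.getKey() + "\t" + entry.getValue());
        }
    }
}
```

Unlike this sketch, the listing in step 3.9 is not globally sorted, because it concatenates every file matching part*.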