Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/lx1309244704/article/details/83892399

Upload and extract sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz, then rename the extracted directory:

tar -zxf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop

Go to Sqoop's conf directory (cd /home/sqoop/conf) and copy sqoop-env-template.sh to a new file named sqoop-env.sh:

cd /home/sqoop/conf

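cp sqoop-env-template.sh sqoop-env.sh

Then edit it with vi sqoop-env.sh. I don't have HBase installed, so HBASE_HOME can be left unset; the run-time warning that HBASE_HOME cannot be found can be ignored (it only means HBase imports are unavailable).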

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/home/hadoop

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop

#set the path to where bin/hbase is available

#export HBASE_HOME=

#Set the path to where bin/hive is available

export HIVE_HOME=/home/hive

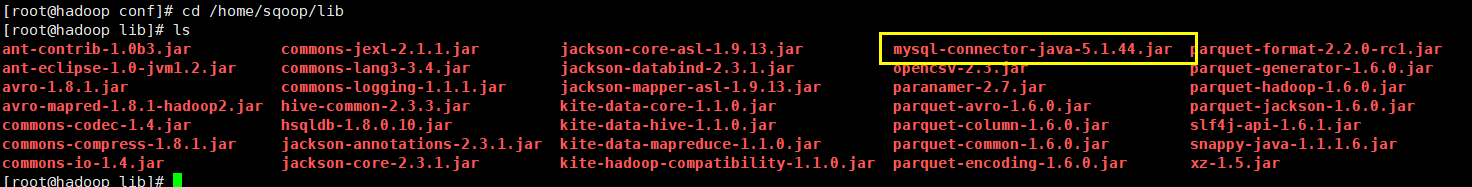
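If you would rather script this step than edit the file in vi, a minimal sketch can copy the template and append the same exports. It assumes this article's layout (/home/sqoop, /home/hadoop, /home/hive) and, like the manual steps, leaves HBASE_HOME unset; configure_sqoop_env is a hypothetical helper name. Appending works because the template ships with these settings commented out, so the appended lines are the ones that take effect.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create sqoop-env.sh from the shipped template and
# append the settings used in this article. HBASE_HOME is deliberately
# left unset, as in the manual steps.
configure_sqoop_env() {
  # Sqoop conf directory; defaults to this article's layout.
  local conf_dir="${1:-/home/sqoop/conf}"

  cp "$conf_dir/sqoop-env-template.sh" "$conf_dir/sqoop-env.sh" || return 1

  # The quoted 'EOF' stops the shell from expanding anything in the block.
  cat >> "$conf_dir/sqoop-env.sh" <<'EOF'

# Added for this installation
export HADOOP_COMMON_HOME=/home/hadoop
export HADOOP_MAPRED_HOME=/home/hadoop
export HIVE_HOME=/home/hive
EOF
}
```

Usage: configure_sqoop_env /home/sqoop/conf (or with no argument, which uses the same default).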

Add the MySQL JDBC driver. We already put the MySQL driver jar into Hive's lib directory when installing Hive, so we can simply copy it over:

cp /home/hive/lib/mysql-connector-java-5.1.44.jar /home/sqoop/lib

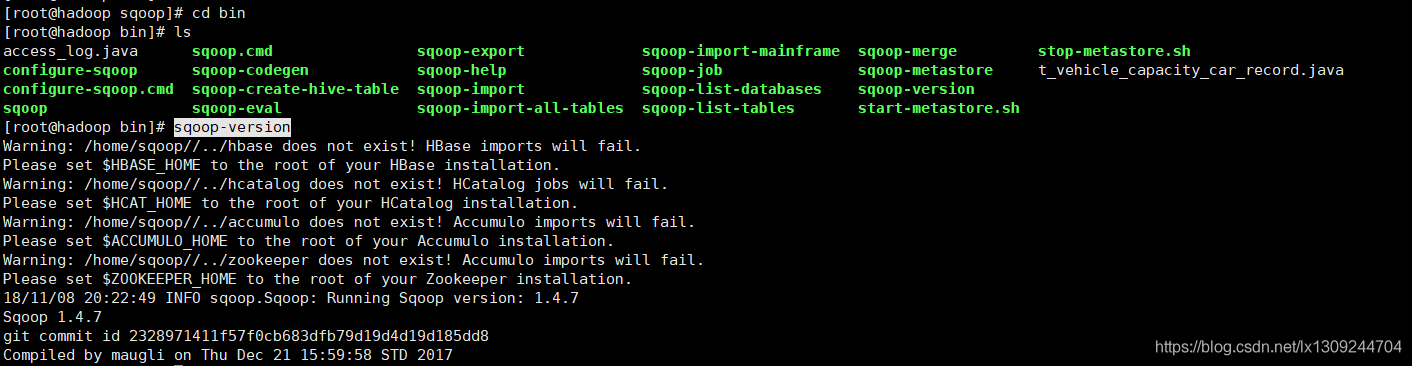
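The jar name above includes a specific Connector/J version (5.1.44). If your Hive installation has a different one, a sketch that copies whatever mysql-connector-java-*.jar is in Hive's lib directory, and fails clearly when there is none, could look like this. The function name copy_mysql_driver is hypothetical; the default paths are the ones used in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the MySQL JDBC driver from Hive's lib
# directory into Sqoop's lib directory, whatever its version number.
copy_mysql_driver() {
  local src_dir="${1:-/home/hive/lib}"
  local dst_dir="${2:-/home/sqoop/lib}"

  # Without nullglob an unmatched pattern stays literal, so checking that
  # the first element exists tells us whether any jar matched.
  local jars=("$src_dir"/mysql-connector-java-*.jar)
  if [ ! -e "${jars[0]}" ]; then
    echo "no mysql-connector-java-*.jar found in $src_dir" >&2
    return 1
  fi

  cp "${jars[@]}" "$dst_dir"/ || return 1
}
```

Usage: copy_mysql_driver /home/hive/lib /home/sqoop/lib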

Next, verify that Sqoop was installed successfully: go to sqoop/bin and run sqoop-version:

./sqoop-version

If the installation succeeded, the output looks like this:

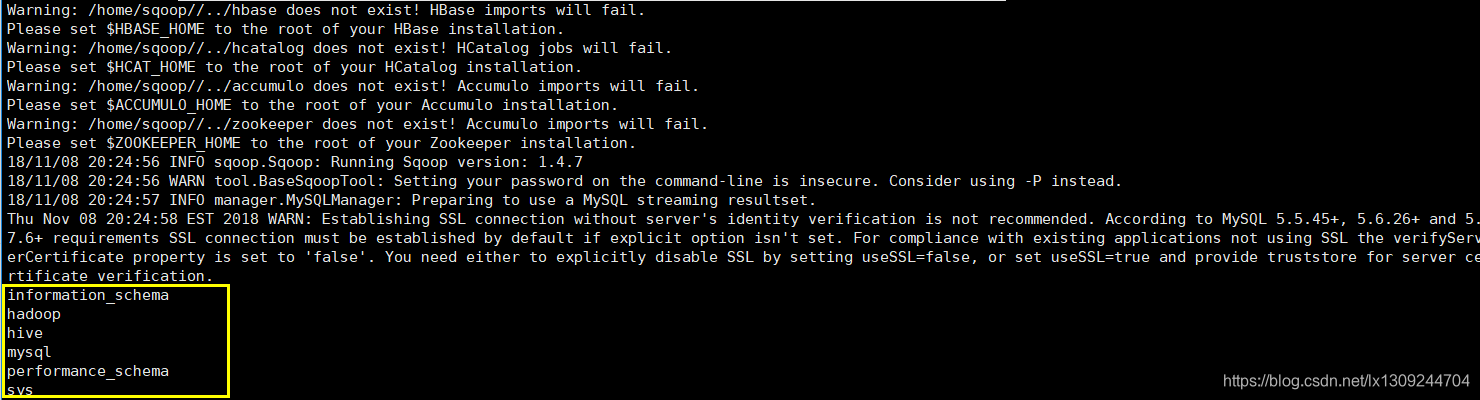
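To check the installation from a script instead of reading the output, you can extract the version number. This sketch assumes the output contains a line of the form `Sqoop 1.4.7`; other lines, such as the HBASE_HOME warning, are ignored. parse_sqoop_version is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read sqoop-version output on stdin and print the
# version number from the line that looks like "Sqoop 1.4.7".
parse_sqoop_version() {
  sed -n 's/^Sqoop \([0-9][0-9.]*\)$/\1/p'
}
```

Usage, from sqoop/bin: [ "$(./sqoop-version 2>/dev/null | parse_sqoop_version)" = 1.4.7 ] && echo "Sqoop 1.4.7 found"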

Verify connectivity between Sqoop and MySQL:

./sqoop-list-databases --connect jdbc:mysql://localhost:3306 --username root --password root

If the connection works, the command prints the list of databases on the MySQL server.

Import from the database into HDFS without specifying a location (by default the data goes under /user/root):

bin/sqoop import --connect jdbc:mysql://192.168.131.155:3306/hadoop --username root --password root --table shadowsocks_log -m 1

Import from the database into HDFS, specifying the location:

bin/sqoop import --connect jdbc:mysql://192.168.131.155:3306/hadoop --username root --password root --table shadowsocks_log --target-dir /sqoop/mysql/hadoop/shadowsocks_log -m 1

Import from the database into Hive:

bin/sqoop import --connect jdbc:mysql://192.168.131.155:3306/hadoop --username root --password root --table shadowsocks_log --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-table shadowsocks_log
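The three import commands above differ only in a few options, so if you run them often a small wrapper can assemble them. This is a sketch, not part of Sqoop: the function names sqoop_import and default_import_dir, the DRY_RUN switch and the SQOOP_HOME/JDBC_URL/DB_USER defaults are hypothetical, with defaults that simply mirror this article's setup. The options follow the commands above (using -m as the short form of --num-mappers), except that -P replaces --password so Sqoop prompts for the password instead of exposing it on the command line. default_import_dir encodes the rule behind the /user/root location: without --target-dir, Sqoop writes into a directory named after the table inside the running user's HDFS home directory.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the sqoop import commands in this article.
#   sqoop_import TABLE            -> HDFS, default location
#   sqoop_import TABLE /hdfs/dir  -> HDFS, --target-dir /hdfs/dir
#   sqoop_import TABLE hive       -> Hive table of the same name
# With DRY_RUN=1 the command is printed instead of executed.
SQOOP_HOME="${SQOOP_HOME:-/home/sqoop}"
JDBC_URL="${JDBC_URL:-jdbc:mysql://192.168.131.155:3306/hadoop}"
DB_USER="${DB_USER:-root}"

# HDFS directory used when --target-dir is omitted, assuming HDFS home
# directories follow the usual /user/<name> convention.
default_import_dir() {
  local table="$1" user="${2:-$(id -un)}"
  echo "/user/${user}/${table}"
}

sqoop_import() {
  local table="$1" mode="${2:-hdfs}"
  # -P: Sqoop prompts for the password instead of taking it as an argument.
  local cmd=("$SQOOP_HOME/bin/sqoop" import
             --connect "$JDBC_URL" --username "$DB_USER" -P
             --table "$table")

  case "$mode" in
    hdfs) cmd+=(-m 1) ;;
    hive) cmd+=(--fields-terminated-by ',' --delete-target-dir
                --num-mappers 1 --hive-import --hive-table "$table") ;;
    /*)   cmd+=(--target-dir "$mode" -m 1) ;;
    *)    echo "unknown mode: $mode" >&2; return 1 ;;
  esac

  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"   # space-joined, for display only
  else
    "${cmd[@]}"
  fi
}
```

For example, DRY_RUN=1 sqoop_import shadowsocks_log hive prints the Hive command without running it, and hdfs dfs -ls "$(default_import_dir shadowsocks_log)" lists the files of a default-location import (with a single mapper, typically one part-m-00000 file).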