Copyright notice: This is an original article by the blogger (blog: https://blog.csdn.net/imbingoer). Do not repost without the blogger's permission. https://blog.csdn.net/imbingoer/article/details/85048068

Original reference: https://blog.csdn.net/ljx1528/article/details/81436741

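This post continues from the previous one on installing Docker on CentOS.

My current environment:

Three desktop machines running CentOS 7.5

Address assignments: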

192.168.14.125 k8s-master

192.168.14.203 k8s-node1

192.168.14.154 k8s-node2

I. Prerequisites

- Turn off the firewall on all machines (run on all three)

systemctl stop firewalld.service # stop the firewall

systemctl disable firewalld.service # do not start it at boot

firewall-cmd --state # check the status

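- Disable SELinux

Open the config file (run on all three machines)

vi /etc/selinux/config

Then change SELINUX=enforcing to SELINUX=disabled. The change takes effect after a reboot.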
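If you would rather not edit the file by hand on three machines, the same change can be scripted. This is only a minimal sketch: the function name disable_selinux is my own, and it assumes the file currently contains the line SELINUX=enforcing.

```shell
# Hypothetical helper (not part of any package): switch SELINUX=enforcing
# to SELINUX=disabled in the given file, keeping a .bak backup next to it.
disable_selinux() {
    conf="${1:-/etc/selinux/config}"
    sed -i.bak 's/^SELINUX=enforcing$/SELINUX=disabled/' "$conf"
}

# Usage on each machine (as root), followed by a reboot:
#   disable_selinux
#   setenforce 0    # optional: permissive mode until the reboot
```

The pattern is anchored on the whole line so that SELINUXTYPE and the comment lines in the file are left alone.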

- Set the hostname on each host

On the master: sudo hostnamectl --static set-hostname k8s-master

On node1: sudo hostnamectl --static set-hostname k8s-node1

On node2: sudo hostnamectl --static set-hostname k8s-node2

- Edit the hosts file (run on all three machines)

Open the file

vi /etc/hosts

Add the following entries

192.168.14.125 k8s-master

192.168.14.125 etcd

192.168.14.125 registry

192.168.14.203 k8s-node1

192.168.14.154 k8s-node2
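Because the same five entries go onto every machine, it can help to add them with a small function that is safe to run more than once. The sketch below is an assumption of mine rather than part of the original steps; add_host is a made-up name.

```shell
# Hypothetical helper: append "IP name" to a hosts file unless that
# exact IP/name pair is already present. Defaults to /etc/hosts.
add_host() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    awk -v ip="$ip" -v n="$name" \
        '$1 == ip && $2 == n { found = 1 } END { exit !found }' "$file" \
        || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on each of the three machines (as root):
#   add_host 192.168.14.125 k8s-master
#   add_host 192.168.14.125 etcd
#   add_host 192.168.14.125 registry
#   add_host 192.168.14.203 k8s-node1
#   add_host 192.168.14.154 k8s-node2
```

Running it twice leaves the file unchanged the second time, so it is harmless to repeat after a failed setup.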

II. Install Docker (on all three machines)

yum -y install docker # install docker

chkconfig docker on # start at boot

service docker start # start the service

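If Docker was installed previously, you can uninstall it first and then rerun the commands above.

To uninstall, first list the installed packages:

yum list installed | grep docker

Then remove each package shown in that output:

yum -y remove *** # where *** is a package name from the yum list installed | grep docker output

Once every installed docker package has been removed, run

rm -rf /var/lib/docker # delete containers, images, and other data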
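The list-then-remove steps can be chained so that you do not have to copy package names by hand. A rough sketch, assuming the package name is the first column of the yum output; docker_packages is a name I made up.

```shell
# Hypothetical helper: read `yum list installed` output on stdin and
# print the first column of every line whose package name mentions docker.
docker_packages() {
    awk '$1 ~ /docker/ { print $1 }'
}

# Usage (as root); xargs -r skips the removal when nothing matches:
#   yum list installed | docker_packages | xargs -r yum -y remove
#   rm -rf /var/lib/docker
```

Matching on the first column only avoids picking up unrelated packages that merely come from a repository with docker in its name.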

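III. Install etcd

I currently run etcd on the k8s-master node, so run this part on k8s-master.

- Install etcd

yum -y install etcd

- Edit etcd's default configuration file

Open the file

vim /etc/etcd/etcd.conf

Set the following values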

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="default"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.14.125:2379"
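The files under /etc/etcd, /etc/kubernetes and /etc/sysconfig used in this post are all plain KEY="value" files, so the edits can also be applied from a script. The helper below is a sketch under that assumption; set_conf is my own name, and it expects values that contain no | or & characters.

```shell
# Hypothetical helper: set KEY="value" in an env-style config file.
# An existing line (commented out or not) is replaced in place;
# otherwise the setting is appended. A .bak backup is kept on replace.
set_conf() {
    file="$1"; key="$2"; value="$3"
    if grep -qE "^#?${key}=" "$file"; then
        sed -i.bak -E "s|^#?${key}=.*|${key}=\"${value}\"|" "$file"
    else
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}

# Usage on k8s-master (as root):
#   set_conf /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
#   set_conf /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://192.168.14.125:2379"
```

Listening on 0.0.0.0 is what lets the two nodes reach etcd, while the advertise URL is the address clients are told to use.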

- Start etcd and verify that it is healthy

Run the following four commands in order

systemctl start etcd

systemctl enable etcd

etcdctl set testdir/testkey0 0

etcdctl -C http://etcd:2379 cluster-health

IV. Deploy the k8s master (run on k8s-master)

- Install k8s

yum -y install kubernetes

- Edit the k8s configuration files

Open /etc/kubernetes/apiserver

vim /etc/kubernetes/apiserver

Set the following values

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.14.125:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

KUBE_API_ARGS=""

Open /etc/kubernetes/config

vim /etc/kubernetes/config

Set the following values

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://k8s-master:8080"

- Start the services and enable them at boot

Run the following commands in order

systemctl enable kube-apiserver.service

systemctl start kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl start kube-scheduler.service
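The enable/start pairs above follow one pattern, so they can be folded into a loop. This is just a sketch: enable_and_start and the SYSTEMCTL override are my own additions, the latter so the loop can be dry-run with echo before touching real services.

```shell
# SYSTEMCTL can be overridden (for example SYSTEMCTL=echo) for a dry run.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# Hypothetical helper: enable and start each named service in order,
# stopping at the first one that fails.
enable_and_start() {
    for svc in "$@"; do
        $SYSTEMCTL enable "$svc.service" && $SYSTEMCTL start "$svc.service" || return 1
    done
}

# Usage on k8s-master (as root):
#   enable_and_start kube-apiserver kube-controller-manager kube-scheduler
```

The order matters here: the controller manager and scheduler both talk to the API server, so it is started first.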

V. Deploy k8s-node1 (run only on k8s-node1)

- Install k8s

yum -y install kubernetes

- Configure k8s

Open /etc/kubernetes/config

vim /etc/kubernetes/config

Set the following values

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.14.125:8080"

Open /etc/kubernetes/kubelet

vim /etc/kubernetes/kubelet

Set the following values

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=192.168.14.203"

KUBELET_API_SERVER="--api-servers=http://192.168.14.125:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS="--cluster-dns=192.168.14.125 --cluster-domain=cluster.local"

- Start the services and enable them at boot

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

VI. Deploy k8s-node2 (run only on k8s-node2)

- Install k8s

yum -y install kubernetes

- Configure k8s

Open /etc/kubernetes/config

vim /etc/kubernetes/config

Set the following values

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.14.125:8080"

Open /etc/kubernetes/kubelet

vim /etc/kubernetes/kubelet

Set the following values

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=192.168.14.154"

KUBELET_API_SERVER="--api-servers=http://192.168.14.125:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS="--cluster-dns=192.168.14.125 --cluster-domain=cluster.local"
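The kubelet settings on the two nodes differ only in the --hostname-override value, which must be the node's own IP. To avoid copy-paste slips, the file contents can be generated from the two addresses. A minimal sketch; kubelet_conf is a hypothetical name, and the values are the ones used in this post.

```shell
# Hypothetical helper: print the kubelet settings for one node.
# $1 = the node's own IP, $2 = the master's IP.
kubelet_conf() {
    node_ip="$1"; master_ip="$2"
    cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS="--cluster-dns=${master_ip} --cluster-domain=cluster.local"
EOF
}

# Usage: review the output first, then write it to /etc/kubernetes/kubelet
# (this replaces the whole file):
#   kubelet_conf 192.168.14.203 192.168.14.125   # on k8s-node1
#   kubelet_conf 192.168.14.154 192.168.14.125   # on k8s-node2
```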

- Start the services and enable them at boot

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

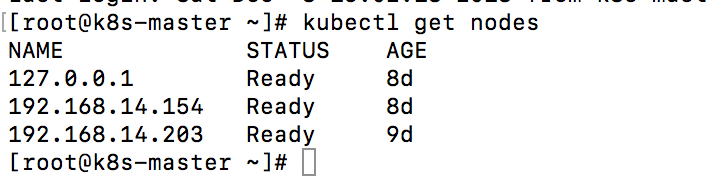

- 查看节点是否安装工正常(在k8s-master机器上执行)

执行命令

kubectl get nodes

显示的结果为以下类似情况则表明安装正常

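VII. Create the Flannel overlay network

- Install flannel (run on all three machines)

yum -y install flannel

- Configure flannel (run on all three machines)

Open /etc/sysconfig/flanneld

vim /etc/sysconfig/flanneld

Set the following values

flanneld configuration on k8s-master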

FLANNEL_ETCD_ENDPOINTS="http://192.168.14.125:2379"

FLANNEL_ETCD_PREFIX="/k8s/network"

flanneld configuration on k8s-node1

FLANNEL_ETCD_ENDPOINTS="http://192.168.14.125:2379"

FLANNEL_ETCD_PREFIX="/k8s/network"

flanneld configuration on k8s-node2

FLANNEL_ETCD_ENDPOINTS="http://192.168.14.125:2379"

FLANNEL_ETCD_PREFIX="/k8s/network"

- Set the flannel key in etcd (run only on k8s-master)

Run

etcdctl set /k8s/network/config '{"Network": "172.20.0.0/16"}'

Check the value

etcdctl get /k8s/network/config
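Note that the key written here is the FLANNEL_ETCD_PREFIX value from the flanneld file with /config appended, and the value is a small JSON document. If you want to try a different address range, building the JSON from a variable avoids quoting mistakes. A sketch only; flannel_config is a made-up helper name.

```shell
# Hypothetical helper: build the JSON value that flannel reads from etcd
# for a given overlay network range in CIDR notation.
flannel_config() {
    printf '{"Network": "%s"}' "$1"
}

# Usage on k8s-master:
#   etcdctl set /k8s/network/config "$(flannel_config 172.20.0.0/16)"
```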

- Start Flannel

1. On k8s-master, run

systemctl enable flanneld.service

systemctl start flanneld.service

service docker restart

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

2. On k8s-node1, run

systemctl enable flanneld.service

systemctl start flanneld.service

service docker restart

systemctl restart kubelet.service

systemctl restart kube-proxy.service

3. On k8s-node2, run

systemctl enable flanneld.service

systemctl start flanneld.service

service docker restart

systemctl restart kubelet.service

systemctl restart kube-proxy.service
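After the restarts it is worth checking that each machine received its own subnet from the 172.20.0.0/16 range. The sketch below assumes flanneld recorded its lease in an env file at /run/flannel/subnet.env with a FLANNEL_SUBNET entry; that path is an assumption about the packaged flannel, so adjust it if your system differs. flannel_subnet is my own helper name.

```shell
# Hypothetical helper: print the subnet flanneld leased for this host.
# Assumes an env file with a FLANNEL_SUBNET=... line
# (commonly /run/flannel/subnet.env).
flannel_subnet() {
    env_file="${1:-/run/flannel/subnet.env}"
    # Source the file in a subshell so its variables do not leak out.
    ( . "$env_file" && printf '%s\n' "$FLANNEL_SUBNET" )
}

# Usage on each machine:
#   flannel_subnet
#   ip -4 addr show docker0    # the docker0 address should fall inside that subnet
```

If docker0 still shows an address outside the flannel subnet, Docker was most likely not restarted after flanneld came up.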