1. Packet direction

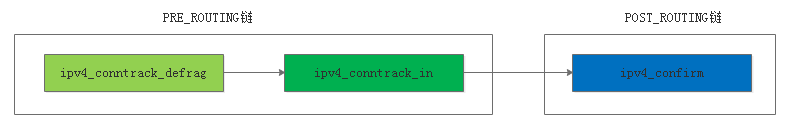

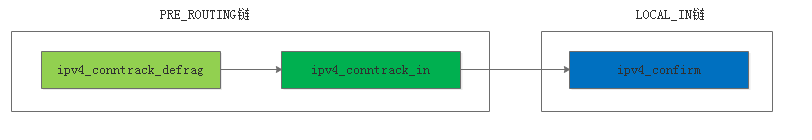

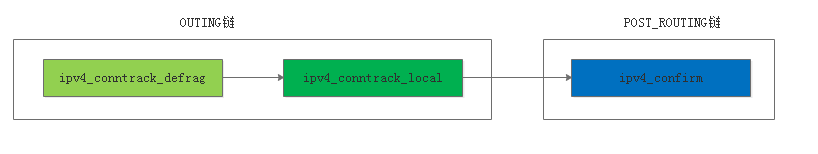

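To analyze how connection tracking is implemented, we first need to look at the paths a packet can take through the protocol stack. There are three: packets forwarded by the local host, packets received by the local host, and packets generated by the local host. As analyzed earlier, connection tracking registers hook functions on only four chains: PRE_ROUTING, LOCAL_OUT, LOCAL_IN and POST_ROUTING. ipv4_conntrack_in is registered on PRE_ROUTING and ipv4_conntrack_local on LOCAL_OUT; ipv4_confirm is registered on both LOCAL_IN and POST_ROUTING. PRE_ROUTING and LOCAL_OUT are the entry points of connection tracking, and LOCAL_IN and POST_ROUTING are its exit points.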

static struct nf_hook_ops ipv4_conntrack_ops[] __read_mostly = {

{

/* Packets entering the netfilter framework get a conntrack entry on PRE_ROUTING, the first chain */

.hook = ipv4_conntrack_in,

.owner = THIS_MODULE,

.pf = NFPROTO_IPV4,

.hooknum = NF_INET_PRE_ROUTING,

.priority = NF_IP_PRI_CONNTRACK,

},

{

/* Locally generated packets get a conntrack entry on the LOCAL_OUT chain */

.hook = ipv4_conntrack_local,

.owner = THIS_MODULE,

.pf = NFPROTO_IPV4,

.hooknum = NF_INET_LOCAL_OUT,

.priority = NF_IP_PRI_CONNTRACK,

},

{

/* Packets leaving the host have their conntrack entry confirmed on POST_ROUTING */

.hook = ipv4_confirm,

.owner = THIS_MODULE,

.pf = NFPROTO_IPV4,

.hooknum = NF_INET_POST_ROUTING,

.priority = NF_IP_PRI_CONNTRACK_CONFIRM,

},

{

/* Packets delivered to the local host have their conntrack entry confirmed on LOCAL_IN */

.hook = ipv4_confirm,

.owner = THIS_MODULE,

.pf = NFPROTO_IPV4,

.hooknum = NF_INET_LOCAL_IN,

.priority = NF_IP_PRI_CONNTRACK_CONFIRM,

},

};

Hook functions for IP fragment reassembly

static struct nf_hook_ops ipv4_defrag_ops[] = {

{

/* Reassemble fragmented packets */

.hook = ipv4_conntrack_defrag,

.owner = THIS_MODULE,

.pf = PF_INET,

.hooknum = NF_INET_PRE_ROUTING,

.priority = NF_IP_PRI_CONNTRACK_DEFRAG,

},

{

.hook = ipv4_conntrack_defrag,

.owner = THIS_MODULE,

.pf = PF_INET,

.hooknum = NF_INET_LOCAL_OUT,

.priority = NF_IP_PRI_CONNTRACK_DEFRAG,

},

};

1.1 Packets forwarded by the local host

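A forwarded packet traverses the PRE_ROUTING and POST_ROUTING chains, so the hook functions it passes through are ipv4_conntrack_defrag -> ipv4_conntrack_in -> ipv4_confirm. ipv4_conntrack_defrag reassembles IP fragments, ipv4_conntrack_in creates (or looks up) a connection tracking entry, and ipv4_confirm confirms that entry.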

1.2 Packets destined for the local host

A packet destined for the local host traverses the PRE_ROUTING and LOCAL_IN chains; the hook functions it passes through are ipv4_conntrack_defrag -> ipv4_conntrack_in -> ipv4_confirm.

1.3 Packets generated by the local host

A locally generated packet traverses the LOCAL_OUT and POST_ROUTING chains; the hook functions it passes through are ipv4_conntrack_defrag -> ipv4_conntrack_local -> ipv4_confirm.
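The three hook sequences above follow from two facts: which chains a packet visits, and the priority each hook is registered with on a chain. The sketch below is a user-space model of that ordering, not kernel code. All `sk_`-prefixed names are invented for illustration; the priority values are the ones I recall from `<linux/netfilter_ipv4.h>`.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>
#include <stdlib.h>

/* Sketch-local names for the four hook points used by IPv4 conntrack. */
enum sk_hook { SK_PRE_ROUTING, SK_LOCAL_IN, SK_LOCAL_OUT, SK_POST_ROUTING };

enum {
    SK_PRI_DEFRAG  = -400,   /* NF_IP_PRI_CONNTRACK_DEFRAG  */
    SK_PRI_CT      = -200,   /* NF_IP_PRI_CONNTRACK         */
    SK_PRI_CONFIRM = INT_MAX /* NF_IP_PRI_CONNTRACK_CONFIRM */
};

struct sk_hook_op {
    const char  *name;
    enum sk_hook hooknum;
    int          priority;
};

/* The six registrations from the two arrays above, deliberately shuffled. */
static const struct sk_hook_op sk_ops[] = {
    { "ipv4_confirm",          SK_POST_ROUTING, SK_PRI_CONFIRM },
    { "ipv4_conntrack_in",     SK_PRE_ROUTING,  SK_PRI_CT      },
    { "ipv4_conntrack_defrag", SK_LOCAL_OUT,    SK_PRI_DEFRAG  },
    { "ipv4_confirm",          SK_LOCAL_IN,     SK_PRI_CONFIRM },
    { "ipv4_conntrack_local",  SK_LOCAL_OUT,    SK_PRI_CT      },
    { "ipv4_conntrack_defrag", SK_PRE_ROUTING,  SK_PRI_DEFRAG  },
};
#define SK_NOPS (sizeof(sk_ops) / sizeof(sk_ops[0]))

/* Lower priority value runs first; written to avoid overflow with INT_MAX. */
static int sk_by_priority(const void *a, const void *b)
{
    const struct sk_hook_op *x = a, *y = b;
    return (x->priority > y->priority) - (x->priority < y->priority);
}

/* Collect the hook names a packet meets when it visits `hooks` in order. */
size_t sk_path(const enum sk_hook *hooks, size_t nhooks,
               const char **out, size_t max)
{
    size_t n = 0;

    assert(hooks != NULL && out != NULL);
    for (size_t i = 0; i < nhooks; i++) {
        struct sk_hook_op on_hook[SK_NOPS];
        size_t m = 0;

        for (size_t j = 0; j < SK_NOPS; j++)
            if (sk_ops[j].hooknum == hooks[i])
                on_hook[m++] = sk_ops[j];
        qsort(on_hook, m, sizeof(on_hook[0]), sk_by_priority);
        for (size_t j = 0; j < m && n < max; j++)
            out[n++] = on_hook[j].name;
    }
    return n;
}
```

Calling `sk_path` with `{ SK_PRE_ROUTING, SK_POST_ROUTING }` models the forwarded path, `{ SK_PRE_ROUTING, SK_LOCAL_IN }` the local-delivery path, and `{ SK_LOCAL_OUT, SK_POST_ROUTING }` the locally generated path.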

2. ipv4_conntrack_defrag

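ipv4_conntrack_defrag handles IP fragment reassembly. It first checks skb->nfct: if it is non-NULL and does not point to a template, the packet already has a connection tracking entry and the function returns immediately. Otherwise, if the packet is a fragment, it calls nf_ct_ipv4_gather_frags to reassemble it.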

static unsigned int ipv4_conntrack_defrag(unsigned int hooknum,

struct sk_buff *skb,

const struct net_device *in,

const struct net_device *out,

int (*okfn)(struct sk_buff *))

{

...

/* The packet already has a conntrack entry: return immediately */

if (skb->nfct && !nf_ct_is_template((struct nf_conn *)skb->nfct))

return NF_ACCEPT;

#endif

#endif

/* Gather fragments. */

if (ip_hdr(skb)->frag_off & htons(IP_MF | IP_OFFSET)) {

enum ip_defrag_users user = nf_ct_defrag_user(hooknum, skb);

// reassemble the fragments

if (nf_ct_ipv4_gather_frags(skb, user))

return NF_STOLEN;

}

return NF_ACCEPT;

}
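The fragment test in the function above, `frag_off & htons(IP_MF | IP_OFFSET)`, can be tried in isolation. The following is a small user-space sketch of that check; the `SK_`/`sk_` names are invented here, and the mask values are the IPv4 header flag bits from RFC 791.

```c
#include <arpa/inet.h>  /* htons */
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bits of the 16-bit IPv4 "flags + fragment offset" field (host order). */
#define SK_IP_DF     0x4000u  /* don't fragment */
#define SK_IP_MF     0x2000u  /* more fragments follow */
#define SK_IP_OFFSET 0x1FFFu  /* fragment offset, in 8-byte units */

static_assert((SK_IP_DF & (SK_IP_MF | SK_IP_OFFSET)) == 0,
              "DF must not be part of the fragment mask");

/*
 * frag_off_net is the field exactly as stored in the header (network byte
 * order). A packet is a fragment if MF is set (first or middle fragment)
 * or if the offset is non-zero (middle or last fragment).
 */
bool sk_is_fragment(uint16_t frag_off_net)
{
    return (frag_off_net & htons(SK_IP_MF | SK_IP_OFFSET)) != 0;
}
```

Converting the mask with `htons` once, instead of converting the header field, is the same trick the kernel code uses: the comparison then happens directly in network byte order.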

nf_ct_ipv4_gather_frags ultimately calls ip_defrag to reassemble the fragments.

/* Returns new sk_buff, or NULL */

static int nf_ct_ipv4_gather_frags(struct sk_buff *skb, u_int32_t user)

{

...

/* Reassemble the fragmented packet */

err = ip_defrag(skb, user);

...

}

3. ipv4_conntrack_in

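ipv4_conntrack_in is a thin wrapper around nf_conntrack_in, and ipv4_conntrack_local calls nf_conntrack_in as well, so nf_conntrack_in is what we analyze below. It runs on the PRE_ROUTING and LOCAL_OUT chains, the two entry chains of netfilter, and its main job is to initialize a connection entry and update the connection state.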

3.1 Checking skb->nfct

First, if skb->nfct is not NULL and nf_ct_is_template returns false, the packet already has a connection tracking entry and the function returns immediately.
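The check has three outcomes rather than two, which is easy to miss. A minimal user-space sketch, assuming simplified stand-ins for `struct sk_buff` and `struct nf_conn` (the `sk_` types and the bit index below are invented for illustration and are not the kernel's definitions):

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SK_TEMPLATE_BIT 0  /* sketch-local bit index, not the kernel's value */

struct sk_conn { unsigned long status; };
struct sk_skb  { struct sk_conn *nfct; };

bool sk_is_template(const struct sk_conn *ct)
{
    assert(ct != NULL);
    return (ct->status >> SK_TEMPLATE_BIT) & 1UL;
}

/*
 * Returns true when conntrack should skip the packet because a real entry
 * is already attached. A template is detached from the skb and handed back
 * through *tmpl so that later steps can still consult it.
 */
bool sk_already_tracked(struct sk_skb *skb, struct sk_conn **tmpl)
{
    assert(skb != NULL && tmpl != NULL);
    *tmpl = NULL;
    if (skb->nfct == NULL)
        return false;             /* nothing attached: track normally */
    if (!sk_is_template(skb->nfct))
        return true;              /* real entry: already handled */
    *tmpl = skb->nfct;            /* template: remember it ... */
    skb->nfct = NULL;             /* ... and let tracking proceed */
    return false;
}
```

The kernel code for this step follows.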

unsigned int

nf_conntrack_in(struct net *net, u_int8_t pf, unsigned int hooknum,

struct sk_buff *skb)

{

...

/* A non-NULL nfct means a conntrack entry (or a template) is already attached */

if (skb->nfct) {

/* Previously seen (loopback or untracked)? Ignore. */

tmpl = (struct nf_conn *)skb->nfct;

if (!nf_ct_is_template(tmpl)) {

NF_CT_STAT_INC_ATOMIC(net, ignore);

return NF_ACCEPT;

}

skb->nfct = NULL;

}

...

}

The inline function nf_ct_is_template simply tests whether IPS_TEMPLATE_BIT is set in ct->status.

static inline int nf_ct_is_template(const struct nf_conn *ct)

{

return test_bit(IPS_TEMPLATE_BIT, &ct->status);

}

3.2 Getting the protocol numbers

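First, __nf_ct_l3proto_find uses the layer 3 protocol number to look up the previously registered struct nf_conntrack_l3proto instance in nf_ct_l3protos. Next, the get_l4proto function of that struct nf_conntrack_l3proto is called to obtain the layer 4 protocol number. Finally, the layer 3 protocol pf and the layer 4 protocol number protonum are used to look up the previously registered struct nf_conntrack_l4proto instance in nf_ct_protos.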
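As a rough model of this lookup, the sketch below reads the protocol number and header length from a raw IPv4 header and then indexes a table of layer 4 trackers. It covers IPv4 only, so the layer 3 dimension of nf_ct_protos is dropped. Every `sk_` name is hypothetical, and the fallback to a generic tracker for unregistered protocol numbers is my understanding of the kernel's behaviour, so treat it as an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct sk_l4proto { const char *name; };

static const struct sk_l4proto sk_generic = { "generic" };
static const struct sk_l4proto sk_icmp    = { "icmp" };
static const struct sk_l4proto sk_tcp     = { "tcp" };
static const struct sk_l4proto sk_udp     = { "udp" };

/* One slot per 8-bit protocol number; unset slots stay NULL. */
static const struct sk_l4proto *const sk_ipv4_l4protos[256] = {
    [1] = &sk_icmp, [6] = &sk_tcp, [17] = &sk_udp,
};

/*
 * Stand-in for l3proto->get_l4proto(): returns a positive value on success
 * and a negative value when the header cannot be parsed.
 */
int sk_get_l4proto(const uint8_t *iphdr, size_t len,
                   unsigned int *dataoff, uint8_t *protonum)
{
    unsigned int hlen;

    assert(iphdr != NULL && dataoff != NULL && protonum != NULL);
    if (len < 20 || (iphdr[0] >> 4) != 4)
        return -1;
    hlen = (iphdr[0] & 0x0Fu) * 4u;   /* IHL counts 32-bit words */
    if (hlen < 20 || hlen > len)
        return -1;
    *dataoff  = hlen;                 /* where the layer 4 header starts */
    *protonum = iphdr[9];             /* IPv4 "protocol" field */
    return 1;
}

/* Stand-in for __nf_ct_l4proto_find() restricted to IPv4. */
const struct sk_l4proto *sk_l4proto_find(uint8_t protonum)
{
    const struct sk_l4proto *p = sk_ipv4_l4protos[protonum];
    return p != NULL ? p : &sk_generic;
}
```

The kernel code for this step follows.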

unsigned int

nf_conntrack_in(struct net *net, u_int8_t pf, unsigned int hooknum,

struct sk_buff *skb)

{

...

/* rcu_read_lock()ed by nf_hook_slow */

/* Look up the layer 3 struct nf_conntrack_l3proto instance in the nf_ct_l3protos array by layer 3 protocol number */

l3proto = __nf_ct_l3proto_find(pf);

/* Get the layer 4 protocol number */

ret = l3proto->get_l4proto(skb, skb_network_offset(skb),

&dataoff, &protonum);

if (ret <= 0) {

pr_debug("not prepared to track yet or error occured\n");

NF_CT_STAT_INC_ATOMIC(net, error);

NF_CT_STAT_INC_ATOMIC(net, invalid);

ret = -ret;

goto out;

}

/* Look up the layer 4 struct nf_conntrack_l4proto instance by layer 3 and layer 4 protocol numbers */

l4proto = __nf_ct_l4proto_find(pf, protonum);

...

}

3.3 resolve_normal_ct

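resolve_normal_ct returns the struct nf_conn for the packet, together with the packet's conntrack state (ctinfo) and the set_reply flag, which marks a packet travelling in the reply direction.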

...

/* Get the struct nf_conn from the tuple hash table, plus the reply-direction flag */

ct = resolve_normal_ct(net, tmpl, skb, dataoff, pf, protonum,

l3proto, l4proto, &set_reply, &ctinfo);

...

resolve_normal_ct does the following:

(1) Get the tuple

nf_ct_get_tuple extracts the packet's tuple. The tuple is then looked up in the hash table; if it is not found, a new entry is created and added to the unconfirmed list.

static inline struct nf_conn *

resolve_normal_ct(struct net *net, struct nf_conn *tmpl,

...)

{

...

// get the tuple

if (!nf_ct_get_tuple(skb, skb_network_offset(skb),

dataoff, l3num, protonum, &tuple, l3proto,

l4proto)) {

pr_debug("resolve_normal_ct: Can't get tuple\n");

return NULL;

}

...

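}

nf_ct_get_tuple builds the tuple by calling the pkt_to_tuple functions of the layer 3 and layer 4 protocols. For TCP/UDP the result is the five-tuple (source IP, destination IP, source port, destination port, protocol number); for ICMP the layer 4 part is (id, type, code).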
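To make the tuple contents concrete, here is a user-space sketch that extracts such a tuple from a raw IPv4 packet. It is a simplification under stated assumptions: `struct sk_tuple` and the helpers are invented for this example, the kernel's struct nf_conntrack_tuple is laid out differently, and fields are stored in host byte order here purely for readability (the kernel keeps them in network order).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct sk_tuple {
    uint32_t src_ip, dst_ip;                 /* layer 3 part */
    union {                                  /* layer 4 part */
        struct { uint16_t sport, dport; } ports;            /* TCP/UDP */
        struct { uint16_t id; uint8_t type, code; } icmp;   /* ICMP */
    } u;
    uint8_t protonum;
    uint8_t dir;                             /* 0 = original direction */
};

static uint16_t sk_rd16(const uint8_t *p)
{
    return (uint16_t)((p[0] << 8) | p[1]);
}

static uint32_t sk_rd32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | p[3];
}

/* Returns false if the packet is too short or the protocol is not handled. */
bool sk_get_tuple(const uint8_t *pkt, size_t len, struct sk_tuple *t)
{
    size_t off;

    assert(pkt != NULL && t != NULL);
    if (len < 20 || (pkt[0] >> 4) != 4)
        return false;
    off = (size_t)(pkt[0] & 0x0F) * 4;       /* start of the layer 4 header */
    if (off < 20 || len < off + 8)
        return false;

    memset(t, 0, sizeof(*t));
    t->src_ip   = sk_rd32(pkt + 12);         /* layer 3: addresses */
    t->dst_ip   = sk_rd32(pkt + 16);
    t->protonum = pkt[9];
    t->dir      = 0;                         /* always "original" here */

    switch (t->protonum) {
    case 6:                                  /* TCP */
    case 17:                                 /* UDP */
        t->u.ports.sport = sk_rd16(pkt + off);
        t->u.ports.dport = sk_rd16(pkt + off + 2);
        return true;
    case 1:                                  /* ICMP echo-style messages */
        t->u.icmp.type = pkt[off];
        t->u.icmp.code = pkt[off + 1];
        t->u.icmp.id   = sk_rd16(pkt + off + 4);
        return true;
    default:
        return false;
    }
}
```

The kernel code for nf_ct_get_tuple follows.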

bool

nf_ct_get_tuple(const struct sk_buff *skb,

...)

{

memset(tuple, 0, sizeof(*tuple));

tuple->src.l3num = l3num;

/* Layer 3: copy the source and destination IP from the skb into the tuple */

if (l3proto->pkt_to_tuple(skb, nhoff, tuple) == 0)

return false;

tuple->dst.protonum = protonum;

/* Direction: original */

tuple->dst.dir = IP_CT_DIR_ORIGINAL;

/* Layer 4: for TCP/UDP, copy the source and destination ports into the tuple;

for ICMP, copy type, code and id */

return l4proto->pkt_to_tuple(skb, dataoff, tuple);

}

(3) Check whether the tuple exists

nf_conntrack_find_get looks up the tuple obtained above in the hash table. If it is not there, init_conntrack is called to create a new connection tracking entry for it.
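The find-or-create pattern can be modelled in a few lines. This is a deliberately small sketch, not the kernel's data structure: it uses plain singly linked lists instead of RCU-protected hlist_nulls, a toy hash instead of hash_conntrack, and no locking or reference counting. All `sk_` names are hypothetical. The `sk_confirm` helper reflects my understanding that confirmation moves an entry from the unconfirmed list into the hash table.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

#define SK_BUCKETS 8u

struct sk_key { uint32_t src, dst; uint16_t sport, dport; uint8_t proto; };

struct sk_entry {
    struct sk_key    key;
    struct sk_entry *next;   /* links a bucket chain or the unconfirmed list */
};

struct sk_table {
    struct sk_entry *bucket[SK_BUCKETS];
    struct sk_entry *unconfirmed;
};

static unsigned int sk_hash(const struct sk_key *k)
{
    /* Toy hash for illustration only. */
    uint32_t h = k->src ^ (k->dst * 31u) ^
                 (((uint32_t)k->sport << 16) | k->dport) ^ k->proto;
    return h % SK_BUCKETS;
}

static bool sk_key_equal(const struct sk_key *a, const struct sk_key *b)
{
    return a->src == b->src && a->dst == b->dst && a->sport == b->sport &&
           a->dport == b->dport && a->proto == b->proto;
}

/* Look the key up among confirmed entries only. */
struct sk_entry *sk_find(const struct sk_table *t, const struct sk_key *k)
{
    assert(t != NULL && k != NULL);
    for (struct sk_entry *e = t->bucket[sk_hash(k)]; e != NULL; e = e->next)
        if (sk_key_equal(&e->key, k))
            return e;
    return NULL;
}

/* On a miss, allocate an entry and park it on the unconfirmed list. */
struct sk_entry *sk_find_or_create(struct sk_table *t, const struct sk_key *k,
                                   bool *created)
{
    struct sk_entry *e = sk_find(t, k);

    assert(created != NULL);
    *created = false;
    if (e != NULL)
        return e;
    e = calloc(1, sizeof(*e));
    if (e == NULL)
        return NULL;
    e->key = *k;
    e->next = t->unconfirmed;
    t->unconfirmed = e;
    *created = true;
    return e;
}

/* Move an entry from the unconfirmed list into its hash bucket. */
void sk_confirm(struct sk_table *t, struct sk_entry *e)
{
    struct sk_entry **pp = &t->unconfirmed;
    unsigned int b;

    while (*pp != NULL && *pp != e)
        pp = &(*pp)->next;
    if (*pp == NULL)
        return;                    /* not on the unconfirmed list */
    *pp = e->next;
    b = sk_hash(&e->key);
    e->next = t->bucket[b];
    t->bucket[b] = e;
}
```

Note that in this model a lookup does not see an entry until it has been confirmed. The kernel code for this step follows.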

static inline struct nf_conn *

resolve_normal_ct(struct net *net, struct nf_conn *tmpl,

...)

{...

// look up the tuple in the hash table

/* look for tuple match */

h = nf_conntrack_find_get(net, zone, &tuple);

if (!h) {

// not found: create a new entry for this tuple

h = init_conntrack(net, tmpl, &tuple, l3proto, l4proto,

skb, dataoff);

if (!h)

return NULL;

if (IS_ERR(h))

return (void *)h;

}

// get the conntrack structure

ct = nf_ct_tuplehash_to_ctrack(h);

...

}

nf_conntrack_find_get

struct nf_conntrack_tuple_hash *

nf_conntrack_find_get(struct net *net, u16 zone,

const struct nf_conntrack_tuple *tuple)

{

...

begin:

/* Look up the tuple */

h = __nf_conntrack_find(net, zone, tuple);

...

}

__nf_conntrack_find

struct nf_conntrack_tuple_hash *

__nf_conntrack_find(struct net *net, u16 zone,

const struct nf_conntrack_tuple *tuple)

{

...

/* Compute the hash value from the tuple fields */

unsigned int hash = hash_conntrack(net, zone, tuple);

...

begin:

/* Walk the bucket's list looking for the tuple */

hlist_nulls_for_each_entry_rcu(h, n, &net->ct.hash[hash], hnnode) {

if (nf_ct_tuple_equal(tuple, &h->tuple) &&

nf_ct_zone(nf_ct_tuplehash_to_ctrack(h)) == zone) {

NF_CT_STAT_INC(net, found);

local_bh_enable();

return h;

}

NF_CT_STAT_INC(net, searched);

}

...

}

init_conntrack

static struct nf_conntrack_tuple_hash *

init_conntrack(struct net *net, struct nf_conn *tmpl,

const struct nf_conntrack_tuple *tuple,

struct nf_conntrack_l3proto *l3proto,

struct nf_conntrack_l4proto *l4proto,

struct sk_buff *skb,

unsigned int dataoff)

{

...

/* Fill in the reply-direction tuple of tuplehash, which is simply

the original-direction tuple reversed */

if (!nf_ct_invert_tuple(&repl_tuple, tuple, l3proto, l4proto)) {

pr_debug("Can't invert tuple.\n");

return NULL;

}

/* Allocate a struct nf_conn */

ct = nf_conntrack_alloc(net, zone, tuple, &repl_tuple, GFP_ATOMIC);

if (IS_ERR(ct)) {

pr_debug("Can't allocate conntrack.\n");

return (struct nf_conntrack_tuple_hash *)ct;

}

/* Layer 4 protocol-specific initialization of the nf_conn */

if (!l4proto->new(ct, skb, dataoff)) {

nf_conntrack_free(ct);

pr_debug("init conntrack: can't track with proto module\n");

return NULL;

}

nf_ct_acct_ext_add(ct, GFP_ATOMIC);

ecache = tmpl ? nf_ct_ecache_find(tmpl) : NULL;

nf_ct_ecache_ext_add(ct, ecache ? ecache->ctmask : 0,

ecache ? ecache->expmask : 0,

GFP_ATOMIC);

spin_lock_bh(&nf_conntrack_lock);

/* Check whether this is an expected connection of an existing connection */

exp = nf_ct_find_expectation(net, zone, tuple);

if (exp) {

pr_debug("conntrack: expectation arrives ct=%p exp=%p\n",

ct, exp);

/* Welcome, Mr. Bond. We've been expecting you... */

/* For an expected connection, set the IPS_EXPECTED_BIT flag

and point ct->master at the expectation's master connection */

__set_bit(IPS_EXPECTED_BIT, &ct->status);

ct->master = exp->master;

if (exp->helper) {

help = nf_ct_helper_ext_add(ct, GFP_ATOMIC);

if (help)

rcu_assign_pointer(help->helper, exp->helper);

}

#ifdef CONFIG_NF_CONNTRACK_MARK

ct->mark = exp->master->mark;

#endif

#ifdef CONFIG_NF_CONNTRACK_SECMARK

ct->secmark = exp->master->secmark;

#endif

nf_conntrack_get(&ct->master->ct_general);

NF_CT_STAT_INC(net, expect_new);

} else {

__nf_ct_try_assign_helper(ct, tmpl, GFP_ATOMIC);

NF_CT_STAT_INC(net, new);

}

/* Overload tuple linked list to put us in unconfirmed list. */

/* Add the tuple of the new connection to the unconfirmed list */

hlist_nulls_add_head_rcu(&ct->tuplehash[IP_CT_DIR_ORIGINAL].hnnode,

&net->ct.unconfirmed);

spin_unlock_bh(&nf_conntrack_lock);

if (exp) {

if (exp->expectfn)

exp->expectfn(ct, exp);

nf_ct_expect_put(exp);

}

return &ct->tuplehash[IP_CT_DIR_ORIGINAL];

}

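init_conntrack first calls nf_ct_invert_tuple to build the reply-direction tuple of tuplehash, then calls nf_conntrack_alloc to allocate a struct nf_conn. It then checks whether the new connection is an expected connection of an existing connection and, if so, sets the IPS_EXPECTED_BIT status flag. Finally it adds the nf_conn to the unconfirmed list.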
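Why build the reply tuple up front? Because the tuple extracted from a reply packet is exactly the inverse of the original tuple, so storing the inverse lets a later lookup match reply traffic. A minimal sketch for the TCP/UDP case, using an invented flat `struct sk_tuple` (ICMP inversion, which also has to map message types, is left out):

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

enum { SK_DIR_ORIGINAL = 0, SK_DIR_REPLY = 1 };

struct sk_tuple {
    uint32_t src_ip, dst_ip;
    uint16_t sport, dport;
    uint8_t  protonum;
    uint8_t  dir;
};

/* Build the tuple a reply packet would carry: swap both endpoints. */
void sk_invert_tuple(struct sk_tuple *inv, const struct sk_tuple *orig)
{
    assert(inv != NULL && orig != NULL && inv != orig);
    memset(inv, 0, sizeof(*inv));
    inv->src_ip   = orig->dst_ip;     /* layer 3 part */
    inv->dst_ip   = orig->src_ip;
    inv->sport    = orig->dport;      /* layer 4 part */
    inv->dport    = orig->sport;
    inv->protonum = orig->protonum;
    inv->dir      = !orig->dir;
}

/* Compare addresses, ports and protocol; the direction is not compared. */
bool sk_tuple_equal(const struct sk_tuple *a, const struct sk_tuple *b)
{
    return a->src_ip == b->src_ip && a->dst_ip == b->dst_ip &&
           a->sport == b->sport && a->dport == b->dport &&
           a->protonum == b->protonum;
}
```

A tuple freshly extracted from a packet always carries the original direction (as in nf_ct_get_tuple above), which is why the comparison in this sketch ignores `dir`.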

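nf_ct_invert_tuple calls the invert_tuple functions of the layer 3 and layer 4 protocols to build the reply-direction tuple, which is the original-direction tuple reversed.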

bool

nf_ct_invert_tuple(struct nf_conntrack_tuple *inverse,

const struct nf_conntrack_tuple *orig,

const struct nf_conntrack_l3proto *l3proto,

const struct nf_conntrack_l4proto *l4proto)

{

memset(inverse, 0, sizeof(*inverse));

inverse->src.l3num = orig->src.l3num;

/* Layer 3: build the reply direction */

if (l3proto->invert_tuple(inverse, orig) == 0)

return false;

inverse->dst.dir = !orig->dst.dir;

inverse->dst.protonum = orig->dst.protonum;

/* Layer 4: build the reply-direction tuple */

return l4proto->invert_tuple(inverse, orig);

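}

Before allocating a struct nf_conn, nf_conntrack_alloc checks whether the total number of connections exceeds the maximum, nf_conntrack_max. If it does, it computes the hash value of the tuple and tries to forcibly delete a connection tracking entry in that bucket whose IPS_ASSURED_BIT status bit is not set.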
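The eviction policy can be sketched with a fixed-size table. This is a toy model with invented `sk_` names: it scans all slots instead of a single hash bucket, and it counts the new entry before checking the limit, which is my reading of the elided part of the kernel function (it would explain the atomic_dec calls on the failure paths).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SK_SLOTS 4u

struct sk_ct { bool in_use; bool assured; };

struct sk_table {
    struct sk_ct slot[SK_SLOTS];
    unsigned int count;  /* entries accounted for, like net->ct.count */
    unsigned int max;    /* 0 means "no limit", like nf_conntrack_max */
};

/* Evict one entry that is not marked assured; true if a victim was found. */
static bool sk_early_drop(struct sk_table *t)
{
    for (size_t i = 0; i < SK_SLOTS; i++) {
        if (t->slot[i].in_use && !t->slot[i].assured) {
            t->slot[i].in_use = false;
            t->count--;
            return true;
        }
    }
    return false;
}

/* Returns NULL when the table is full and nothing can be evicted. */
struct sk_ct *sk_alloc(struct sk_table *t)
{
    assert(t != NULL);
    t->count++;                          /* account for the new entry first */
    if (t->max && t->count > t->max && !sk_early_drop(t)) {
        t->count--;                      /* table full, packet is dropped */
        return NULL;
    }
    for (size_t i = 0; i < SK_SLOTS; i++) {
        if (!t->slot[i].in_use) {
            t->slot[i].in_use = true;
            t->slot[i].assured = false;
            return &t->slot[i];
        }
    }
    t->count--;                          /* out of backing storage */
    return NULL;
}
```

The design point is that assured connections are never sacrificed for a new one, while unassured ones can be. The kernel code for nf_conntrack_alloc follows.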

struct nf_conn *nf_conntrack_alloc(struct net *net, u16 zone,

const struct nf_conntrack_tuple *orig,

const struct nf_conntrack_tuple *repl,

gfp_t gfp)

{

...

/* The number of tracked connections exceeds the maximum, nf_conntrack_max:

compute the hash value of the tuple and forcibly delete a conntrack entry

whose IPS_ASSURED_BIT status bit is not set. */

if (nf_conntrack_max &&

unlikely(atomic_read(&net->ct.count) > nf_conntrack_max)) {

unsigned int hash = hash_conntrack(net, zone, orig);

if (!early_drop(net, hash)) {

atomic_dec(&net->ct.count);

if (net_ratelimit())

printk(KERN_WARNING

"nf_conntrack: table full, dropping"

" packet.\n");

return ERR_PTR(-ENOMEM);

}

}

/* Allocate memory for the struct nf_conn */

ct = kmem_cache_alloc(net->ct.nf_conntrack_cachep, gfp);

if (ct == NULL) {

pr_debug("nf_conntrack_alloc: Can't alloc conntrack.\n");

atomic_dec(&net->ct.count);

return ERR_PTR(-ENOMEM);

}

...

}

(4) Get the struct nf_conn

static inline struct nf_conn *

resolve_normal_ct(struct net *net, struct nf_conn *tmpl,

...)

{...

// get the nf_conn from the tuple hash entry

ct = nf_ct_tuplehash_to_ctrack(h);

...

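}

nf_ct_tuplehash_to_ctrack recovers the struct nf_conn that embeds the given tuple hash entry:

static inline struct nf_conn *

nf_ct_tuplehash_to_ctrack(const struct nf_conntrack_tuple_hash *hash)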

{

return container_of(hash, struct nf_conn,

tuplehash[hash->tuple.dst.dir]);

}
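The container_of step above works because both tuple hash entries live inside the struct nf_conn itself, and each entry records its own direction. A portable user-space sketch of the same pointer arithmetic, with simplified invented types (the kernel's container_of macro and struct layout differ):

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct sk_tuple_hash {
    uint8_t dir;                        /* stands in for tuple.dst.dir */
};

struct sk_conn {
    unsigned long        status;
    struct sk_tuple_hash tuplehash[2];  /* [0] original, [1] reply */
};

/*
 * Step back from the given element to tuplehash[0] using the direction
 * stored in the element, then subtract the offset of the array within
 * the enclosing structure.
 */
struct sk_conn *sk_tuplehash_to_ctrack(const struct sk_tuple_hash *h)
{
    const struct sk_tuple_hash *first;

    assert(h != NULL && h->dir < 2);
    first = h - h->dir;
    return (struct sk_conn *)((const char *)first -
                              offsetof(struct sk_conn, tuplehash));
}
```

Whichever direction matched in the hash table, the caller therefore ends up with the same connection object.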

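(5) Set the connection state

There are four cases:

a) The packet travels in the reply direction, so packets have been seen in both directions of the connection. The packet state is set to IP_CT_ESTABLISHED + IP_CT_IS_REPLY and set_reply is set to 1, indicating that the reply direction has now carried data.

b) The packet travels in the original direction and a reply-direction packet has already been seen: the packet state is set to IP_CT_ESTABLISHED.

c) The packet travels in the original direction, no reply-direction packet has been seen yet, and the connection is an expected connection (IPS_EXPECTED_BIT is set): the packet state is set to IP_CT_RELATED.

d) The packet travels in the original direction, no reply-direction packet has been seen yet, and the connection is not an expected connection: the packet state is set to IP_CT_NEW.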
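The four cases reduce to a small decision function. The sketch below uses invented `sk_`/`SK_` names; the enum order mirrors the kernel's enum ip_conntrack_info as I recall it (ESTABLISHED, RELATED, NEW, IS_REPLY), and the status masks are sketch-local.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum sk_ctinfo {
    SK_CT_ESTABLISHED,
    SK_CT_RELATED,
    SK_CT_NEW,
    SK_CT_IS_REPLY   /* added to a state for reply-direction packets */
};

#define SK_EXPECTED   (1UL << 0)   /* connection was expected by a helper */
#define SK_SEEN_REPLY (1UL << 1)   /* a reply packet has been seen */

/* Classify one packet given its direction and the connection status. */
int sk_classify(bool dir_is_reply, unsigned long status, int *set_reply)
{
    assert(set_reply != NULL);
    if (dir_is_reply) {                       /* case a */
        *set_reply = 1;
        return SK_CT_ESTABLISHED + SK_CT_IS_REPLY;
    }
    *set_reply = 0;
    if (status & SK_SEEN_REPLY)               /* case b */
        return SK_CT_ESTABLISHED;
    if (status & SK_EXPECTED)                 /* case c */
        return SK_CT_RELATED;
    return SK_CT_NEW;                         /* case d */
}
```

The order of the tests matters: once a reply has been seen, an expected connection is reported as established rather than related. The kernel code for this step follows.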

static inline struct nf_conn *

resolve_normal_ct(struct net *net, struct nf_conn *tmpl,

...)

{

...

/* It exists; we have (non-exclusive) reference. */

/* The packet is in the reply direction, so the connection has carried traffic both ways:

set the packet state to IP_CT_ESTABLISHED + IP_CT_IS_REPLY */

if (NF_CT_DIRECTION(h) == IP_CT_DIR_REPLY) {

*ctinfo = IP_CT_ESTABLISHED + IP_CT_IS_REPLY;

/* Please set reply bit if this packet OK */

*set_reply = 1;

} else {

/* Once we've had two way comms, always ESTABLISHED. */

/* The packet is in the original direction and a reply has already been seen:

set the packet state to IP_CT_ESTABLISHED */

if (test_bit(IPS_SEEN_REPLY_BIT, &ct->status)) {

pr_debug("nf_conntrack_in: normal packet for %p\n", ct);

// both directions are established

*ctinfo = IP_CT_ESTABLISHED;

/* No reply seen yet and this is an expected connection:

set the packet state to IP_CT_RELATED */

} else if (test_bit(IPS_EXPECTED_BIT, &ct->status)) {

pr_debug("nf_conntrack_in: related packet for %p\n",

ct);

*ctinfo = IP_CT_RELATED;

} else {

pr_debug("nf_conntrack_in: new packet for %p\n", ct);

/* No reply seen yet and this is not an expected connection:

set the packet state to IP_CT_NEW */

*ctinfo = IP_CT_NEW;

}

*set_reply = 0;

}

...

}

Full code of resolve_normal_ct:

static inline struct nf_conn *

resolve_normal_ct(struct net *net, struct nf_conn *tmpl,

struct sk_buff *skb,

unsigned int dataoff,

u_int16_t l3num,

u_int8_t protonum,

struct nf_conntrack_l3proto *l3proto,

struct nf_conntrack_l4proto *l4proto,

int *set_reply,

enum ip_conntrack_info *ctinfo)

{

struct nf_conntrack_tuple tuple;

struct nf_conntrack_tuple_hash *h;

struct nf_conn *ct;

u16 zone = tmpl ? nf_ct_zone(tmpl) : NF_CT_DEFAULT_ZONE;

// get the tuple

if (!nf_ct_get_tuple(skb, skb_network_offset(skb),

dataoff, l3num, protonum, &tuple, l3proto,

l4proto)) {

pr_debug("resolve_normal_ct: Can't get tuple\n");

return NULL;

}

// look up the tuple in the hash table

/* look for tuple match */

h = nf_conntrack_find_get(net, zone, &tuple);

if (!h) {

// not found: create a new entry for this tuple

h = init_conntrack(net, tmpl, &tuple, l3proto, l4proto,

skb, dataoff);

if (!h)

return NULL;

if (IS_ERR(h))

return (void *)h;

}

// get the nf_conn from the tuple hash entry

ct = nf_ct_tuplehash_to_ctrack(h);

/* It exists; we have (non-exclusive) reference. */

/* The packet is in the reply direction, so the connection has carried traffic both ways:

set the packet state to IP_CT_ESTABLISHED + IP_CT_IS_REPLY */

if (NF_CT_DIRECTION(h) == IP_CT_DIR_REPLY) {

*ctinfo = IP_CT_ESTABLISHED + IP_CT_IS_REPLY;

/* Please set reply bit if this packet OK */

*set_reply = 1;

} else {

/* Once we've had two way comms, always ESTABLISHED. */

/* The packet is in the original direction and a reply has already been seen:

set the packet state to IP_CT_ESTABLISHED */

if (test_bit(IPS_SEEN_REPLY_BIT, &ct->status)) {

pr_debug("nf_conntrack_in: normal packet for %p\n", ct);

// both directions are established

*ctinfo = IP_CT_ESTABLISHED;

/* No reply seen yet and this is an expected connection:

set the packet state to IP_CT_RELATED */

} else if (test_bit(IPS_EXPECTED_BIT, &ct->status)) {

pr_debug("nf_conntrack_in: related packet for %p\n",

ct);

*ctinfo = IP_CT_RELATED;

} else {

pr_debug("nf_conntrack_in: new packet for %p\n", ct);

/* No reply seen yet and this is not an expected connection:

set the packet state to IP_CT_NEW */

*ctinfo = IP_CT_NEW;

}

*set_reply = 0;

}

skb->nfct = &ct->ct_general;

skb->nfctinfo = *ctinfo;

return ct;

}

3.4 Handling reply-direction packets

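resolve_normal_ct tells us whether the packet travels in the reply direction; if it does, set_reply is 1. In that case the IPS_SEEN_REPLY_BIT status bit of the connection is set, and if the bit was not set before, nf_conntrack_event_cache is called to record the state-change event.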

unsigned int

nf_conntrack_in(struct net *net, u_int8_t pf, unsigned int hooknum,

struct sk_buff *skb)

{

...

/* When a packet is seen in the reply direction, set IPS_SEEN_REPLY_BIT in the connection status.

On this state change call nf_conntrack_event_cache; the event is handled by the nfnetlink module */

if (set_reply && !test_and_set_bit(IPS_SEEN_REPLY_BIT, &ct->status))

nf_conntrack_event_cache(IPCT_REPLY, ct);

}

Full code of nf_conntrack_in:

unsigned int

nf_conntrack_in(struct net *net, u_int8_t pf, unsigned int hooknum,

struct sk_buff *skb)

{

struct nf_conn *ct, *tmpl = NULL;

enum ip_conntrack_info ctinfo;

struct nf_conntrack_l3proto *l3proto;

struct nf_conntrack_l4proto *l4proto;

unsigned int dataoff;

u_int8_t protonum;

int set_reply = 0;

int ret;

/* A non-NULL nfct means a conntrack entry (or a template) is already attached */

if (skb->nfct) {

/* Previously seen (loopback or untracked)? Ignore. */

tmpl = (struct nf_conn *)skb->nfct;

if (!nf_ct_is_template(tmpl)) {

NF_CT_STAT_INC_ATOMIC(net, ignore);

return NF_ACCEPT;

}

skb->nfct = NULL;

}

/* rcu_read_lock()ed by nf_hook_slow */

/* Look up the layer 3 struct nf_conntrack_l3proto instance in the nf_ct_l3protos array by layer 3 protocol number */

l3proto = __nf_ct_l3proto_find(pf);

/* Get the layer 4 protocol number */

ret = l3proto->get_l4proto(skb, skb_network_offset(skb),

&dataoff, &protonum);

if (ret <= 0) {

pr_debug("not prepared to track yet or error occured\n");

NF_CT_STAT_INC_ATOMIC(net, error);

NF_CT_STAT_INC_ATOMIC(net, invalid);

ret = -ret;

goto out;

}

/* Look up the layer 4 struct nf_conntrack_l4proto instance by layer 3 and layer 4 protocol numbers */

l4proto = __nf_ct_l4proto_find(pf, protonum);

/* It may be an special packet, error, unclean...

* inverse of the return code tells to the netfilter

* core what to do with the packet. */

if (l4proto->error != NULL) {

ret = l4proto->error(net, tmpl, skb, dataoff, &ctinfo,

pf, hooknum);

if (ret <= 0) {

NF_CT_STAT_INC_ATOMIC(net, error);

NF_CT_STAT_INC_ATOMIC(net, invalid);

ret = -ret;

goto out;

}

}

/* Get the struct nf_conn from the tuple hash table, plus the reply-direction flag */

ct = resolve_normal_ct(net, tmpl, skb, dataoff, pf, protonum,

l3proto, l4proto, &set_reply, &ctinfo);

if (!ct) {

/* Not valid part of a connection */

NF_CT_STAT_INC_ATOMIC(net, invalid);

ret = NF_ACCEPT;

goto out;

}

if (IS_ERR(ct)) {

/* Too stressed to deal. */

NF_CT_STAT_INC_ATOMIC(net, drop);

ret = NF_DROP;

goto out;

}

NF_CT_ASSERT(skb->nfct);

/* Let the layer 4 protocol process the packet (for example, update its protocol state and timeout) */

ret = l4proto->packet(ct, skb, dataoff, ctinfo, pf, hooknum);

if (ret <= 0) {

/* Invalid: inverse of the return code tells

* the netfilter core what to do */

pr_debug("nf_conntrack_in: Can't track with proto module\n");

nf_conntrack_put(skb->nfct);

skb->nfct = NULL;

NF_CT_STAT_INC_ATOMIC(net, invalid);

if (ret == -NF_DROP)

NF_CT_STAT_INC_ATOMIC(net, drop);

ret = -ret;

goto out;

}

/* When a packet is seen in the reply direction, set IPS_SEEN_REPLY_BIT in the connection status.

On this state change call nf_conntrack_event_cache; the event is handled by the nfnetlink module */

if (set_reply && !test_and_set_bit(IPS_SEEN_REPLY_BIT, &ct->status))

nf_conntrack_event_cache(IPCT_REPLY, ct);

out:

if (tmpl)

nf_ct_put(tmpl);

return ret;

}
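The reply handling near the end of nf_conntrack_in relies on test_and_set_bit returning the previous value of the bit, so the IPCT_REPLY event is raised only for the first reply packet of a connection. A user-space sketch of that idiom with C11 atomics; the `sk_` names are invented, and the event counter merely stands in for nf_conntrack_event_cache:

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define SK_SEEN_REPLY_BIT 1u

struct sk_conn {
    atomic_ulong status;
    unsigned int reply_events;   /* how many "reply seen" events were raised */
};

/* Atomically set a bit and report whether it was already set. */
static bool sk_test_and_set_bit(unsigned int bit, atomic_ulong *word)
{
    unsigned long mask = 1UL << bit;
    return (atomic_fetch_or(word, mask) & mask) != 0;
}

/* Final step of packet processing: record the first reply exactly once. */
void sk_packet_done(struct sk_conn *ct, int set_reply)
{
    assert(ct != NULL);
    if (set_reply && !sk_test_and_set_bit(SK_SEEN_REPLY_BIT, &ct->status))
        ct->reply_events++;      /* stand-in for the IPCT_REPLY event */
}
```

Because the test and the set happen in one atomic step, two reply packets processed concurrently cannot both observe the bit as clear.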