Scrapy Crawler in Practice: Accessing Sites Through a Proxy

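Previously, simply setting headers was enough to fool ip138.com, but most more complex sites will not be fooled so easily. At that point we need a more advanced approach. As the joke goes, "the rich rely on technology, the poor rely on mutation": if money is no object, you can consider a VPN; otherwise, free proxies are an option. Here we will try using proxies.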

Setting the proxy in a downloader middleware

middlewares.py

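import random

from tutorial.settings import PROXIES


class TutorialDownloaderMiddleware(object):

    def process_request(self, request, spider):
        # Attach a randomly chosen proxy from PROXIES to every outgoing request
        request.meta['proxy'] = "http://%s" % random.choice(PROXIES)
        # Returning None lets Scrapy continue processing the request normally
        return None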
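A possible refinement, sketched here under assumptions. Importing PROXIES straight from tutorial.settings reads the Python module directly, so overrides such as `scrapy crawl ip138 -s PROXIES=...` or a spider's custom_settings never reach the middleware. Scrapy middlewares can instead implement a from_crawler classmethod and read the value from crawler.settings. The class name RandomProxyMiddleware is hypothetical. This variant also leaves any proxy already present in request.meta untouched, so it can coexist with setting a proxy per request in the spider.

```python
import random


class RandomProxyMiddleware:
    """Hypothetical downloader middleware: picks a random proxy from the PROXIES setting.

    Register it in settings.py, e.g.
    DOWNLOADER_MIDDLEWARES = {'tutorial.middlewares.RandomProxyMiddleware': 1}
    """

    def __init__(self, proxies):
        self.proxies = list(proxies)

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy passes the running crawler; its settings include settings.py
        # plus any command-line (-s) or spider custom_settings overrides.
        return cls(crawler.settings.getlist("PROXIES"))

    def process_request(self, request, spider):
        # Only assign a proxy if none was set on the request already
        # (for example by the spider through meta={'proxy': ...}).
        if self.proxies and "proxy" not in request.meta:
            request.meta["proxy"] = "http://%s" % random.choice(self.proxies)
        # None tells Scrapy to keep processing the request as usual.
        return None
```

Checking `"proxy" not in request.meta` makes the spider's explicit choice take priority over the random one. Drop that check if every request should be forced through the rotating list.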

settings.py

PROXIES = [
    '113.59.59.73:58451',
    '113.214.13.1:8000',
    '119.7.192.27:8088',
    '60.13.74.183:80',
    '110.180.90.181:8088',
    '125.38.239.199:8088',
]

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'tutorial.middlewares.TutorialDownloaderMiddleware': 1,
}

spider

# -*- coding: utf-8 -*-
import scrapy


class Ip138Spider(scrapy.Spider):
    name = 'ip138'
    allowed_domains = ['www.ip138.com', '2018.ip138.com']
    # This page reports the IP address the request arrives from
    start_urls = ['http://2018.ip138.com/ic.asp']

    def parse(self, response):
        # Dump the response and the request behind it; request.meta shows
        # the proxy attached by the middleware
        print("*" * 40)
        print("response text: %s" % response.text)
        print("response headers: %s" % response.headers)
        print("response meta: %s" % response.meta)
        print("request headers: %s" % response.request.headers)
        print("request cookies: %s" % response.request.cookies)
        print("request meta: %s" % response.request.meta)

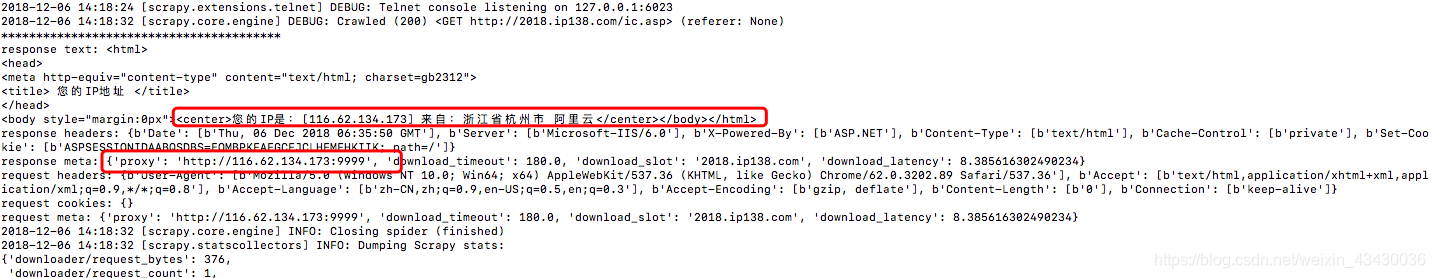

运行结果如下:
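The spider only prints the raw page. To check the result programmatically, one option is to pull the first IPv4 address out of response.text and compare it with the proxy host. This sketch assumes the ic.asp page shows the visitor's IP as the first dotted-quad in its text. visible_ip and proxy_in_effect are hypothetical helper names. Some proxies send traffic out from a different address than the one they listen on, so a mismatch does not necessarily mean the proxy was ignored.

```python
import re

# Loose IPv4 pattern: four dot-separated groups of 1-3 digits (no 0-255 range check).
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def visible_ip(page_text):
    """Return the first IPv4-looking string in page_text, or None."""
    match = IPV4_RE.search(page_text)
    return match.group(0) if match else None


def proxy_in_effect(page_text, proxy):
    """True if the page reports the proxy's host as the visitor IP.

    proxy may be 'host:port' or 'http://host:port'.
    """
    host = proxy.split("//")[-1].split(":")[0]
    return visible_ip(page_text) == host


# Possible use inside Ip138Spider.parse:
#     self.logger.info("proxy in effect: %s",
#                      proxy_in_effect(response.text, response.meta.get("proxy", "")))
```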

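Setting the proxy via request meta

Instead of using a middleware, you can attach the proxy to each request directly through its meta dict, as start_requests does below. Note that TutorialDownloaderMiddleware above overwrites request.meta['proxy'] on every request. Remove it from DOWNLOADER_MIDDLEWARES when trying this approach.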

# -*- coding: utf-8 -*-
import scrapy


class Ip138Spider(scrapy.Spider):
    name = 'ip138'
    allowed_domains = ['www.ip138.com', '2018.ip138.com']
    start_urls = ['http://2018.ip138.com/ic.asp']

    def start_requests(self):
        # Set the proxy per request through meta; Scrapy's built-in
        # HttpProxyMiddleware then routes the request through it
        for url in self.start_urls:
            yield scrapy.Request(url, meta={'proxy': 'http://116.62.134.173:9999'}, callback=self.parse)

    def parse(self, response):
        print("*" * 40)
        print("response text: %s" % response.text)
        print("response headers: %s" % response.headers)
        print("response meta: %s" % response.meta)
        print("request headers: %s" % response.request.headers)
        print("request cookies: %s" % response.request.cookies)
        print("request meta: %s" % response.request.meta)
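The spider above pins every request to a single hard-coded proxy. If a spider has several start URLs, a small helper can spread them over the PROXIES list in round-robin order instead of choosing at random. proxy_meta_for is a hypothetical helper sketched here and is not part of Scrapy. It only builds the meta dicts that scrapy.Request accepts. As with the hard-coded proxy, this takes effect only if no middleware overwrites request.meta['proxy'] afterwards.

```python
from itertools import cycle


def proxy_meta_for(urls, proxies):
    """Pair each URL with a meta dict, rotating through proxies round-robin."""
    if not proxies:
        raise ValueError("proxies must not be empty")
    rotation = cycle(proxies)
    return [(url, {"proxy": "http://%s" % next(rotation)}) for url in urls]


# Possible use in Ip138Spider (assuming PROXIES is imported from tutorial.settings):
#     def start_requests(self):
#         for url, meta in proxy_meta_for(self.start_urls, PROXIES):
#             yield scrapy.Request(url, meta=meta, callback=self.parse)
```

Round-robin spreads requests evenly and is deterministic, which makes it easier to tell from the log which proxy served which URL. Random choice, as in the middleware, avoids always hitting proxies in the same order.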

Output:

Kuaidaili (快代理)

We can use some of the free proxies listed at https://www.kuaidaili.com/free/inha/. They are rather slow, but still usable.
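Free proxies are slow and often go offline, so it can help to test each candidate before putting it into PROXIES. Below is a minimal sketch using only the standard library's urllib. It sends one request through the proxy and treats any error or timeout as a dead proxy. The default test URL (http://httpbin.org/ip) is an assumption; any plain-HTTP page you are allowed to request will do. The function names is_proxy_alive and filter_alive are hypothetical.

```python
import urllib.request

# Assumption: any simple plain-HTTP endpoint works as a probe target.
TEST_URL = "http://httpbin.org/ip"


def is_proxy_alive(proxy, test_url=TEST_URL, timeout=5.0):
    """Return True if an HTTP GET through proxy ('ip:port') succeeds within timeout seconds."""
    handler = urllib.request.ProxyHandler({"http": "http://%s" % proxy})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            return resp.status == 200
    except Exception:
        # Connection refused, timeouts, HTTP errors, etc. all count as "dead".
        return False


def filter_alive(proxies, **kwargs):
    """Keep only the proxies that pass is_proxy_alive; kwargs are passed through."""
    return [p for p in proxies if is_proxy_alive(p, **kwargs)]


if __name__ == "__main__":
    # Results depend on the network and on which free proxies are up right now.
    print(filter_alive(["113.59.59.73:58451", "60.13.74.183:80"], timeout=3))
```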