前言

java集合架构

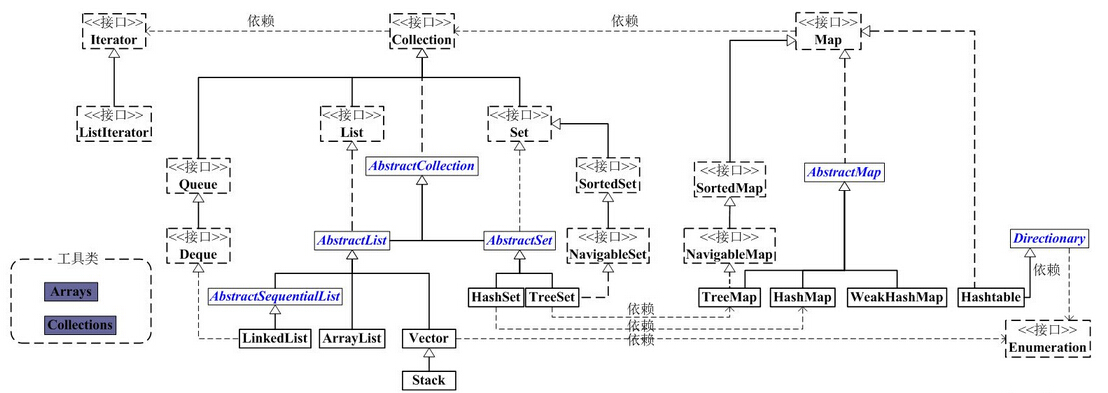

从上图中可以看出,集合类主要分为两大类:Collection和Map。

Collection是List、Set等集合高度抽象出来的接口,它包含了这些集合的基本操作,它主要又分为两大部分:List和Set。

List接口通常表示一个列表(数组、队列、链表、栈等),其中的元素可以重复,常用实现类为ArrayList和LinkedList,另外还有不常用的Vector。另外,LinkedList还是实现了Queue接口,因此也可以作为队列使用。

Set接口通常表示一个集合,其中的元素不允许重复(通过hashcode和equals函数保证),常用实现类有HashSet和TreeSet,HashSet是通过Map中的HashMap实现的,而TreeSet是通过Map中的TreeMap实现的。另外,TreeSet还实现了SortedSet接口,因此是有序的集合(集合中的元素要实现Comparable接口,并覆写Compartor函数才行)。

我们看到,抽象类AbstractCollection、AbstractList和AbstractSet分别实现了Collection、List和Set接口,这就是在Java集合框架中用的很多的适配器设计模式,用这些抽象类去实现接口,在抽象类中实现接口中的若干或全部方法,这样下面的一些类只需直接继承该抽象类,并实现自己需要的方法即可,而不用实现接口中的全部抽象方法。

Map是一个映射接口,其中的每个元素都是一个key-value键值对,同样抽象类AbstractMap通过适配器模式实现了Map接口中的大部分函数,TreeMap、HashMap、WeakHashMap等实现类都通过继承AbstractMap来实现,另外,不常用的HashTable直接实现了Map接口,它和Vector都是JDK1.0就引入的集合类。

Iterator是遍历集合的迭代器(不能遍历Map,只用来遍历Collection),Collection的实现类都实现了iterator()函数,它返回一个Iterator对象,用来遍历集合,ListIterator则专门用来遍历List。而Enumeration则是JDK1.0时引入的,作用与Iterator相同,但它的功能比Iterator要少,它只能再Hashtable、Vector和Stack中使用。

Arrays和Collections是用来操作数组、集合的两个工具类,例如在ArrayList和Vector中大量调用了Arrays.Copyof()方法,而Collections中有很多静态方法可以返回各集合类的synchronized版本,即线程安全的版本,当然了,如果要用线程安全的结合类,首选Concurrent并发包下的对应的集合类。

HashMap简介

容量(capacity)和负载因子(loadFactor)

源码解析

import java.io.*;

public class HashMap1<K,V>

extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, Serializable

{

/**

* The default initial capacity - MUST be a power of two.

默认初始化容量16, 并且容量必须是2的整数次幂

*/

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

/**

* The maximum capacity, used if a higher value is implicitly specified

* by either of the constructors with arguments.

* MUST be a power of two <= 1<<30.

通过参数构建HashMap时,最大容量是2的30次幂,传入容量过大时将会被这个值替换

*/

static final int MAXIMUM_CAPACITY = 1 << 30;

/**

* The load factor used when none specified in constructor.

负载因子默认值是0.75f

*/

static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**

* An empty table instance to share when the table is not inflated.

哈希表没有被初始化时,先初始化为一个空表

*/

static final Entry<?,?>[] EMPTY_TABLE = {};

/**

* The table, resized as necessary. Length MUST Always be a power of two.

哈希表,有必要时会扩容,长度必须是2的整数次幂

*/

transient Entry<K,V>[] table = (Entry<K,V>[]) EMPTY_TABLE;

/**

* The number of key-value mappings contained in this map.

map中存放键值对的数量

*/

transient int size;

/**

* The next size value at which to resize (capacity * load factor).

* HashMap阈值,用于判断是否需要扩容(threshold = capacity*loadfactor)

*/

//初始化HashMap时,哈希表为空,此时threshold为容量capacity大小,

//当inflate初始化哈希表时,threshold赋值为capacity*loadfactor

int threshold;

//负载因子

final float loadFactor;

//HashMap改动的次数

transient int modCount;

/**

* The default threshold of map capacity above which alternative hashing is

* used for String keys. Alternative hashing reduces the incidence of

* collisions due to weak hash code calculation for String keys.

* <p/>

* This value may be overridden by defining the system property

* {@code jdk.map.althashing.threshold}. A property value of {@code 1}

* forces alternative hashing to be used at all times whereas

* {@code -1} value ensures that alternative hashing is never used.

*/

static final int ALTERNATIVE_HASHING_THRESHOLD_DEFAULT = Integer.MAX_VALUE;

/**

* holds values which can't be initialized until after VM is booted.

*/

private static class Holder {

/**

* Table capacity above which to switch to use alternative hashing.

*/

static final int ALTERNATIVE_HASHING_THRESHOLD;

static {

String altThreshold = java.security.AccessController.doPrivileged(

new sun.security.action.GetPropertyAction(

"jdk.map.althashing.threshold"));

int threshold;

try {

threshold = (null != altThreshold)

? Integer.parseInt(altThreshold)

: ALTERNATIVE_HASHING_THRESHOLD_DEFAULT;

// disable alternative hashing if -1

if (threshold == -1) {

threshold = Integer.MAX_VALUE;

}

if (threshold < 0) {

throw new IllegalArgumentException("value must be positive integer.");

}

} catch(IllegalArgumentException failed) {

throw new Error("Illegal value for 'jdk.map.althashing.threshold'", failed);

}

ALTERNATIVE_HASHING_THRESHOLD = threshold;

}

}

/**

* A randomizing value associated with this instance that is applied to

* hash code of keys to make hash collisions harder to find. If 0 then

* alternative hashing is disabled.

*/

transient int hashSeed = 0;

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and load factor.

根据指定的容量和负载因子创建一个空的HashMap,在进行put操作时会判断哈希表是否为空,空就初始化哈希表

*/

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

threshold = initialCapacity;

init();

}

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and the default load factor (0.75).

根据指定的容量和默认的负载因子0.75创建一个空的HashMap

*/

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs an empty <tt>HashMap</tt> with the default initial capacity

* (16) and the default load factor (0.75).

*/

public HashMap() {

this(DEFAULT_INITIAL_CAPACITY, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs a new <tt>HashMap</tt> with the same mappings as the

* specified <tt>Map</tt>. The <tt>HashMap</tt> is created with

* default load factor (0.75) and an initial capacity sufficient to

* hold the mappings in the specified <tt>Map</tt>.

*

* @param m the map whose mappings are to be placed in this map

* @throws NullPointerException if the specified map is null

*/

public HashMap(Map<? extends K, ? extends V> m) {

this(Math.max((int) (m.size() / DEFAULT_LOAD_FACTOR) + 1,

DEFAULT_INITIAL_CAPACITY), DEFAULT_LOAD_FACTOR);

inflateTable(threshold);

putAllForCreate(m);

}

private static int roundUpToPowerOf2(int number) {

// assert number >= 0 : "number must be non-negative";

int rounded = number >= MAXIMUM_CAPACITY

? MAXIMUM_CAPACITY

: (rounded = Integer.highestOneBit(number)) != 0

? (Integer.bitCount(number) > 1) ? rounded << 1 : rounded

: 1;

return rounded;

}

/**

* Inflates the table.

*/

private void inflateTable(int toSize) {

// Find a power of 2 >= toSize

//找到大于toSize最小的2的整数次幂

int capacity = roundUpToPowerOf2(toSize);

threshold = (int) Math.min(capacity * loadFactor, MAXIMUM_CAPACITY + 1);

table = new Entry[capacity];

initHashSeedAsNeeded(capacity);

}

void init() {

}

final boolean initHashSeedAsNeeded(int capacity) {

boolean currentAltHashing = hashSeed != 0;

boolean useAltHashing = sun.misc.VM.isBooted() &&

(capacity >= Holder.ALTERNATIVE_HASHING_THRESHOLD);

boolean switching = currentAltHashing ^ useAltHashing;

if (switching) {

hashSeed = useAltHashing

? sun.misc.Hashing.randomHashSeed(this)

: 0;

}

return switching;

}

/**

* Retrieve object hash code and applies a supplemental hash function to the

* result hash, which defends against poor quality hash functions. This is

* critical because HashMap uses power-of-two length hash tables, that

* otherwise encounter collisions for hashCodes that do not differ

* in lower bits. Note: Null keys always map to hash 0, thus index 0.

*/

final int hash(Object k) {

int h = hashSeed;

if (0 != h && k instanceof String) {

return sun.misc.Hashing.stringHash32((String) k);

}

h ^= k.hashCode();

// This function ensures that hashCodes that differ only by

// constant multiples at each bit position have a bounded

// number of collisions (approximately 8 at default load factor).

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

}

/**

* 根据key对应hash值和哈希表长度获取key在哈希表中下标

*/

static int indexFor(int h, int length) {

// assert Integer.bitCount(length) == 1 : "length must be a non-zero power of 2";

return h & (length-1);

}

/**

* 获取map中键值对数量

*/

public int size() {

return size;

}

public boolean isEmpty() {

return size == 0;

}

/**

*/

public V get(Object key) {

if (key == null)

return getForNullKey();

Entry<K,V> entry = getEntry(key);

return null == entry ? null : entry.getValue();

}

/**

* Offloaded version of get() to look up null keys. Null keys map

* to index 0. This null case is split out into separate methods

* for the sake of performance in the two most commonly used

* operations (get and put), but incorporated with conditionals in

* others.

*/

private V getForNullKey() {

if (size == 0) {

return null;

}

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null)

return e.value;

}

return null;

}

/**

* Returns <tt>true</tt> if this map contains a mapping for the

* specified key.

*

* @param key The key whose presence in this map is to be tested

* @return <tt>true</tt> if this map contains a mapping for the specified

* key.

*/

public boolean containsKey(Object key) {

return getEntry(key) != null;

}

/**

根据key获取entry对象,如果 没有那么返回null

整体操作就是首先根据key的哈希值获取在table中下标,然后拿到链表后遍历链表

*/

final Entry<K,V> getEntry(Object key) {

if (size == 0) {

return null;

}

int hash = (key == null) ? 0 : hash(key);

for (Entry<K,V> e = table[indexFor(hash, table.length)];

e != null;

e = e.next) {

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

}

return null;

}

//存储key-value键值对

public V put(K key, V value) {

//判断下如果table为空那么初始化哈希表

if (table == EMPTY_TABLE) {

inflateTable(threshold);

}

if (key == null)

return putForNullKey(value);

int hash = hash(key);

//获取在哈希表中下标

int i = indexFor(hash, table.length);

//拿到下标位置的Entry链表对象,遍历链表所有元素,判断链表上是否已存在key为当前插入key的Entry对象

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

//如果链表上已存在当前插入的key那么将原来value替换掉

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

//如果链表上不存在当前key,创建Entry

modCount++;

addEntry(hash, key, value, i);

return null;

}

/**

* 插入key为null的value

*/

private V putForNullKey(V value) {

//讲key为null的键值对放到table下标为0的链表中

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(0, null, value, 0);

return null;

}

/**

* This method is used instead of put by constructors and

* pseudoconstructors (clone, readObject). It does not resize the table,

* check for comodification, etc. It calls createEntry rather than

* addEntry.

*/

private void putForCreate(K key, V value) {

int hash = null == key ? 0 : hash(key);

int i = indexFor(hash, table.length);

/**

* Look for preexisting entry for key. This will never happen for

* clone or deserialize. It will only happen for construction if the

* input Map is a sorted map whose ordering is inconsistent w/ equals.

*/

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k)))) {

e.value = value;

return;

}

}

createEntry(hash, key, value, i);

}

private void putAllForCreate(Map<? extends K, ? extends V> m) {

for (Map.Entry<? extends K, ? extends V> e : m.entrySet())

putForCreate(e.getKey(), e.getValue());

}

/**

* Rehashes the contents of this map into a new array with a

* larger capacity. This method is called automatically when the

* number of keys in this map reaches its threshold.

*

* If current capacity is MAXIMUM_CAPACITY, this method does not

* resize the map, but sets threshold to Integer.MAX_VALUE.

* This has the effect of preventing future calls.

*

* @param newCapacity the new capacity, MUST be a power of two;

* must be greater than current capacity unless current

* capacity is MAXIMUM_CAPACITY (in which case value

* is irrelevant).

*/

void resize(int newCapacity) {

Entry[] oldTable = table;

int oldCapacity = oldTable.length;

if (oldCapacity == MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return;

}

Entry[] newTable = new Entry[newCapacity];

transfer(newTable, initHashSeedAsNeeded(newCapacity));

table = newTable;

threshold = (int)Math.min(newCapacity * loadFactor, MAXIMUM_CAPACITY + 1);

}

/**

* Transfers all entries from current table to newTable.

*/

void transfer(Entry[] newTable, boolean rehash) {

int newCapacity = newTable.length;

for (Entry<K,V> e : table) {

while(null != e) {

Entry<K,V> next = e.next;

if (rehash) {

e.hash = null == e.key ? 0 : hash(e.key);

}

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i];

newTable[i] = e;

e = next;

}

}

}

/**

* Copies all of the mappings from the specified map to this map.

* These mappings will replace any mappings that this map had for

* any of the keys currently in the specified map.

*

* @param m mappings to be stored in this map

* @throws NullPointerException if the specified map is null

*/

public void putAll(Map<? extends K, ? extends V> m) {

int numKeysToBeAdded = m.size();

if (numKeysToBeAdded == 0)

return;

if (table == EMPTY_TABLE) {

inflateTable((int) Math.max(numKeysToBeAdded * loadFactor, threshold));

}

/*

* Expand the map if the map if the number of mappings to be added

* is greater than or equal to threshold. This is conservative; the

* obvious condition is (m.size() + size) >= threshold, but this

* condition could result in a map with twice the appropriate capacity,

* if the keys to be added overlap with the keys already in this map.

* By using the conservative calculation, we subject ourself

* to at most one extra resize.

*/

if (numKeysToBeAdded > threshold) {

int targetCapacity = (int)(numKeysToBeAdded / loadFactor + 1);

if (targetCapacity > MAXIMUM_CAPACITY)

targetCapacity = MAXIMUM_CAPACITY;

int newCapacity = table.length;

while (newCapacity < targetCapacity)

newCapacity <<= 1;

if (newCapacity > table.length)

resize(newCapacity);

}

for (Map.Entry<? extends K, ? extends V> e : m.entrySet())

put(e.getKey(), e.getValue());

}

/**

* Removes the mapping for the specified key from this map if present.

*

* @param key key whose mapping is to be removed from the map

* @return the previous value associated with <tt>key</tt>, or

* <tt>null</tt> if there was no mapping for <tt>key</tt>.

* (A <tt>null</tt> return can also indicate that the map

* previously associated <tt>null</tt> with <tt>key</tt>.)

*/

public V remove(Object key) {

Entry<K,V> e = removeEntryForKey(key);

return (e == null ? null : e.value);

}

/**

* Removes and returns the entry associated with the specified key

* in the HashMap. Returns null if the HashMap contains no mapping

* for this key.

*/

final Entry<K,V> removeEntryForKey(Object key) {

if (size == 0) {

return null;

}

int hash = (key == null) ? 0 : hash(key);

int i = indexFor(hash, table.length);

Entry<K,V> prev = table[i];

Entry<K,V> e = prev;

while (e != null) {

Entry<K,V> next = e.next;

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k)))) {

modCount++;

size--;

if (prev == e)

table[i] = next;

else

prev.next = next;

e.recordRemoval(this);

return e;

}

prev = e;

e = next;

}

return e;

}

/**

* Special version of remove for EntrySet using {@code Map.Entry.equals()}

* for matching.

*/

final Entry<K,V> removeMapping(Object o) {

if (size == 0 || !(o instanceof Map.Entry))

return null;

Map.Entry<K,V> entry = (Map.Entry<K,V>) o;

Object key = entry.getKey();

int hash = (key == null) ? 0 : hash(key);

int i = indexFor(hash, table.length);

Entry<K,V> prev = table[i];

Entry<K,V> e = prev;

while (e != null) {

Entry<K,V> next = e.next;

if (e.hash == hash && e.equals(entry)) {

modCount++;

size--;

if (prev == e)

table[i] = next;

else

prev.next = next;

e.recordRemoval(this);

return e;

}

prev = e;

e = next;

}

return e;

}

/**

* Removes all of the mappings from this map.

* The map will be empty after this call returns.

*/

public void clear() {

modCount++;

Arrays.fill(table, null);

size = 0;

}

/**

* Returns <tt>true</tt> if this map maps one or more keys to the

* specified value.

*

* @param value value whose presence in this map is to be tested

* @return <tt>true</tt> if this map maps one or more keys to the

* specified value

*/

public boolean containsValue(Object value) {

if (value == null)

return containsNullValue();

Entry[] tab = table;

for (int i = 0; i < tab.length ; i++)

for (Entry e = tab[i] ; e != null ; e = e.next)

if (value.equals(e.value))

return true;

return false;

}

/**

* Special-case code for containsValue with null argument

*/

private boolean containsNullValue() {

Entry[] tab = table;

for (int i = 0; i < tab.length ; i++)

for (Entry e = tab[i] ; e != null ; e = e.next)

if (e.value == null)

return true;

return false;

}

/**

* Returns a shallow copy of this <tt>HashMap</tt> instance: the keys and

* values themselves are not cloned.

*

* @return a shallow copy of this map

*/

public Object clone() {

HashMap<K,V> result = null;

try {

result = (HashMap<K,V>)super.clone();

} catch (CloneNotSupportedException e) {

// assert false;

}

if (result.table != EMPTY_TABLE) {

result.inflateTable(Math.min(

(int) Math.min(

size * Math.min(1 / loadFactor, 4.0f),

// we have limits...

HashMap.MAXIMUM_CAPACITY),

table.length));

}

result.entrySet = null;

result.modCount = 0;

result.size = 0;

result.init();

result.putAllForCreate(this);

return result;

}

static class Entry<K,V> implements Map.Entry<K,V> {

final K key;

V value;

Entry<K,V> next;

int hash;

/**

* Creates new entry.

*/

Entry(int h, K k, V v, Entry<K,V> n) {

value = v;

next = n;

key = k;

hash = h;

}

public final K getKey() {

return key;

}

public final V getValue() {

return value;

}

public final V setValue(V newValue) {

V oldValue = value;

value = newValue;

return oldValue;

}

public final boolean equals(Object o) {

if (!(o instanceof Map.Entry))

return false;

Map.Entry e = (Map.Entry)o;

Object k1 = getKey();

Object k2 = e.getKey();

if (k1 == k2 || (k1 != null && k1.equals(k2))) {

Object v1 = getValue();

Object v2 = e.getValue();

if (v1 == v2 || (v1 != null && v1.equals(v2)))

return true;

}

return false;

}

public final int hashCode() {

return Objects.hashCode(getKey()) ^ Objects.hashCode(getValue());

}

public final String toString() {

return getKey() + "=" + getValue();

}

/**

* This method is invoked whenever the value in an entry is

* overwritten by an invocation of put(k,v) for a key k that's already

* in the HashMap.

*/

void recordAccess(HashMap<K,V> m) {

}

/**

* This method is invoked whenever the entry is

* removed from the table.

*/

void recordRemoval(HashMap<K,V> m) {

}

}

/**

* Adds a new entry with the specified key, value and hash code to

* the specified bucket. It is the responsibility of this

* method to resize the table if appropriate.

*

* Subclass overrides this to alter the behavior of put method.

*/

void addEntry(int hash, K key, V value, int bucketIndex) {

//如果哈希表大小已经达到扩容阈值,并且下标对应值不为空,那么将哈希表扩容为原来两倍

if ((size >= threshold) && (null != table[bucketIndex])) {

resize(2 * table.length);

hash = (null != key) ? hash(key) : 0;

bucketIndex = indexFor(hash, table.length);

}

createEntry(hash, key, value, bucketIndex);

}

/**

创建新的entry对象

*/

void createEntry(int hash, K key, V value, int bucketIndex) {

//首先获取哈希表中bucketIndex下标对应的Entry对象

Entry<K,V> e = table[bucketIndex];

//然后根据新传进来的key-value键值对创建一个entry对象,并且next属性指向原来bucketIndex下标位置上的entry对象,

//然后将新创建的entry对象,放到哈希表bucketIndex位置。

table[bucketIndex] = new Entry<>(hash, key, value, e);

size++;

}

private abstract class HashIterator<E> implements Iterator<E> {

Entry<K,V> next; // next entry to return

int expectedModCount; // For fast-fail

int index; // current slot

Entry<K,V> current; // current entry

HashIterator() {

expectedModCount = modCount;

if (size > 0) { // advance to first entry

Entry[] t = table;

while (index < t.length && (next = t[index++]) == null)

;

}

}

public final boolean hasNext() {

return next != null;

}

final Entry<K,V> nextEntry() {

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

Entry<K,V> e = next;

if (e == null)

throw new NoSuchElementException();

if ((next = e.next) == null) {

Entry[] t = table;

while (index < t.length && (next = t[index++]) == null)

;

}

current = e;

return e;

}

public void remove() {

if (current == null)

throw new IllegalStateException();

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

Object k = current.key;

current = null;

HashMap.this.removeEntryForKey(k);

expectedModCount = modCount;

}

}

private final class ValueIterator extends HashIterator<V> {

public V next() {

return nextEntry().value;

}

}

private final class KeyIterator extends HashIterator<K> {

public K next() {

return nextEntry().getKey();

}

}

private final class EntryIterator extends HashIterator<Map.Entry<K,V>> {

public Map.Entry<K,V> next() {

return nextEntry();

}

}

// Subclass overrides these to alter behavior of views' iterator() method

Iterator<K> newKeyIterator() {

return new KeyIterator();

}

Iterator<V> newValueIterator() {

return new ValueIterator();

}

Iterator<Map.Entry<K,V>> newEntryIterator() {

return new EntryIterator();

}

// Views

private transient Set<Map.Entry<K,V>> entrySet = null;

/**

* Returns a {@link Set} view of the keys contained in this map.

* The set is backed by the map, so changes to the map are

* reflected in the set, and vice-versa. If the map is modified

* while an iteration over the set is in progress (except through

* the iterator's own <tt>remove</tt> operation), the results of

* the iteration are undefined. The set supports element removal,

* which removes the corresponding mapping from the map, via the

* <tt>Iterator.remove</tt>, <tt>Set.remove</tt>,

* <tt>removeAll</tt>, <tt>retainAll</tt>, and <tt>clear</tt>

* operations. It does not support the <tt>add</tt> or <tt>addAll</tt>

* operations.

*/

public Set<K> keySet() {

Set<K> ks = keySet;

return (ks != null ? ks : (keySet = new KeySet()));

}

private final class KeySet extends AbstractSet<K> {

public Iterator<K> iterator() {

return newKeyIterator();

}

public int size() {

return size;

}

public boolean contains(Object o) {

return containsKey(o);

}

public boolean remove(Object o) {

return HashMap.this.removeEntryForKey(o) != null;

}

public void clear() {

HashMap.this.clear();

}

}

/**

* Returns a {@link Collection} view of the values contained in this map.

* The collection is backed by the map, so changes to the map are

* reflected in the collection, and vice-versa. If the map is

* modified while an iteration over the collection is in progress

* (except through the iterator's own <tt>remove</tt> operation),

* the results of the iteration are undefined. The collection

* supports element removal, which removes the corresponding

* mapping from the map, via the <tt>Iterator.remove</tt>,

* <tt>Collection.remove</tt>, <tt>removeAll</tt>,

* <tt>retainAll</tt> and <tt>clear</tt> operations. It does not

* support the <tt>add</tt> or <tt>addAll</tt> operations.

*/

public Collection<V> values() {

Collection<V> vs = values;

return (vs != null ? vs : (values = new Values()));

}

private final class Values extends AbstractCollection<V> {

public Iterator<V> iterator() {

return newValueIterator();

}

public int size() {

return size;

}

public boolean contains(Object o) {

return containsValue(o);

}

public void clear() {

HashMap.this.clear();

}

}

/**

* Returns a {@link Set} view of the mappings contained in this map.

* The set is backed by the map, so changes to the map are

* reflected in the set, and vice-versa. If the map is modified

* while an iteration over the set is in progress (except through

* the iterator's own <tt>remove</tt> operation, or through the

* <tt>setValue</tt> operation on a map entry returned by the

* iterator) the results of the iteration are undefined. The set

* supports element removal, which removes the corresponding

* mapping from the map, via the <tt>Iterator.remove</tt>,

* <tt>Set.remove</tt>, <tt>removeAll</tt>, <tt>retainAll</tt> and

* <tt>clear</tt> operations. It does not support the

* <tt>add</tt> or <tt>addAll</tt> operations.

*

* @return a set view of the mappings contained in this map

*/

public Set<Map.Entry<K,V>> entrySet() {

return entrySet0();

}

private Set<Map.Entry<K,V>> entrySet0() {

Set<Map.Entry<K,V>> es = entrySet;

return es != null ? es : (entrySet = new EntrySet());

}

private final class EntrySet extends AbstractSet<Map.Entry<K,V>> {

public Iterator<Map.Entry<K,V>> iterator() {

return newEntryIterator();

}

public boolean contains(Object o) {

if (!(o instanceof Map.Entry))

return false;

Map.Entry<K,V> e = (Map.Entry<K,V>) o;

Entry<K,V> candidate = getEntry(e.getKey());

return candidate != null && candidate.equals(e);

}

public boolean remove(Object o) {

return removeMapping(o) != null;

}

public int size() {

return size;

}

public void clear() {

HashMap.this.clear();

}

}

/**

* Save the state of the <tt>HashMap</tt> instance to a stream (i.e.,

* serialize it).

*

* @serialData The <i>capacity</i> of the HashMap (the length of the

* bucket array) is emitted (int), followed by the

* <i>size</i> (an int, the number of key-value

* mappings), followed by the key (Object) and value (Object)

* for each key-value mapping. The key-value mappings are

* emitted in no particular order.

*/

private void writeObject(java.io.ObjectOutputStream s)

throws IOException

{

// Write out the threshold, loadfactor, and any hidden stuff

s.defaultWriteObject();

// Write out number of buckets

if (table==EMPTY_TABLE) {

s.writeInt(roundUpToPowerOf2(threshold));

} else {

s.writeInt(table.length);

}

// Write out size (number of Mappings)

s.writeInt(size);

// Write out keys and values (alternating)

if (size > 0) {

for(Map.Entry<K,V> e : entrySet0()) {

s.writeObject(e.getKey());

s.writeObject(e.getValue());

}

}

}

private static final long serialVersionUID = 362498820763181265L;

/**

* Reconstitute the {@code HashMap} instance from a stream (i.e.,

* deserialize it).

*/

private void readObject(java.io.ObjectInputStream s)

throws IOException, ClassNotFoundException

{

// Read in the threshold (ignored), loadfactor, and any hidden stuff

s.defaultReadObject();

if (loadFactor <= 0 || Float.isNaN(loadFactor)) {

throw new InvalidObjectException("Illegal load factor: " +

loadFactor);

}

// set other fields that need values

table = (Entry<K,V>[]) EMPTY_TABLE;

// Read in number of buckets

s.readInt(); // ignored.

// Read number of mappings

int mappings = s.readInt();

if (mappings < 0)

throw new InvalidObjectException("Illegal mappings count: " +

mappings);

// capacity chosen by number of mappings and desired load (if >= 0.25)

int capacity = (int) Math.min(

mappings * Math.min(1 / loadFactor, 4.0f),

// we have limits...

HashMap.MAXIMUM_CAPACITY);

// allocate the bucket array;

if (mappings > 0) {

inflateTable(capacity);

} else {

threshold = capacity;

}

init(); // Give subclass a chance to do its thing.

// Read the keys and values, and put the mappings in the HashMap

for (int i = 0; i < mappings; i++) {

K key = (K) s.readObject();

V value = (V) s.readObject();

putForCreate(key, value);

}

}

// These methods are used when serializing HashSets

int capacity() { return table.length; }

float loadFactor() { return loadFactor; }

}

上面代码太多,有点乱,下面总结几点:

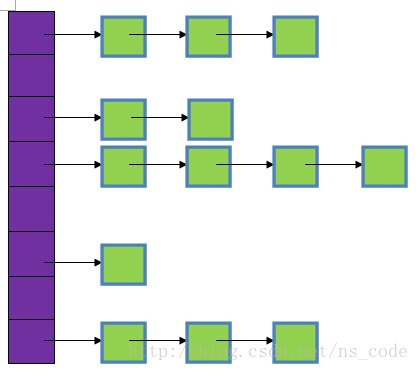

1、首先要清楚HashMap的存储结构,如下图所示:

图中,紫色部分即代表哈希表table,也称为哈希数组,数组的每个元素都是一个单链表的头节点,链表是用来解决冲突的,如果不同的key映射到了数组的同一位置处,就将其放入单链表中。

2、首先看链表中节点的数据结构:

它的结构元素除了key、value、hash外,还有next,next指向下一个节点。另外,这里覆写了equals和hashCode方法来保证键值对的独一无二。

3、HashMap共有四个构造方法。构造方法中提到了两个很重要的参数:初始容量和加载因子。这两个参数是影响HashMap性能的重要参数,其中容量表示哈希表中槽的数量(即哈希数组的长度),初始容量是创建哈希表时的容量(从构造函数中可以看出,如果不指明,则默认为16),加载因子是哈希表在其容量自动增加之前可以达到多满的一种尺度,当哈希表中的条目数超出了加载因子与当前容量的乘积时,则要对该哈希表进行 resize 操作(即扩容)。

下面说下加载因子,如果加载因子越大,对空间的利用更充分,但是查找效率会降低(链表长度会越来越长);如果加载因子太小,那么表中的数据将过于稀疏(很多空间还没用,就开始扩容了),对空间造成严重浪费。如果我们在构造方法中不指定,则系统默认加载因子为0.75,这是一个比较理想的值,一般情况下我们是无需修改的。

另外,无论我们指定的容量为多少,构造方法都会将实际容量设为不小于指定容量的2的次方的一个数,且最大值不能超过2的30次方

4、HashMap中key和value都允许为null。

5、要重点分析下HashMap中用的最多的两个方法put和get。先从比较简单的get方法着手,源码如下:

//根据key获取value

public V get(Object key) {

if (key == null)

return getForNullKey();

Entry<K,V> entry = getEntry(key);

//因为可能不存在key对应的entry对象,所以需要处理entry为null

return null == entry ? null : entry.getValue();

}

//获取key为null的值

private V getForNullKey() {

if (size == 0) {

return null;

}

//HashMap将key为null的值放在table[0]的位置的链表,但是不是定是链表的第一个位置

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null)

return e.value;

}

return null;

}

//根据key获取key对应的Entry对象

final Entry<K,V> getEntry(Object key) {

//如果table的size为0直接返回空

if (size == 0) {

return null;

}

//否则根据key获取哈希表中链表,遍历,获取链表中key和hash值相同的Entry的value

int hash = (key == null) ? 0 : hash(key);

for (Entry<K,V> e = table[indexFor(hash, table.length)];

e != null;

e = e.next) {

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

}

return null;

}

首先,如果key为null,则直接从哈希表的第一个位置table[0]对应的链表上查找。记住,key为null的键值对永远都放在以table[0]为头结点的链表中,当然不一定是存放在头结点table[0]中。 如果key不为null,则先求的key的hash值,根据hash值找到在table中的索引,在该索引对应的单链表中查找是否有键值对的key与目标key相等,有就返回对应的value,没有则返回null。

put方法稍微复杂些,代码如下:

如果table为EMPTY_TABLE,那么,初始化table,inflateTable的源码如下:

private void inflateTable(int toSize) {

// 设置比toSize大的2的整数次幂的值为初始化容量

int capacity = roundUpToPowerOf2(toSize);

threshold = (int) Math.min(capacity * loadFactor, MAXIMUM_CAPACITY + 1);

table = new Entry[capacity];

initHashSeedAsNeeded(capacity);

}

如果key不为null,则同样先求出key的hash值,根据hash值得出在table中的索引,而后遍历对应的单链表,如果单链表中存在与目标key相等的键值对,则将新的value覆盖旧的value,比将旧的value返回,如果找不到与目标key相等的键值对,或者该单链表为空,则将该键值对插入到改单链表的头结点位置(每次新插入的节点都是放在头结点的位置),该操作是有addEntry方法实现的,它的源码如下:

//新增entry。将key-value插入指定位置,bucketIndex是链表位置索引

void addEntry(int hash, K key, V value, int bucketIndex) {

//如果HashMap的size达到扩容阈值,并且索引所在位置链表不为空,那么扩容

if ((size >= threshold) && (null != table[bucketIndex])) {

//扩容,数组长度变为原来的两倍

resize(2 * table.length);

hash = (null != key) ? hash(key) : 0;

//获取key在新生成哈希表中位置索引

bucketIndex = indexFor(hash, table.length);

}

createEntry(hash, key, value, bucketIndex);

}

void createEntry(int hash, K key, V value, int bucketIndex) {

//保存bucketIndex位置的值到entry

Entry<K,V> e = table[bucketIndex];

//设置bucketIndex位置元素为“新Entry”,并且设置e为“新Entry”的下一个节点

table[bucketIndex] = new Entry<>(hash, key, value, e);

size++;

}

两外注意addEntry方法中第一行代码,每次加入键值对时,都要判断当前已用的槽的数目是否大于等于阀值(容量*加载因子),如果大于等于,则进行扩容,将容量扩为原来容量的2倍。

6、关于扩容。上面我们看到了扩容的方法,resize方法,它的源码如下:

很明显,是新建了一个HashMap的底层数组,而后调用transfer方法,将就HashMap的全部元素添加到新的HashMap中(要重新计算元素在新的数组中的索引位置)。transfer方法的源码如下:

很明显,扩容是一个相当耗时的操作,因为它需要重新计算这些元素在新的数组中的位置并进行复制处理。因此,我们在用HashMap的时,最好能提前预估下HashMap中元素的个数,这样有助于提高HashMap的性能。

7、注意containsKey方法和containsValue方法。前者直接可以通过key的哈希值将搜索范围定位到指定索引对应的链表,而后者要对哈希数组的每个链表进行搜索。

8、我们重点来分析下求hash值和索引值的方法,这两个方法便是HashMap设计的最为核心的部分,二者结合能保证哈希表中的元素尽可能均匀地散列。

计算哈希值的方法如下:

它只是一个数学公式,IDK这样设计对hash值的计算,自然有它的好处,至于为什么这样设计,我们这里不去追究,只要明白一点,用的位的操作使hash值的计算效率很高。

由hash值找到对应索引的方法如下:

这个我们要重点说下,我们一般对哈希表的散列很自然地会想到用hash值对length取模(即除法散列法),Hashtable中也是这样实现的,这种方法基本能保证元素在哈希表中散列的比较均匀,但取模会用到除法运算,效率很低,HashMap中则通过h&(length-1)的方法来代替取模,同样实现了均匀的散列,但效率要高很多,这也是HashMap对Hashtable的一个改进。

接下来,我们分析下为什么哈希表的容量一定要是2的整数次幂。首先,length为2的整数次幂的话,h&(length-1)就相当于对length取模,这样便保证了散列的均匀,同时也提升了效率;其次,length为2的整数次幂的话,为偶数,这样length-1为奇数,奇数的最后一位是1,这样便保证了h&(length-1)的最后一位可能为0,也可能为1(这取决于h的值),即与后的结果可能为偶数,也可能为奇数,这样便可以保证散列的均匀性,而如果length为奇数的话,很明显length-1为偶数,它的最后一位是0,这样h&(length-1)的最后一位肯定为0,即只能为偶数,这样任何hash值都只会被散列到数组的偶数下标位置上,这便浪费了近一半的空间,因此,length取2的整数次幂,是为了使不同hash值发生碰撞的概率较小,这样就能使元素在哈希表中均匀地散列。