1. Basic Environment Configuration

1.1 Node Hardware Planning

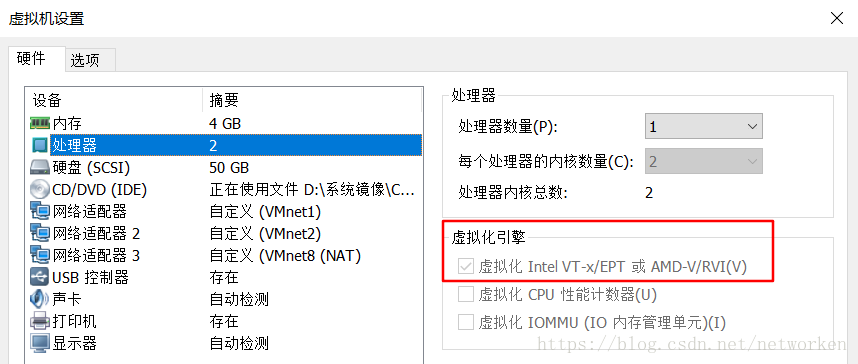

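This deployment uses VMware Workstation to create three CentOS 7 virtual machines as host nodes: one controller node, one compute node, and one cinder block storage node. The hardware configuration is as follows:

| Node | CPU | Memory | Disk | OS image |
|---|---|---|---|---|
| controller | 2 vCPU | 4 GB | 50 GB | CentOS-7-x86_64-Minimal-1804.iso |
| compute1 | 2 vCPU | 4 GB | 50 GB | CentOS-7-x86_64-Minimal-1804.iso |
| cinder1 | 2 vCPU | 4 GB | 50 GB system disk, 50 GB storage disk | CentOS-7-x86_64-Minimal-1804.iso |

Note: adjust the hardware plan to your own needs. CentOS-7-x86_64-Minimal-1804 is used here because the minimal image consumes fewer resources. Download: https://www.centos.org/.

Enable the virtualization engine for each virtual machine in VMware Workstation: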
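Once the virtualization engine is enabled, the guest should see the hardware virtualization CPU flags (vmx for Intel VT-x, svm for AMD-V). The following is a minimal sketch for checking this from inside the guest. The function name count_virt_cpus is hypothetical, and it assumes a Linux-style /proc/cpuinfo.

```shell
# Count logical CPUs whose "flags" line advertises vmx (Intel VT-x) or svm (AMD-V).
# The optional argument is a cpuinfo-style file; it defaults to /proc/cpuinfo.
count_virt_cpus() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # Only lines beginning with "flags" are counted, so the separate
    # "vmx flags" line found on newer kernels is not counted twice.
    grep -E -c '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' "$cpuinfo"
}

# Usage (run inside the virtual machine):
#   count_virt_cpus
```

A result of 0 suggests the virtualization engine is not exposed to the guest, in which case the compute node would likely have to fall back to QEMU software emulation.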

Check the operating system and kernel versions:

[root@controller ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

[root@controller ~]# uname -sr

Linux 4.16.11-1.el7.elrepo.x86_64

1.2 Node Network Planning

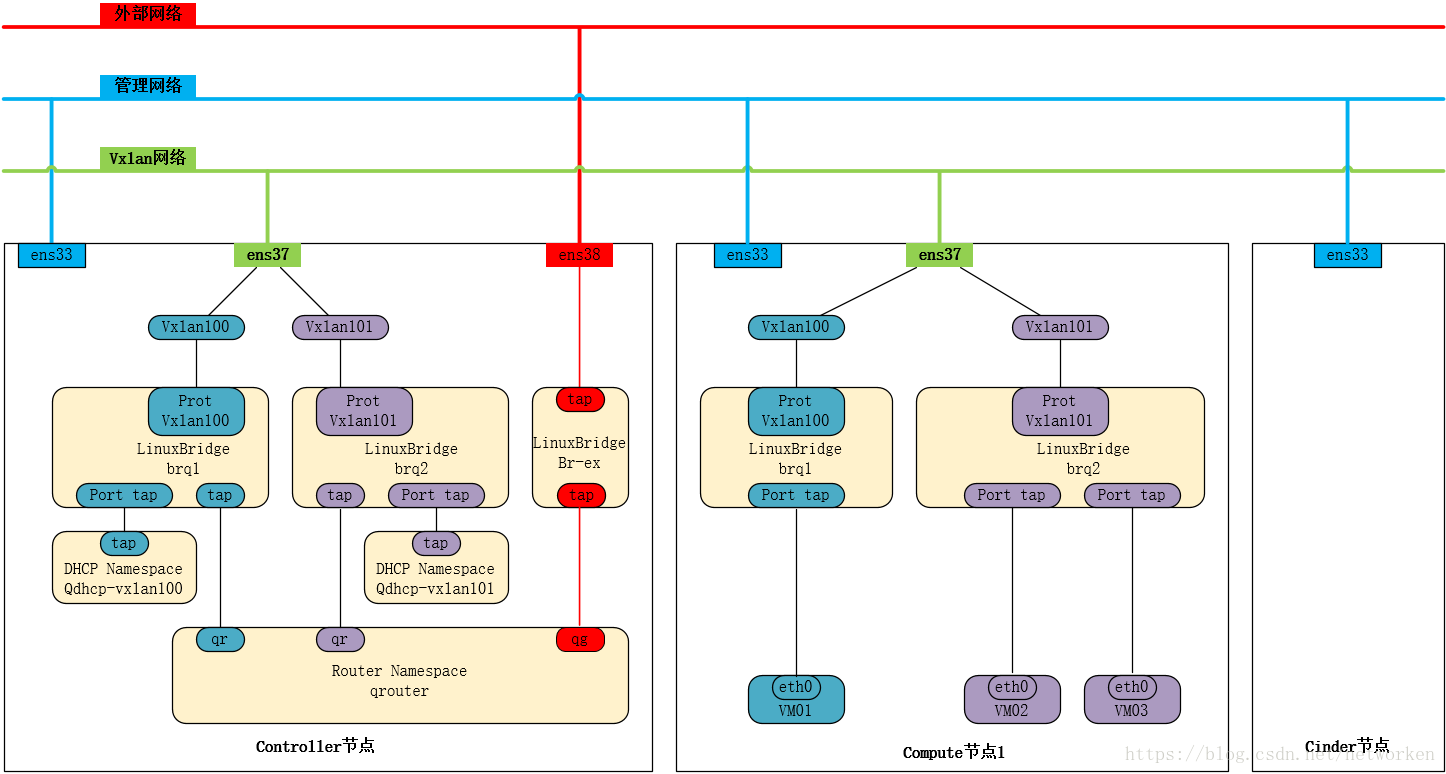

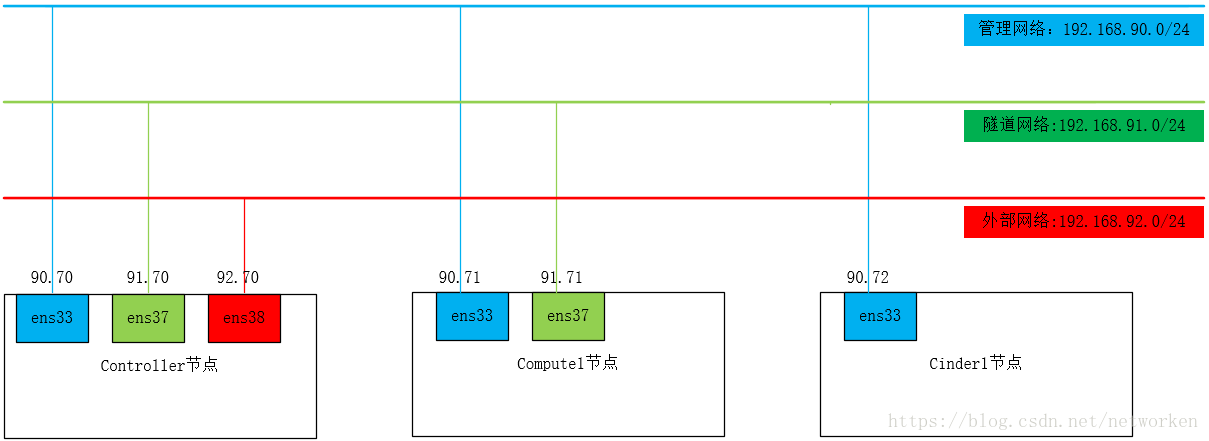

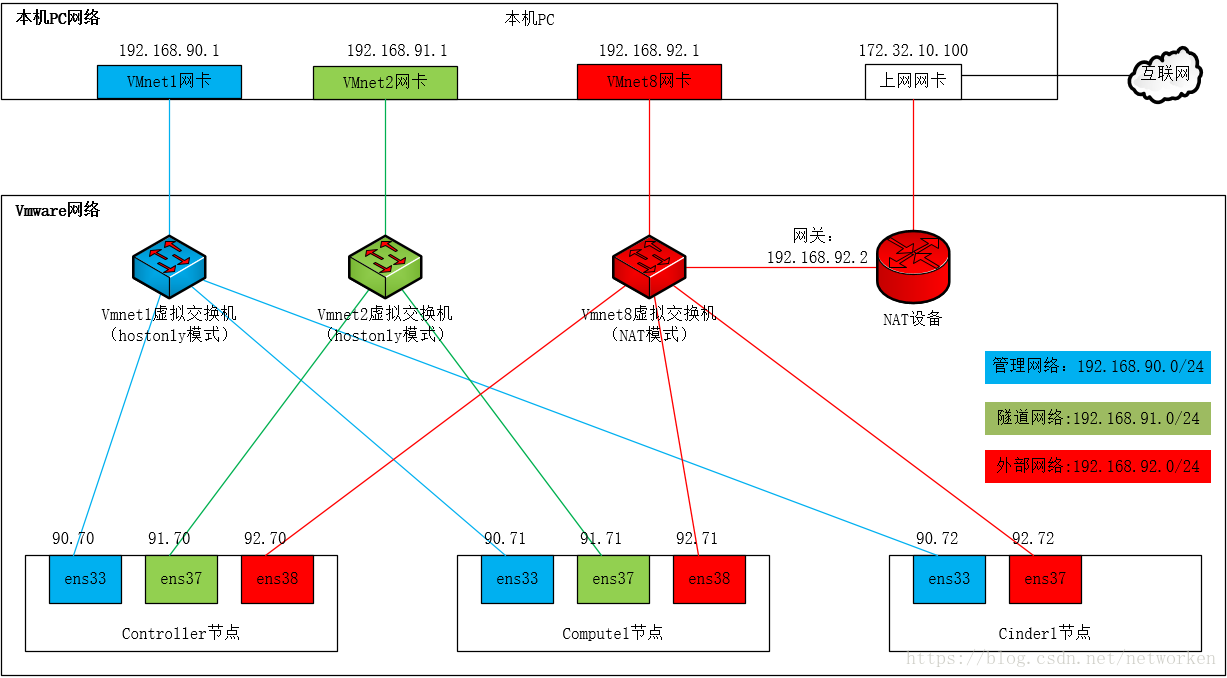

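The network for this deployment uses linuxbridge + vxlan and consists of three network planes: the management network, the external network, and the tenant tunnel network. The plan is as follows:

| Node | NIC | Interface | NIC mode | Virtual switch | Network type | IP address | Gateway |
|---|---|---|---|---|---|---|---|
| controller | NIC 1 | ens33 | Host-only | vmnet1 | Management network | 192.168.90.70 | None |
| controller | NIC 2 | ens37 | Host-only | vmnet2 | Tunnel network | 192.168.91.70 | None |
| controller | NIC 3 | ens38 | NAT | vmnet8 | External network | 192.168.92.70 | 192.168.92.2/24 |
| compute1 | NIC 1 | ens33 | Host-only | vmnet1 | Management network | 192.168.90.71 | None |
| compute1 | NIC 2 | ens37 | Host-only | vmnet2 | Tunnel network | 192.168.91.71 | None |
| compute1 | NIC 3 | ens38 | NAT | vmnet8 | Deployment network | 192.168.92.71 | 192.168.92.2/24 |
| cinder1 | NIC 1 | ens33 | Host-only | vmnet1 | Management network | 192.168.90.72 | None |
| cinder1 | NIC 2 | ens37 | NAT | vmnet8 | Deployment network | 192.168.92.72 | 192.168.92.2/24 |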

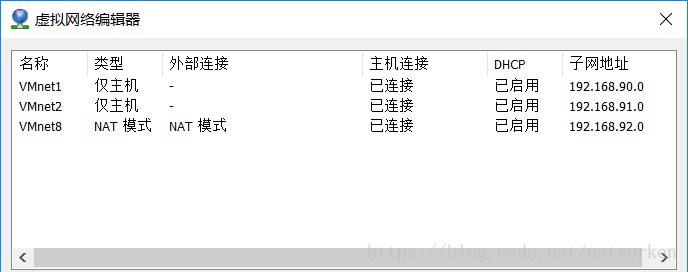

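VMware Workstation Virtual Network Editor settings; VMnet2 is newly created here as the tunnel network:

Network planning notes:

- The controller node has 3 NICs, the compute node has 3 NICs, and the storage node has 2 NICs. Note that the last NIC on the compute node and on the storage node is used only to reach the Internet for installing the OpenStack packages. If you have a local yum repository, these two NICs are not needed; they are not part of the OpenStack network architecture.

- The management network is set to host-only mode. The official guide has the management network reach the Internet to install packages, but if you have an internal yum repository the management network does not need Internet access, so host-only mode works as well.

- The tunnel network is set to host-only mode because it does not need Internet access; it only carries the traffic of OpenStack tenant networks.

- The external network is set to NAT mode. On the controller node, the external network mainly gives OpenStack tenant networks access to the outside, and the OpenStack packages are also installed over this network.

- Note: on the compute and storage nodes, the external network is used only to install the OpenStack packages and serves no other purpose.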

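Description of the three network planes:

- Management network (management/API network):

Provides system management functions. It is used for internal communication between the service components on the nodes and for access to the database service, so every node must be connected to it. In this deployment the management network also carries API traffic: the API network and the management network are merged, and the OpenStack components expose their API services to users through it.

- Tunnel network (tunnel or self-service network):

Provides the underlay that carries tenant virtual networks (VXLAN or GRE). OpenStack requires a tunnel network when GRE or VXLAN mode is used. A tunnel network uses a point-to-point protocol in place of switched connections; in OpenStack, the tunnel carries the data traffic of virtual machines. The networks carried here correspond to Networking Option 2: Self-service networks in the official documentation.

- External network (external or provider network):

An OpenStack deployment needs at least one external network. This network can reach networks outside the OpenStack environment, and devices outside OpenStack can reach an IP address on it. The external network also provides floating IPs for virtual machines in the OpenStack environment, which allows access from the external network to internal instances. This network corresponds to Networking Option 1: Provider networks in the official documentation.

Note that no storage network plane is planned here; the cinder storage node carries storage traffic over the management network.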
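The address plan above maps each /24 subnet to one network plane. As a small sketch, the hypothetical helper plane_of_ip below encodes that mapping, which can be handy when checking configuration files for addresses on the wrong plane. The subnets are the ones from the table in this article and would need adjusting for a different plan.

```shell
# Map an IPv4 address from this article's plan to its network plane.
# Prints "unknown" and returns non-zero for addresses outside the plan.
plane_of_ip() {
    case "$1" in
        192.168.90.*) echo "management" ;;
        192.168.91.*) echo "tunnel" ;;
        192.168.92.*) echo "external" ;;
        *) echo "unknown"; return 1 ;;
    esac
}

# Usage:
#   plane_of_ip 192.168.91.71
```

For example, bind-address values for the database and memcached are expected to resolve to the management plane.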

本次搭建Openstack网络结构图:

本次搭建环境整体网络图:

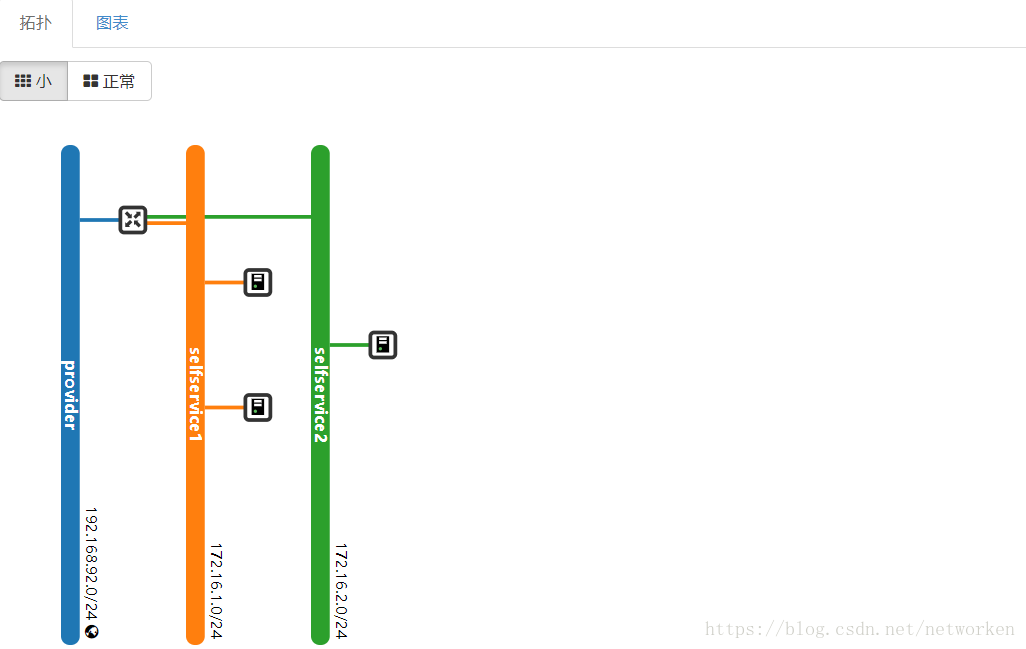

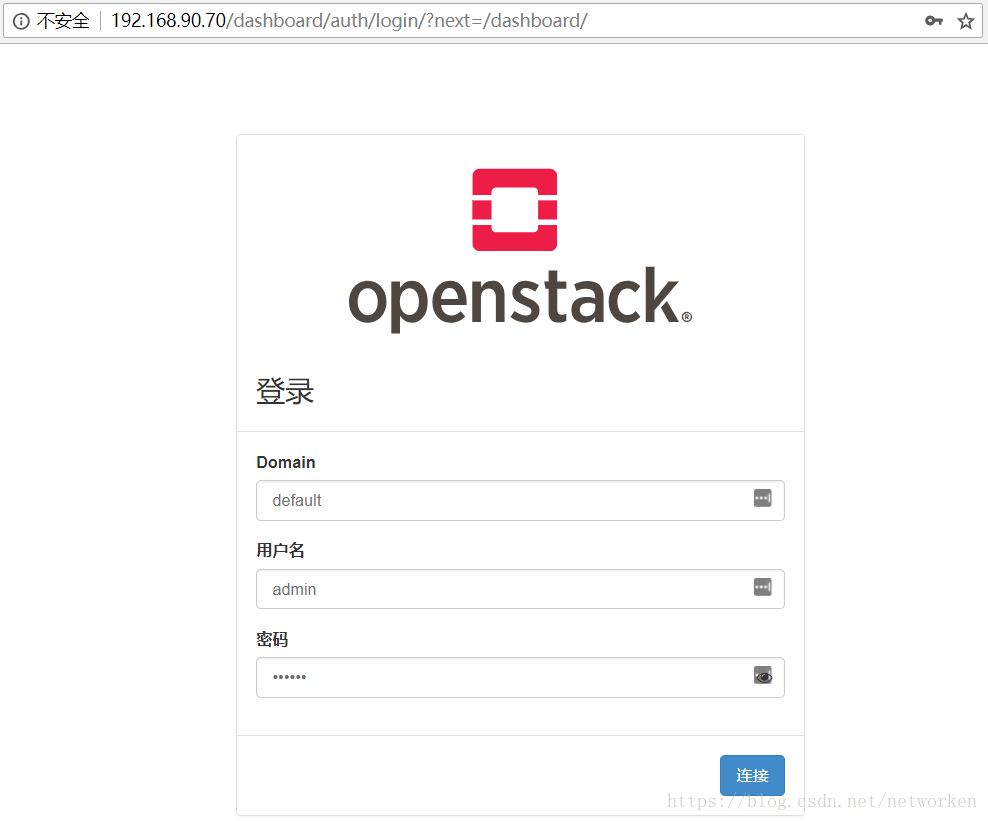

搭建完成后的内部网络图:

1.3 Disable the Firewall

1. Disable SELinux:

# sed -i 's/enforcing/disabled/g' /etc/selinux/config

# setenforce 0

2. Stop and disable the firewalld firewall:

# systemctl stop firewalld.service && systemctl disable firewalld.service

# firewall-cmd --state #check that it is stopped

1.4 Configure the yum Repository

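Run the following on all nodes.

Configure the Alibaba Cloud yum mirror for faster downloads:

1. Back up the official CentOS repository file:

# mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

2. Download the Alibaba Cloud repository file:

# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

3. Verify that the repository works:

# yum clean all && yum makecache

You can also set up a local yum repository; see: https://blog.csdn.net/networken/article/details/80729234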
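If the backup step is run a second time, a plain mv would replace the original backup with the already modified file. The sketch below shows one way to make the backup idempotent. The function name backup_once is hypothetical, and it copies rather than moves, on the assumption that the wget step overwrites the original file anyway.

```shell
# Copy a file to <file>.backup, but only if no backup exists yet,
# so that re-running the procedure never clobbers the first backup.
backup_once() {
    src="$1"
    dst="$1.backup"
    if [ -e "$dst" ]; then
        echo "backup already exists: $dst"
        return 0
    fi
    # -p preserves mode and timestamps of the original file.
    cp -p "$src" "$dst"
}

# Usage (as root, on every node):
#   backup_once /etc/yum.repos.d/CentOS-Base.repo
```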

1.5 Configure Node IP Addresses

1. Controller node network configuration:

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens33

ONBOOT=yes

#####################

IPADDR=192.168.90.70

PREFIX=24

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens37

ONBOOT=yes

#####################

IPADDR=192.168.91.70

PREFIX=24

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens38

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens38

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens38

ONBOOT=yes

#####################

IPADDR=192.168.92.70

PREFIX=24

GATEWAY=192.168.92.2

DNS1=114.114.114.114

DNS2=1.1.1.1

[root@controller ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cb:a5:19 brd ff:ff:ff:ff:ff:ff

inet 192.168.90.70/24 brd 192.168.90.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::2801:f5c2:4e5a:d003/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cb:a5:23 brd ff:ff:ff:ff:ff:ff

inet 192.168.91.70/24 brd 192.168.91.255 scope global noprefixroute ens37

valid_lft forever preferred_lft forever

inet6 fe80::8af8:8b5c:793f:e719/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: ens38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cb:a5:2d brd ff:ff:ff:ff:ff:ff

inet 192.168.92.70/24 brd 192.168.92.255 scope global noprefixroute ens38

valid_lft forever preferred_lft forever

inet6 fe80::e8d3:6442:89c0:cd4a/64 scope link noprefixroute

valid_lft forever preferred_lft forever

2. Compute node network configuration:

[root@compute1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens33

ONBOOT=yes

#####################

IPADDR=192.168.90.71

PREFIX=24

[root@compute1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens37

ONBOOT=yes

#####################

IPADDR=192.168.91.71

PREFIX=24

[root@compute1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens38

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens38

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens38

ONBOOT=yes

#####################

IPADDR=192.168.92.71

PREFIX=24

GATEWAY=192.168.92.2

DNS1=114.114.114.114

DNS2=1.1.1.1

[root@compute1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c7:ba:9b brd ff:ff:ff:ff:ff:ff

inet 192.168.90.71/24 brd 192.168.90.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::ffb5:9b12:f911:89ec/64 scope link noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::b01a:e132:1923:175/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::2801:f5c2:4e5a:d003/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

3: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c7:ba:a5 brd ff:ff:ff:ff:ff:ff

inet 192.168.91.71/24 brd 192.168.91.255 scope global noprefixroute ens37

valid_lft forever preferred_lft forever

inet6 fe80::e8fe:eea6:f4f:2d85/64 scope link noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::8af8:8b5c:793f:e719/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

4: ens38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c7:ba:af brd ff:ff:ff:ff:ff:ff

inet 192.168.92.71/24 brd 192.168.92.255 scope global noprefixroute ens38

valid_lft forever preferred_lft forever

inet6 fe80::e64:a89f:4312:ea34/64 scope link noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::e8d3:6442:89c0:cd4a/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

3. Storage node network configuration:

[root@cinder1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens33

ONBOOT=yes

#####################

IPADDR=192.168.90.72

PREFIX=24

[root@cinder1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

#UUID=162a12c5-b271-4a13-865e-0fdb876e64b8

DEVICE=ens37

ONBOOT=yes

#####################

IPADDR=192.168.92.72

PREFIX=24

GATEWAY=192.168.92.2

DNS1=114.114.114.114

DNS2=1.1.1.1

[root@cinder1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:97:4f:52 brd ff:ff:ff:ff:ff:ff

inet 192.168.90.72/24 brd 192.168.90.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::b01a:e132:1923:175/64 scope link noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::2801:f5c2:4e5a:d003/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

3: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:97:4f:5c brd ff:ff:ff:ff:ff:ff

inet 192.168.92.72/24 brd 192.168.92.255 scope global noprefixroute ens37

valid_lft forever preferred_lft forever

inet6 fe80::8af8:8b5c:793f:e719/64 scope link noprefixroute

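valid_lft forever preferred_lft forever

4. Verify that the network works:

[root@controller ~]# ping -c 4 openstack.org #controller node reaches the Internet

[root@compute1 ~]# ping -c 4 openstack.org #compute node reaches the Internet

[root@cinder1 ~]# ping -c 4 openstack.org #storage node reaches the Internet

[root@controller ~]# ping -c 4 192.168.90.71 #reach the compute node over the management network

[root@controller ~]# ping -c 4 192.168.91.71 #reach the compute node over the tunnel network

[root@controller ~]# ping -c 4 192.168.92.71 #reach the compute node over the external network

1.6 Configure Hostnames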

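On the controller node:

[root@localhost ~]# hostnamectl set-hostname controller

On the compute node:

[root@localhost ~]# hostnamectl set-hostname compute1

On the storage node:

[root@localhost ~]# hostnamectl set-hostname cinder1

On all nodes, log out and log back in to confirm that the hostname was applied:

[root@localhost ~]# exit

1.7 Configure Hostname Resolution

1. On all nodes, use the same configuration. Note that the management network IP addresses are used here: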

[root@controller ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# controller

192.168.90.70 controller

# compute1

192.168.90.71 compute1

# cinder1

192.168.90.72 cinder1

2. Verify that hostname resolution works:

Ping the compute node:

[root@controller ~]# ping -c 4 compute1

PING compute1 (192.168.90.71) 56(84) bytes of data.

64 bytes from compute1 (192.168.90.71): icmp_seq=1 ttl=64 time=0.301 ms

64 bytes from compute1 (192.168.90.71): icmp_seq=2 ttl=64 time=0.990 ms

64 bytes from compute1 (192.168.90.71): icmp_seq=3 ttl=64 time=0.376 ms

64 bytes from compute1 (192.168.90.71): icmp_seq=4 ttl=64 time=0.321 ms

--- compute1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3102ms

rtt min/avg/max/mdev = 0.301/0.497/0.990/0.285 ms

Ping the storage node:

[root@controller ~]# ping -c 4 cinder1

PING cinder1 (192.168.90.72) 56(84) bytes of data.

64 bytes from cinder1 (192.168.90.72): icmp_seq=1 ttl=64 time=0.262 ms

64 bytes from cinder1 (192.168.90.72): icmp_seq=2 ttl=64 time=0.388 ms

64 bytes from cinder1 (192.168.90.72): icmp_seq=3 ttl=64 time=1.11 ms

64 bytes from cinder1 (192.168.90.72): icmp_seq=4 ttl=64 time=0.796 ms

--- cinder1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3057ms

rtt min/avg/max/mdev = 0.262/0.640/1.115/0.338 ms

1.8 Configure the NTP Service

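Configure the following on the controller node.

1. Install the package:

[root@controller ~]# yum install chrony

2. Edit the configuration file:

[root@controller ~]# vim /etc/chrony.conf

allow 192.168.0.0/16

Uncomment this line to allow the other nodes' subnets to synchronize time from this host; set it to match your own subnets.

3. Restart the service and enable it at boot:

[root@controller ~]# systemctl enable chronyd.service && systemctl restart chronyd.service

4. Check the time synchronization status:

The line whose MS column contains ^* identifies the server that the NTP service is currently synchronized to. Here the current source is time5.aliyun.com: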

[root@controller ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^? 85.199.214.101 1 6 3 36 -24ms[ -24ms] +/- 114ms

^* time5.aliyun.com 2 6 77 37 +4343us[ +22ms] +/- 43ms

^- ntp1.ams1.nl.leaseweb.net 2 6 77 35 -43ms[ -43ms] +/- 367ms

^? ntp.de.fdw.no 0 8 0 - +0ns[ +0ns] +/- 0ns

5. Check that the current time is accurate; NTP synchronized: yes indicates that synchronization succeeded:

[root@controller ~]# timedatectl

Local time: Fri 2018-06-08 10:36:39 CST

Universal time: Fri 2018-06-08 8:36:39 UTC

RTC time: Fri 2018-06-08 8:36:39

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: yes

RTC in local TZ: no

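DST active: n/a

Configure the following on the compute node:

1. Install the package:

[root@compute1 ~]# yum install chrony

2. Edit the configuration file so that the compute node synchronizes time with the controller node:

[root@compute1 ~]# vim /etc/chrony.conf #comment out lines 3-6 and add line 7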

1 # Use public servers from the pool.ntp.org project.

2 # Please consider joining the pool (http://www.pool.ntp.org/join.html).

3 #server 0.centos.pool.ntp.org iburst

4 #server 1.centos.pool.ntp.org iburst

5 #server 2.centos.pool.ntp.org iburst

6 #server 3.centos.pool.ntp.org iburst

7 server 192.168.92.70 iburst

3. Restart the service and enable it at boot:

[root@compute1 ~]# systemctl enable chronyd.service && systemctl restart chronyd.service

4. Check the time synchronization status; the current source is controller:

[root@compute1 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

^* controller 3 6 377 21 +7732us[+9937us] +/- 24ms

5. Check that the time matches the controller node:

[root@compute1 ~]# timedatectl

Local time: Sat 2018-06-09 10:09:32 CST

Universal time: Fri 2018-06-08 8:09:32 UTC

RTC time: Fri 2018-06-08 8:09:32

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: yes

RTC in local TZ: no

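DST active: n/a

The storage node is configured the same way as the compute node, so it is omitted here.

Other NTP-related commands:

# timedatectl set-ntp yes #enable NTP synchronization

# timedatectl set-timezone Asia/Shanghai #set the time zone

# yum install -y ntpdate #install the time synchronization tool

# ntpdate 0.centos.pool.ntp.org #force synchronization with a public NTP server

# ntpdate 192.168.92.70 #force synchronization with the controller node

Note: if the clocks on the nodes are out of sync, various problems may appear later. Make sure time synchronization is working correctly before continuing.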
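Because later steps depend on synchronized clocks, it can help to check the selected source in a script instead of reading the table by eye. The sketch below parses the output format shown above, where the selected source is the line whose first column is ^*. The function name current_ntp_source is hypothetical.

```shell
# Read `chronyc sources` output on stdin and print the currently selected
# source (the line whose first column is "^*"). Returns non-zero if no
# source is selected yet.
current_ntp_source() {
    awk '$1 == "^*" { print $2; found = 1 } END { exit found ? 0 : 1 }'
}

# Usage (on any node):
#   chronyc sources | current_ntp_source || echo "not synchronized yet"
```

On the compute and storage nodes the expected result in this setup is controller.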

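2. Install Base Packages

2.1 Install the OpenStack Packages

Run the following on all nodes.

1. Enable the OpenStack repository by installing the Queens release repository package:

# yum install centos-release-openstack-queens

2. Upgrade all packages. If the upgrade includes a new kernel, reboot the node to activate it.

# yum upgrade

3. Install the OpenStack client:

# yum install python-openstackclient

4. Install the openstack-selinux package to automatically manage security policies for OpenStack services:

# yum install openstack-selinux

2.2 Install the MariaDB Database

Run the following on the controller node.

Most OpenStack services use an SQL database to store information. The database typically runs on the controller node. This deployment uses MariaDB; OpenStack services also support other SQL databases, including PostgreSQL.

1. Install the packages:

# yum install mariadb mariadb-server python2-PyMySQL

2. Create and edit the /etc/my.cnf.d/openstack.cnf file and complete the following:

Add the following content. Set bind-address to the management IP address of the controller node so that other nodes can reach the database over the management network: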

[root@controller ~]# vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 192.168.90.70

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

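character-set-server = utf8

3. Start the database service and enable it at boot:

# systemctl start mariadb.service && systemctl enable mariadb.service

4. Run the mysql_secure_installation script to secure the database service and set a password for the database root account (123456 here):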

[root@controller ~]# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current

password for the root user. If you've just installed MariaDB, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MariaDB

root user without the proper authorisation.

Set root password? [Y/n] Y

New password:

Re-enter new password:

Password updated successfully!

Reloading privilege tables..

... Success!

By default, a MariaDB installation has an anonymous user, allowing anyone

to log into MariaDB without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n] Y

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] Y

... Success!

By default, MariaDB comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n] Y

- Dropping test database...

... Success!

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n] Y

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB

installation should now be secure.

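Thanks for using MariaDB!

2.3 Install the RabbitMQ Message Queue

Run the following on the controller node.

OpenStack uses a message queue to coordinate operations and status information among services. The message queue service typically runs on the controller node. OpenStack supports several message queue services, including RabbitMQ, Qpid, and ZeroMQ.

1. Install the package:

# yum install rabbitmq-server

2. Start the message queue service and enable it at boot:

# systemctl enable rabbitmq-server.service && systemctl start rabbitmq-server.service

3. Add the openstack user and set its password (123456 here):

# rabbitmqctl add_user openstack 123456

4. Grant the openstack user configure, write, and read permissions:

# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

2.4 Install the Memcached Cache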

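Run the following on the controller node.

The Identity service uses Memcached to cache tokens. The memcached service typically runs on the controller node.

1. Install the packages:

# yum install memcached python-memcached

2. Edit the /etc/sysconfig/memcached file and complete the following:

Configure the service to use the management IP address of the controller node, so that other nodes can reach it over the management network: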

[root@controller ~]# vim /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

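OPTIONS="-l 192.168.90.70,::1" #add this line

3. Start the Memcached service and enable it at boot:

# systemctl enable memcached.service && systemctl start memcached.service

2.5 Install the Etcd Service

Run the following on the controller node.

OpenStack services may use Etcd, a reliable distributed key-value store, for distributed key locking, storing configuration, keeping track of service liveness, and other scenarios.

1. Install the package:

[root@controller ~]# yum install etcd

2. Edit the /etc/etcd/etcd.conf file and set the relevant options to the management IP address of the controller node, so that other nodes can reach it over the management network: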

[root@controller ~]# vim /etc/etcd/etcd.conf

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="http://192.168.90.70:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.90.70:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="controller"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.90.70:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.90.70:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="controller=http://192.168.90.70:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

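#ETCD_ENABLE_V2="true"

3. Start the etcd service and enable it at boot:

[root@controller ~]# systemctl enable etcd && systemctl start etcd

3. Install OpenStack Services

1. A Queens deployment requires at least the following services. Install them in the order listed:

- Identity service – keystone installation for Queens

- Image service – glance installation for Queens

- Compute service – nova installation for Queens

- Networking service – neutron installation for Queens

2. After the minimal deployment above, we recommend also installing the following components:

- Dashboard – horizon installation for Queens

- Block Storage service – cinder installation for Queens

3.1 Install the keystone Service

Run the following on the controller node.

This section describes how to install and configure the OpenStack Identity service, code-named keystone, on the controller node. For scalability, this configuration deploys Fernet tokens and the Apache HTTP server to handle requests.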

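3.1.1 Identity Service Overview

The OpenStack Identity service provides a single point of integration for managing authentication, authorization, and the service catalog.

The Identity service is typically the first service a user interacts with. Once authenticated, an end user can use their identity to access other OpenStack services. Likewise, other OpenStack services use the Identity service to confirm that users are who they claim to be and to discover where other services are located in the deployment. The Identity service can also integrate with some external user management systems, such as LDAP.

Users and services can locate other services by using the service catalog, which is managed by the Identity service. As the name implies, the service catalog is the collection of services available in an OpenStack deployment. Each service can have one or more endpoints, and each endpoint is one of three types: admin, internal, or public. In a production environment, different endpoint types might reside on separate networks exposed to different types of users for security reasons. For example, the public API network might be visible from the Internet so that customers can manage their clouds. The admin API network might be restricted to operators within the organization that manages the cloud infrastructure. The internal API network might be restricted to the hosts that run OpenStack services. OpenStack also supports multiple regions for scalability. For simplicity, this guide uses the management network for all endpoint types and the default RegionOne region. Together, the regions, services, and endpoints created in the Identity service make up the service catalog for a deployment. Each OpenStack service in the deployment needs a service entry with its corresponding endpoints stored in the Identity service. All of this can be done after the Identity service has been installed and configured.

The Identity service contains these components:

Server

A centralized server provides authentication and authorization services through a RESTful interface.

Drivers

Drivers, or service back ends, are integrated into the centralized server. They are used to access identity information in repositories external to OpenStack, which may already exist in the infrastructure where OpenStack is deployed (for example, SQL databases or LDAP servers).

Modules

Middleware modules run in the address space of the OpenStack component that is using the Identity service. These modules intercept service requests, extract user credentials, and send them to the centralized server for authorization. The integration between the middleware modules and OpenStack components uses the Python Web Server Gateway Interface.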

3.1.2 Install and Configure keystone

Create the keystone database

1. Connect to the database server as the root user:

$ mysql -u root -p

2. Create the keystone database:

MariaDB [(none)]> CREATE DATABASE keystone;

3. Grant proper access to the keystone database:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY '123456';

Note: the password is set to 123456 here.
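The same three statements are repeated for each service database in this guide (keystone here, glance later). As a sketch, the hypothetical helper service_db_sql below prints them for a given database name and password. It assumes, as in this guide, that the database user has the same name as the database. IF NOT EXISTS is added so the output can be applied more than once.

```shell
# Print the SQL that creates a service database and grants access to a
# user of the same name from localhost and from any host.
# $1 = database/user name, $2 = password
service_db_sql() {
    db="$1"
    pass="$2"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${db}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${db}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Usage (on the controller node; prompts for the database root password):
#   service_db_sql keystone 123456 | mysql -u root -p
```

Printing the SQL instead of executing it directly keeps the helper easy to review before anything touches the database.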

Install and configure the keystone components

1. Install the packages:

# yum install openstack-keystone httpd mod_wsgi

2. Edit the /etc/keystone/keystone.conf configuration file:

[root@controller ~]# vim /etc/keystone/keystone.conf

In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://keystone:123456@controller/keystone

Note that the password here is 123456.

In the [token] section, configure the Fernet token provider:

[token]

# ...

provider = fernet

3. Populate the Identity service database:

# su -s /bin/sh -c "keystone-manage db_sync" keystone

4. Initialize the Fernet key repositories:

# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

5. Bootstrap the Identity service:

# keystone-manage bootstrap --bootstrap-password 123456 \

--bootstrap-admin-url http://controller:35357/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

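--bootstrap-region-id RegionOne

The value of --bootstrap-password is the password of the admin user (ADMIN_PASS in the official guide); replace it with a suitable password. It is 123456 here.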
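The bootstrap command above registers one URL per endpoint type: the admin endpoint on port 35357, and the internal and public endpoints on port 5000. The sketch below captures that mapping in a hypothetical helper, keystone_url, so scripts do not have to hard-code the URLs. The ports and the controller hostname are the ones used in this guide.

```shell
# Print the keystone v3 URL used in this guide for a given endpoint type.
# $1 = admin | internal | public, $2 = host (defaults to "controller")
keystone_url() {
    host="${2:-controller}"
    case "$1" in
        admin) port=35357 ;;
        internal|public) port=5000 ;;
        *) echo "unknown interface: $1" >&2; return 1 ;;
    esac
    echo "http://${host}:${port}/v3/"
}

# Usage:
#   keystone_url admin
#   keystone_url public 192.168.90.70
```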

Configure the Apache HTTP server

1. Edit the /etc/httpd/conf/httpd.conf file and set the ServerName option to reference the controller node:

[root@controller ~]# vim /etc/httpd/conf/httpd.conf

ServerName controller

2. Create a link to the /usr/share/keystone/wsgi-keystone.conf file:

[root@controller ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

3. Start the Apache HTTP service and enable it at boot:

[root@controller ~]# systemctl enable httpd.service && systemctl start httpd.service

4. Configure the administrative account:

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

Note that the admin password here is 123456; it must match the password used in the keystone-manage bootstrap command.
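A forgotten export is a common reason for authentication errors in the next steps. The following sketch lists which of the seven variables above are still unset or empty in the current shell. The function name missing_os_vars is hypothetical.

```shell
# Print the name of every required OS_* variable that is unset or empty.
# Prints nothing when all of them are set.
missing_os_vars() {
    for name in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
                OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        # eval reads the variable whose name is stored in $name
        # (indirect expansion that also works in plain POSIX sh).
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}

# Usage:
#   missing_os_vars
```

Empty output means all variables are present; it does not verify that the values are correct.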

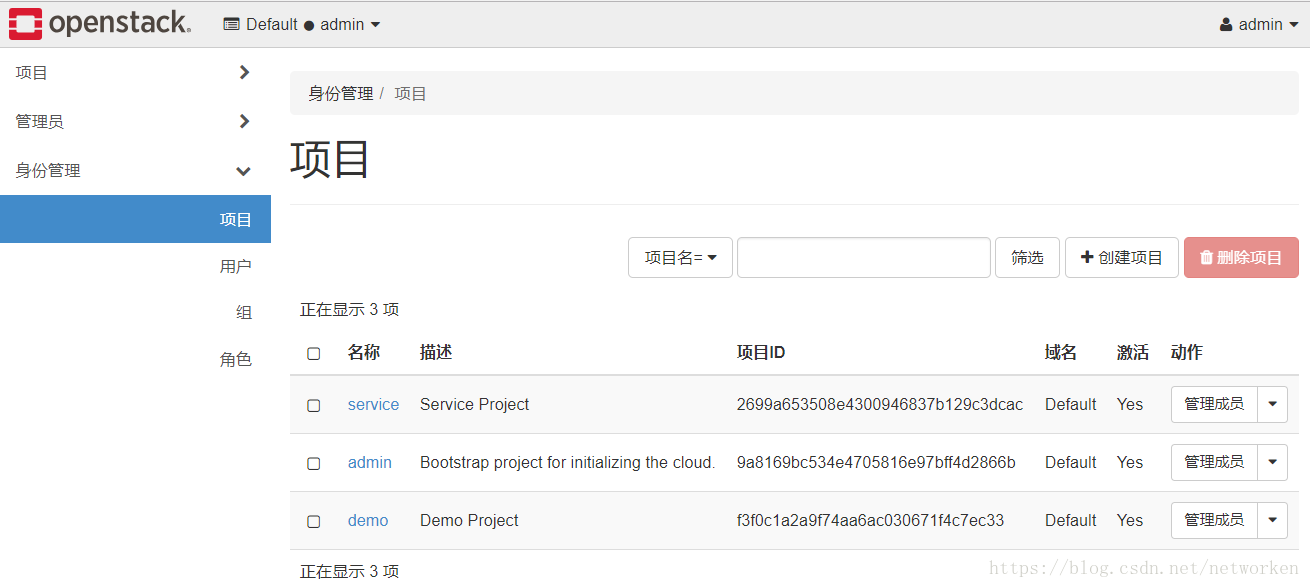

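3.1.3 Create Projects and Users

The Identity service provides authentication for each OpenStack service. Authentication uses a combination of domains, projects, users, and roles.

1. Create a domain. Although the "default" domain already exists from the keystone-manage bootstrap step above, the formal way to create a new domain is: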

[root@controller ~]# openstack domain create --description "Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Domain |

| enabled | True |

| id | 2cb7b71c381c49179ad106c53fc78f20 |

| name | example |

| tags | [] |

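+-------------+----------------------------------+

2. Create the service project. This guide uses one service project that contains a unique user for each service that you add to the environment.

[root@controller ~]# openstack project create --domain default \

--description "Service Project" service

Result: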

[root@controller ~]# openstack project create --domain default \

> --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | 2699a653508e4300946837b129c3dcac |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

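+-------------+----------------------------------+

3. Create the demo project. Regular (non-admin) tasks should use an unprivileged project and user. As an example, a demo project and user are created here. Create the demo project:

[root@controller ~]# openstack project create --domain default \

--description "Demo Project" demo

Result: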

[root@controller ~]# openstack project create --domain default \

> --description "Demo Project" demo

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | f3f0c1a2a9f74aa6ac030671f4c7ec33 |

| is_domain | False |

| name | demo |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

4. Create the demo user:

[root@controller ~]# openstack user create --domain default \

--password-prompt demo

Result:

[root@controller ~]# openstack user create --domain default \

> --password-prompt demo

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | a331e97f2ac6444484371a70e1299636 |

| name | demo |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

5. Create the user role:

[root@controller ~]# openstack role create user

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | 8e50219ae4a44daea5fba43dc3a3bdbc |

| name | user |

+-----------+----------------------------------+

6. Add the user role to the demo user in the demo project:

$ openstack role add --project demo --user demo user

Note: this command produces no output. You can repeat this procedure to create additional projects and users.

3.1.4 Verify Identity Service Operation

1. Unset the temporary OS_AUTH_URL and OS_PASSWORD environment variables:

$ unset OS_AUTH_URL OS_PASSWORD

2. As the admin user, request an authentication token:

[root@controller ~]# openstack --os-auth-url http://controller:35357/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

Result:

[root@controller ~]# openstack --os-auth-url http://controller:35357/v3 \

> --os-project-domain-name Default --os-user-domain-name Default \

> --os-project-name admin --os-username admin token issue

Password:

+------------+-----------------------------------------------------------------------

| Field | Value |

+------------+-----------------------------------------------------------------------

| expires | 2018-06-09T04:16:32+0000 |

| id | gAAAAABbG0aQ7FW5iNpQsV6GeG3MScdTa-5R1X6P50quK1upikbaaRjGLyHjJ9DGAhYxwIRS5EQbFowGM48ihU--S5okbkP97my7WftHu3R13KV1aOClwT9zYKPziyoHuL4jUKLABtxAfDktz4He1BDrzA5EuQUnFlVTIv6plTWUJQUVHIpSGGs |

| project_id | 9a8169bc534e4705816e97bff4d2866b |

| user_id | fd008b033f4f48e2a17fc88686d89e88 |

+------------+-----------------------------------------------------------------------

3. As the demo user, request an authentication token:

[root@controller ~]# openstack --os-auth-url http://controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name demo --os-username demo token issue

Result:

[root@controller ~]# openstack --os-auth-url http://controller:5000/v3 \

> --os-project-domain-name Default --os-user-domain-name Default \

> --os-project-name demo --os-username demo token issue

Password:

+------------+-----------------------------------------------------------------------

| Field | Value |

+------------+-----------------------------------------------------------------------

| expires | 2018-06-09T04:17:53+0000 |

| id | gAAAAABbG0bhmKyZk9WcBA79JucaEcdhkKh0Da5Fu1QfyukeajZEfHPb3ElV1wuRzn59qeL0Oz-AbzScWWodoAYiYRuRFPCL1aesbItKeBGDFSbd54bng4lsHmqSMmZ-lViOEkAZAcKNAU5Tp-sd4LmGSMsHC2mgffhjOFim4gcLFoWVT2Kq2u8 |

| project_id | f3f0c1a2a9f74aa6ac030671f4c7ec33 |

| user_id | a331e97f2ac6444484371a70e1299636 |

+------------+-----------------------------------------------------------------------

Note: this command uses the password of the demo user and API port 5000, which only allows regular (non-admin) access to the Identity service API.
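When a single value from such a table is needed in a script, for example the token expiry, it can be pulled out of the text output. The sketch below is a small awk-based parser for the two-column Field/Value tables shown above. The function name table_field is hypothetical. Where available, the client's own formatter options (such as -f value -c expires) are usually the better choice; this parser is only meant for output that has already been captured as text.

```shell
# Read a two-column "| Field | Value |" table on stdin and print the value
# of the requested field. Border lines without "|" are ignored.
# $1 = field name, e.g. "expires" or "project_id"
table_field() {
    awk -F'|' -v want="$1" '
        NF >= 3 {
            name = $2; value = $3
            # Trim the padding spaces around the name and the value.
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == want) { print value; exit }
        }'
}

# Usage:
#   openstack token issue | table_field expires
```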

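3.1.5 Create Client Environment Scripts

The previous sections used a combination of environment variables and command options to interact with the Identity service through the openstack client. To make client operations more efficient, OpenStack supports simple client environment scripts, also known as OpenRC files. These scripts typically contain options common to all clients, but also support unique options.

Create the scripts

Create client environment scripts for the admin and demo projects and users. All later operations reference these scripts to load the appropriate credentials for client operations.

1. Create and edit the admin-openrc file and add the following content: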

[root@controller ~]# vim admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

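export OS_IMAGE_API_VERSION=2

Note that the admin password here is 123456; replace it with the password you set for the admin user in the Identity service.

2. Create and edit the demo-openrc file and add the following content: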

[root@controller ~]# vim demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

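export OS_IMAGE_API_VERSION=2

Note that the demo password here is 123456; replace it with the password you set for the demo user in the Identity service.

When finished, the files look like this; they are created under /root here:

[root@controller ~]# ll

-rw-r--r-- 1 root root 262 Jun 9 11:20 admin-openrc

-rw-r--r-- 1 root root 259 Jun 9 11:22 demo-openrc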
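The two files differ only in project, user, and password, and the listing above shows them as world-readable even though they contain passwords. As a sketch, the hypothetical helper write_openrc below generates such a file from parameters and restricts it to the owner. The variable values mirror the ones used in this guide. The password is wrapped in single quotes, so this sketch assumes it contains no single quote itself.

```shell
# Write an OpenRC file for the given project and user with mode 600.
# $1 = project, $2 = user, $3 = password, $4 = output file
write_openrc() {
    project="$1"; user="$2"; pass="$3"; file="$4"
    # Create (or truncate) the file and restrict it to the owner
    # before the password is written into it.
    : > "$file" && chmod 600 "$file" || return 1
    cat > "$file" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=${project}
export OS_USERNAME=${user}
export OS_PASSWORD='${pass}'
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

# Usage:
#   write_openrc admin admin 123456 /root/admin-openrc
#   write_openrc demo demo 123456 /root/demo-openrc
```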

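Use the scripts

To run clients as a specific project and user, load the associated client environment script before running the client. For example:

1. Load the admin-openrc file to populate the environment variables with the location of the Identity service and the admin project and user credentials:

[root@controller ~]# . admin-openrc

2. Request an authentication token: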

[root@controller ~]# openstack token issue

+------------+-----------------------------------------------------------------------

| Field | Value |

+------------+-----------------------------------------------------------------------

| expires | 2018-06-09T04:25:27+0000 |

| id | gAAAAABbG0inBBbRy30qPo674Pg5b6bxWNqRvtaOtc4j4U_88qtk_4U9xViPO3au2e_cGQw8s7b0mst9abScy6dEUVsEzQ042XgYnxarB-V7mAVXUevJCn9OV-FQdMlULZXcM0d4w4iVosOLpLdfsS27ZsVwYs_-r1_AohNGKAYAN2g77AOE_OY |

| project_id | 9a8169bc534e4705816e97bff4d2866b |

| user_id | fd008b033f4f48e2a17fc88686d89e88 |

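+------------+-----------------------------------------------------------------------

3.2 Install the Glance service

Perform the following on the controller node.

This section describes how to install and configure the Image service, code-named glance, on the controller node. For simplicity, this configuration stores images on the local file system.

3.2.1 Image service overview

The Image service (glance) enables users to discover, register, and retrieve virtual machine images. It offers a REST API that lets you query virtual machine image metadata and retrieve an actual image. Images made available through the Image service can be stored in a variety of locations, from simple file systems to object-storage systems such as OpenStack Object Storage.

For simplicity, this guide configures the Image service to use the file back end, which uploads and stores images in a directory on the controller node hosting the Image service. By default, this directory is /var/lib/glance/images/.

The OpenStack Image service is central to Infrastructure-as-a-Service (IaaS). It accepts API requests for disk or server images, and metadata definitions from end users or OpenStack Compute components. It also supports storing disk or server images on various repository types, including OpenStack Object Storage.

The OpenStack Image service includes the following components: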

glance-api

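Accepts Image API calls for image discovery, retrieval, and storage.

glance-registry

Stores, processes, and retrieves metadata about images. Metadata includes items such as size and type.

Database

Stores image metadata. You can choose the database according to your preference; most deployments use MySQL or SQLite.

Storage repository for image files

Various repository types are supported, including normal file systems (or any file system mounted on the glance-api controller node), Object Storage, RADOS block devices, VMware datastore, and HTTP. Note that some repositories support only read-only usage.

Metadata definition service

A common API for vendors, admins, services, and users to meaningfully define their own custom metadata. This metadata can be used on different types of resources such as images, artifacts, volumes, flavors, and aggregates. A definition includes the new property's key, description, constraints, and the resource types it can be associated with.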
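To make the REST API mentioned in this overview concrete, here is a minimal sketch of a metadata query. It assumes the Image service has already been installed and registered at http://controller:9292 as described in the following sections, that admin-openrc has been sourced, and that curl is available; `list_images` is a hypothetical helper name.

```shell
# Sketch: list images through the Image service REST API.
# Assumes admin-openrc has been sourced and curl is installed.
list_images() {
    # Ask the Identity service for a token and print only its ID.
    token=$(openstack token issue -f value -c id) || return 1
    # GET /v2/images returns the image metadata as JSON.
    curl -s -H "X-Auth-Token: $token" http://controller:9292/v2/images
}
```

Calling `list_images | python -m json.tool` on the controller would pretty-print the response, which is the same data that `openstack image list` summarizes.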

3.2.2 Create the glance database

1. Create the glance database

Connect to the database as the root user:

# mysql -u root -p

Create the glance database:

MariaDB [(none)]> CREATE DATABASE glance;

Grant proper access to the glance database:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '123456';

2. Source the admin credentials:

$ . admin-openrc

3. To create the service credentials, complete these steps:

Create the glance user:

[root@controller ~]# openstack user create --domain default --password-prompt glance

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 5480c338e19f42e59b35c7965d950fa6 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the glance user in the service project:

$ openstack role add --project service --user glance admin

Note: this command produces no output.

Create the glance service entity:

[root@controller ~]# openstack service create --name glance \
--description "OpenStack Image" image

Result:

[root@controller ~]# openstack service create --name glance \

> --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | f776846b998a41859d7f93cac56cf4c0 |

| name | glance |

| type | image |

+-------------+----------------------------------+

4. Create the Image service API endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne \
image public http://controller:9292

[root@controller ~]# openstack endpoint create --region RegionOne \
image internal http://controller:9292

[root@controller ~]# openstack endpoint create --region RegionOne \
image admin http://controller:9292
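The three commands differ only in the interface name, so they can be generated in a loop. This is a sketch under assumptions: `create_endpoints` and the `DRY_RUN` switch are hypothetical names introduced here, and with `DRY_RUN=1` the commands are only printed.

```shell
# Sketch: register the three endpoints of a service in one loop.
# create_endpoints and DRY_RUN are hypothetical; DRY_RUN=1 only prints.
create_endpoints() {
    service_type=$1
    url=$2
    for interface in public internal admin; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "openstack endpoint create --region RegionOne $service_type $interface $url"
        else
            openstack endpoint create --region RegionOne "$service_type" "$interface" "$url"
        fi
    done
}

# Preview the commands for the Image service without running them.
DRY_RUN=1
create_endpoints image http://controller:9292
```

The same helper would apply to the compute, placement, and network endpoints created later, with only the service type and URL changing.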

Result:

[root@controller ~]# openstack endpoint create --region RegionOne \

> image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 253e29a9b76648b1919d374eb10e152f |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f776846b998a41859d7f93cac56cf4c0 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | a4e5834004af45279b20008f16bcb4b9 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f776846b998a41859d7f93cac56cf4c0 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 46707abe74924e5096b150b70056247c |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f776846b998a41859d7f93cac56cf4c0 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

3.2.3 Install and configure components

1. Install the package:

# yum install openstack-glance

2. Edit the /etc/glance/glance-api.conf file and complete the following actions:

# vim /etc/glance/glance-api.conf

In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://glance:123456@controller/glance

In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

In the [glance_store] section, configure the local file system store and the location of image files:

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

3. Edit the /etc/glance/glance-registry.conf file and complete the following actions:

# vim /etc/glance/glance-registry.conf

In the [database] section, configure database access:

[database]

connection = mysql+pymysql://glance:123456@controller/glance

In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

4. Populate the Image service database:

# su -s /bin/sh -c "glance-manage db_sync" glance

5. Finalize the installation: start the Image services and configure them to start when the system boots:

# systemctl enable openstack-glance-api.service \
openstack-glance-registry.service

# systemctl start openstack-glance-api.service \
openstack-glance-registry.service

3.2.4 Verify operation

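Verify operation of the Image service using CirrOS, a small Linux image that helps you test your OpenStack deployment.

For more information about how to download and build images, see the OpenStack Virtual Machine Image Guide at https://docs.openstack.org/image-guide/. For information about how to manage images, see the OpenStack End User Guide at https://docs.openstack.org/queens/user/.

1. Source the admin credentials:

# . admin-openrc

2. Download the image:

# wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

3. Upload the image to the Image service using the QCOW2 disk format, the bare container format, and public visibility so that all projects can access it:

[root@controller ~]# openstack image create "cirros" \
--file cirros-0.4.0-x86_64-disk.img \
--disk-format qcow2 --container-format bare \
--public

Result: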

[root@controller ~]# openstack image create "cirros" \

> --file cirros-0.4.0-x86_64-disk.img \

> --disk-format qcow2 --container-format bare \

> --public

+------------------+------------------------------------------------------+

| Field | Value |

+------------------+------------------------------------------------------+

| checksum | 443b7623e27ecf03dc9e01ee93f67afe |

| container_format | bare |

| created_at | 2018-06-09T07:25:34Z |

| disk_format | qcow2 |

| file | /v2/images/de140769-4ce3-4a1b-9651-07a915b21caa/file |

| id | de140769-4ce3-4a1b-9651-07a915b21caa |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | 9a8169bc534e4705816e97bff4d2866b |

| protected | False |

| schema | /v2/schemas/image |

| size | 12716032 |

| status | active |

| tags | |

| updated_at | 2018-06-09T07:25:34Z |

| virtual_size | None |

| visibility | public |

+------------------+------------------------------------------------------+

4. List the uploaded image; its status should be active:

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| de140769-4ce3-4a1b-9651-07a915b21caa | cirros | active |

+--------------------------------------+--------+--------+

For the full list of glance configuration options, see:

https://docs.openstack.org/glance/queens/configuration/index.html
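The `checksum` field in the `openstack image create` output above is an MD5 digest of the uploaded file, so it can be compared with the local download as an extra verification step. The sketch below is an optional addition, not part of the original procedure; `verify_md5` is a hypothetical helper.

```shell
# Sketch: compare a local file's MD5 digest with an expected checksum.
# verify_md5 is a hypothetical helper, not part of any OpenStack tool.
verify_md5() {
    file=$1
    expected=$2
    # md5sum prints "<digest>  <name>"; keep only the digest.
    actual=$(md5sum "$file" | awk '{print $1}')
    if [ "$actual" = "$expected" ]; then
        echo "checksum OK"
    else
        echo "checksum mismatch: got $actual, expected $expected" >&2
        return 1
    fi
}
```

On the controller it could be called as `verify_md5 cirros-0.4.0-x86_64-disk.img "$(openstack image show cirros -f value -c checksum)"`.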

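3.3 Install the Compute service

This section describes how to install and configure the Compute service, code-named nova, on the controller node.

3.3.1 Compute service overview

Use OpenStack Compute to host and manage cloud computing systems. OpenStack Compute is a major part of an Infrastructure-as-a-Service (IaaS) system. The main modules are implemented in Python.

OpenStack Compute interacts with OpenStack Identity for authentication, with the OpenStack Image service for disk and server images, and with OpenStack Dashboard for the user and administrative interface. Image access is limited by project and by user; quotas are limited per project (the number of instances, for example). OpenStack Compute can scale horizontally on standard hardware and download images to launch instances.

OpenStack Compute consists of the following areas and their components:

nova-api service

Accepts and responds to end-user compute API calls. The service supports the OpenStack Compute API. It enforces some policies and initiates most orchestration activities, such as running an instance.

nova-api-metadata service

Accepts metadata requests from instances. The nova-api-metadata service is generally used when you run in multi-host mode with nova-network installations. For details, see Metadata service in the Compute Administrator Guide.

nova-compute service

A worker daemon that creates and terminates virtual machine instances through hypervisor APIs. For example: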

- XenAPI for XenServer/XCP

- libvirt for KVM or QEMU

- VMwareAPI for VMware

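Processing is fairly complex. Basically, the daemon accepts actions from the queue and performs a series of system commands, such as launching a KVM instance and updating its state in the database.

nova-placement-api service

Tracks the inventory and usage of each provider. For details, see Placement API.

nova-scheduler service

Takes a virtual machine instance request from the queue and determines on which compute server host it runs.

nova-conductor module

Mediates interactions between the nova-compute service and the database. It eliminates direct access to the cloud database by the nova-compute service. The nova-conductor module scales horizontally. However, do not deploy it on nodes where the nova-compute service runs. For more information, see the conductor section in the Configuration Options.

nova-consoleauth daemon

Authorizes tokens for users that console proxies provide. See nova-novncproxy and nova-xvpvncproxy. This service must be running for console proxies to work. You can run proxies of either type against a single nova-consoleauth service in a cluster configuration. For information, see About nova-consoleauth.

nova-novncproxy daemon

Provides a proxy for accessing running instances through a VNC connection. Supports browser-based novnc clients.

nova-spicehtml5proxy daemon

Provides a proxy for accessing running instances through a SPICE connection. Supports a browser-based HTML5 client.

nova-xvpvncproxy daemon

Provides a proxy for accessing running instances through a VNC connection. Supports an OpenStack-specific Java client.

The queue

A central hub for passing messages between daemons. Usually implemented with RabbitMQ, but other message queues, such as ZeroMQ, can also be used.

SQL database

Stores most build-time and run-time states for a cloud infrastructure, including:

- Available instance types

- Instances in use

- Available networks

- Projects

Theoretically, OpenStack Compute can support any database that SQLAlchemy supports. Common databases are SQLite3 (for test and development work), MySQL, MariaDB, and PostgreSQL.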

3.3.2 Install and configure the controller node

Perform the following on the controller node.

3.3.2.1 Create the databases

1. Log in to the database as root:

# mysql -u root -p

Create the nova_api, nova, and nova_cell0 databases:

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

Grant proper access to the databases:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';

2. Source the admin-openrc credentials:

[root@controller ~]# . admin-openrc

3. Create the Compute service credentials

Create the nova user:

[root@controller ~]# openstack user create --domain default --password-prompt nova

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fb6776d19862405ba0c42ec91b6282a0 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the nova user:

# openstack role add --project service --user nova admin

Create the nova service entity:

[root@controller ~]# openstack service create --name nova \
--description "OpenStack Compute" compute

Result:

[root@controller ~]# openstack service create --name nova \

> --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | f162653676dd41a2a4faa98ae64c33f1 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

4. Create the Compute API service endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne \
compute public http://controller:8774/v2.1

[root@controller ~]# openstack endpoint create --region RegionOne \
compute internal http://controller:8774/v2.1

[root@controller ~]# openstack endpoint create --region RegionOne \
compute admin http://controller:8774/v2.1

Result:

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 84b34dc0bedc483990e32c48830368ab |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f162653676dd41a2a4faa98ae64c33f1 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2c253051b30444d9807a37bdb05c4c66 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f162653676dd41a2a4faa98ae64c33f1 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5aefc27a9f0440e2a95f1189e25f3822 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f162653676dd41a2a4faa98ae64c33f1 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

5. Create a Placement service user:

[root@controller ~]# openstack user create --domain default --password-prompt placement

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | eb7cae6117f74fa6990f8e234efc9c82 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

6. Add the placement user to the service project with the admin role:

# openstack role add --project service --user placement admin

7. Create the Placement API entry in the service catalog:

[root@controller ~]# openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 656007c5c64d4c3f91fd0dff1a32a754 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

8. Create the Placement API service endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 4a5b28fe29a94c03a4059d37388f88f1 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 656007c5c64d4c3f91fd0dff1a32a754 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 0f554f27f16c4387ac6a88e9f98697c4 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 656007c5c64d4c3f91fd0dff1a32a754 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3aa80b39dc244907a034bf6b4fcd12e2 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 656007c5c64d4c3f91fd0dff1a32a754 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

3.3.2.2 Install and configure components

1. Install the packages:

# yum install openstack-nova-api openstack-nova-conductor \
openstack-nova-console openstack-nova-novncproxy \
openstack-nova-scheduler openstack-nova-placement-api

2. Edit the /etc/nova/nova.conf file and complete the following actions:

# vim /etc/nova/nova.conf

In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

In the [api_database] and [database] sections, configure database access:

[api_database]

# ...

connection = mysql+pymysql://nova:123456@controller/nova_api

[database]

# ...

connection = mysql+pymysql://nova:123456@controller/nova

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

In the [DEFAULT] section, set the my_ip option to the management interface IP address of the controller node:

[DEFAULT]

# ...

my_ip = 192.168.90.70

In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

# ...

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

In the [vnc] section, configure the VNC proxy to use the management interface IP address of the controller node:

[vnc]

enabled = true

# ...

server_listen = $my_ip

server_proxyclient_address = $my_ip

In the [glance] section, configure the location of the Image service API:

[glance]

# ...

api_servers = http://controller:9292

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

In the [placement] section, configure the Placement API:

[placement]

# ...

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123456

Due to a packaging bug, you must enable access to the Placement API by adding the following configuration to /etc/httpd/conf.d/00-nova-placement-api.conf:

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Add the configuration so that the file reads as follows:

[root@controller ~]# vim /etc/httpd/conf.d/00-nova-placement-api.conf

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova

WSGIScriptAlias / /usr/bin/nova-placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

# The <Directory /usr/bin> block goes right after the ErrorLog line below

ErrorLog /var/log/nova/nova-placement-api.log

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

</VirtualHost>

Alias /nova-placement-api /usr/bin/nova-placement-api

<Location /nova-placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

Restart the httpd service:

# systemctl restart httpd

3. Populate the nova-api database:

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

Ignore any deprecation messages in this output.

4. Register the cell0 database:

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

5. Create the cell1 cell:

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

f1c7672c-8127-4bc0-9f60-acc5364222dc

6. Populate the nova database:

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

/usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

7. Verify that the nova, cell0, and cell1 cells are registered correctly:

[root@controller ~]# nova-manage cell_v2 list_cells

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

+-------+--------------------------------------+------------------------------------

| Name | UUID | Transport URL | Database Connection |

+-------+--------------------------------------+------------------------------------

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 |

| cell1 | f1c7672c-8127-4bc0-9f60-acc5364222dc | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova |

+-------+--------------------------------------+------------------------------------

3.3.2.3 Finalize the installation and start the services

Start the Compute services and configure them to start when the system boots:

# systemctl enable openstack-nova-api.service \
openstack-nova-consoleauth.service openstack-nova-scheduler.service \
openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service \
openstack-nova-consoleauth.service openstack-nova-scheduler.service \
openstack-nova-conductor.service openstack-nova-novncproxy.service

3.3.3 Install and configure the compute node

Perform the following on the compute node.

3.3.3.1 Install and configure components

1. Install the package:

# yum install openstack-nova-compute

2. Edit the /etc/nova/nova.conf file and complete the following actions:

# vim /etc/nova/nova.conf

In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

In the [DEFAULT] section, configure the my_ip option:

[DEFAULT]

# ...

my_ip = 192.168.90.71

This is the management IP address of the compute node.

In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

# ...

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

In the [vnc] section, enable and configure remote console access:

[vnc]

# ...

enabled = True

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

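novncproxy_base_url = http://controller:6080/vnc_auto.html

The server component listens on all IP addresses, and the proxy component listens only on the management interface IP address of the compute node. The base URL indicates where you can use a web browser to access remote consoles of instances on this compute node.

If the web browser used to access remote consoles resides on a host that cannot resolve the controller hostname, you must replace controller with the management interface IP address of the controller node.

In the [glance] section, configure the location of the Image service API: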

[glance]

# ...

api_servers = http://controller:9292

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

In the [placement] section, configure the Placement API:

[placement]

# ...

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

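password = 123456

3.3.3.2 Finalize the configuration and start the services

1. Determine whether your compute node supports hardware acceleration for virtual machines:

$ egrep -c '(vmx|svm)' /proc/cpuinfo

If this command returns a value of one or greater, your compute node supports hardware acceleration, which typically requires no additional configuration.

If this command returns zero, your compute node does not support hardware acceleration, and you must configure libvirt to use QEMU instead of KVM. (In my case it returned 2, so I skipped the following step and left the configuration file unchanged.)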
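The decision described above can be scripted. The following is a minimal sketch, assuming a hypothetical helper named `pick_virt_type`; the optional file argument exists only so the logic can be tried against a sample file instead of the real /proc/cpuinfo.

```shell
# Sketch: choose a libvirt virt_type from the CPU flags.
# pick_virt_type is a hypothetical helper introduced for illustration.
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # Count lines that advertise Intel VT-x (vmx) or AMD-V (svm).
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo")
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

echo "suggested virt_type: $(pick_virt_type)"
```

A result of `kvm` matches the default and needs no change to nova.conf; only a result of `qemu` calls for the [libvirt] setting that follows.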

Edit the [libvirt] section in the /etc/nova/nova.conf file:

# vim /etc/nova/nova.conf

[libvirt]

# ...

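virt_type = qemu

2. Start the Compute service, including its dependencies, and configure them to start automatically when the system boots:

# systemctl enable libvirtd.service openstack-nova-compute.service

# systemctl start libvirtd.service openstack-nova-compute.service

Note: if the nova-compute service fails to start, check /var/log/nova/nova-compute.log. An error message saying that the AMQP server on controller:5672 is unreachable likely indicates that the firewall on the controller node is blocking port 5672. Configure the firewall to open port 5672 on the controller node, then restart the nova-compute service on the compute node.

To flush the firewall rules, run the following commands:

# iptables -F

# iptables -X

# iptables -Z

3.3.3.3 Add the compute node to the cell database

Perform the following on the controller node.

1. Source admin-openrc, then confirm which compute hosts are in the database:

[root@controller ~]# . admin-openrc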

[root@controller ~]# openstack compute service list --service nova-compute

+----+--------------+----------+------+---------+-------+----------------------------

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+----------+------+---------+-------+----------------------------+

| 7 | nova-compute | compute1 | nova | enabled | up | 2018-06-09T16:57:28.000000 |

+----+--------------+----------+------+---------+-------+----------------------------

2. Discover the compute hosts:

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': f1c7672c-8127-4bc0-9f60-acc5364222dc

Checking host mapping for compute host 'compute1': 8ca1dfdc-5ed4-456b-a10e-a74b645fdbf8

Creating host mapping for compute host 'compute1': 8ca1dfdc-5ed4-456b-a10e-a74b645fdbf8

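Found 1 unmapped computes in cell: f1c7672c-8127-4bc0-9f60-acc5364222dc

When you add new compute nodes, you must run nova-manage cell_v2 discover_hosts on the controller node to register them. Alternatively, you can set an appropriate discovery interval in /etc/nova/nova.conf:

[scheduler]

discover_hosts_in_cells_interval = 300

3.3.4 Verify Compute service operation

Perform the following on the controller node.

1. List the service components to verify that each process started and registered successfully:

[root@controller ~]# . admin-openrc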

[root@controller ~]# openstack compute service list

+----+------------------+------------+----------+---------+-------+------------------

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+------------+----------+---------+-------+------------------

| 1 | nova-consoleauth | controller | internal | enabled | up | 2018-06-09T17:02:22.000000 |

| 2 | nova-scheduler | controller | internal | enabled | up | 2018-06-09T17:02:14.000000 |

| 3 | nova-conductor | controller | internal | enabled | up | 2018-06-09T17:02:23.000000 |

| 7 | nova-compute | compute1 | nova | enabled | up | 2018-06-09T17:02:18.000000 |

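+----+------------------+------------+----------+---------+-------+------------------

This output should show three service components enabled on the controller node and one service component enabled on the compute node.

2. List the API endpoints in the Identity service to verify connectivity with the Identity service: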

[root@controller ~]# openstack catalog list

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| keystone | identity | RegionOne |

| | | admin: http://controller:35357/v3/ |

| | | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | |

| placement | placement | RegionOne |

| | | internal: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | RegionOne |

| | | public: http://controller:8778 |

| | | |

| nova | compute | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | |

+-----------+-----------+-----------------------------------------+

3. List the images in the Image service to verify connectivity with the Image service:

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| de140769-4ce3-4a1b-9651-07a915b21caa | cirros | active |

+--------------------------------------+--------+--------+

4. Check that the cells and the Placement API are working correctly:

[root@controller ~]# nova-status upgrade check

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

Option "os_region_name" from group "placement" is deprecated. Use option "region-name" from group "placement".

+---------------------------+

| Upgrade Check Results |

+---------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+---------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+---------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+---------------------------+

nova configuration reference: https://docs.openstack.org/nova/queens/admin/index.html
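The service list check from step 1 can be automated so that a service that is down stands out immediately. This is a sketch under assumptions: `check_services` is a hypothetical helper that reads "binary host state" triples, one per line, from standard input.

```shell
# Sketch: report Compute services whose state is not "up".
# check_services is a hypothetical helper introduced for illustration.
check_services() {
    awk '
        # Print every service that is not up and remember the failure.
        $3 != "up" { printf "%s on %s is %s\n", $1, $2, $3; bad = 1 }
        # Exit non-zero if at least one service was not up.
        END { exit bad }
    '
}
```

On the controller it could be fed with `openstack compute service list -f value -c Binary -c Host -c State | check_services && echo "all services up"`.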

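3.4 Install the neutron service

3.4.1 Networking service overview

OpenStack Networking (neutron) allows you to create interface devices managed by other OpenStack services and attach them to networks. Plug-ins can be implemented to accommodate different networking equipment and software, providing flexibility to OpenStack architecture and deployment.

The Networking service includes the following components:

neutron-server

Accepts API requests and routes them to the appropriate OpenStack Networking plug-in for action.

OpenStack Networking plug-ins and agents

Plug and unplug ports, create networks or subnets, and provide IP addressing. These plug-ins and agents differ depending on the vendor and technologies used in the particular cloud. OpenStack Networking ships with plug-ins and agents for Cisco virtual and physical switches, NEC OpenFlow products, Open vSwitch, Linux bridging, and the VMware NSX product.

The common agents are L3 (layer 3), DHCP (dynamic host IP addressing), and a plug-in agent.

Messaging queue

Used by most OpenStack Networking installations to route information between neutron-server and the various agents. It also acts as a database to store networking state for particular plug-ins.

OpenStack Networking mainly interacts with OpenStack Compute to provide networks and connectivity for its instances.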

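3.4.3 Install and configure the controller node

Perform the following on the controller node.

3.4.3.1 Create the database

1. To create the database, complete these steps:

Use the database access client to connect to the database server as the root user:

$ mysql -u root -p

Create the neutron database:

MariaDB [(none)]> CREATE DATABASE neutron;

Grant proper access to the neutron database (the password here is 123456):

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';

2. Source the admin credentials to gain access to admin-only CLI commands:

$ . admin-openrc

3. To create the service credentials, complete these steps:

Create the neutron user: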

[root@controller ~]# openstack user create --domain default --password-prompt neutron

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 988aa6330c27474bb54b662aeb7173a7 |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the neutron user:

# openstack role add --project service --user neutron admin

Create the neutron service entity:

[root@controller ~]# openstack service create --name neutron \
--description "OpenStack Networking" network

Result:

[root@controller ~]# openstack service create --name neutron \

> --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | 2547ba8ceeb1436184e78e29017f8930 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

4. Create the Networking service API endpoints:

$ openstack endpoint create --region RegionOne \
network public http://controller:9696

$ openstack endpoint create --region RegionOne \
network internal http://controller:9696

$ openstack endpoint create --region RegionOne \
network admin http://controller:9696

Result:

[root@controller ~]# openstack endpoint create --region RegionOne network public http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 4008ce59ae324f59a1bcafd3d68df1b2 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | cd9f54256386473b9a98a9422e274c5a |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network internal http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8d8aed23061a48f3a7b0a8aefb8d5681 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | cd9f54256386473b9a98a9422e274c5a |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network admin http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 4bdb3e41746e4b2cbeadcf1fc5227363 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | cd9f54256386473b9a98a9422e274c5a |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

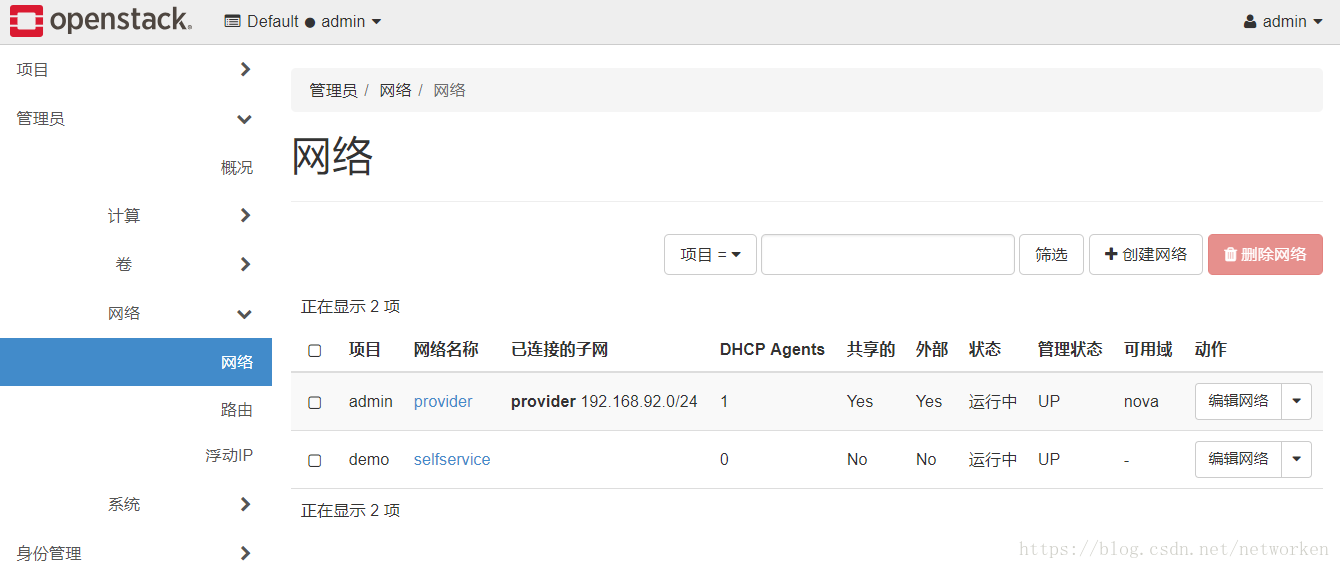

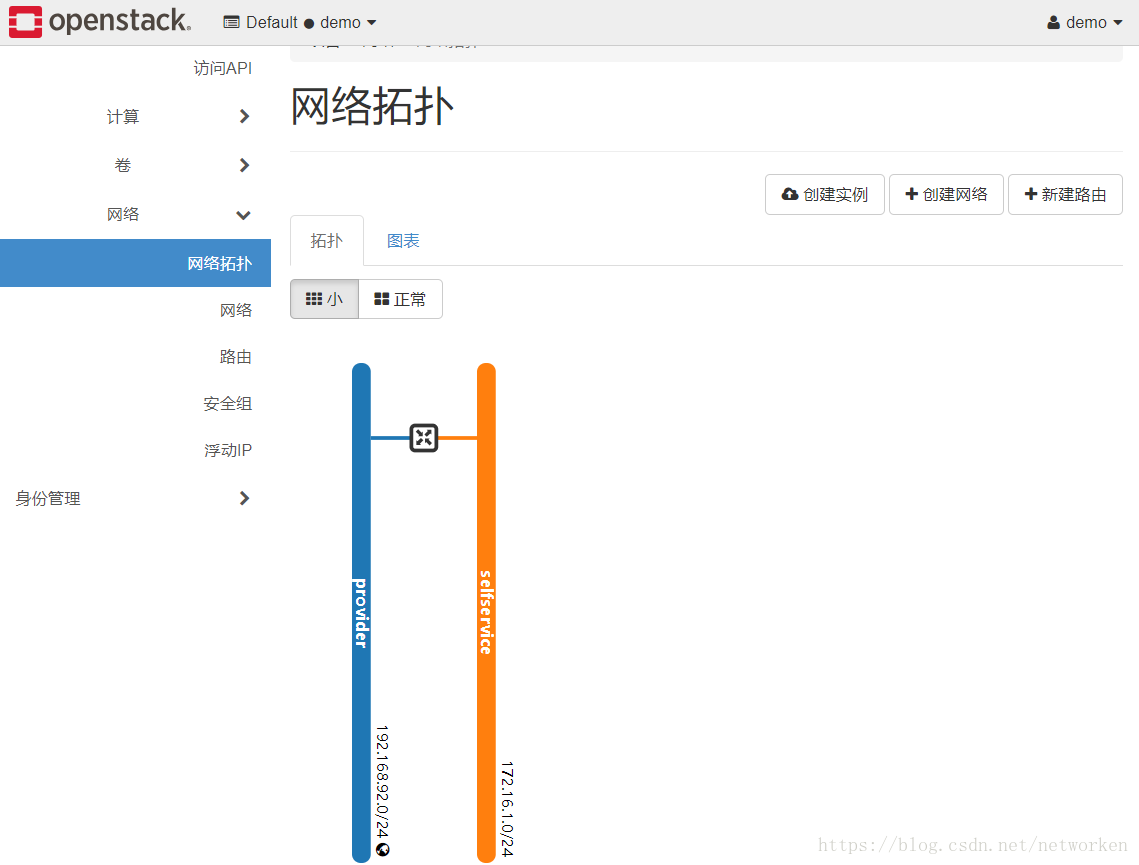

+--------------+----------------------------------+3.4.3.2 配置网络部分

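You can deploy the Networking service using one of two architectures, represented by options 1 and 2.

- Option 1 deploys the simplest possible architecture, which only supports attaching instances to provider (external) networks. There are no self-service (private) networks, routers, or floating IP addresses. Only the admin or other privileged users can manage provider networks.

- Option 2 augments option 1 with layer-3 services that support attaching instances to self-service networks. The demo or other unprivileged users can manage self-service networks, including routers that provide connectivity between self-service and provider networks. In addition, floating IP addresses provide connectivity from external networks such as the Internet to instances on self-service networks. Self-service networks typically use overlay (tunnel) networks with protocols such as VXLAN. Option 2 also supports attaching instances to provider networks.

Choose one of the following two options:

Networking Option 1: Provider networks

Networking Option 2: Self-service networks

This guide uses Networking Option 2: Self-service networks.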

1. Install the components

# yum install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables

2. Configure the server component

Edit the /etc/neutron/neutron.conf file and complete the following actions:

# vim /etc/neutron/neutron.conf

In the [database] section, configure database access:

[database]

connection = mysql+pymysql://neutron:123456@controller/neutron

In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

[DEFAULT]

# ...

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes:

[DEFAULT]

# ...

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

# ...

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

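lock_path = /var/lib/neutron/tmp

3. Configure the Modular Layer 2 (ML2) plug-in

The ML2 plug-in uses the Linux bridge mechanism to build layer-2 (bridging and switching) virtual networking infrastructure for instances.

Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file and complete the following actions:

# vim /etc/neutron/plugins/ml2/ml2_conf.ini

In the [ml2] section, enable flat, VLAN, and VXLAN networks: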

[ml2]

# ...

type_drivers = flat,vlan,vxlan

In the [ml2] section, enable VXLAN self-service networks:

[ml2]

# ...

tenant_network_types = vxlan

In the [ml2] section, enable the Linux bridge and layer-2 population mechanisms:

[ml2]

# ...

mechanism_drivers = linuxbridge,l2population

In the [ml2] section, enable the port security extension driver:

[ml2]

# ...

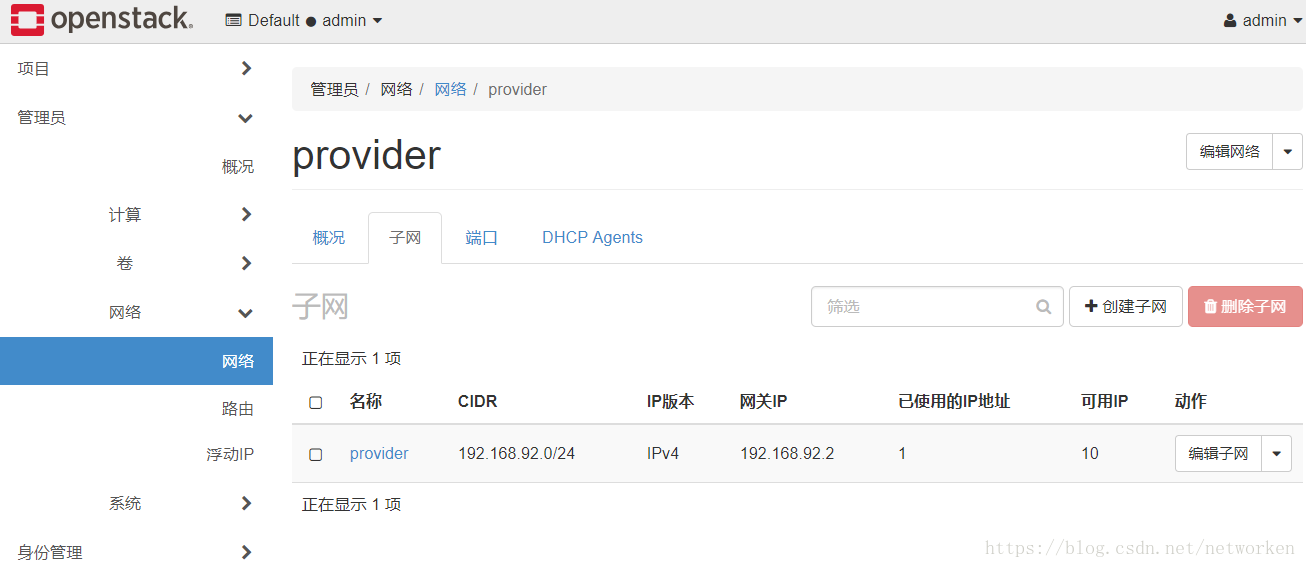

extension_drivers = port_security在 [ml2_type_flat] 部分, 将提供者虚拟网络配置为扁平网络:

[ml2_type_flat]

# ...

flat_networks = provider

In the [ml2_type_vxlan] section, configure the VXLAN network identifier (VNI) range for self-service networks:

[ml2_type_vxlan]

# ...

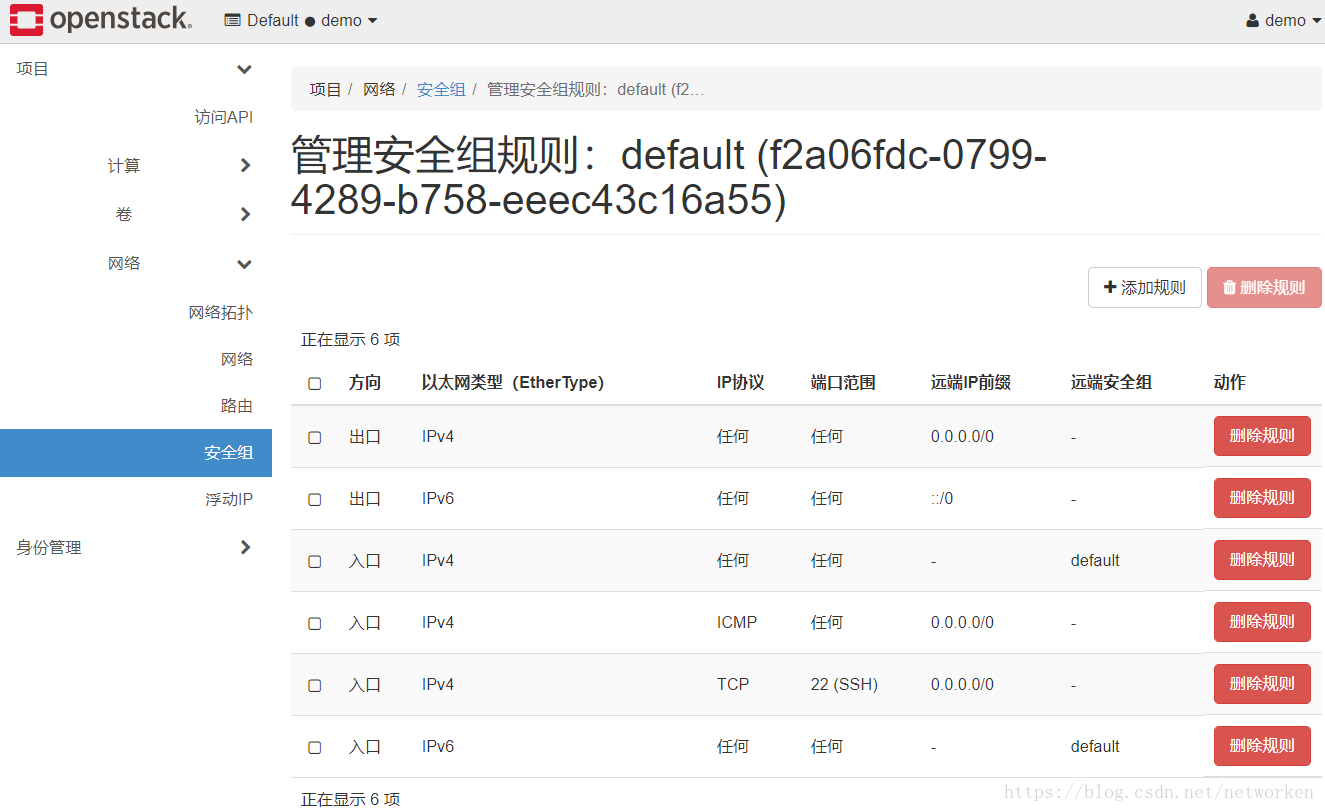

vni_ranges = 1:1000在 [securitygroup] 部分, 启用ipset以提高安全组规则的效率:

[securitygroup]

# ...

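enable_ipset = true

4. Configure the Linux bridge agent

The Linux bridge agent builds layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups.

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following actions:

# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, map the provider virtual network to the provider physical network interface:

[linux_bridge]

physical_interface_mappings = provider:ens38

Note: ens38 here is the NIC attached to the external network (the underlying provider physical network interface).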
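A mistyped interface name in physical_interface_mappings tends to surface only later, as a Linux bridge agent error, so it can be worth checking the mapping before the agent is started. The following is a minimal sketch rather than part of the official procedure: the helper name check_mapping_iface and its optional second argument (a directory listing network interfaces, assumed to default to /sys/class/net) are introduced here for illustration.

```shell
#!/bin/sh
# Hypothetical helper: check that the interface named in a
# "physnet:interface" mapping exists on this host.
# $1 = mapping value, e.g. provider:ens38
# $2 = directory listing network interfaces (assumed default: /sys/class/net)
check_mapping_iface() {
    mapping="$1"
    netdir="${2:-/sys/class/net}"
    iface="${mapping#*:}"          # text after the first colon
    if [ -z "$iface" ] || [ "$iface" = "$mapping" ]; then
        echo "malformed mapping: $mapping" >&2
        return 2
    fi
    if [ -e "$netdir/$iface" ]; then
        echo "ok: $iface exists"
    else
        echo "missing: $iface not found in $netdir" >&2
        return 1
    fi
}
```

On the controller node, `check_mapping_iface provider:ens38` would report whether ens38 is present. The same check applies on the compute node with that node's interface name.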

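In the [vxlan] section, enable VXLAN overlay networks, configure the IP address of the physical network interface that handles overlay networks, and enable layer-2 population:

[vxlan]

enable_vxlan = true

local_ip = 192.168.91.70

l2_population = true

Note: 192.168.91.70 is this node's address on the tunnel network (the IP address of the underlying physical network interface that handles overlay networks).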
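Because local_ip must be an address that is actually configured on the node, a quick check against the output of `ip -o -4 addr show` can catch a value copied from another node. The sketch below assumes the one-line output format of `ip -o`, in which the third field is `inet` and the fourth is ADDRESS/PREFIX. The helper name has_local_ip is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given IPv4 address appears in
# `ip -o -4 addr show` output supplied on standard input.
# $1 = address to look for, e.g. 192.168.91.70
has_local_ip() {
    want="$1"
    # Field 3 is "inet" and field 4 is ADDRESS/PREFIX in `ip -o` output.
    awk -v want="$want" '
        $3 == "inet" { split($4, a, "/"); if (a[1] == want) found = 1 }
        END { exit !found }
    '
}
```

A possible use on the controller node is `ip -o -4 addr show | has_local_ip 192.168.91.70 && echo "local_ip is configured"`. The comparison is exact, so an address such as 192.168.91.7 does not match 192.168.91.70.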

In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Ensure that the Linux kernel supports network bridge filters by verifying that all of the following sysctl values are set to 1:

net.bridge.bridge-nf-call-iptables

net.bridge.bridge-nf-call-ip6tables

$ vim /usr/lib/sysctl.d/00-system.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1
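Both keys are provided by the br_netfilter kernel module, so they can only be set or read once that module is loaded. As a sketch, the function below reads `key = value` lines, in either the sysctl output format or the configuration file format, and succeeds only when both keys are 1. The helper name bridge_nf_enabled is an assumption made here.

```shell
#!/bin/sh
# Hypothetical helper: read "key = value" lines on standard input
# and succeed only if both bridge netfilter keys are set to 1.
bridge_nf_enabled() {
    awk -F '[ \t]*=[ \t]*' '
        $1 == "net.bridge.bridge-nf-call-iptables"  && $2 == 1 { v4 = 1 }
        $1 == "net.bridge.bridge-nf-call-ip6tables" && $2 == 1 { v6 = 1 }
        END { exit !(v4 && v6) }
    '
}
```

For example, `bridge_nf_enabled < /usr/lib/sysctl.d/00-system.conf` checks the edited file, and `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables | bridge_nf_enabled` checks the running kernel once the settings have been loaded. If sysctl reports the keys as unknown, running `modprobe br_netfilter` first typically resolves it.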

$ sysctl -p /usr/lib/sysctl.d/00-system.conf

5. Configure the layer-3 agent

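The layer-3 (L3) agent provides routing and NAT services for self-service virtual networks.

Edit the /etc/neutron/l3_agent.ini file and complete the following actions:

$ vim /etc/neutron/l3_agent.ini

In the [DEFAULT] section, configure the Linux bridge interface driver and external network bridge: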

[DEFAULT]

# ...

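interface_driver = linuxbridge

6. Configure the DHCP agent

The DHCP agent provides DHCP services for virtual networks.

Edit the /etc/neutron/dhcp_agent.ini file and complete the following actions:

$ vim /etc/neutron/dhcp_agent.ini

In the [DEFAULT] section, configure the Linux bridge interface driver and the Dnsmasq DHCP driver, and enable isolated metadata so that instances on provider networks can access metadata over the network: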

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

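enable_isolated_metadata = true

3.4.3.5 Configure the metadata agent

The metadata agent provides configuration information, such as credentials, to instances.

Edit the /etc/neutron/metadata_agent.ini file and complete the following actions:

# vim /etc/neutron/metadata_agent.ini

In the [DEFAULT] section, configure the metadata host and shared secret:

[DEFAULT]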

nova_metadata_host = controller

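metadata_proxy_shared_secret = 123456

3.4.3.6 Configure the Compute service to use the Networking service

Edit the /etc/nova/nova.conf file and perform the following actions:

# vim /etc/nova/nova.conf

In the [neutron] section, configure access parameters, enable the metadata proxy, and configure the secret (it must match metadata_proxy_shared_secret in /etc/neutron/metadata_agent.ini):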

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

service_metadata_proxy = true

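metadata_proxy_shared_secret = 123456

3.4.3.7 Finalize the installation and start the services

1. The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini pointing to the ML2 plug-in configuration file, /etc/neutron/plugins/ml2/ml2_conf.ini. If this symbolic link does not exist, create it with the following command:

# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

2. Populate the database

# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

3. Restart the Compute API service

# systemctl restart openstack-nova-api.service

4. Start the Networking services and configure them to start at boot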

# systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

# systemctl start neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

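neutron-metadata-agent.service

5. For networking option 2, also enable and start the layer-3 service:

# systemctl enable neutron-l3-agent.service && systemctl start neutron-l3-agent.service

3.4.4 Install and configure the compute node

Perform the following steps on the compute node.

The compute node handles connectivity and security groups for instances.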

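3.4.4.1 Install and configure the components

1. Install the components

# yum install openstack-neutron-linuxbridge ebtables ipset

2. Configure the common component

The Networking common component configuration includes the authentication mechanism, message queue, and plug-in.

Edit the /etc/neutron/neutron.conf file and complete the following actions:

In the [database] section, comment out any connection options, because compute nodes do not directly access the database.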
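The commenting-out step can also be done non-interactively. The sketch below is one possible approach, not the procedure the guide itself uses: a filter that reads an INI file on standard input and prefixes `#` to every connection option inside [database], leaving other sections untouched. The function name comment_db_connection is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print an INI file read from standard input with
# every "connection" option inside [database] commented out.
comment_db_connection() {
    awk '
        /^\[/ { in_db = ($0 ~ /^\[database\]/) }   # track the current section
        in_db && /^[ \t]*connection[ \t]*=/ { print "#" $0; next }
        { print }
    '
}
```

For instance, `comment_db_connection < /etc/neutron/neutron.conf > neutron.conf.new` writes an edited copy that can be reviewed before it replaces the original file.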

# vim /etc/neutron/neutron.conf

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

#...

transport_url = rabbit://openstack:123456@controller

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

#...

auth_strategy = keystone

[keystone_authtoken]

#...

auth_uri = http://controller:5000