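Prerequisites

1. Download and install IntelliJ IDEA

2. Download and install JDK 1.8

3. Download and install Scala 2.11.8

4. Download and install Maven 3.5.3 (the version I used; any version from 3.3.9 upward works)

5. Download and install Git (optional for the import itself, but the Maven build later in this post needs the bash that ships with it)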

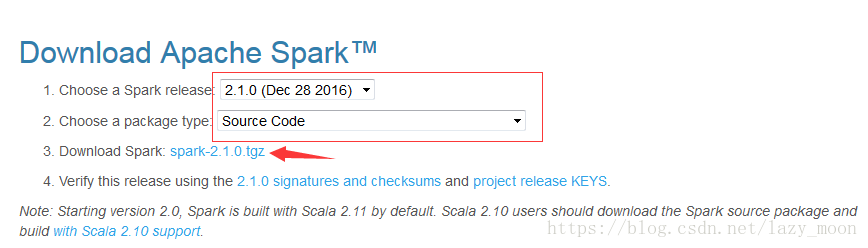

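6. Download and install spark-2.1.0-bin-hadoop2.7 and configure its environment variables (the same way as on Linux)

7. Download and install hadoop-2.7.4 and configure its environment variables (the same way as on Linux)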
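The only hard version constraint in the list above is Maven 3.3.9 or later. As a rough sketch, that comparison can be scripted. The helper names `parse_version` and `version_at_least` are made up for this illustration, and the version strings are assumed to be copied by hand from the output of `mvn -v`.

```python
import re


def parse_version(text):
    """Split a version such as '3.5.3' into a tuple of ints, e.g. (3, 5, 3)."""
    return tuple(int(part) for part in re.findall(r"\d+", text))


def version_at_least(actual, minimum):
    """Return True when `actual` is the same as or newer than `minimum`."""
    return parse_version(actual) >= parse_version(minimum)


# Maven 3.5.3 (the version used in this post) against the 3.3.9 minimum.
print(version_at_least("3.5.3", "3.3.9"))  # True
print(version_at_least("3.3.3", "3.3.9"))  # False
```

Comparing tuples of integers avoids the classic string-comparison trap where "3.10" sorts before "3.9".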

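Download the Spark source code and unpack it

Open pom.xml in the Spark source root and change the Java and Maven versions to match your installed JDK and the Maven configured in IntelliJ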
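Before editing, it can help to see which versions the pom currently declares. The sketch below reads the `<properties>` block of a pom with Python's standard XML parser. The property names `java.version` and `maven.version` in the sample are an assumption about how the Spark root pom names them, and `SAMPLE_POM` is a made-up stand-in for the real file, so check both against your own pom.xml.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip the '{namespace}' prefix that ElementTree puts in front of tags."""
    return tag.rsplit("}", 1)[-1]


def read_pom_properties(pom_xml):
    """Return the entries of the top-level <properties> element as a dict."""
    root = ET.fromstring(pom_xml)
    for child in root:
        if local_name(child.tag) == "properties":
            return {local_name(p.tag): (p.text or "").strip() for p in child}
    return {}


# Hypothetical, heavily shortened pom used only for this demonstration.
SAMPLE_POM = """<project xmlns="http://maven.apache.org/POM/4.0.0">
  <properties>
    <java.version>1.8</java.version>
    <maven.version>3.5.3</maven.version>
  </properties>
</project>"""

print(read_pom_properties(SAMPLE_POM))
# {'java.version': '1.8', 'maven.version': '3.5.3'}
```

The text of the real pom.xml can be passed to `read_pom_properties` in the same way.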

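Open IntelliJ, choose Import Project, and import the source code into IntelliJ

1. Import

2. Select "Import project from external model", choose Maven, and click [Next]

3. Check these three checkboxes and click [Next]

Loading takes a while.

4. Add the yarn and hadoop2.7 options and leave everything else at its default. The Hadoop version must match the environment you installed earlier: if you are running 2.4, select the 2.4 option instead. Click [Next]

5. Click [Finish]

At this point IntelliJ starts downloading all the resources Spark needs.

Once everything has been downloaded, the import is done.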

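Troubleshooting (very important)

Problem 1: not found:type SparkFlumeProtocol

IDEA did not automatically fetch the resources that flume-sink needs.

Solution:

In the Maven Projects panel, find Spark Project External Flume Sink.

Right-click [Spark Project External Flume Sink].

Click [Generate Sources and Update Folders]. For reasons I could not pin down, this step sometimes has no effect and needs to be retried several times. My first attempt succeeded; I then cleaned junk files out of the local Maven repository and ran Generate Sources and Update Folders again, which did not work; the third attempt succeeded.

IDEA downloads the Flume Sink resources again and rebuilds.

Once this succeeds, SparkFlumeProtocol, which the class org.apache.spark.streaming.flume.sink.SparkAvroCallbackHandler extends, no longer shows an error.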

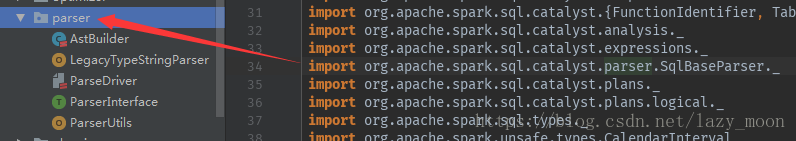

Problem 2: Error:(34, 45) object SqlBaseParser is not a member of package org.apache.spark.sql.catalyst.parser

import org.apache.spark.sql.catalyst.parser.SqlBaseParser._

The compiler reports that SqlBaseParser is not in the package org.apache.spark.sql.catalyst.parser, and a look at the source tree confirms that it is not there.

Solution:

In the spark-catalyst module, run "mvn clean compile" to recompile spark-catalyst.

Open Project Structure and carry out the following steps. Pay attention to step 3: do not select the wrong directory level.

Once this succeeds, the reference to org.apache.spark.sql.catalyst.parser.SqlBaseParser._ in org.apache.spark.sql.catalyst.parser.AstBuilder no longer shows an error.

SqlBaseParser can now be found in spark-2.1.0\sql\catalyst\target\generated-sources\antlr4\org\apache\spark\sql\catalyst\parser.
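To confirm that the recompile really produced the parser before adjusting Project Structure, a small script can search the module's target directory. This is only a sketch: `find_generated_sources` is a hypothetical helper, it assumes the generated class is a `.java` file somewhere under `target/generated-sources`, and the module path in the example call is an assumption about where the source was unpacked.

```python
from pathlib import Path


def find_generated_sources(module_dir, class_name):
    """Return every <class_name>.java found under target/generated-sources."""
    generated_root = Path(module_dir) / "target" / "generated-sources"
    if not generated_root.is_dir():
        # Nothing has been generated yet (or the module path is wrong).
        return []
    return sorted(generated_root.rglob(class_name + ".java"))


# Assumed location of the catalyst module relative to the current directory.
for path in find_generated_sources("spark-2.1.0/sql/catalyst", "SqlBaseParser"):
    print(path)
```

An empty result means the generated sources are still missing, in which case marking a folder as a source root in Project Structure will not help yet.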

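Problem 3: Error:(52, 75) not found: value TCLIService

public abstract class ThriftCLIService extends AbstractService implements TCLIService.Iface, Runnable

Solution:

Open Project Structure and carry out the following steps. Pay attention to step 3: do not select the wrong directory level.

Once this succeeds, org.apache.hive.service.cli.thrift.TCLIService, which org.apache.hive.service.cli.thrift.ThriftCLIService implements, no longer shows an error.

At this point the Spark source no longer shows any errors.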

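Preparing for the Maven build and packaging

1. Check Maven

In Settings, check and set the Maven version. At the very beginning we edited pom.xml to change the Maven and JDK versions; the Maven version selected here must match it.

2. Check the Scala SDK

Change it in Project Structure.

3. Configure Maven

-Xmx2g -XX:MaxPermSize=512M -XX:ReservedCodeCacheSize=512m

Maven build and packaging

At the end of the build, an error appeared: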

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-antrun-plugin:1.8:run (default) on project spark-core_2.11: An Ant BuildException has occured: Execute failed: java.io.IOException: Cannot run program "bash" (in directory "E:\spark-source\spark-2.1.0\core"): CreateProcess error=2, 系统找不到指定的文件。

[ERROR] around Ant part ...<exec executable="bash">... @ 4:27 in E:\spark-source\spark-2.1.0\core\target\antrun\build-main.xml

[ERROR] -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <goals> -rf :spark-core_2.11

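Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

The problem is the part that reads "Execute failed: java.io.IOException: Cannot run program "bash" (in directory "E:\spark-source\spark-2.1.0\core"): CreateProcess error=2, 系统找不到指定的文件。" The Chinese message means "The system cannot find the file specified": the build calls bash, and Windows has no bash on its Path.

The fix is to install Git and add the bin directory under the Git installation to the system Path.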
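Whether the Path change took effect can be checked before rerunning the 40-minute build. The sketch below uses `shutil.which` from the Python standard library; `find_executable` and `path_with_extra_dir` are hypothetical helper names, and the Git directory in the example is an assumed install location that you should replace with your own.

```python
import os
import shutil


def find_executable(name, search_path=None):
    """Return the full path of `name` if it is on the search path, else None."""
    return shutil.which(name, path=search_path)


def path_with_extra_dir(extra_dir, current_path=None):
    """Build a Path value with `extra_dir` placed in front of the current one."""
    current = os.environ.get("PATH", "") if current_path is None else current_path
    return extra_dir + os.pathsep + current if current else extra_dir


# None here means the Maven antrun step would still fail with "Cannot run program".
print(find_executable("bash"))

# Assumed Git install location; adjust to wherever Git lives on your machine.
candidate = path_with_extra_dir(r"C:\Program Files\Git\bin")
print(find_executable("bash", search_path=candidate))
```

Remember that a terminal or IntelliJ window opened before the Path change keeps the old value, so restart it before building again.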

With that fixed, run the build again:

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Spark Project Parent POM 2.1.0 ..................... SUCCESS [ 11.060 s]

[INFO] Spark Project Tags ................................. SUCCESS [ 11.773 s]

[INFO] Spark Project Sketch ............................... SUCCESS [ 18.642 s]

[INFO] Spark Project Networking ........................... SUCCESS [ 21.922 s]

[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 15.266 s]

[INFO] Spark Project Unsafe ............................... SUCCESS [ 25.778 s]

[INFO] Spark Project Launcher ............................. SUCCESS [ 23.044 s]

[INFO] Spark Project Core ................................. SUCCESS [05:40 min]

[INFO] Spark Project ML Local Library ..................... SUCCESS [01:01 min]

[INFO] Spark Project GraphX ............................... SUCCESS [01:28 min]

[INFO] Spark Project Streaming ............................ SUCCESS [02:24 min]

[INFO] Spark Project Catalyst ............................. SUCCESS [04:26 min]

[INFO] Spark Project SQL .................................. SUCCESS [06:14 min]

[INFO] Spark Project ML Library ........................... SUCCESS [05:16 min]

[INFO] Spark Project Tools ................................ SUCCESS [ 17.478 s]

[INFO] Spark Project Hive ................................. SUCCESS [03:44 min]

[INFO] Spark Project REPL ................................. SUCCESS [ 48.452 s]

[INFO] Spark Project YARN Shuffle Service ................. SUCCESS [ 14.111 s]

[INFO] Spark Project YARN ................................. SUCCESS [01:35 min]

[INFO] Spark Project Assembly ............................. SUCCESS [ 6.867 s]

[INFO] Spark Project External Flume Sink .................. SUCCESS [ 40.589 s]

[INFO] Spark Project External Flume ....................... SUCCESS [01:02 min]

[INFO] Spark Project External Flume Assembly .............. SUCCESS [ 6.343 s]

[INFO] Spark Integration for Kafka 0.8 .................... SUCCESS [01:12 min]

[INFO] Spark Project Examples ............................. SUCCESS [01:40 min]

[INFO] Spark Project External Kafka Assembly .............. SUCCESS [ 6.682 s]

[INFO] Spark Integration for Kafka 0.10 ................... SUCCESS [01:01 min]

[INFO] Spark Integration for Kafka 0.10 Assembly .......... SUCCESS [ 6.573 s]

[INFO] Kafka 0.10 Source for Structured Streaming ......... SUCCESS [01:11 min]

[INFO] Spark Project Java 8 Tests 2.1.0 ................... SUCCESS [ 21.143 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 42:58 min

[INFO] Finished at: 2018-10-09T23:25:48+08:00

[INFO] ------------------------------------------------------------------------

The build succeeded!
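To see where the build time goes, the Reactor Summary can be parsed into (module, seconds) pairs. This is a minimal sketch under a few assumptions: it only recognises lines marked SUCCESS, it only handles the two duration formats that appear in the log above (seconds such as "11.773 s" and "mm:ss min"), and `SAMPLE` is a three-line excerpt of that log rather than the whole thing.

```python
import re

# Matches lines such as:
# [INFO] Spark Project Core ................................. SUCCESS [05:40 min]
MODULE_LINE = re.compile(r"\[INFO\] (.+?) \.+ SUCCESS \[\s*([\d:.]+) (s|min)\]")


def to_seconds(value, unit):
    """Convert '11.060' with unit 's' or '05:40' with unit 'min' into seconds."""
    if unit == "s":
        return float(value)
    minutes, seconds = value.split(":")
    return int(minutes) * 60 + int(seconds)


def module_times(log_text):
    """Return (module name, seconds) pairs in the order they appear in the log."""
    times = []
    for line in log_text.splitlines():
        match = MODULE_LINE.search(line)
        if match:
            name, value, unit = match.groups()
            times.append((name, to_seconds(value, unit)))
    return times


SAMPLE = """\
[INFO] Spark Project Tags ................................. SUCCESS [ 11.773 s]
[INFO] Spark Project Core ................................. SUCCESS [05:40 min]
[INFO] Spark Project SQL .................................. SUCCESS [06:14 min]
"""

# Print the slowest modules first.
slowest_first = sorted(module_times(SAMPLE), key=lambda item: item[1], reverse=True)
for name, seconds in slowest_first:
    print(f"{seconds:8.1f} s  {name}")
```

On this excerpt SQL (374 s) and Core (340 s) come out on top, which matches the full summary: SQL, Core and ML Library are the three slowest modules.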

The packaged jars are in spark-2.1.0\assembly\target\scala-2.11\jars.